Werner Friedl

State- and context-dependent robotic manipulation and grasping via uncertainty-aware imitation learning

Oct 31, 2024

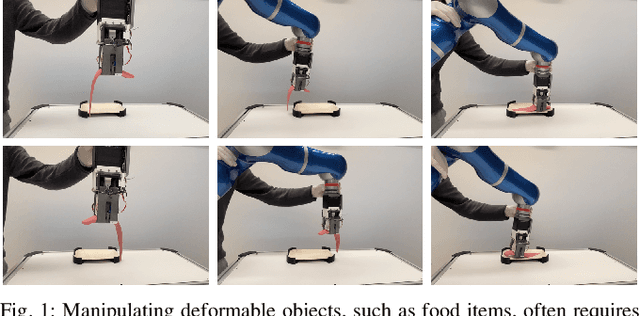

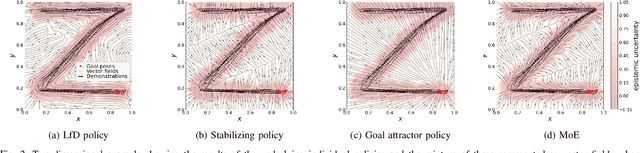

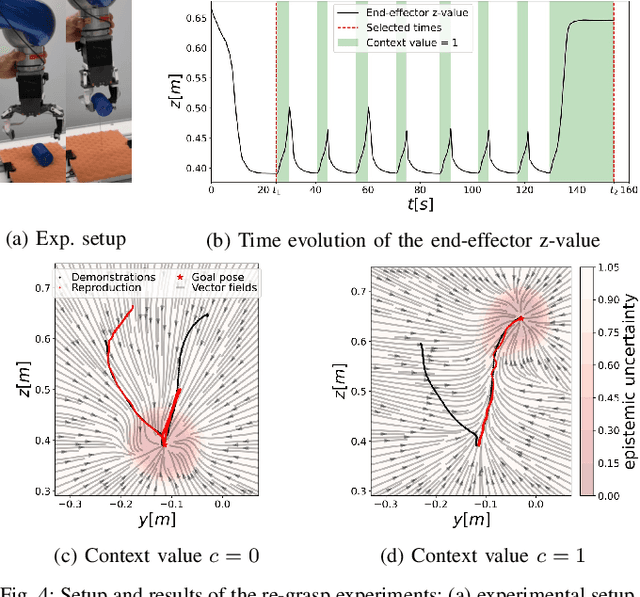

Abstract: Generating context-adaptive manipulation and grasping actions is a challenging problem in robotics. Classical planning and control algorithms tend to be inflexible with regard to parameterization by external variables such as object shapes. In contrast, Learning from Demonstration (LfD) approaches, due to their nature as function approximators, allow for introducing external variables to modulate policies in response to the environment. In this paper, we utilize this property by introducing an LfD approach to acquire context-dependent grasping and manipulation strategies. We treat the problem as a kernel-based function approximation, where the kernel inputs include generic context variables describing task-dependent parameters such as the object shape. We build on existing work on policy fusion with uncertainty quantification to propose a state-dependent approach that automatically returns to demonstrations, avoiding unpredictable behavior while smoothly adapting to context changes. The approach is evaluated against the LASA handwriting dataset and on a real 7-DoF robot in two scenarios: adaptation to slippage while grasping and manipulating a deformable food item.
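As a rough illustration of the idea in this abstract, the sketch below uses plain kernel-weighted regression over a joint (state, context) input and blends the prediction with a pull toward the nearest demonstrated state when kernel support is low. This is not the paper's formulation: the function names (`rbf`, `predict`, `fused_action`), the max-kernel-weight confidence measure, and the linear blending rule are all assumptions made for the example.

```python
import math


def rbf(a, b, length_scale=0.5):
    """Squared-exponential kernel between two equal-length vectors."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2.0 * length_scale ** 2))


def predict(state, context, demos, length_scale=0.5):
    """Kernel-weighted action and a crude confidence in [0, 1].

    demos: list of (state, context, action) tuples of float lists.
    The confidence measure (largest kernel weight) is an assumption.
    """
    query = list(state) + list(context)
    weights = [rbf(query, list(s) + list(c), length_scale) for s, c, _ in demos]
    total = sum(weights)
    confidence = max(weights)  # 1.0 on a demonstration, near 0 far away
    dim = len(demos[0][2])
    if total < 1e-12:
        # No kernel support at all: no learned action, zero confidence.
        return [0.0] * dim, 0.0
    action = [
        sum(w * a[i] for w, (_, _, a) in zip(weights, demos)) / total
        for i in range(dim)
    ]
    return action, confidence


def fused_action(state, context, demos, gain=1.0, length_scale=0.5):
    """Blend the learned action with a pull toward the nearest demonstration."""
    action, confidence = predict(state, context, demos, length_scale)
    query = list(state) + list(context)
    nearest_state, _, _ = min(
        demos,
        key=lambda d: sum(
            (x - y) ** 2 for x, y in zip(query, list(d[0]) + list(d[1]))
        ),
    )
    # Attractor term (hypothetical): move the state back toward the data.
    pull = [gain * (n - s) for n, s in zip(nearest_state, state)]
    return [confidence * a + (1.0 - confidence) * p for a, p in zip(action, pull)]


# Toy demonstrations: 1-D state, 1-D context, 1-D action.
demos = [
    ([0.0], [0.0], [1.0]),
    ([1.0], [0.0], [1.0]),
    ([0.0], [1.0], [-1.0]),
]
```

Near the demonstrations the learned action dominates, and changing the context value shifts it smoothly. Far from any demonstration the confidence drops toward zero and the attractor term takes over, which is the "return to demonstrations" behavior in its simplest form.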

Co-Designing Tools and Control Policies for Robust Manipulation

Sep 17, 2024

Abstract: Inherent robustness in manipulation is prevalent in biological systems and critical for robotic manipulation systems due to real-world uncertainties and disturbances. This robustness relies not only on robust control policies but also on the design characteristics of the end-effectors. This paper introduces a bi-level optimization approach to co-designing tools and control policies to achieve robust manipulation. The approach employs reinforcement learning for lower-level control policy learning and multi-task Bayesian optimization for upper-level design optimization. Diverging from prior approaches, we incorporate caging-based robustness metrics into both levels, ensuring manipulation robustness against disturbances and environmental variations. Our method is evaluated in four non-prehensile manipulation environments, demonstrating improvements in task success rate under disturbances and environment changes. A real-world experiment is also conducted to validate the framework's practical effectiveness.
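The bi-level structure described here can be sketched as a nested loop: an outer search over tool designs, each scored by the best policy an inner learner finds for it. The toy below only shows that nesting. Random search stands in for both reinforcement learning and multi-task Bayesian optimization, a worst-case score over sampled disturbances stands in for the caging-based metric, and `robustness_score`, `learn_policy`, and `co_design` are hypothetical names with a made-up scalar objective.

```python
import random


def robustness_score(design, policy_gain, disturbance):
    """Hypothetical toy objective; higher is better (0 is ideal)."""
    error = (design * policy_gain - 1.0) + disturbance * (1.0 - design)
    return -error ** 2


def learn_policy(design, disturbances, rng, iters=200):
    """Lower level: random search over a scalar gain (stand-in for RL)."""
    best_gain, best_value = 0.0, float("-inf")
    for _ in range(iters):
        gain = rng.uniform(0.0, 5.0)
        # Score a policy by its worst case over the sampled disturbances.
        value = min(robustness_score(design, gain, d) for d in disturbances)
        if value > best_value:
            best_gain, best_value = gain, value
    return best_gain, best_value


def co_design(candidates, disturbances, seed=0):
    """Upper level: exhaustive search over candidate designs
    (stand-in for Bayesian optimization)."""
    rng = random.Random(seed)
    results = []
    for design in candidates:
        gain, value = learn_policy(design, disturbances, rng)
        results.append((value, design, gain))
    return max(results)  # (best value, best design, its policy gain)


best_value, best_design, best_gain = co_design(
    candidates=[0.25, 0.5, 1.0],
    disturbances=[-0.2, 0.0, 0.2],
)
```

In this toy, a design of 1.0 cancels the disturbance term entirely, so the outer loop should prefer it regardless of which gain the inner loop finds for the other designs. The point is only that the design is judged by the robustness of the best policy it admits, not by any design-only criterion.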

Unknown Object Segmentation from Stereo Images

Mar 11, 2021

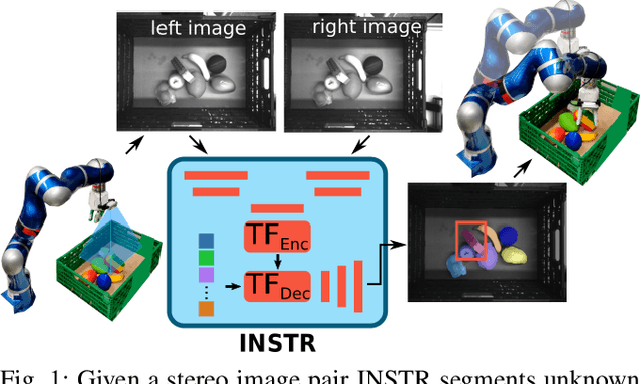

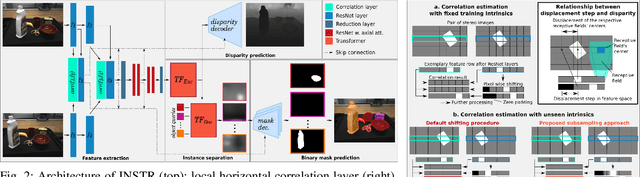

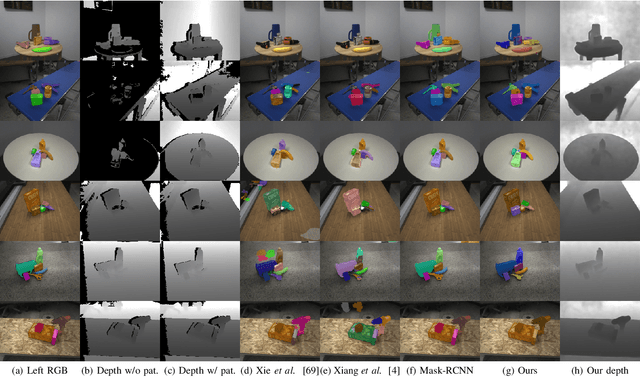

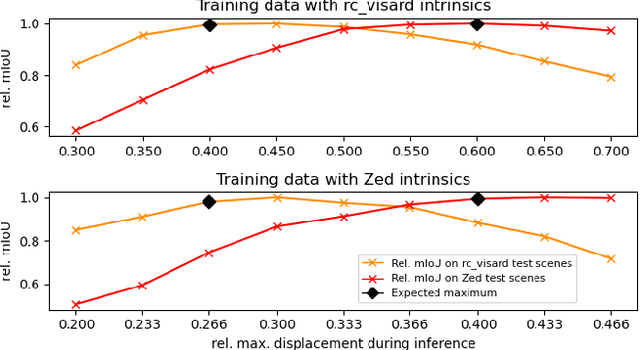

Abstract: Although instance-aware perception is a key prerequisite for many autonomous robotic applications, most methods only partially solve the problem by focusing solely on known object categories. However, for robots interacting in dynamic and cluttered environments, this is not realistic and severely limits the range of potential applications. Therefore, we propose a novel object instance segmentation approach that does not require any semantic or geometric information of the objects beforehand. In contrast to existing works, we do not explicitly use depth data as input, but rely on the insight that slight viewpoint changes, which for example are provided by stereo image pairs, are often sufficient to determine object boundaries and thus to segment objects. Focusing on the versatility of stereo sensors, we employ a transformer-based architecture that maps directly from the pair of input images to the object instances. This has the major advantage that instead of a noisy and potentially incomplete depth map as input, on which the segmentation is computed, we use the original image pair to infer the object instances and a dense depth map. In experiments in several different application domains, we show that our Instance Stereo Transformer (INSTR) algorithm outperforms current state-of-the-art methods that are based on depth maps. Training code and pretrained models will be made available.

CLASH WRIST -- A hardware to increase the capability of CLASH fruit gripper to use environment constraints exploration

Apr 02, 2020

Abstract: Humans use environmental constraints (EC) in manipulation to compensate for uncertainties in their world model. The same principle was recently applied to robotics, so that soft underactuated hands improve their grasping capability through environmental constraints exploitation (ECE) [1]. Because of the orientation of the robotic hand, for example in the EC wall grasp, the combined length of the robot wrist and the hand becomes important when objects are grasped out of a box [2]. Most modern cobots have quite long wrists, so we have constructed a two-degree-of-freedom wrist for the CLASH [3] to solve this problem (Fig. 1).
