Ashok M. Sundaram

State- and context-dependent robotic manipulation and grasping via uncertainty-aware imitation learning

Oct 31, 2024

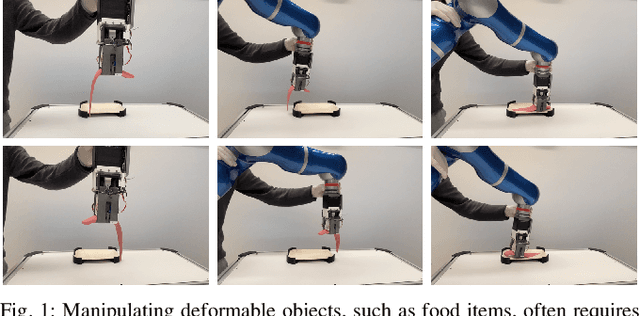

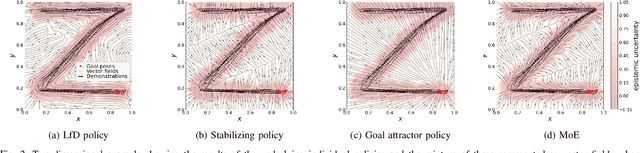

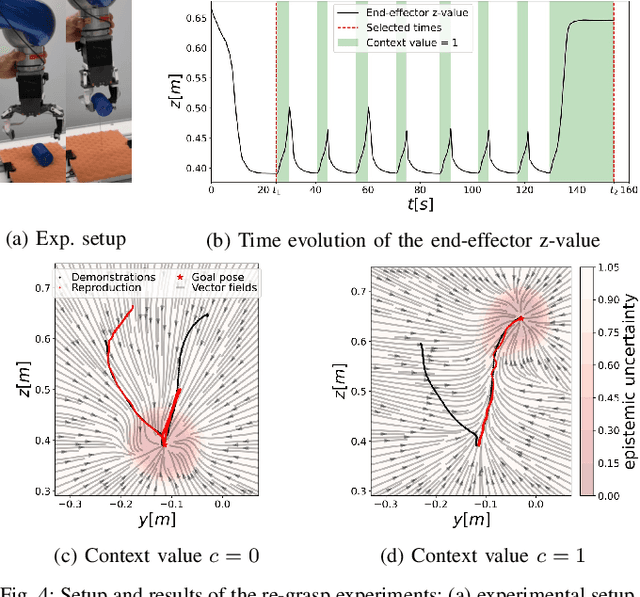

Abstract: Generating context-adaptive manipulation and grasping actions is a challenging problem in robotics. Classical planning and control algorithms tend to be inflexible with regard to parameterization by external variables such as object shapes. In contrast, Learning from Demonstration (LfD) approaches, due to their nature as function approximators, allow for introducing external variables to modulate policies in response to the environment. In this paper, we utilize this property by introducing an LfD approach to acquire context-dependent grasping and manipulation strategies. We treat the problem as a kernel-based function approximation, where the kernel inputs include generic context variables describing task-dependent parameters such as the object shape. We build on existing work on policy fusion with uncertainty quantification to propose a state-dependent approach that automatically returns to demonstrations, avoiding unpredictable behavior while smoothly adapting to context changes. The approach is evaluated against the LASA handwriting dataset and on a real 7-DoF robot in two scenarios: adaptation to slippage while grasping and manipulating a deformable food item.
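As a rough illustration of the kernel-based idea in this abstract, the sketch below fits a small Gaussian process whose inputs concatenate a state variable with a context variable, and reads off the predictive variance as an uncertainty signal. It is not the paper's method: the RBF kernel, the toy demonstration data, the length scale, and all function names are assumptions made for illustration. In the paper's setting, a high variance would presumably trigger the return-to-demonstration behavior; here it is only computed.

```python
import numpy as np

def rbf_kernel(A, B, length_scale=0.5):
    """Squared-exponential kernel between rows of A and rows of B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length_scale**2)

def gp_fit(X, y, noise=1e-4):
    """Cholesky factor of the kernel matrix and the weights for the mean."""
    K = rbf_kernel(X, X) + noise * np.eye(len(X))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return L, alpha

def gp_predict(X, L, alpha, Xq):
    """Predictive mean and variance at query points Xq (prior variance is 1)."""
    Ks = rbf_kernel(Xq, X)
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = 1.0 - (v**2).sum(axis=0)
    return mean, np.maximum(var, 0.0)

# Hypothetical demonstrations: input = (state s, context c), output = action.
s = np.linspace(0.0, 1.0, 15)
X = np.array([[si, c] for c in (0.0, 1.0) for si in s])
y = np.array([(c + 1.0) * (1.0 - si) for c in (0.0, 1.0) for si in s])

L, alpha = gp_fit(X, y)

# Query between the two demonstrated contexts, and far outside the demonstrated states.
mean_near, var_near = gp_predict(X, L, alpha, np.array([[0.5, 0.5]]))
mean_far, var_far = gp_predict(X, L, alpha, np.array([[3.0, 0.5]]))
print("variance near demonstrations:", var_near[0])
print("variance far from demonstrations:", var_far[0])
```

The variance stays low for an unseen context that lies between demonstrated ones and approaches the prior variance far from the data, which is the kind of signal an uncertainty-aware policy can use to decide when to steer back toward the demonstrations.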

Bayesian optimization for robust robotic grasping using a sensorized compliant hand

Oct 23, 2024

Abstract: One of the first tasks we learn as children is to grasp objects based on our tactile perception. Incorporating such skill in robots will enable multiple applications, such as increasing flexibility in industrial processes or providing assistance to people with physical disabilities. However, the difficulty lies in adapting the grasping strategies to a large variety of tasks and objects, which can often be unknown. The brute-force solution is to learn new grasps by trial and error, which is inefficient and ineffective. In contrast, Bayesian optimization applies active learning by adding information to the approximation of an optimal grasp. This paper proposes the use of Bayesian optimization techniques to safely perform robotic grasping. We analyze different grasp metrics to provide realistic grasp optimization in a real system including tactile sensors. An experimental evaluation in the robotic system shows the usefulness of the method for performing unknown object grasping even in the presence of noise and uncertainty inherent to a real-world environment.
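The general Bayesian optimization loop referred to in this abstract can be sketched as follows. This is a generic toy version, not the paper's implementation: the one-dimensional grasp parameter, the synthetic `grasp_quality` function, the RBF surrogate, and the expected-improvement acquisition are all assumptions chosen to keep the example short.

```python
import math
import numpy as np

def rbf(A, B, length_scale=0.2):
    """Squared-exponential kernel for 1-D inputs."""
    return np.exp(-0.5 * (A[:, None] - B[None, :]) ** 2 / length_scale**2)

def posterior(X, y, Xq, noise=1e-3):
    """Gaussian-process posterior mean and standard deviation at Xq."""
    K = rbf(X, X) + noise * np.eye(len(X))
    Ks = rbf(Xq, X)
    mu = Ks @ np.linalg.solve(K, y)
    var = 1.0 - np.einsum("ij,ji->i", Ks, np.linalg.solve(K, Ks.T))
    return mu, np.sqrt(np.maximum(var, 1e-12))

def expected_improvement(mu, sigma, best):
    """Expected improvement over the best observed value (maximization)."""
    z = (mu - best) / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + sigma * pdf

def grasp_quality(x, rng):
    """Hypothetical noisy grasp metric with its optimum at x = 0.6."""
    return math.exp(-((x - 0.6) ** 2) / 0.02) + 0.01 * rng.normal()

rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 201)   # candidate grasp parameters
X = np.array([0.1, 0.9])            # two initial trial grasps
y = np.array([grasp_quality(x, rng) for x in X])

for _ in range(15):
    mu, sigma = posterior(X, y, grid)
    x_next = grid[np.argmax(expected_improvement(mu, sigma, y.max()))]
    X = np.append(X, x_next)
    y = np.append(y, grasp_quality(x_next, rng))

best_x = X[np.argmax(y)]
print("best grasp parameter found:", best_x)
```

Each iteration spends one trial where the surrogate expects the most improvement, which is what makes the approach more sample-efficient than blind trial and error.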

Unknown Object Grasping for Assistive Robotics

Apr 23, 2024

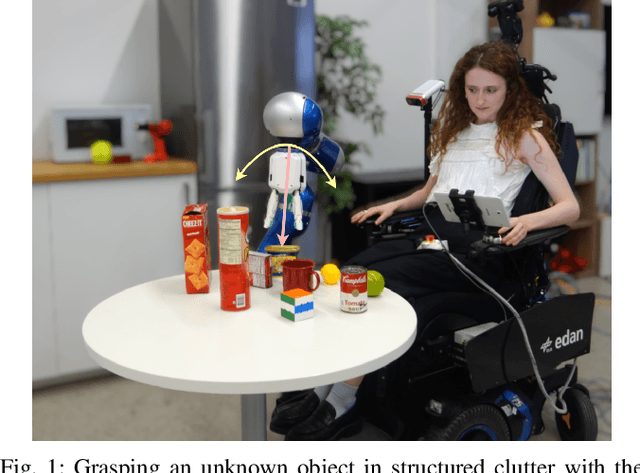

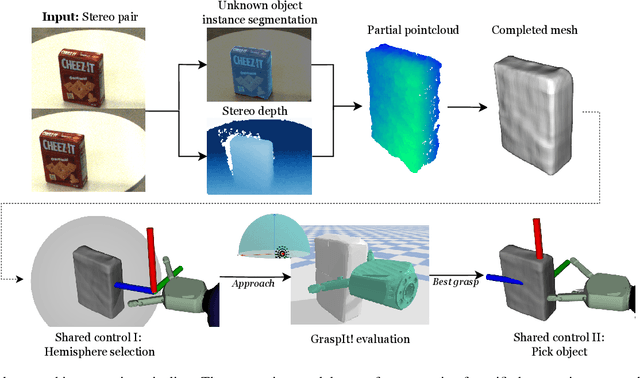

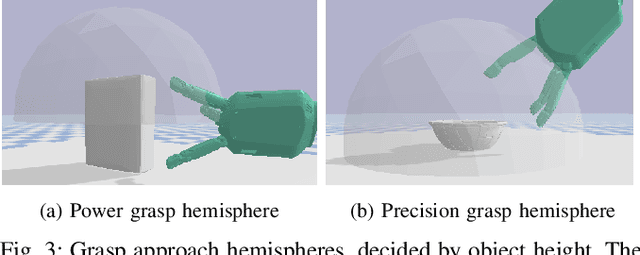

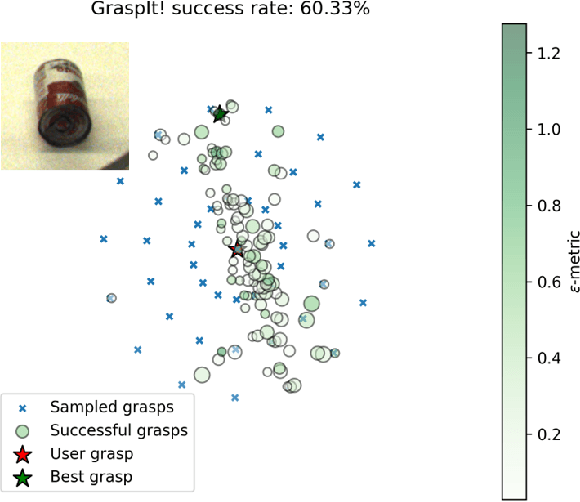

Abstract: We propose a novel pipeline for unknown object grasping in shared robotic autonomy scenarios. State-of-the-art methods for fully autonomous scenarios are typically learning-based approaches optimised for a specific end-effector, that generate grasp poses directly from sensor input. In the domain of assistive robotics, we seek instead to utilise the user's cognitive abilities for enhanced satisfaction, grasping performance, and alignment with their high level task-specific goals. Given a pair of stereo images, we perform unknown object instance segmentation and generate a 3D reconstruction of the object of interest. In shared control, the user then guides the robot end-effector across a virtual hemisphere centered around the object to their desired approach direction. A physics-based grasp planner finds the most stable local grasp on the reconstruction, and finally the user is guided by shared control to this grasp. In experiments on the DLR EDAN platform, we report a grasp success rate of 87% for 10 unknown objects, and demonstrate the method's capability to grasp objects in structured clutter and from shelves.
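The virtual hemisphere mentioned in this abstract can be pictured with a small geometric sketch. The parameterization by azimuth and elevation, the function name `hemisphere_pose`, and the numeric values are assumptions for illustration; the abstract does not say how the user input is mapped onto the hemisphere.

```python
import numpy as np

def hemisphere_pose(center, radius, azimuth, elevation):
    """End-effector position on the upper hemisphere around `center`,
    plus a unit approach vector pointing from that position to the center."""
    elevation = np.clip(elevation, 0.0, np.pi / 2)  # stay on the upper half
    direction = np.array([
        np.cos(elevation) * np.cos(azimuth),
        np.cos(elevation) * np.sin(azimuth),
        np.sin(elevation),
    ])
    position = np.asarray(center, dtype=float) + radius * direction
    approach = -direction
    return position, approach

# Hypothetical object center (meters) and a user-selected viewing direction.
center = np.array([0.5, 0.0, 0.2])
position, approach = hemisphere_pose(center, radius=0.3,
                                     azimuth=np.pi / 4, elevation=np.pi / 3)
print("end-effector position:", position)
print("approach direction:", approach)
```

Constraining the end-effector to such a surface reduces the user's input to two degrees of freedom while the approach vector always faces the object.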
