Freek Stulp

Ecole Nationale Superieure de Techniques Avancees

Model Reconciliation through Explainability and Collaborative Recovery in Assistive Robotics

Jan 10, 2026

Abstract: Whenever humans and robots work together, it is essential that unexpected robot behavior can be explained to the user. Especially in applications such as shared control, the user and the robot must share the same model of the objects in the world and of the actions that can be performed on these objects. In this paper, we achieve this with a so-called model reconciliation framework. We leverage a Large Language Model to predict and explain the difference between the robot's and the human's mental models, without the need for a formal mental model of the user. Furthermore, our framework aims to resolve the model divergence after the explanation by allowing the human to correct the robot. We provide an implementation in an assistive robotics domain, where we conduct a set of experiments with a real wheelchair-based mobile manipulator and its digital twin.

A Unified Framework for Probabilistic Dynamic-, Trajectory- and Vision-based Virtual Fixtures

Jun 11, 2025

Abstract: Probabilistic Virtual Fixtures (VFs) enable the adaptive selection of the most suitable haptic feedback for each phase of a task, based on learned or perceived uncertainty. While keeping the human in the loop remains essential, for instance, to ensure high precision, partial automation of certain task phases is critical for productivity. We present a unified framework for probabilistic VFs that seamlessly switches between manual fixtures, semi-automated fixtures (with the human handling precise tasks), and full autonomy. We introduce a novel probabilistic Dynamical System-based VF for coarse guidance, enabling the robot to autonomously complete certain task phases while keeping the human operator in the loop. For tasks requiring precise guidance, we extend probabilistic position-based trajectory fixtures with automation allowing for seamless human interaction as well as geometry-awareness and optimal impedance gains. For manual tasks requiring very precise guidance, we also extend visual servoing fixtures with the same geometry-awareness and impedance behaviour. We validate our approach experimentally on different robots, showcasing multiple operation modes and the ease of programming fixtures.

State- and context-dependent robotic manipulation and grasping via uncertainty-aware imitation learning

Oct 31, 2024

Abstract: Generating context-adaptive manipulation and grasping actions is a challenging problem in robotics. Classical planning and control algorithms tend to be inflexible with regard to parameterization by external variables such as object shapes. In contrast, Learning from Demonstration (LfD) approaches, due to their nature as function approximators, allow for introducing external variables to modulate policies in response to the environment. In this paper, we utilize this property by introducing an LfD approach to acquire context-dependent grasping and manipulation strategies. We treat the problem as a kernel-based function approximation, where the kernel inputs include generic context variables describing task-dependent parameters such as the object shape. We build on existing work on policy fusion with uncertainty quantification to propose a state-dependent approach that automatically returns to demonstrations, avoiding unpredictable behavior while smoothly adapting to context changes. The approach is evaluated on the LASA handwriting dataset and on a real 7-DoF robot in two scenarios: adaptation to slippage while grasping and manipulating a deformable food item.

Interactive incremental learning of generalizable skills with local trajectory modulation

Sep 09, 2024

Abstract: The problem of generalization in learning from demonstration (LfD) has received considerable attention over the years, particularly within the context of movement primitives, where a number of approaches have emerged. Recently, two important approaches have gained recognition. While one leverages via-points to adapt skills locally by modulating demonstrated trajectories, another relies on so-called task-parameterized models that encode movements with respect to different coordinate systems, using a product of probabilities for generalization. While the former are well-suited to precise, local modulations, the latter aim at generalizing over large regions of the workspace and often involve multiple objects. Addressing the quality of generalization by leveraging both approaches simultaneously has received little attention. In this work, we propose an interactive imitation learning framework that simultaneously leverages local and global modulations of trajectory distributions. Building on the kernelized movement primitives (KMP) framework, we introduce novel mechanisms for skill modulation from direct human corrective feedback. Our approach particularly exploits the concept of via-points to incrementally and interactively 1) improve the model accuracy locally, 2) add new objects to the task during execution and 3) extend the skill into regions where demonstrations were not provided. We evaluate our method on a bearing ring-loading task using a torque-controlled, 7-DoF, DLR SARA robot.
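The "product of probabilities" mentioned in the abstract can be illustrated with a minimal sketch, assuming one-dimensional Gaussian predictions, one per coordinate system. The function name `product_of_gaussians` and the example numbers are hypothetical and are not taken from the paper; the multivariate, frame-transformed case used in task-parameterized models is not shown here.

```python
def product_of_gaussians(means, variances):
    """Fuse 1-D Gaussians N(mean_j, variance_j) by multiplying their densities.

    The (renormalized) product of Gaussians is again Gaussian: precisions add,
    and the fused mean is the precision-weighted average of the input means.
    """
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    fused_mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    fused_variance = 1.0 / total_precision
    return fused_mean, fused_variance


# Illustrative values: two frames predict the same quantity with different confidence.
# The more certain frame (smaller variance) dominates the fused estimate.
fused_mean, fused_variance = product_of_gaussians([0.0, 2.0], [1.0, 0.25])
```

The fused estimate is pulled towards whichever frame is locally more certain, which is the intuition behind letting different coordinate systems dominate in different parts of the workspace.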

Unknown Object Grasping for Assistive Robotics

Apr 23, 2024

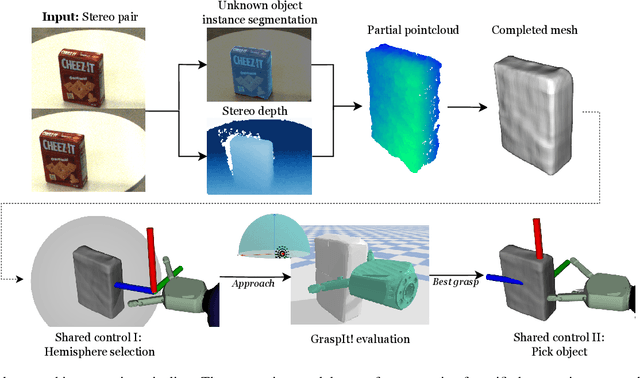

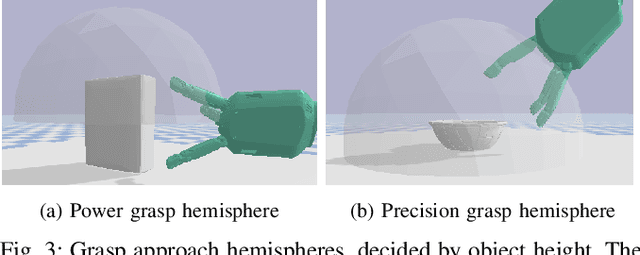

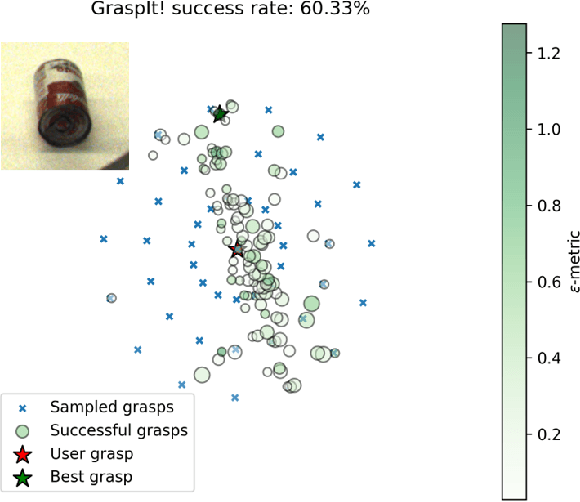

Abstract: We propose a novel pipeline for unknown object grasping in shared robotic autonomy scenarios. State-of-the-art methods for fully autonomous scenarios are typically learning-based approaches optimised for a specific end-effector, that generate grasp poses directly from sensor input. In the domain of assistive robotics, we seek instead to utilise the user's cognitive abilities for enhanced satisfaction, grasping performance, and alignment with their high-level task-specific goals. Given a pair of stereo images, we perform unknown object instance segmentation and generate a 3D reconstruction of the object of interest. In shared control, the user then guides the robot end-effector across a virtual hemisphere centered around the object to their desired approach direction. A physics-based grasp planner finds the most stable local grasp on the reconstruction, and finally the user is guided by shared control to this grasp. In experiments on the DLR EDAN platform, we report a grasp success rate of 87% for 10 unknown objects, and demonstrate the method's capability to grasp objects in structured clutter and from shelves.

AI-enabled Cyber-Physical In-Orbit Factory -- AI approaches based on digital twin technology for robotic small satellite production

Feb 05, 2024

Abstract: With the ever increasing number of active satellites in space, the rising demand for larger formations of small satellites and the commercialization of the space industry (so-called New Space), the realization of manufacturing processes in orbit comes closer to reality. Reducing launch costs and risks, allowing for faster on-demand deployment of individually configured satellites, as well as the prospect of on-orbit servicing for satellites make the idea of realizing an in-orbit factory promising. In this paper, we present a novel approach to an in-orbit factory of small satellites covering a digital process twin, AI-based fault detection, and teleoperated robot-control, which are being researched as part of the "AI-enabled Cyber-Physical In-Orbit Factory" project. In addition to the integration of modern automation and Industry 4.0 production approaches, the question of how artificial intelligence (AI) and learning approaches can be used to make the production process more robust, fault-tolerant and autonomous is addressed. This lays the foundation for a later realisation of satellite production in space in the form of an in-orbit factory. A central aspect is the development of a robotic AIT (Assembly, Integration and Testing) system where a small satellite could be assembled by a manipulator robot from modular subsystems. Approaches developed to improve this production process with AI include employing neural networks for optical and electrical fault detection of components. Force-sensitive measuring and motion training help to deal with uncertainties and tolerances during assembly. An AI-guided teleoperated control of the robot arm allows for human intervention while a Digital Process Twin represents process data and provides supervision during the whole production process. Approaches and results towards automated satellite production are presented in detail.

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Oct 17, 2023

Abstract: Large, high-capacity models trained on diverse datasets have shown remarkable successes in efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train a generalist X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms. More details can be found on the project website: https://robotics-transformer-x.github.io

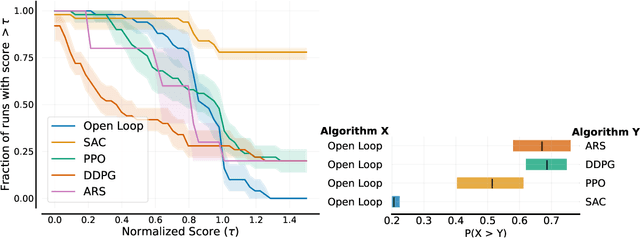

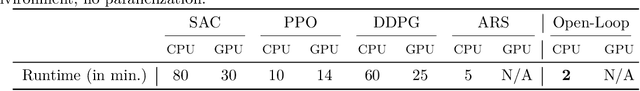

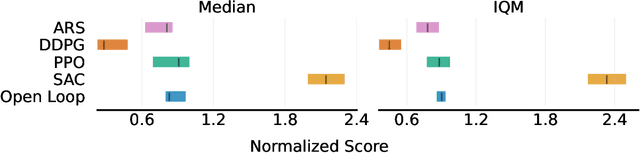

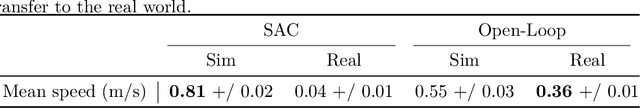

A Simple Open-Loop Baseline for Reinforcement Learning Locomotion Tasks

Oct 09, 2023

Abstract: In search of the simplest baseline capable of competing with Deep Reinforcement Learning on locomotion tasks, we propose a biologically inspired model-free open-loop strategy. Drawing upon prior knowledge and harnessing the elegance of simple oscillators to generate periodic joint motions, it achieves respectable performance in five different locomotion environments, with a number of tunable parameters that is a tiny fraction of the thousands typically required by RL algorithms. Unlike RL methods, which are prone to performance degradation when exposed to sensor noise or failure, our open-loop oscillators exhibit remarkable robustness due to their lack of reliance on sensors. Furthermore, we showcase a successful transfer from simulation to reality using an elastic quadruped, all without the need for randomization or reward engineering.
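As a rough illustration of the open-loop idea in the abstract, the sketch below generates periodic joint targets from one sinusoidal oscillator per leg, using only the current time and no sensor input. The function name `oscillator_targets`, the parameter names, and the trot-like phase offsets are assumptions made for this example; the paper's actual oscillator formulation and parameter values are not given in the abstract.

```python
import math


def oscillator_targets(t, amplitude=0.3, frequency=1.5,
                       phase_offsets=(0.0, math.pi, math.pi, 0.0), offset=0.0):
    """Return one desired joint position per leg at time t (in seconds).

    Each leg follows offset + amplitude * sin(2*pi*frequency*t + phase).
    The controller is open-loop: the output depends on time only.
    """
    omega = 2.0 * math.pi * frequency
    return [offset + amplitude * math.sin(omega * t + phi) for phi in phase_offsets]


# Hypothetical usage: sample a two-second trajectory at 100 Hz.
trajectory = [oscillator_targets(step * 0.01) for step in range(200)]
```

With these illustrative phase offsets, diagonal legs move in phase and the other pair moves in antiphase. The whole gait is described by a handful of scalars (amplitude, frequency, offset, phases), which is what makes the parameter count so small compared with a neural network policy.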

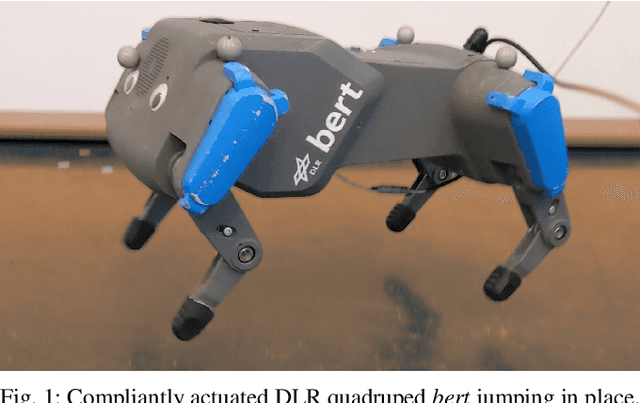

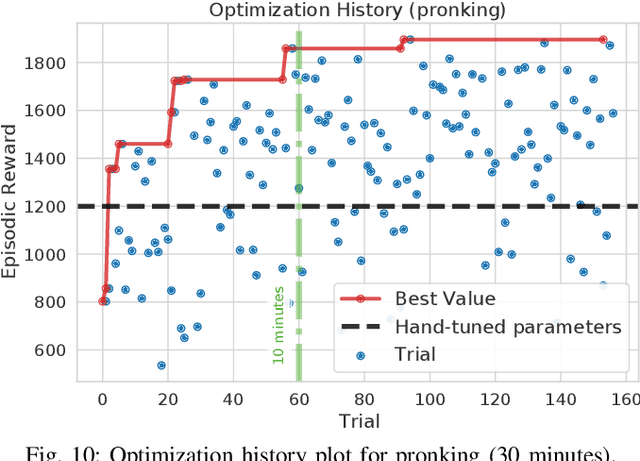

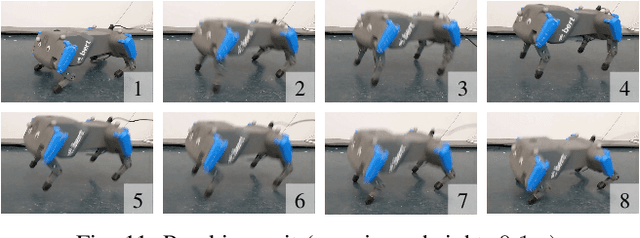

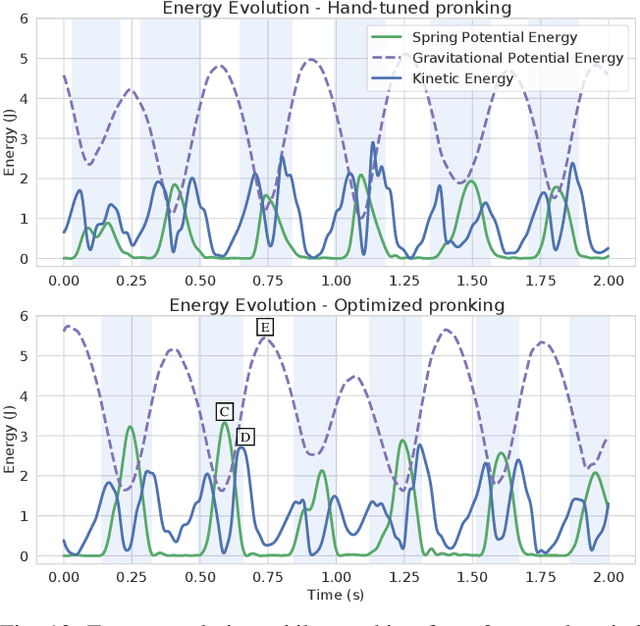

Learning to Exploit Elastic Actuators for Quadruped Locomotion

Sep 15, 2022

Abstract: Spring-based actuators in legged locomotion provide energy-efficiency and improved performance, but increase the difficulty of controller design. Whereas previous works have focused on extensive modeling and simulation to find optimal controllers for such systems, we propose to learn model-free controllers directly on the real robot. In our approach, gaits are first synthesized by central pattern generators (CPGs), whose parameters are optimized to quickly obtain an open-loop controller that achieves efficient locomotion. Then, to make that controller more robust and further improve the performance, we use reinforcement learning to close the loop, to learn corrective actions on top of the CPGs. We evaluate the proposed approach on DLR's elastic quadruped bert. Our results in learning trotting and pronking gaits show that exploitation of the spring actuator dynamics emerges naturally from optimizing for dynamic motions, yielding high-performing locomotion despite being model-free. The whole process takes no more than 1.5 hours on the real robot and results in natural-looking gaits.

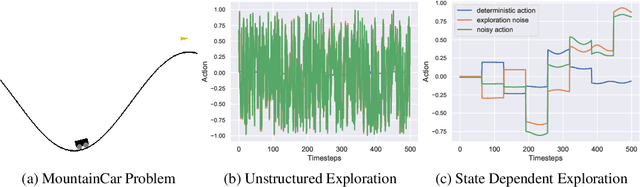

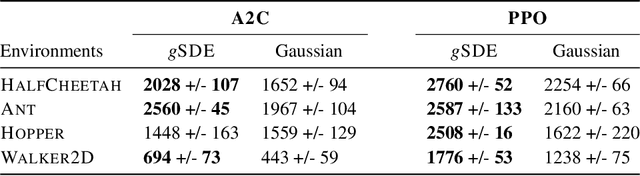

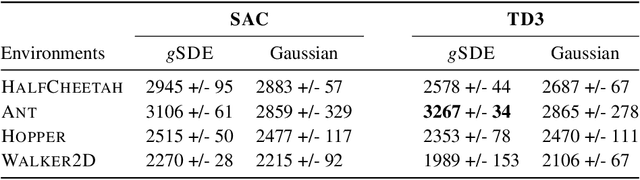

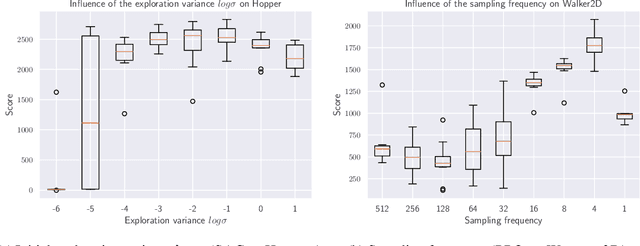

Generalized State-Dependent Exploration for Deep Reinforcement Learning in Robotics

May 12, 2020

Abstract: Reinforcement learning (RL) enables robots to learn skills from interactions with the real world. In practice, the unstructured step-based exploration used in Deep RL -- often very successful in simulation -- leads to jerky motion patterns on real robots. Consequences of the resulting shaky behavior are poor exploration, or even damage to the robot. We address these issues by adapting state-dependent exploration (SDE) to current Deep RL algorithms. To enable this adaptation, we propose three extensions to the original SDE, which lead to a new exploration method, generalized state-dependent exploration (gSDE). We evaluate gSDE both in simulation, on PyBullet continuous control tasks, and directly on a tendon-driven elastic robot. gSDE yields competitive results in simulation but outperforms the unstructured exploration on the real robot. The code is available at https://github.com/DLR-RM/stable-baselines3/tree/sde.
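To make the contrast with step-based exploration concrete, here is a minimal sketch of state-dependent exploration noise, assuming the noise is a linear function of a feature vector whose weights are drawn from a zero-mean Gaussian and only redrawn every few steps. The class name `StateDependentNoise` and its parameters are hypothetical; this is not the code from the linked repository, and the three extensions mentioned in the abstract are not reproduced here.

```python
import random


class StateDependentNoise:
    """Exploration noise that is a deterministic function of the features
    between resampling events (illustrative sketch only)."""

    def __init__(self, n_features, n_actions, sigma=0.1, resample_every=50, seed=0):
        self.n_features = n_features
        self.n_actions = n_actions
        self.sigma = sigma
        self.resample_every = resample_every
        self.rng = random.Random(seed)
        self.steps = 0
        self._resample()

    def _resample(self):
        # One weight row per action dimension, drawn from N(0, sigma^2).
        self.weights = [[self.rng.gauss(0.0, self.sigma) for _ in range(self.n_features)]
                        for _ in range(self.n_actions)]

    def __call__(self, features):
        # Redraw the weights only every `resample_every` steps.
        if self.steps > 0 and self.steps % self.resample_every == 0:
            self._resample()
        self.steps += 1
        # Noise = weights @ features: the same features give the same noise
        # until the next resampling, so nearby states get similar perturbations.
        return [sum(w * f for w, f in zip(row, features)) for row in self.weights]


# Hypothetical usage: perturb a two-dimensional action for a three-dimensional state.
noise = StateDependentNoise(n_features=3, n_actions=2, resample_every=3)
perturbation = noise([1.0, 0.5, -1.0])
```

Because the perturbation changes smoothly with the state instead of being drawn independently at every step, consecutive actions stay correlated, which is the property the abstract associates with less jerky motion on real hardware.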
