Alin Albu-Schäffer

Discovering Optimal Natural Gaits of Dissipative Systems via Virtual Energy Injection

Nov 19, 2025

Abstract:Legged robots offer several advantages when navigating unstructured environments, but they often fall short of the efficiency achieved by wheeled robots. One promising strategy to improve their energy economy is to leverage their natural (unactuated) dynamics using elastic elements. This work explores that concept by designing energy-optimal control inputs through a unified, multi-stage framework. It starts with a novel energy injection technique to identify passive motion patterns by harnessing the system's natural dynamics. This enables the discovery of passive solutions even in systems with energy dissipation caused by factors such as friction or plastic collisions. Building on these passive solutions, we then employ a continuation approach to derive energy-optimal control inputs for the fully actuated, dissipative robotic system. The method is tested on simulated models to demonstrate its applicability in both single- and multi-legged robotic systems. This analysis provides valuable insights into the design and operation of elastic legged robots, offering pathways to improve their efficiency and adaptability by exploiting the natural system dynamics.

A Unified Framework for Probabilistic Dynamic-, Trajectory- and Vision-based Virtual Fixtures

Jun 11, 2025

Abstract:Probabilistic Virtual Fixtures (VFs) enable the adaptive selection of the most suitable haptic feedback for each phase of a task, based on learned or perceived uncertainty. While keeping the human in the loop remains essential, for instance, to ensure high precision, partial automation of certain task phases is critical for productivity. We present a unified framework for probabilistic VFs that seamlessly switches between manual fixtures, semi-automated fixtures (with the human handling precise tasks), and full autonomy. We introduce a novel probabilistic Dynamical System-based VF for coarse guidance, enabling the robot to autonomously complete certain task phases while keeping the human operator in the loop. For tasks requiring precise guidance, we extend probabilistic position-based trajectory fixtures with automation allowing for seamless human interaction as well as geometry-awareness and optimal impedance gains. For manual tasks requiring very precise guidance, we also extend visual servoing fixtures with the same geometry-awareness and impedance behaviour. We validate our approach experimentally on different robots, showcasing multiple operation modes and the ease of programming fixtures.

When do Lyapunov Subcenter Manifolds become Eigenmanifolds?

May 19, 2025

Abstract:Multi-body mechanical systems have rich internal dynamics, which can be exploited to formulate efficient control targets. For periodic regulation tasks in robotics applications, this motivated the extension of the theory on nonlinear normal modes to Riemannian manifolds, and led to the definition of Eigenmanifolds. This definition is geometric, which is advantageous for generality within robotics but also obscures the connection of Eigenmanifolds to a large body of results from the literature on nonlinear dynamics. We bridge this gap, showing that Eigenmanifolds are instances of Lyapunov subcenter manifolds (LSMs), and that their stronger geometric properties with respect to LSMs follow from a time-symmetry of conservative mechanical systems. This directly leads to local existence and uniqueness results for Eigenmanifolds. Furthermore, we show that an additional spatial symmetry provides Eigenmanifolds with yet stronger properties of Rosenberg manifolds, which can be favorable for control applications, and we present a sufficient condition for their existence and uniqueness. These theoretical results are numerically confirmed on two mechanical systems with a non-constant inertia tensor: a double pendulum and a 5-link pendulum.

Software for the SpaceDREAM Robotic Arm

Sep 26, 2024

Abstract:Impedance-controlled robots are widely used on Earth to perform interaction-rich tasks and will be a key enabler for In-Space Servicing, Assembly and Manufacturing (ISAM) activities. This paper introduces the software architecture used on the On-Board Computer (OBC) for the planned SpaceDREAM mission, which aims to validate such a robotic arm in Low Earth Orbit (LEO) and is conducted by the German Aerospace Center (DLR) in cooperation with KINETIK Space GmbH and the Technical University of Munich (TUM). During the mission, several free-motion and contact tasks are to be performed in order to verify proper functionality of the robot in position and impedance control on joint level as well as in Cartesian control. The tasks are selected to be representative of subsequent servicing missions, e.g., those requiring interface docking or precise manipulation. The software on the OBC commands the robot's joints via SpaceWire to perform those mission tasks, reads camera images and data from additional sensors, and sends telemetry data through an Ethernet link via the spacecraft down to Earth. It is set up to execute a predefined mission after receiving a start signal from the spacecraft, while remaining extendable to receive commands from Earth in later missions. The core design principle was to reuse as much existing software as possible and to stay as close as possible to existing robot software stacks at DLR. Compared to a custom development of all robot software, this allowed for a quick full operational start of the robot arm, a lower entry barrier for software developers, and the reuse of existing libraries. While not every line of code can be tested with this design, most of the software has already proven its functionality through daily execution on multiple robot systems.

Interactive incremental learning of generalizable skills with local trajectory modulation

Sep 09, 2024

Abstract:The problem of generalization in learning from demonstration (LfD) has received considerable attention over the years, particularly within the context of movement primitives, where a number of approaches have emerged. Recently, two important approaches have gained recognition. While one leverages via-points to adapt skills locally by modulating demonstrated trajectories, another relies on so-called task-parameterized models that encode movements with respect to different coordinate systems, using a product of probabilities for generalization. While the former are well-suited to precise, local modulations, the latter aim at generalizing over large regions of the workspace and often involve multiple objects. Addressing the quality of generalization by leveraging both approaches simultaneously has received little attention. In this work, we propose an interactive imitation learning framework that simultaneously leverages local and global modulations of trajectory distributions. Building on the kernelized movement primitives (KMP) framework, we introduce novel mechanisms for skill modulation from direct human corrective feedback. Our approach particularly exploits the concept of via-points to incrementally and interactively 1) improve the model accuracy locally, 2) add new objects to the task during execution and 3) extend the skill into regions where demonstrations were not provided. We evaluate our method on a bearing ring-loading task using a torque-controlled, 7-DoF, DLR SARA robot.
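The "product of probabilities" used by task-parameterized models can be illustrated with a small sketch. It assumes that each coordinate system contributes a Gaussian prediction already expressed in a common world frame; the two frames and their numbers below are hypothetical and are not taken from the paper.

```python
import numpy as np

def gaussian_product(means, covs):
    """Fuse Gaussians N(mu_i, Sigma_i) by multiplying their densities.

    The renormalized product is again Gaussian with
    Sigma = (sum_i Sigma_i^-1)^-1 and mu = Sigma @ sum_i (Sigma_i^-1 @ mu_i).
    """
    precisions = [np.linalg.inv(c) for c in covs]
    cov = np.linalg.inv(sum(precisions))
    mean = cov @ sum(p @ m for p, m in zip(precisions, means))
    return mean, cov

# Hypothetical frame A is confident along x, frame B is confident along y.
mu_a, cov_a = np.array([0.0, 0.0]), np.diag([0.01, 1.0])
mu_b, cov_b = np.array([1.0, 1.0]), np.diag([1.0, 0.01])

mean, cov = gaussian_product([mu_a, mu_b], [cov_a, cov_b])
print(mean)  # x stays close to frame A's value, y close to frame B's value
```

The fused mean follows each frame along the direction in which that frame is most certain, which is what lets such models generalize when the frames (objects) move.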

Swing-Up of a Weakly Actuated Double Pendulum via Nonlinear Normal Modes

Apr 12, 2024

Abstract:We identify the nonlinear normal modes spawning from the stable equilibrium of a double pendulum under gravity, and we establish their connection to homoclinic orbits through the unstable upright position as energy increases. This result is exploited to devise an efficient swing-up strategy for a double pendulum with weak, saturating actuators. Our approach involves stabilizing the system onto periodic orbits associated with the nonlinear modes while gradually injecting energy. Since these modes are autonomous system evolutions, the required control effort for stabilization is minimal. Even with actuator limitations of less than 1% of the maximum gravitational torque, the proposed method accomplishes the swing-up of the double pendulum by allowing sufficient time.
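The idea of gradually injecting energy with a weak, saturating actuator can be sketched on a much simpler system. The example below is not the method of the paper: it uses a single pendulum (an assumption made for brevity) and a torque that always acts along the current velocity, so the injected power `tau * dq` is never negative. All parameter values are illustrative.

```python
import math

G, M, L = 9.81, 1.0, 1.0        # gravity, mass, length (illustrative values)
TAU_MAX = 0.01 * M * G * L      # actuator limit: 1% of the peak gravitational torque
E_TOP = 2.0 * M * G * L         # potential energy at the upright position

def energy(q, dq):
    """Total mechanical energy, with zero at the hanging equilibrium."""
    return 0.5 * M * L**2 * dq**2 + M * G * L * (1.0 - math.cos(q))

def deriv(q, dq):
    """State derivative with a saturated torque aligned with the velocity."""
    tau = math.copysign(TAU_MAX, dq) if dq != 0.0 else 0.0
    ddq = (tau - M * G * L * math.sin(q)) / (M * L**2)
    return dq, ddq

def rk4_step(q, dq, dt):
    """One classical Runge-Kutta step."""
    k1q, k1v = deriv(q, dq)
    k2q, k2v = deriv(q + 0.5 * dt * k1q, dq + 0.5 * dt * k1v)
    k3q, k3v = deriv(q + 0.5 * dt * k2q, dq + 0.5 * dt * k2v)
    k4q, k4v = deriv(q + dt * k3q, dq + dt * k3v)
    q += dt * (k1q + 2 * k2q + 2 * k3q + k4q) / 6.0
    dq += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
    return q, dq

q, dq, t, dt = 0.1, 0.0, 0.0, 0.002   # start near the stable equilibrium
e_start = energy(q, dq)
while energy(q, dq) < E_TOP and t < 600.0:
    q, dq = rk4_step(q, dq, dt)
    t += dt
e_final = energy(q, dq)
print(f"energy rose from {e_start:.3f} J to {e_final:.3f} J")
```

Because the torque is tiny compared to gravity, the pendulum needs many oscillations to accumulate enough energy, which mirrors the trade-off between actuator strength and swing-up time described in the abstract.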

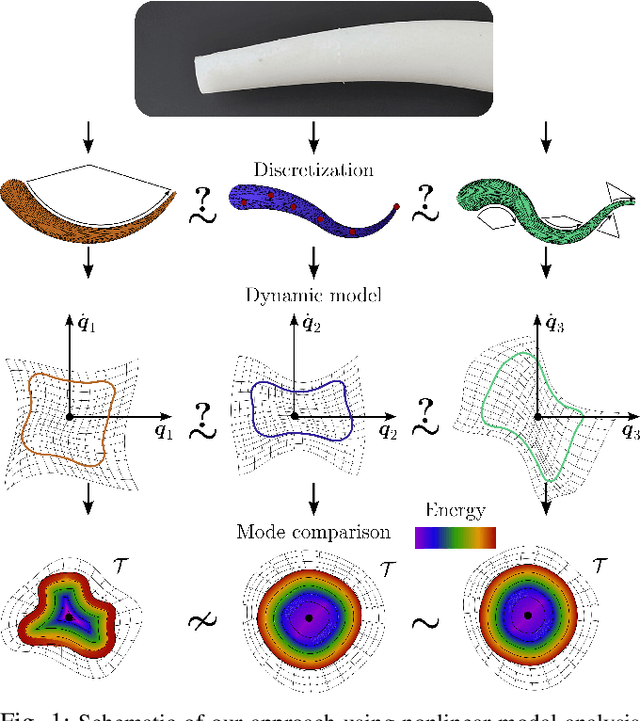

Nonlinear Modes as a Tool for Comparing the Mathematical Structure of Dynamic Models of Soft Robots

Feb 10, 2024

Abstract:Continuum soft robots are nonlinear mechanical systems with theoretically infinite degrees of freedom (DoFs) that exhibit complex behaviors. Achieving motor intelligence under dynamic conditions necessitates the development of control-oriented reduced-order models (ROMs), which employ as few DoFs as possible while still accurately capturing the core characteristics of the theoretically infinite-dimensional dynamics. However, there is no quantitative way to measure if the ROM of a soft robot has succeeded in this task. In other fields, like structural dynamics or flexible link robotics, linear normal modes are routinely used to this end. Yet, this theory is not applicable to soft robots due to their nonlinearities. In this work, we propose to use the recent nonlinear extension in modal theory -- called eigenmanifolds -- as a means to evaluate control-oriented models for soft robots and compare them. To achieve this, we propose three similarity metrics relying on the projection of the nonlinear modes of the system into a task space of interest. We use this approach to compare quantitatively, for the first time, ROMs of increasing order generated under the piecewise constant curvature (PCC) hypothesis with a high-dimensional finite element (FE)-like model of a soft arm. Results show that by increasing the order of the discretization, the eigenmanifolds of the PCC model converge to those of the FE model.

AI-enabled Cyber-Physical In-Orbit Factory -- AI approaches based on digital twin technology for robotic small satellite production

Feb 05, 2024

Abstract:With the ever-increasing number of active satellites in space, the rising demand for larger formations of small satellites and the commercialization of the space industry (so-called New Space), the realization of manufacturing processes in orbit comes closer to reality. Reduced launch costs and risks, faster on-demand deployment of individually configured satellites, and the prospect of on-orbit servicing make the idea of an in-orbit factory promising. In this paper, we present a novel approach to an in-orbit factory of small satellites covering a digital process twin, AI-based fault detection, and teleoperated robot control, which are being researched as part of the "AI-enabled Cyber-Physical In-Orbit Factory" project. In addition to the integration of modern automation and Industry 4.0 production approaches, the question of how artificial intelligence (AI) and learning approaches can be used to make the production process more robust, fault-tolerant and autonomous is addressed. This lays the foundation for a later realisation of satellite production in space in the form of an in-orbit factory. A central aspect is the development of a robotic AIT (Assembly, Integration and Testing) system in which a small satellite can be assembled by a manipulator robot from modular subsystems. Approaches developed to improve this production process with AI include employing neural networks for optical and electrical fault detection of components. Force-sensitive measuring and motion training help to deal with uncertainties and tolerances during assembly. An AI-guided teleoperated control of the robot arm allows for human intervention, while a Digital Process Twin represents process data and provides supervision during the whole production process. Approaches and results towards automated satellite production are presented in detail.

Towards Safe and Collaborative Robotic Ultrasound Tissue Scanning in Neurosurgery

Jan 04, 2024

Abstract:Intraoperative ultrasound imaging is used to facilitate safe brain tumour resection. However, due to challenges with image interpretation and the physical scanning, this tool has yet to achieve widespread adoption in neurosurgery. In this paper, we introduce the components and workflow of a novel, versatile robotic platform for intraoperative ultrasound tissue scanning in neurosurgery. An RGB-D camera attached to the robotic arm allows for automatic object localisation with ArUco markers, and 3D surface reconstruction as a triangular mesh using the ImFusion Suite software solution. Impedance controlled guidance of the US probe along arbitrary surfaces, represented as a mesh, enables collaborative US scanning, i.e., autonomous, teleoperated and hands-on guided data acquisition. A preliminary experiment evaluates the suitability of the conceptual workflow and system components for probe landing on a custom-made soft-tissue phantom. Further assessment in future experiments will be necessary to prove the effectiveness of the presented platform.

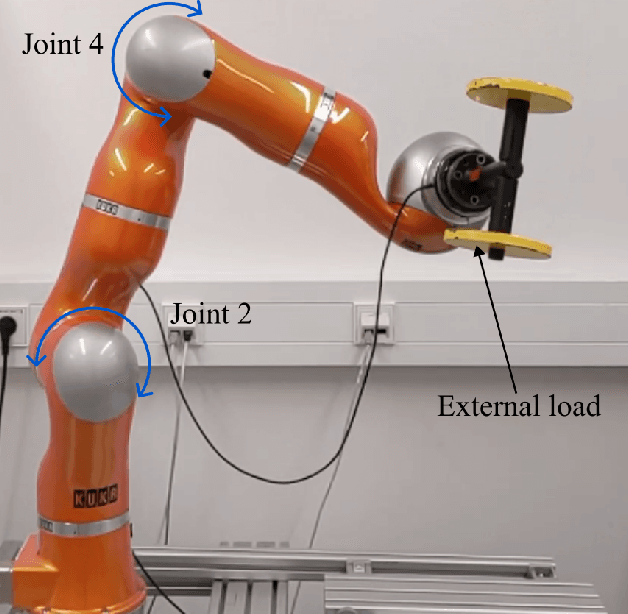

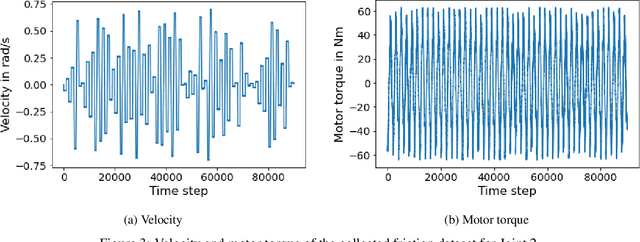

Learning-based adaption of robotic friction models

Oct 25, 2023

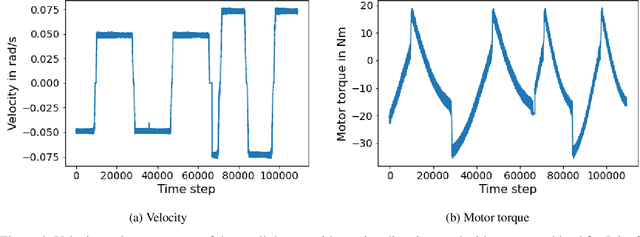

Abstract:In the Fourth Industrial Revolution, wherein artificial intelligence and the automation of machines occupy a central role, the deployment of robots is indispensable. However, the manufacturing process using robots, especially in collaboration with humans, is highly intricate. In particular, modeling the friction torque in robotic joints is a longstanding problem due to the lack of a good mathematical description. This motivates the usage of data-driven methods in recent works. However, model-based and data-driven models often exhibit limitations in their ability to generalize beyond the specific dynamics they were trained on, as we demonstrate in this paper. To address this challenge, we introduce a novel approach based on residual learning, which aims to adapt an existing friction model to new dynamics using as little data as possible. We validate our approach by training a base neural network on a symmetric friction data set to learn an accurate relation between the velocity and the friction torque. Subsequently, to adapt to more complex asymmetric settings, we train a second network on a small dataset, focusing on predicting the residual of the initial network's output. By combining the output of both networks in a suitable manner, our proposed estimator significantly outperforms the conventional model-based approach and the base neural network. Furthermore, we evaluate our method on trajectories involving external loads and still observe a substantial improvement, approximately 60-70%, over the conventional approach. Our method does not rely on data with external load during training, eliminating the need for external torque sensors. This demonstrates the generalization capability of our approach, even with a small amount of data (only 43 seconds of robot movement), enabling adaptation to diverse scenarios based on prior knowledge about friction in different settings.
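The residual-learning scheme described above (a base model trained on symmetric data, plus a second model fitted to the base model's error on a small asymmetric dataset) can be sketched as follows. This is a simplification under stated assumptions: linear least-squares fits stand in for the two neural networks, and the friction laws, coefficients, and sample sizes are hypothetical rather than taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def symmetric_friction(v):
    """Hypothetical base dynamics: Coulomb plus viscous friction."""
    return 1.0 * np.sign(v) + 0.5 * v

def asymmetric_friction(v):
    """Hypothetical new dynamics: direction-dependent Coulomb level."""
    coulomb = np.where(v > 0, 1.2, 0.8)
    return coulomb * np.sign(v) + 0.5 * v

def base_features(v):
    return np.column_stack([np.sign(v), v])

def residual_features(v):
    return np.column_stack([np.ones_like(v), v])

# 1) Fit the base model on a large symmetric dataset.
v_sym = rng.uniform(-2.0, 2.0, 2000)
y_sym = symmetric_friction(v_sym) + 0.02 * rng.standard_normal(v_sym.size)
w_base, *_ = np.linalg.lstsq(base_features(v_sym), y_sym, rcond=None)

# 2) Fit the residual model on a small asymmetric dataset.
v_small = rng.uniform(-2.0, 2.0, 40)
y_small = asymmetric_friction(v_small) + 0.02 * rng.standard_normal(v_small.size)
residual = y_small - base_features(v_small) @ w_base
w_res, *_ = np.linalg.lstsq(residual_features(v_small), residual, rcond=None)

def predict_base(v):
    return base_features(v) @ w_base

def predict_combined(v):
    """Base prediction plus learned residual correction."""
    return predict_base(v) + residual_features(v) @ w_res

# 3) Compare both estimators on noise-free asymmetric test data.
v_test = np.linspace(-2.0, 2.0, 400)
y_test = asymmetric_friction(v_test)
rmse_base = np.sqrt(np.mean((predict_base(v_test) - y_test) ** 2))
rmse_combined = np.sqrt(np.mean((predict_combined(v_test) - y_test) ** 2))
print(rmse_base, rmse_combined)
```

Only the small residual model has to be refitted when the dynamics change, which is why a few dozen samples suffice in this toy setting.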
