Cosimo Della Santina

Department of Cognitive Robotics, Delft University of Technology, Delft, The Netherlands; Institute of Robotics and Mechatronics, German Aerospace Center

Model-based Optimal Control for Rigid-Soft Underactuated Systems

Feb 03, 2026

Abstract: Continuum soft robots are inherently underactuated and subject to intrinsic input constraints, making dynamic control particularly challenging, especially in hybrid rigid-soft robots. While most existing methods focus on quasi-static behaviors, dynamic tasks such as swing-up require accurate exploitation of continuum dynamics. This has led to studies on simple low-order template systems that often fail to capture the complexity of real continuum deformations. Model-based optimal control offers a systematic solution; however, its application to rigid-soft robots is often limited by the computational cost and inaccuracy of numerical differentiation for high-dimensional models. Building on recent advances in the Geometric Variable Strain model that enable analytical derivatives, this work investigates three optimal control strategies for underactuated soft systems (Direct Collocation, Differential Dynamic Programming, and Nonlinear Model Predictive Control) to perform dynamic swing-up tasks. To address stiff continuum dynamics and constrained actuation, implicit integration schemes and warm-start strategies are employed to improve numerical robustness and computational efficiency. The methods are evaluated in simulation on three rigid-soft and high-order soft benchmark systems (the Soft Cart-Pole, the Soft Pendubot, and the Soft Furuta Pendulum), highlighting their performance and computational trade-offs.

Segmenting the Complex and Irregular in Two-Phase Flows: A Real-World Empirical Study with SAM2

Aug 07, 2025

Abstract: Segmenting gas bubbles in multiphase flows is a critical yet unsolved challenge in numerous industrial settings, from metallurgical processing to maritime drag reduction. Traditional approaches, and most recent learning-based methods, assume near-spherical shapes, limiting their effectiveness in regimes where bubbles undergo deformation, coalescence, or breakup. This complexity is particularly evident in air lubrication systems, where coalesced bubbles form amorphous and topologically diverse patches. In this work, we revisit the problem through the lens of modern vision foundation models. We cast the task as a transfer learning problem and demonstrate, for the first time, that a fine-tuned Segment Anything Model (SAM v2.1) can accurately segment highly non-convex, irregular bubble structures using as few as 100 annotated images.

A Survey on Soft Robot Adaptability: Implementations, Applications, and Prospects

Jun 24, 2025

Abstract: Soft robots, compared to rigid robots, possess inherent advantages, including higher degrees of freedom, compliance, and enhanced safety, which have contributed to their increasing application across various fields. Among these benefits, adaptability is particularly noteworthy. In this paper, adaptability in soft robots is categorized into external and internal adaptability. External adaptability refers to the robot's ability to adjust, either passively or actively, to variations in environments, object properties, geometries, and task dynamics. Internal adaptability refers to the robot's ability to cope with internal variations, such as manufacturing tolerances or material aging, and to generalize control strategies across different robots. As the field of soft robotics continues to evolve, the significance of adaptability has become increasingly pronounced. In this review, we summarize various approaches to enhancing the adaptability of soft robots, including design, sensing, and control strategies. Additionally, we assess the impact of adaptability on applications such as surgery, wearable devices, locomotion, and manipulation. We also discuss the limitations of soft robotics adaptability and prospective directions for future research. By analyzing adaptability through the lenses of implementation, application, and challenges, this paper aims to provide a comprehensive understanding of this essential characteristic in soft robotics and its implications for diverse applications.

When do Lyapunov Subcenter Manifolds become Eigenmanifolds?

May 19, 2025

Abstract: Multi-body mechanical systems have rich internal dynamics, which can be exploited to formulate efficient control targets. For periodic regulation tasks in robotics applications, this motivated the extension of the theory on nonlinear normal modes to Riemannian manifolds, and led to the definition of Eigenmanifolds. This definition is geometric, which is advantageous for generality within robotics but also obscures the connection of Eigenmanifolds to a large body of results from the literature on nonlinear dynamics. We bridge this gap, showing that Eigenmanifolds are instances of Lyapunov subcenter manifolds (LSMs), and that their stronger geometric properties with respect to LSMs follow from a time-symmetry of conservative mechanical systems. This directly leads to local existence and uniqueness results for Eigenmanifolds. Furthermore, we show that an additional spatial symmetry provides Eigenmanifolds with yet stronger properties of Rosenberg manifolds, which can be favorable for control applications, and we present a sufficient condition for their existence and uniqueness. These theoretical results are numerically confirmed on two mechanical systems with a non-constant inertia tensor: a double pendulum and a 5-link pendulum.

Contact-Aware Safety in Soft Robots Using High-Order Control Barrier and Lyapunov Functions

May 05, 2025

Abstract: Robots operating alongside people, particularly in sensitive scenarios such as aiding the elderly with daily tasks or collaborating with workers in manufacturing, must guarantee safety and cultivate user trust. Continuum soft manipulators promise safety through material compliance, but as designs evolve for greater precision, payload capacity, and speed, and increasingly incorporate rigid elements, their injury risk resurfaces. In this letter, we introduce a comprehensive High-Order Control Barrier Function (HOCBF) + High-Order Control Lyapunov Function (HOCLF) framework that enforces strict contact force limits across the entire soft-robot body during environmental interactions. Our approach combines a differentiable Piecewise Cosserat-Segment (PCS) dynamics model with a convex-polygon distance approximation metric, named Differentiable Conservative Separating Axis Theorem (DCSAT), based on the soft robot geometry to enable real-time, whole-body collision detection, resolution, and enforcement of the safety constraints. By embedding HOCBFs into our optimization routine, we guarantee safety and actively regulate environmental coupling, allowing, for instance, safe object manipulation under HOCLF-driven motion objectives. Extensive planar simulations demonstrate that our method maintains safety-bounded contacts while achieving precise shape and task-space regulation. This work thus lays a foundation for the deployment of soft robots in human-centric environments with provable safety and performance.

Versatile, Robust, and Explosive Locomotion with Rigid and Articulated Compliant Quadrupeds

Apr 17, 2025

Abstract: Achieving versatile and explosive motion with robustness against dynamic uncertainties is a challenging task. Introducing parallel compliance in quadrupedal design is deemed to enhance locomotion performance, which, however, makes the control task even harder. This work aims to address this challenge by proposing a general template model and establishing an efficient motion planning and control pipeline. To start, we propose a reduced-order template model, the dual-legged actuated spring-loaded inverted pendulum with trunk rotation, which explicitly models parallel compliance by decoupling spring effects from active motor actuation. With this template model, versatile acrobatic motions, such as pronking, froggy jumping, and hop-turn, are generated by a dual-layer trajectory optimization, where the singularity-free body rotation representation is taken into consideration. Integrated with a linear singularity-free tracking controller, enhanced quadrupedal locomotion is achieved. Comparisons with the existing template model reveal the improved accuracy and generalization of our model. Hardware experiments with a rigid quadruped and a newly designed compliant quadruped demonstrate that i) the template model enables generating versatile dynamic motion; ii) parallel elasticity enhances explosive motion. For example, the maximal pronking distance, hop-turn yaw angle, and froggy jumping distance increase by at least 25%, 15%, and 25%, respectively; iii) parallel elasticity improves the robustness against dynamic uncertainties, including modelling errors and external disturbances. For example, the allowable support surface height variation increases by 100% for robust froggy jumping.

Explosive Jumping with Rigid and Articulated Soft Quadrupeds via Example Guided Reinforcement Learning

Mar 20, 2025

Abstract: Achieving controlled jumping behaviour for a quadruped robot is a challenging task, especially when introducing passive compliance in mechanical design. This study addresses this challenge via imitation-based deep reinforcement learning with a progressive training process. To start, we learn the jumping skill by mimicking a coarse jumping example generated by model-based trajectory optimization. Subsequently, we generalize the learned policy to broader situations, including various distances in both forward and lateral directions, and then pursue robust jumping in unknown ground unevenness. In addition, without tuning the reward much, we learn the jumping policy for a quadruped with parallel elasticity. Results show that using the proposed method, i) the robot learns versatile jumps from only a single demonstration, ii) the robot with parallel compliance reduces the landing error by 11.1%, reduces energy cost by 15.2%, and reduces the peak torque by 15.8%, compared to the rigid robot without parallel elasticity, iii) the robot can perform jumps of variable distances with robustness against ground unevenness (maximal 4 cm height perturbations) using only proprioceptive perception.

MUKCa: Accurate and Affordable Cobot Calibration Without External Measurement Devices

Mar 16, 2025

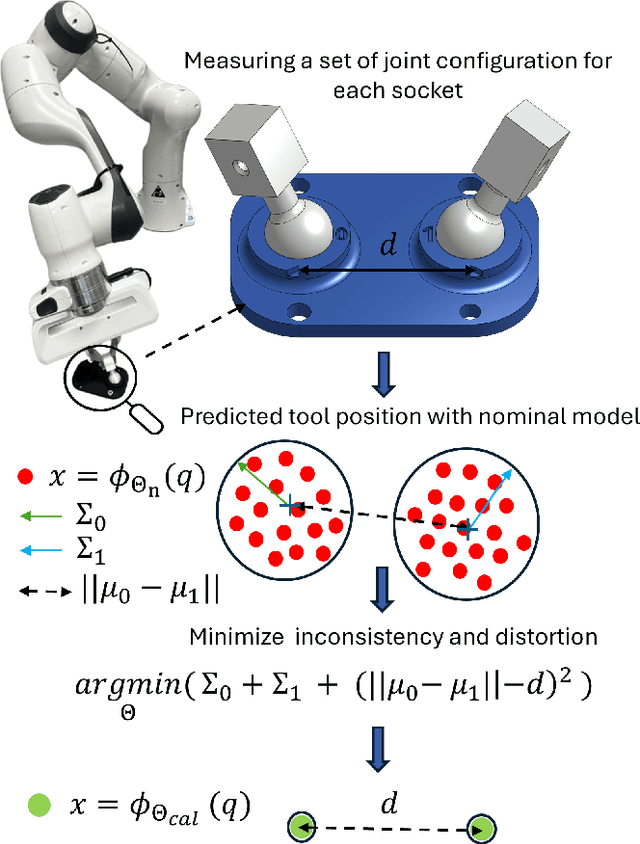

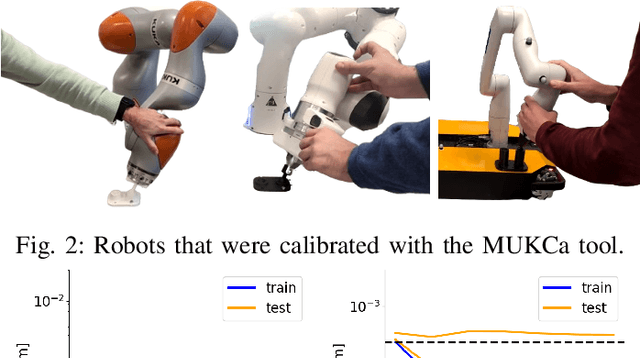

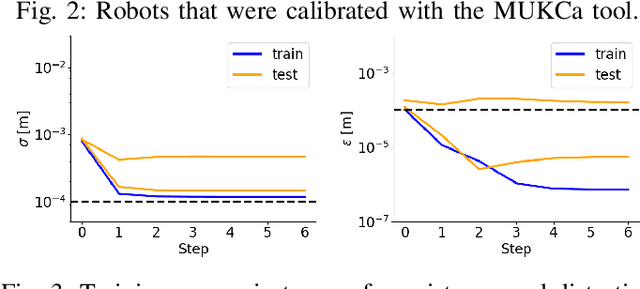

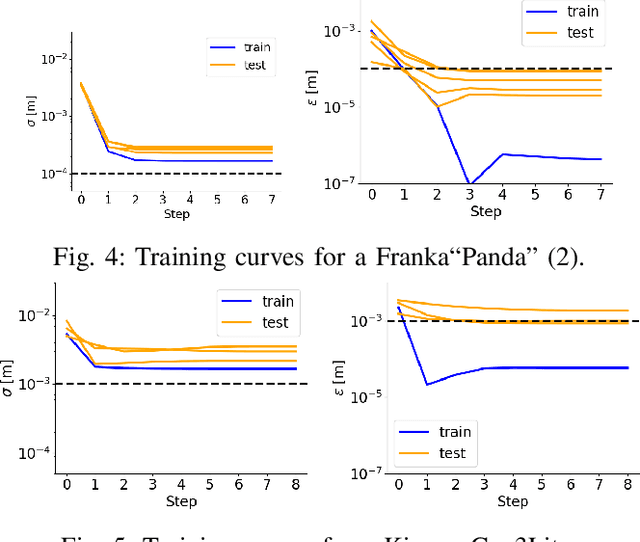

Abstract: To increase the reliability of collaborative robots in performing daily tasks, we require them to be accurate and not only repeatable. However, having a calibrated kinematics model is regrettably a luxury, as available calibration tools are usually more expensive than the robots themselves. With this work, we aim to contribute to the democratization of cobot calibration by providing an inexpensive yet highly effective alternative to existing tools. The proposed minimalist calibration routine relies on a 3D-printable tool as the only physical aid to the calibration process. This two-socket spherical-joint tool kinematically constrains the robot at the end effector while collecting the training set. An optimization routine updates the nominal model to ensure a consistent prediction for each socket and the undistorted mean distance between them. We validated the algorithm on three robotic platforms: Franka, Kuka, and Kinova cobots. The calibrated models reduce the mean absolute error from the order of 10 mm to 0.2 mm for both Franka and Kuka robots. We provide two additional experimental campaigns with the Franka robot to render the improvements more tangible. First, we implement Cartesian control with and without the calibrated model and use it to perform a standard peg-in-the-hole task with a tolerance of 0.4 mm between the peg and the hole. Second, we perform a repeated drawing task combining Cartesian control with learning from demonstration. Both tasks consistently failed when the model was not calibrated, while they consistently succeeded after calibration.

Beyond Behavior Cloning: Robustness through Interactive Imitation and Contrastive Learning

Feb 11, 2025

Abstract: Behavior cloning (BC) traditionally relies on demonstration data, assuming the demonstrated actions are optimal. This can lead to overfitting under noisy data, particularly when expressive models are used (e.g., the energy-based model in Implicit BC). To address this, we extend behavior cloning into an iterative process of optimal action estimation within the Interactive Imitation Learning framework. Specifically, we introduce Contrastive policy Learning from Interactive Corrections (CLIC). CLIC leverages human corrections to estimate a set of desired actions and optimizes the policy to select actions from this set. We provide theoretical guarantees for the convergence of the desired action set to optimal actions in both single and multiple optimal action cases. Extensive simulation and real-robot experiments validate CLIC's advantages over existing state-of-the-art methods, including stable training of energy-based models, robustness to feedback noise, and adaptability to diverse feedback types beyond demonstrations. Our code will be publicly available soon.

SpikingSoft: A Spiking Neuron Controller for Bio-inspired Locomotion with Soft Snake Robots

Jan 31, 2025

Abstract: Inspired by the dynamic coupling of moto-neurons and physical elasticity in animals, this work explores the possibility of generating locomotion gaits by utilizing physical oscillations in a soft snake by means of a low-level spiking neural mechanism. To achieve this goal, we introduce the Double Threshold Spiking neuron model with adjustable thresholds to generate varied output patterns. This neuron model can excite the natural dynamics of soft robotic snakes, and it enables distinct movements, such as turning or moving forward, by simply altering the neural thresholds. Finally, we demonstrate that our approach, termed SpikingSoft, naturally pairs and integrates with reinforcement learning. The high-level agent only needs to adjust the two thresholds to generate complex movement patterns, thus strongly simplifying the learning of reactive locomotion. Simulation results demonstrate that the proposed architecture significantly enhances the performance of the soft snake robot, enabling it to achieve target objectives with a 21.6% increase in success rate, a 29% reduction in time to reach the target, and smoother movements compared to the vanilla reinforcement learning controllers or Central Pattern Generator controller acting in torque space.
