Matteo Saveriano

Dept. of Industrial Engineering

Autonomous Robotic Tissue Palpation and Abnormalities Characterisation via Ergodic Exploration

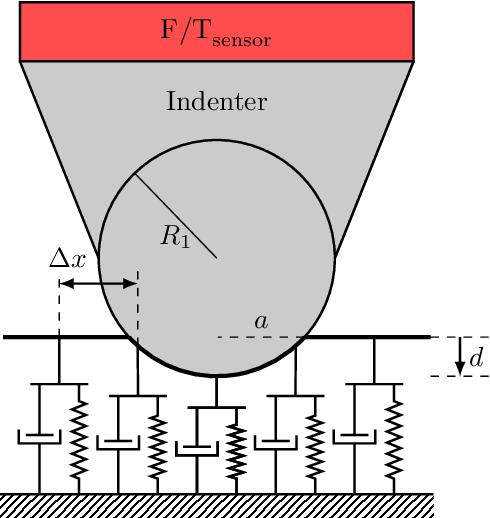

Feb 15, 2026

Abstract:We propose a novel autonomous robotic palpation framework for real-time elastic mapping during tissue exploration using a viscoelastic tissue model. The method combines force-based parameter estimation using a commercial force/torque sensor with an ergodic control strategy driven by a tailored Expected Information Density, which explicitly biases exploration toward diagnostically relevant regions by jointly considering model uncertainty, stiffness magnitude, and spatial gradients. An Extended Kalman Filter is employed to estimate viscoelastic model parameters online, while Gaussian Process Regression provides spatial modelling of the estimated elasticity, and a Heat Equation Driven Area Coverage controller enables adaptive, continuous trajectory planning. Simulations on synthetic stiffness maps demonstrate that the proposed approach achieves better reconstruction accuracy, enhanced segmentation capability, and improved robustness in detecting stiff inclusions compared to Bayesian Optimisation-based techniques. Experimental validation on a silicone phantom with embedded inclusions emulating pathological tissue regions further corroborates the potential of the method for autonomous tissue characterisation in diagnostic and screening applications.

Versatile, Robust, and Explosive Locomotion with Rigid and Articulated Compliant Quadrupeds

Apr 17, 2025

Abstract:Achieving versatile and explosive motion with robustness against dynamic uncertainties is a challenging task. Introducing parallel compliance in quadrupedal design is deemed to enhance locomotion performance, which, however, makes the control task even harder. This work aims to address this challenge by proposing a general template model and establishing an efficient motion planning and control pipeline. To start, we propose a reduced-order template model, the dual-legged actuated spring-loaded inverted pendulum with trunk rotation, which explicitly models parallel compliance by decoupling spring effects from active motor actuation. With this template model, versatile acrobatic motions, such as pronking, froggy jumping, and hop-turn, are generated by a dual-layer trajectory optimization, where the singularity-free body rotation representation is taken into consideration. Integrated with a linear singularity-free tracking controller, enhanced quadrupedal locomotion is achieved. Comparisons with the existing template model reveal the improved accuracy and generalization of our model. Hardware experiments with a rigid quadruped and a newly designed compliant quadruped demonstrate that i) the template model enables generating versatile dynamic motion; ii) parallel elasticity enhances explosive motion (for example, the maximal pronking distance, hop-turn yaw angle, and froggy jumping distance increase by at least 25%, 15% and 25%, respectively); iii) parallel elasticity improves the robustness against dynamic uncertainties, including modelling errors and external disturbances (for example, the allowable support surface height variation increases by 100% for robust froggy jumping).

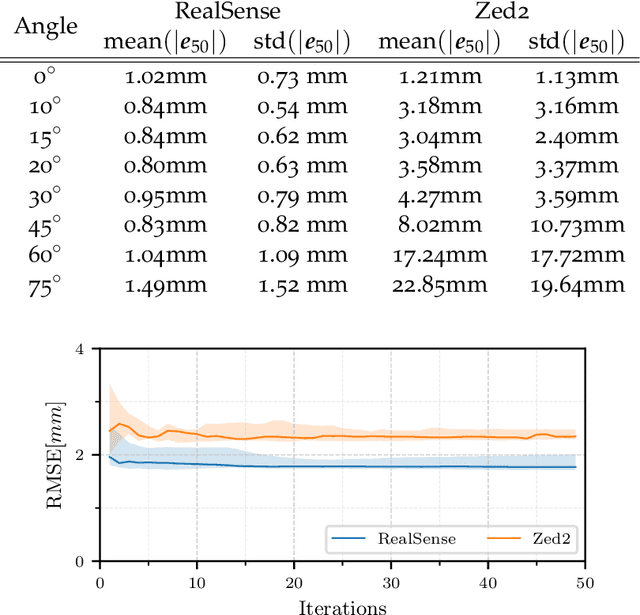

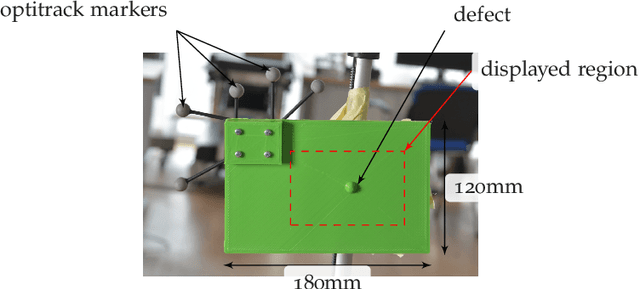

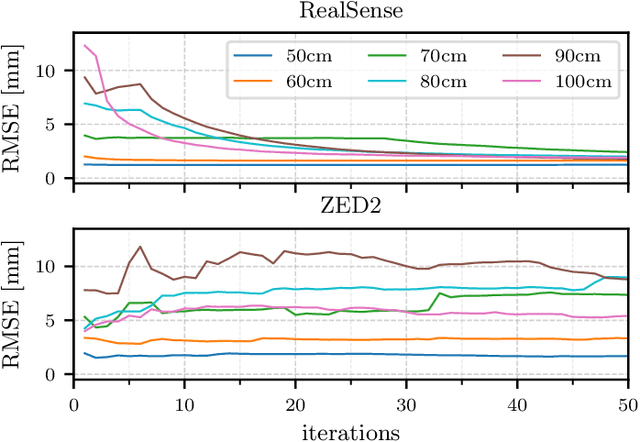

Surface Defect Identification using Bayesian Filtering on a 3D Mesh

Jan 30, 2025

Abstract:This paper presents a CAD-based approach for automated surface defect detection. We leverage the a-priori knowledge embedded in a CAD model and integrate it with point cloud data acquired from commercially available stereo and depth cameras. The proposed method first transforms the CAD model into a high-density polygonal mesh, where each vertex represents a state variable in 3D space. Subsequently, a weighted least squares algorithm is employed to iteratively estimate the state of the scanned workpiece based on the captured point cloud measurements. This framework offers the potential to incorporate information from diverse sensors into the CAD domain, facilitating a more comprehensive analysis. Preliminary results demonstrate promising performance, with the algorithm achieving convergence to a sub-millimeter standard deviation in the region of interest using only approximately 50 point cloud samples. This highlights the potential of utilising commercially available stereo cameras for high-precision quality control applications.

Reactive Robot Navigation Using Quasi-conformal Mappings and Control Barrier Functions

Nov 22, 2024

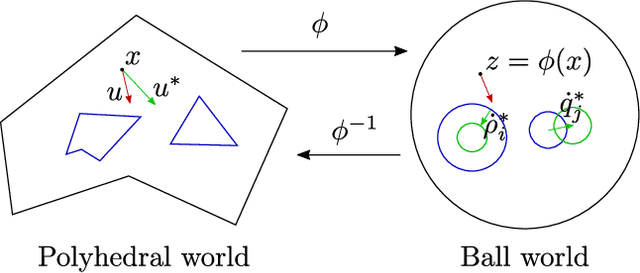

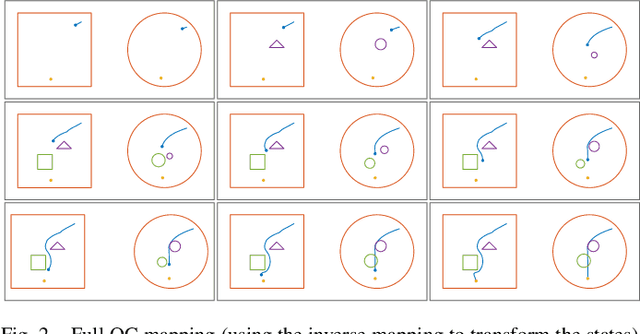

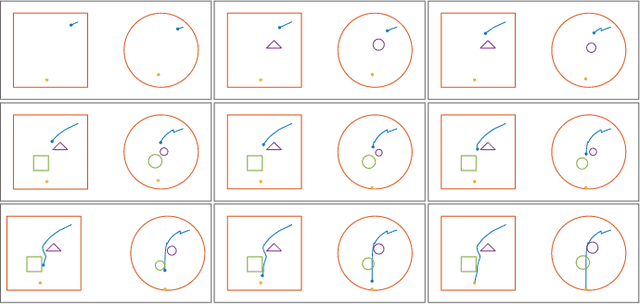

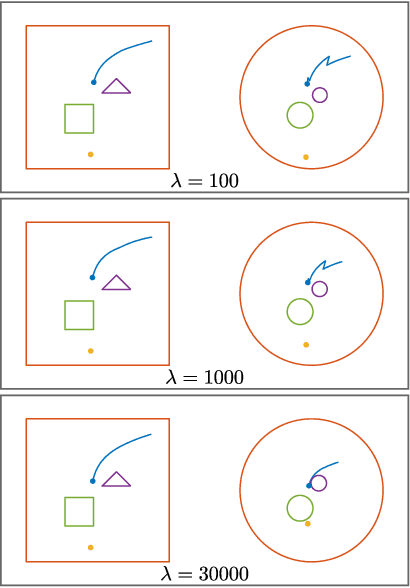

Abstract:This paper presents a robot control algorithm suitable for safe reactive navigation tasks in cluttered environments. The proposed approach consists of transforming the robot workspace into the "ball world", an artificial representation where all obstacle regions are closed balls. Starting from a polyhedral representation of obstacles in the environment, obtained using exteroceptive sensor readings, a computationally efficient mapping to ball-shaped obstacles is constructed using quasi-conformal mappings and Möbius transformations. The geometry of the ball world is amenable to provably safe navigation tasks achieved via control barrier functions employed to ensure collision-free robot motions with guarantees both on safety and on the absence of deadlocks. The performance of the proposed navigation algorithm is showcased and analyzed via extensive simulations and experiments performed using different types of robotic systems, including manipulators and mobile robots.
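
As a rough illustration of how a control barrier function can keep a robot outside a ball-shaped obstacle, the sketch below filters a nominal velocity command. It is a generic textbook construction, not the algorithm of the paper: it assumes a single-integrator robot (velocity is commanded directly), a single ball obstacle, and the barrier h(x) = ||x - c||^2 - r^2. The function name `cbf_filter` and the gain `alpha` are hypothetical, and the deadlock-avoidance guarantees mentioned in the abstract are not reproduced here.

```python
def cbf_filter(x, u_nom, center, radius, alpha=1.0):
    """Minimally modify u_nom so that a single-integrator robot stays outside a ball.

    Enforces grad_h(x) . u + alpha * h(x) >= 0 with h(x) = ||x - c||^2 - r^2.
    With one linear constraint, the minimum-norm correction has a closed form.
    Assumes x is not exactly at the ball centre (the gradient would vanish there).
    """
    d = [xi - ci for xi, ci in zip(x, center)]
    h = sum(di * di for di in d) - radius ** 2        # h >= 0 outside the ball
    grad_h = [2.0 * di for di in d]
    slack = sum(g * u for g, u in zip(grad_h, u_nom)) + alpha * h
    if slack >= 0.0:
        return list(u_nom)                             # nominal command is already safe
    scale = -slack / sum(g * g for g in grad_h)
    # Push the command along grad_h just enough to make the constraint active.
    return [u + scale * g for u, g in zip(u_nom, grad_h)]


# A robot at (2, 0) heading straight at a unit ball centred at the origin.
safe_u = cbf_filter([2.0, 0.0], [-5.0, 0.0], [0.0, 0.0], 1.0)
```

In this example the approach speed toward the obstacle is reduced, while a command pointing away from the ball would pass through unchanged.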

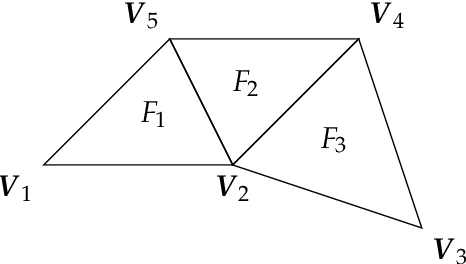

MeshDMP: Motion Planning on Discrete Manifolds using Dynamic Movement Primitives

Oct 19, 2024

Abstract:An open problem in industrial automation is to reliably perform tasks requiring in-contact movements with complex workpieces, as current solutions lack the ability to seamlessly adapt to the workpiece geometry. In this paper, we propose a Learning from Demonstration approach that allows a robot manipulator to learn and generalise motions across complex surfaces by leveraging differential mathematical operators on discrete manifolds to embed information on the geometry of the workpiece extracted from triangular meshes, and extend the Dynamic Movement Primitives (DMPs) framework to generate motions on the mesh surfaces. We also propose an effective strategy to adapt the motion to different surfaces, by introducing an isometric transformation of the learned forcing term. The resulting approach, namely MeshDMP, is evaluated both in simulation and real experiments, showing promising results in typical industrial automation tasks like car surface polishing.

An Anatomy-Aware Shared Control Approach for Assisted Teleoperation of Lung Ultrasound Examinations

Sep 25, 2024

Abstract:The introduction of artificial intelligence and robotics in telehealth is enabling personalised treatment and supporting teleoperated procedures such as lung ultrasound, which has gained attention during the COVID-19 pandemic. Although fully autonomous systems face challenges due to anatomical variability, teleoperated systems appear to be more practical in current healthcare settings. This paper presents an anatomy-aware control framework for teleoperated lung ultrasound. Using biomechanically accurate 3D models such as SMPL and SKEL, the system provides real-time visual feedback and applies virtual constraints to assist in precise probe placement tasks. Evaluations on five subjects show the accuracy of the biomechanical models and the efficiency of the system in improving probe placement and reducing procedure time compared to traditional teleoperation. The results demonstrate that the proposed framework enhances the physician's capabilities in executing remote lung ultrasound examinations, towards more objective and repeatable acquisitions.

A Passivity-Based Variable Impedance Controller for Incremental Learning of Periodic Interactive Tasks

Aug 20, 2024

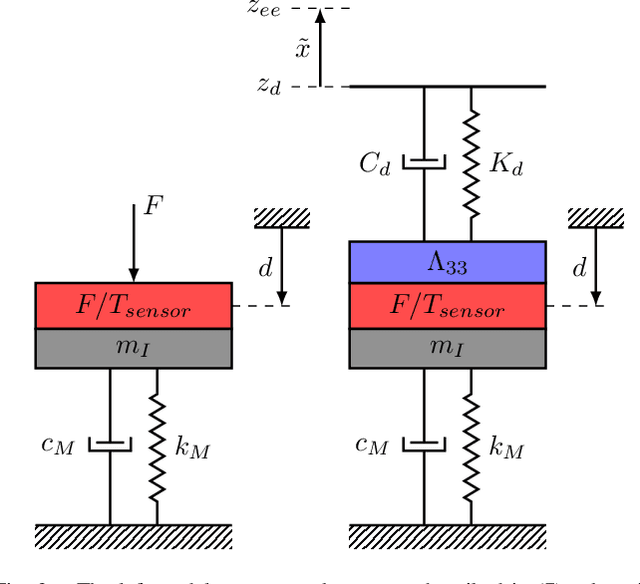

Abstract:In intelligent manufacturing, robots are asked to dynamically adapt their behaviours without reducing productivity. Human teaching, where an operator physically interacts with the robot to demonstrate a new task, is a promising strategy to quickly and intuitively reconfigure the production line. However, physical guidance during task execution poses challenges in terms of both operator safety and system usability. In this paper, we solve this issue by designing a variable impedance control strategy that regulates the interaction with the environment and the physical demonstrations while explicitly preventing passivity violations. We derive constraints to limit not only the energy exchanged with the environment but also the exchanged power, resulting in smoother interactions. By monitoring the energy flow between the robot and the environment, we are able to distinguish between disturbances (to be rejected) and physical guidance (to be accomplished), enabling smooth and controlled transitions from teaching to execution and vice versa. The effectiveness of the proposed approach is validated in wiping tasks with a real robotic manipulator.

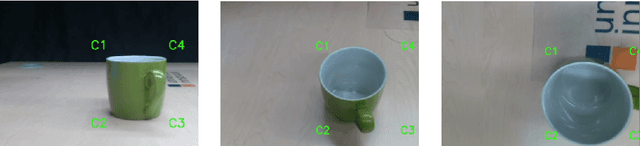

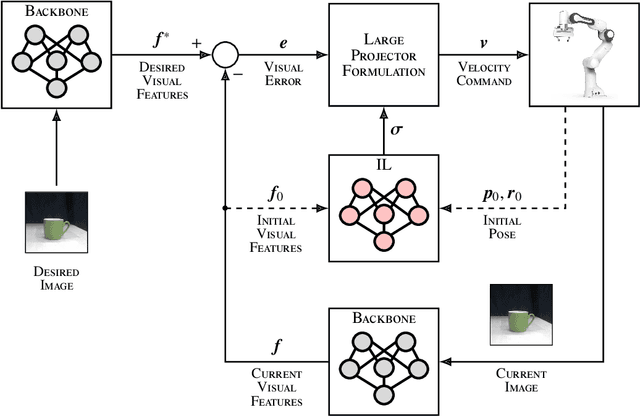

Direct Imitation Learning-based Visual Servoing using the Large Projection Formulation

Jun 13, 2024

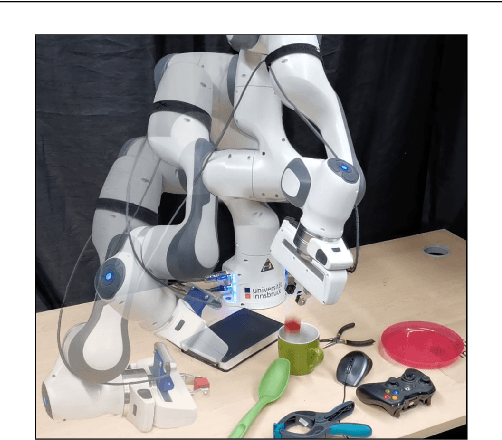

Abstract:Today, robots must be safe, versatile, and user-friendly to operate in unstructured and human-populated environments. Dynamical system-based imitation learning enables robots to perform complex tasks stably and without explicit programming, greatly simplifying their real-world deployment. To exploit the full potential of these systems, it is crucial to implement closed loops that use visual feedback. Vision makes it possible to cope with environmental changes, but is complex to handle due to the high dimension of the image space. This study introduces a dynamical system-based imitation learning approach for direct visual servoing. It leverages off-the-shelf deep learning-based perception backbones to extract robust features from the raw input image, and an imitation learning strategy to execute sophisticated robot motions. The learning blocks are integrated using the large projection task priority formulation. As demonstrated through extensive experimental analysis, the proposed method realizes complex tasks with a robotic manipulator.

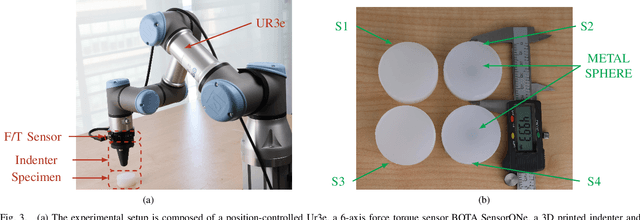

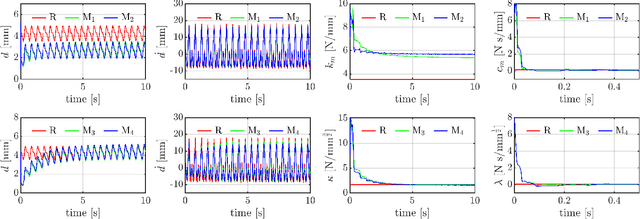

Towards Robotised Palpation for Cancer Detection through Online Tissue Viscoelastic Characterisation with a Collaborative Robotic Arm

Apr 15, 2024

Abstract:This paper introduces a new method for estimating the penetration of the end effector and the parameters of a soft body using a collaborative robotic arm. This is made possible by a dimensionality reduction method that simplifies the Hunt-Crossley model. The parameters can be found without a force sensor thanks to information from the robotic arm controller. To achieve an online estimation, an extended Kalman filter is employed, which embeds the contact dynamic model. The algorithm is tested with various types of silicone, including samples with hard intrusions to simulate cancerous cells within a soft tissue. The results indicate that this technique can accurately determine the parameters and estimate the penetration of the end effector into the soft body. These promising preliminary results demonstrate the potential for robots to serve as an effective tool for early-stage cancer diagnostics.
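
For readers unfamiliar with the Hunt-Crossley model named in this abstract, the sketch below evaluates its standard textbook form, F = k x^n + lambda x^n x_dot, where x is the penetration depth. It does not reproduce the paper's simplified (dimensionality-reduced) model or its extended Kalman filter; the function name and the parameter values in the usage line are illustrative assumptions only.

```python
def hunt_crossley_force(x, x_dot, k, lam, n):
    """Contact force from the standard Hunt-Crossley model.

    x     : penetration depth (m); no contact force when x <= 0
    x_dot : penetration velocity (m/s)
    k     : stiffness coefficient
    lam   : damping coefficient
    n     : exponent capturing the contact geometry
    """
    if x <= 0.0:
        return 0.0
    # The damping term scales with x**n, so the force is continuous at first contact.
    return k * x ** n + lam * x ** n * x_dot


# Illustrative values only: 1 cm static indentation of a soft sample.
force = hunt_crossley_force(0.01, 0.0, k=1000.0, lam=10.0, n=1.5)
```

Because the damping term is multiplied by x^n, the predicted force vanishes at zero penetration, which avoids the force discontinuity at first contact that a linear spring-damper model exhibits.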

Safe Execution of Learned Orientation Skills with Conic Control Barrier Functions

Mar 08, 2024

Abstract:In the field of Learning from Demonstration (LfD), Dynamical Systems (DSs) have gained significant attention due to their ability to generate real-time motions and reach predefined targets. However, the conventional convergence-centric behavior exhibited by DSs may fall short in safety-critical tasks, specifically, those requiring precise replication of demonstrated trajectories or strict adherence to constrained regions even in the presence of perturbations or human intervention. Moreover, existing DS research often assumes demonstrations solely in Euclidean space, overlooking the crucial aspect of orientation in various applications. To alleviate these shortcomings, we present an innovative approach geared toward ensuring the safe execution of learned orientation skills within constrained regions surrounding a reference trajectory. This involves learning a stable DS on SO(3), extracting time-varying conic constraints from the variability observed in expert demonstrations, and bounding the evolution of the DS with a Conic Control Barrier Function (CCBF) to fulfill the constraints. We validated our approach through extensive evaluation in simulation and showcased its effectiveness for a cutting skill in the context of assisted teleoperation.
