Matteo Dalle Vedove

A Task-Driven, Planner-in-the-Loop Computational Design Framework for Modular Manipulators

Dec 18, 2025

Abstract:Modular manipulators composed of pre-manufactured and interchangeable modules offer high adaptability across diverse tasks. However, their deployment requires generating feasible motions while jointly optimizing morphology and mounted pose under kinematic, dynamic, and physical constraints. Moreover, traditional single-branch designs often extend reach by increasing link length, which can easily violate torque limits at the base joint. To address these challenges, we propose a unified task-driven computational framework that integrates trajectory planning across varying morphologies with the co-optimization of morphology and mounted pose. Within this framework, a hierarchical model predictive control (HMPC) strategy is developed to enable motion planning for both redundant and non-redundant manipulators. For design optimization, the CMA-ES is employed to efficiently explore a hybrid search space consisting of discrete morphology configurations and continuous mounted poses. Meanwhile, a virtual module abstraction is introduced to enable bi-branch morphologies, allowing an auxiliary branch to offload torque from the primary branch and extend the achievable workspace without increasing the capacity of individual joint modules. Extensive simulations and hardware experiments on polishing, drilling, and pick-and-place tasks demonstrate the effectiveness of the proposed framework. The results show that: 1) the framework can generate multiple feasible designs that satisfy kinematic and dynamic constraints while avoiding environmental collisions for given tasks; 2) flexible design objectives, such as maximizing manipulability, minimizing joint effort, or reducing the number of modules, can be achieved by customizing the cost functions; and 3) a bi-branch morphology capable of operating in a large workspace can be realized without requiring more powerful basic modules.

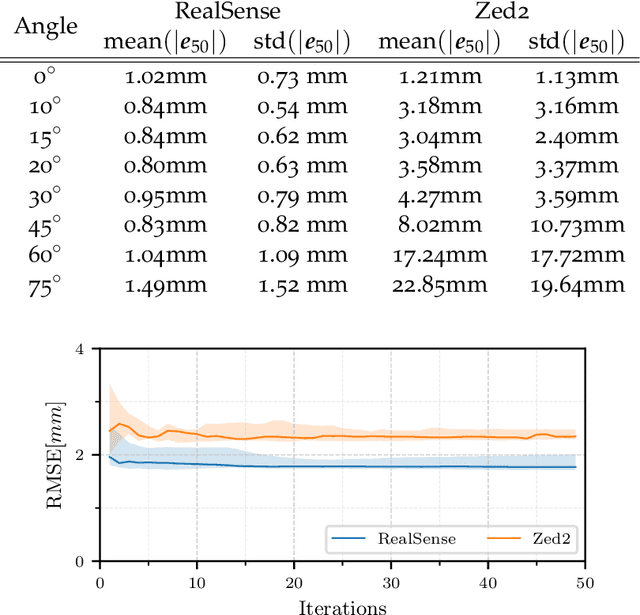

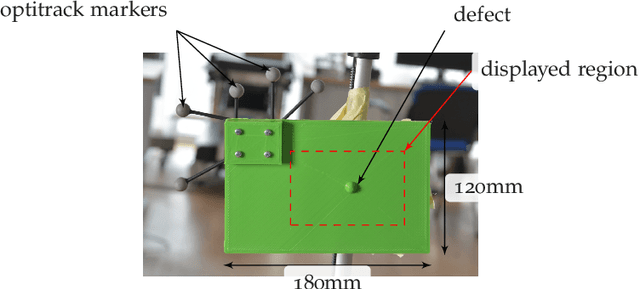

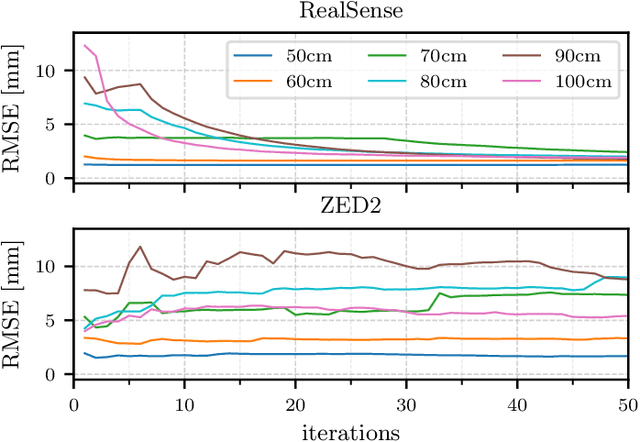

Surface Defect Identification using Bayesian Filtering on a 3D Mesh

Jan 30, 2025

Abstract:This paper presents a CAD-based approach for automated surface defect detection. We leverage the a-priori knowledge embedded in a CAD model and integrate it with point cloud data acquired from commercially available stereo and depth cameras. The proposed method first transforms the CAD model into a high-density polygonal mesh, where each vertex represents a state variable in 3D space. Subsequently, a weighted least squares algorithm is employed to iteratively estimate the state of the scanned workpiece based on the captured point cloud measurements. This framework offers the potential to incorporate information from diverse sensors into the CAD domain, facilitating a more comprehensive analysis. Preliminary results demonstrate promising performance, with the algorithm achieving convergence to a sub-millimeter standard deviation in the region of interest using only approximately 50 point cloud samples. This highlights the potential of utilising commercially available stereo cameras for high-precision quality control applications.
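The iterative weighted least squares estimate described in this abstract can be illustrated with a minimal sketch for a single mesh vertex. It assumes, purely for illustration, that the vertex state is one scalar (for example an offset along the vertex normal, in millimetres) and that each point cloud sample yields one noisy measurement of it; the function name `rwls_update`, the prior, and the noise level are hypothetical and are not taken from the paper.

```python
import numpy as np

def rwls_update(estimate, variance, measurement, meas_variance):
    """One recursive weighted least squares step for a constant scalar state.

    The measurement is weighted by the inverse of its variance, so noisy
    samples move the estimate less than precise ones.
    """
    gain = variance / (variance + meas_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance

# Hypothetical setup: one vertex, 50 noisy samples of its normal offset (mm).
rng = np.random.default_rng(0)
true_offset = 0.8          # assumed ground-truth defect depth
meas_std = 2.0             # assumed per-sample sensor noise
estimate, variance = 0.0, 100.0  # weak prior: nominal CAD surface, large uncertainty

for _ in range(50):
    z = true_offset + rng.normal(0.0, meas_std)
    estimate, variance = rwls_update(estimate, variance, z, meas_std**2)

posterior_std = float(np.sqrt(variance))
print(f"estimate = {estimate:.3f} mm, std = {posterior_std:.3f} mm")
```

With a fixed measurement variance, the estimate's variance follows 1/P_n = 1/P_0 + n/r, which is why a few dozen samples from a sensor with millimetre-level noise can already bring the standard deviation below a millimetre in this toy setting.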

MeshDMP: Motion Planning on Discrete Manifolds using Dynamic Movement Primitives

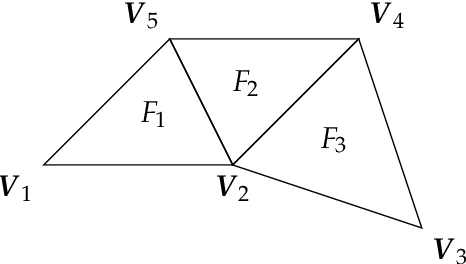

Oct 19, 2024

Abstract:An open problem in industrial automation is to reliably perform tasks requiring in-contact movements with complex workpieces, as current solutions lack the ability to seamlessly adapt to the workpiece geometry. In this paper, we propose a Learning from Demonstration approach that allows a robot manipulator to learn and generalise motions across complex surfaces by leveraging differential mathematical operators on discrete manifolds to embed information on the geometry of the workpiece extracted from triangular meshes, and extend the Dynamic Movement Primitives (DMPs) framework to generate motions on the mesh surfaces. We also propose an effective strategy to adapt the motion to different surfaces, by introducing an isometric transformation of the learned forcing term. The resulting approach, namely MeshDMP, is evaluated both in simulation and real experiments, showing promising results in typical industrial automation tasks like car surface polishing.
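For readers unfamiliar with the DMP framework that this abstract extends, the following is a minimal sketch of a standard one-dimensional Euclidean DMP rollout. It is not the mesh-based formulation of the paper: the function name `rollout_dmp`, the gains, and the basis-function layout are illustrative assumptions, and the forcing-term weights are set by hand rather than learned from a demonstration.

```python
import numpy as np

def rollout_dmp(y0, goal, weights, centers, widths, tau=1.0, duration=1.0,
                dt=0.001, alpha=25.0, beta=6.25, alpha_x=4.0):
    """Integrate a 1-D discrete DMP with explicit Euler steps.

    Transformation system: tau * dz = alpha * (beta * (goal - y) - z) + f(x)
                           tau * dy = z
    Canonical system:      tau * dx = -alpha_x * x
    """
    y, z, x = float(y0), 0.0, 1.0
    trajectory = [y]
    for _ in range(int(round(duration / dt))):
        # Forcing term: normalised Gaussian basis functions, gated by the phase x
        psi = np.exp(-widths * (x - centers) ** 2)
        forcing = (psi @ weights) / (psi.sum() + 1e-10) * x * (goal - y0)
        dz = (alpha * (beta * (goal - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        trajectory.append(y)
    return np.array(trajectory)

# Hypothetical basis layout: centers spread along the decaying phase variable.
n_basis = 10
centers = np.exp(-4.0 * np.linspace(0.0, 1.0, n_basis))
widths = np.full(n_basis, 50.0)

unforced = rollout_dmp(0.0, 1.0, np.zeros(n_basis), centers, widths)
forced = rollout_dmp(0.0, 1.0, np.full(n_basis, 50.0), centers, widths)
```

With zero weights the system reduces to a critically damped spring pulling toward the goal; non-zero weights reshape the path while the phase-gated forcing term fades out, so the goal is still reached as the phase decays.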

A Passivity-Based Variable Impedance Controller for Incremental Learning of Periodic Interactive Tasks

Aug 20, 2024

Abstract:In intelligent manufacturing, robots are asked to dynamically adapt their behaviours without reducing productivity. Human teaching, where an operator physically interacts with the robot to demonstrate a new task, is a promising strategy to quickly and intuitively reconfigure the production line. However, physical guidance during task execution poses challenges in terms of both operator safety and system usability. In this paper, we solve this issue by designing a variable impedance control strategy that regulates the interaction with the environment and the physical demonstrations, explicitly preventing at the same time passivity violations. We derive constraints to limit not only the exchanged energy with the environment but also the exchanged power, resulting in smoother interactions. By monitoring the energy flow between the robot and the environment, we are able to distinguish between disturbances (to be rejected) and physical guidance (to be accomplished), enabling smooth and controlled transitions from teaching to execution and vice versa. The effectiveness of the proposed approach is validated in wiping tasks with a real robotic manipulator.
