Edoardo Lamon

Dept. of Eng. and Computer Science

A Temporal Planning Framework for Multi-Agent Systems via LLM-Aided Knowledge Base Management

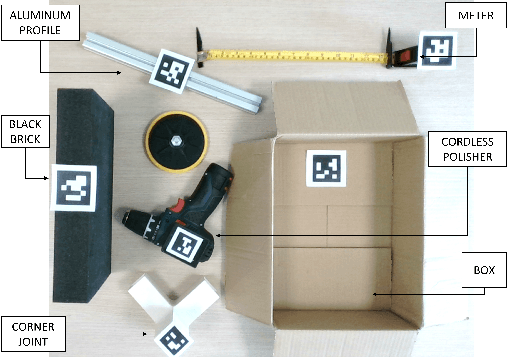

Feb 26, 2025

Abstract:This paper presents a novel framework, called PLANTOR (PLanning with Natural language for Task-Oriented Robots), that integrates Large Language Models (LLMs) with Prolog-based knowledge management and planning for multi-robot tasks. The system employs a two-phase generation of a robot-oriented knowledge base, ensuring reusability and compositional reasoning, as well as a three-step planning procedure that handles temporal dependencies, resource constraints, and parallel task execution via mixed-integer linear programming. The final plan is converted into a Behaviour Tree for direct use in ROS2. We tested the framework in multi-robot assembly tasks within a block world and an arch-building scenario. Results demonstrate that LLMs can produce accurate knowledge bases with modest human feedback, while Prolog guarantees formal correctness and explainability. This approach underscores the potential of LLM integration for advanced robotics tasks requiring flexible, scalable, and human-understandable planning.

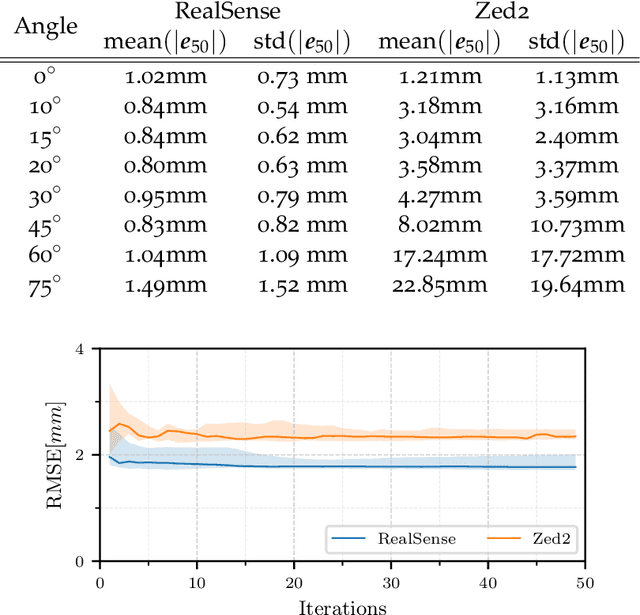

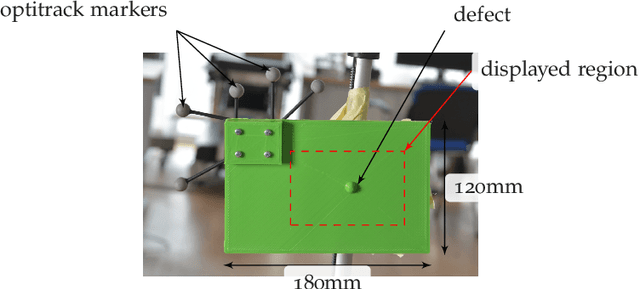

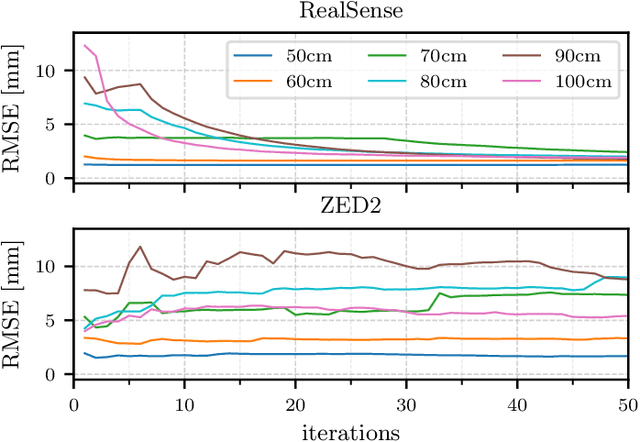

Surface Defect Identification using Bayesian Filtering on a 3D Mesh

Jan 30, 2025

Abstract:This paper presents a CAD-based approach for automated surface defect detection. We leverage the a-priori knowledge embedded in a CAD model and integrate it with point cloud data acquired from commercially available stereo and depth cameras. The proposed method first transforms the CAD model into a high-density polygonal mesh, where each vertex represents a state variable in 3D space. Subsequently, a weighted least squares algorithm is employed to iteratively estimate the state of the scanned workpiece based on the captured point cloud measurements. This framework offers the potential to incorporate information from diverse sensors into the CAD domain, facilitating a more comprehensive analysis. Preliminary results demonstrate promising performance, with the algorithm achieving convergence to a sub-millimeter standard deviation in the region of interest using only approximately 50 point cloud samples. This highlights the potential of utilising commercially available stereo cameras for high-precision quality control applications.
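As a minimal sketch of the per-vertex estimation idea, the snippet below fuses scalar measurements into one vertex state by inverse-variance weighting, which is the recursive form of weighted least squares for a scalar. The `VertexState` class, the choice of a scalar offset along the vertex normal, and all numeric values are assumptions made for illustration; the abstract does not specify the state definition or the noise model.

```python
from dataclasses import dataclass


@dataclass
class VertexState:
    """Hypothetical state of one mesh vertex (not taken from the paper)."""
    offset: float    # estimated deviation along the vertex normal, in mm
    variance: float  # uncertainty of that estimate, in mm^2


def wls_update(state: VertexState, measurement: float, meas_variance: float) -> VertexState:
    """Fuse one scalar measurement into the state by inverse-variance weighting."""
    w_prior = 1.0 / state.variance
    w_meas = 1.0 / meas_variance
    fused_variance = 1.0 / (w_prior + w_meas)
    fused_offset = fused_variance * (w_prior * state.offset + w_meas * measurement)
    return VertexState(fused_offset, fused_variance)


# Start from a weak prior centred on the nominal CAD surface (offset 0).
state = VertexState(offset=0.0, variance=100.0)

# Illustrative depth-camera readings for this vertex, each with variance 4 mm^2.
for z in [1.2, 0.8, 1.1, 0.9]:
    state = wls_update(state, z, meas_variance=4.0)
```

Each update shrinks the variance, so the estimate tightens as more point cloud samples land near the vertex.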

Exploiting Information Theory for Intuitive Robot Programming of Manual Activities

Oct 31, 2024

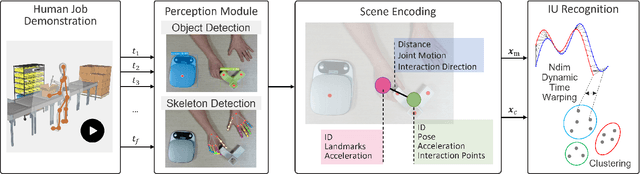

Abstract:Observational learning is a promising approach to enable people without expertise in programming to transfer skills to robots in a user-friendly manner, since it mirrors how humans learn new behaviors by observing others. Many existing methods focus on instructing robots to mimic human trajectories, but motion-level strategies often pose challenges in skills generalization across diverse environments. This paper proposes a novel framework that allows robots to achieve a higher-level understanding of human-demonstrated manual tasks recorded in RGB videos. By recognizing the task structure and goals, robots generalize what they observed to unseen scenarios. We ground our task representation in Shannon's Information Theory (IT), which is applied for the first time to manual tasks. IT helps extract the active scene elements and quantify the information shared between hands and objects. We exploit scene graph properties to encode the extracted interaction features in a compact structure and segment the demonstration into blocks, streamlining the generation of Behavior Trees for robot replicas. Experiments validated the effectiveness of IT to automatically generate robot execution plans from a single human demonstration. Additionally, we provide HANDSOME, an open-source dataset of HAND Skills demOnstrated by Multi-subjEcts, to promote further research and evaluation in this field.
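To illustrate what "information shared between hands and objects" can mean, the sketch below computes the empirical mutual information between two discrete per-frame signals. The motion labels and the pairing of a hand with an object are hypothetical; the abstract does not state which variables or estimator the authors use.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Empirical mutual information I(X;Y) in bits from a list of (x, y) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    count_x = Counter(x for x, _ in pairs)
    count_y = Counter(y for _, y in pairs)
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) / (p(x) p(y)) simplifies to c * n / (count_x * count_y).
        mi += (c / n) * math.log2(c * n / (count_x[x] * count_y[y]))
    return mi


# Hypothetical per-frame labels: (hand motion state, object motion state).
coupled = [("moving", "moving"), ("still", "still")] * 2
unrelated = [("moving", "moving"), ("moving", "still"),
             ("still", "moving"), ("still", "still")]

mi_coupled = mutual_information(coupled)      # object follows the hand
mi_unrelated = mutual_information(unrelated)  # object ignores the hand
```

A high value suggests the object is being manipulated by the hand, while a value near zero suggests the two move independently.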

An Anatomy-Aware Shared Control Approach for Assisted Teleoperation of Lung Ultrasound Examinations

Sep 25, 2024

Abstract:The introduction of artificial intelligence and robotics in telehealth is enabling personalised treatment and supporting teleoperated procedures such as lung ultrasound, which has gained attention during the COVID-19 pandemic. Although fully autonomous systems face challenges due to anatomical variability, teleoperated systems appear to be more practical in current healthcare settings. This paper presents an anatomy-aware control framework for teleoperated lung ultrasound. Using biomechanically accurate 3D models such as SMPL and SKEL, the system provides real-time visual feedback and applies virtual constraints to assist in precise probe placement tasks. Evaluations on five subjects show the accuracy of the biomechanical models and the efficiency of the system in improving probe placement and reducing procedure time compared to traditional teleoperation. The results demonstrate that the proposed framework enhances the physician's capabilities in executing remote lung ultrasound examinations, towards more objective and repeatable acquisitions.

A Passivity-Based Variable Impedance Controller for Incremental Learning of Periodic Interactive Tasks

Aug 20, 2024

Abstract:In intelligent manufacturing, robots are asked to dynamically adapt their behaviours without reducing productivity. Human teaching, where an operator physically interacts with the robot to demonstrate a new task, is a promising strategy to quickly and intuitively reconfigure the production line. However, physical guidance during task execution poses challenges in terms of both operator safety and system usability. In this paper, we solve this issue by designing a variable impedance control strategy that regulates the interaction with the environment and the physical demonstrations, explicitly preventing at the same time passivity violations. We derive constraints to limit not only the exchanged energy with the environment but also the exchanged power, resulting in smoother interactions. By monitoring the energy flow between the robot and the environment, we are able to distinguish between disturbances (to be rejected) and physical guidance (to be accomplished), enabling smooth and controlled transitions from teaching to execution and vice versa. The effectiveness of the proposed approach is validated in wiping tasks with a real robotic manipulator.

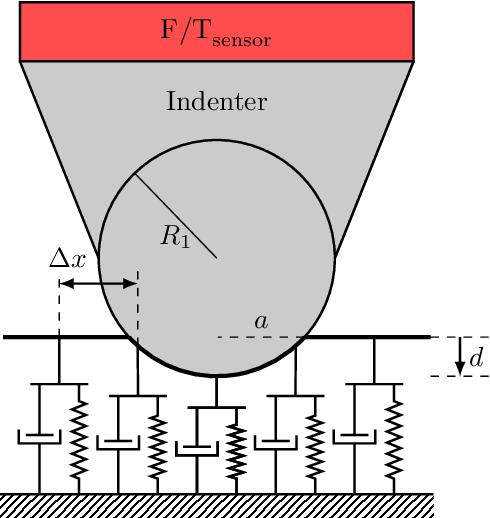

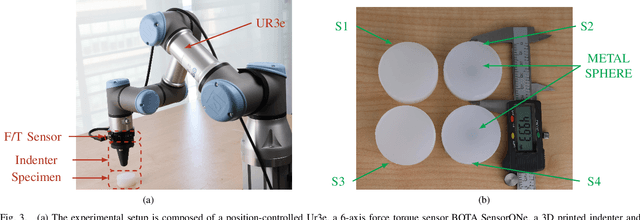

Towards Robotised Palpation for Cancer Detection through Online Tissue Viscoelastic Characterisation with a Collaborative Robotic Arm

Apr 15, 2024

Abstract:This paper introduces a new method for estimating the penetration of the end effector and the parameters of a soft body using a collaborative robotic arm. This is possible using the dimensionality reduction method that simplifies the Hunt-Crossley model. The parameters can be found without a force sensor thanks to the information of the robotic arm controller. To achieve an online estimation, an extended Kalman filter is employed, which embeds the contact dynamic model. The algorithm is tested with various types of silicone, including samples with hard intrusions to simulate cancerous cells within a soft tissue. The results indicate that this technique can accurately determine the parameters and estimate the penetration of the end effector into the soft body. These promising preliminary results demonstrate the potential for robots to serve as an effective tool for early-stage cancer diagnostics.
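For reference, the Hunt-Crossley contact model in its commonly used form relates the contact force $F$ to the penetration depth $x$ and its rate $\dot{x}$. The symbols $k$, $\lambda$ and $n$ below follow the usual textbook notation and are an assumption here; the abstract does not give the simplified, reduced-dimension form that the paper actually estimates.

$$
F(x, \dot{x}) =
\begin{cases}
k\,x^{n} + \lambda\,x^{n}\,\dot{x}, & x \ge 0,\\
0, & x < 0,
\end{cases}
$$

where $k$ is the elastic coefficient, $\lambda$ the damping coefficient, and $n$ an exponent that depends on the contact geometry and material. Because the damping term scales with $x^{n}$, the force is continuous at the moment of contact, unlike in a linear spring-damper model.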

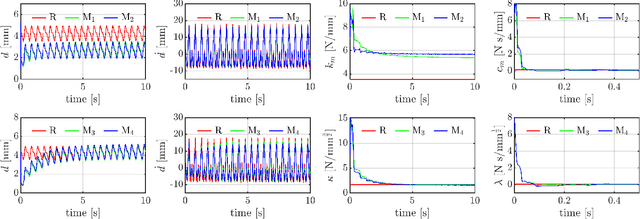

Elasticity Measurements of Expanded Foams using a Collaborative Robotic Arm

Dec 15, 2023

Abstract:Medical applications of robots are increasingly popular to objectivise and speed up the execution of several types of diagnostic and therapeutic interventions. Particularly important is a class of diagnostic activities that require physical contact between the robotic tool and the human body, such as palpation examinations and ultrasound scans. The practical application of these techniques can greatly benefit from an accurate estimation of the biomechanical properties of the patient's tissues. In this paper, we evaluate the accuracy and precision of a robotic device used for medical purposes in estimating the elastic parameters of different materials. The measurements are evaluated against a ground truth consisting of a set of expanded foam specimens with different elasticity that are characterised using a high-precision device. The experimental results in terms of precision are comparable with the ground truth and suggest future ambitious developments.

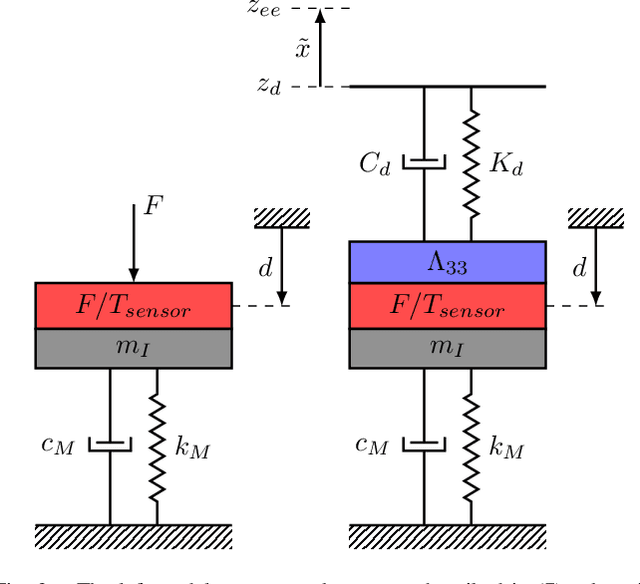

A Passive Variable Impedance Control Strategy with Viscoelastic Parameters Estimation of Soft Tissues for Safe Ultrasonography

Sep 26, 2023

Abstract:In the context of telehealth, robotic approaches have proven a valuable alternative to in-person visits in remote areas, with decreased costs for patients and infection risks. In particular, in ultrasonography, robots have the potential to reproduce the skills required to acquire high-quality images while reducing the sonographer's physical efforts. In this paper, we address the control of the interaction of the probe with the patient's body, a critical aspect of ensuring safe and effective ultrasonography. We introduce a novel approach based on variable impedance control, allowing real-time optimisation of a compliant controller's parameters during ultrasound procedures. This optimisation is formulated as a quadratic programming problem and incorporates physical constraints derived from viscoelastic parameter estimations. Safety and passivity constraints, including an energy tank, are also integrated to minimise potential risks during human-robot interaction. The proposed method's efficacy is demonstrated through experiments on a patient dummy torso, highlighting its potential for achieving safe behaviour and accurate force control during ultrasound procedures, even in cases of contact loss.

When Prolog meets generative models: a new approach for managing knowledge and planning in robotic applications

Sep 26, 2023

Abstract:In this paper, we propose a robot-oriented knowledge management system based on the use of the Prolog language. Our framework hinges on a special organisation of the knowledge base that enables: 1. its efficient population from natural language texts using semi-automated procedures based on Large Language Models, 2. the bumpless generation of temporal parallel plans for multi-robot systems through a sequence of transformations, 3. the automated translation of the plan into an executable formalism (behaviour trees). The framework is supported by a set of open-source tools and is demonstrated on a realistic application.

Automatic Interaction and Activity Recognition from Videos of Human Manual Demonstrations with Application to Anomaly Detection

Apr 19, 2023

Abstract:This paper presents a new method to describe spatio-temporal relations between objects and hands, to recognize both interactions and activities within video demonstrations of manual tasks. The approach exploits Scene Graphs to extract key interaction features from image sequences, encoding at the same time motion patterns and context. Additionally, the method introduces an event-based automatic video segmentation and clustering, which makes it possible to group similar events and to detect on the fly whether a monitored activity is executed correctly. The effectiveness of the approach was demonstrated in two multi-subject experiments, showing the ability to recognize and cluster hand-object and object-object interactions without prior knowledge of the activity, as well as matching the same activity performed by different subjects.
