Elena Merlo

Human-Robot Interfaces and Interaction Laboratory, Istituto Italiano di Tecnologia, Genoa, Italy, Dept. of Informatics, Bioengineering, Robotics, and Systems Engineering, University of Genoa, Genoa, Italy

Information-Theoretic Detection of Bimanual Interactions for Dual-Arm Robot Plan Generation

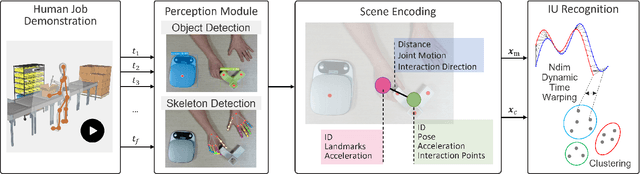

Jan 27, 2026

Abstract:Programming by demonstration is a strategy to simplify the robot programming process for non-experts via human demonstrations. However, its adoption for bimanual tasks is an underexplored problem due to the complexity of hand coordination, which also hinders data recording. This paper presents a novel one-shot method for processing a single RGB video of a bimanual task demonstration to generate an execution plan for a dual-arm robotic system. To detect hand coordination policies, we apply Shannon's information theory to analyze the information flow between scene elements and leverage scene graph properties. The generated plan is a modular behavior tree that assumes different structures based on the desired arm coordination. We validated the effectiveness of this framework through multi-subject video demonstrations, which we collected and made open-source, and by exploiting data from an external, publicly available dataset. Comparisons with existing methods revealed significant improvements in generating a centralized execution plan for coordinating two-arm systems.
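As a rough illustration of how a modular behavior tree can take different shapes depending on the desired arm coordination, here is a minimal sketch. It is not the paper's implementation: the node classes, the `build_plan` helper, the coordination labels `"independent"` and `"sequential"`, and the action names are all hypothetical, and the usual RUNNING status is omitted for brevity.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Action:
    """Leaf node: records its name in a shared log and reports a fixed outcome."""

    def __init__(self, name, log, ok=True):
        self.name, self.log, self.ok = name, log, ok

    def tick(self):
        self.log.append(self.name)
        return Status.SUCCESS if self.ok else Status.FAILURE


class Sequence:
    """Ticks children in order and stops at the first failure."""

    def __init__(self, children):
        self.children = children

    def tick(self):
        for child in self.children:
            if child.tick() is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Parallel:
    """Ticks every child and succeeds only if all of them succeed."""

    def __init__(self, children):
        self.children = children

    def tick(self):
        results = [child.tick() for child in self.children]
        ok = all(r is Status.SUCCESS for r in results)
        return Status.SUCCESS if ok else Status.FAILURE


def build_plan(coordination, left, right):
    """Pick the tree structure from a (hypothetical) coordination label."""
    if coordination == "independent":
        # Each arm runs its own branch; the branches are ticked together.
        return Parallel([Sequence(left), Sequence(right)])
    if coordination == "sequential":
        # One arm must finish before the other starts.
        return Sequence(left + right)
    raise ValueError(f"unknown coordination: {coordination}")


log = []
left = [Action("left_reach", log), Action("left_grasp", log)]
right = [Action("right_reach", log), Action("right_pour", log)]
plan = build_plan("independent", left, right)
result = plan.tick()
```

The same leaf actions are reused in both structures; only the composite node above them changes, which is the sense of "modular" assumed in this sketch.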

Intuitive Programming, Adaptive Task Planning, and Dynamic Role Allocation in Human-Robot Collaboration

Nov 11, 2025

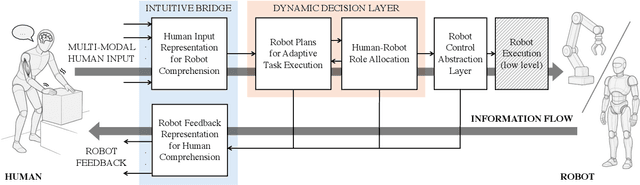

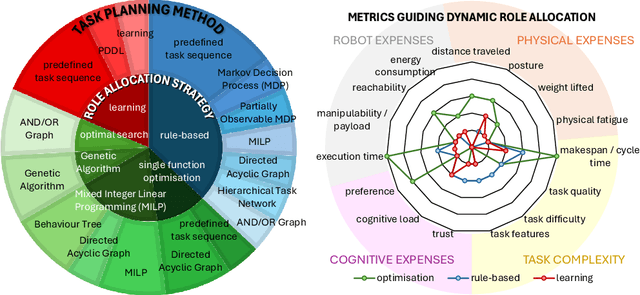

Abstract:Remarkable capabilities have been achieved by robotics and AI, mastering complex tasks and environments. Yet, humans often remain passive observers, fascinated but uncertain how to engage. Robots, in turn, cannot reach their full potential in human-populated environments without effectively modeling human states and intentions and adapting their behavior. To achieve a synergistic human-robot collaboration (HRC), a continuous information flow should be established: humans must intuitively communicate instructions, share expertise, and express needs. In parallel, robots must clearly convey their internal state and forthcoming actions to keep users informed, comfortable, and in control. This review identifies and connects key components enabling intuitive information exchange and skill transfer between humans and robots. We examine the full interaction pipeline: from the human-to-robot communication bridge translating multimodal inputs into robot-understandable representations, through adaptive planning and role allocation, to the control layer and feedback mechanisms to close the loop. Finally, we highlight trends and promising directions toward more adaptive, accessible HRC.

* Published in the Annual Review of Control, Robotics, and Autonomous Systems, Volume 9; copyright 2026 the author(s), CC BY 4.0, https://www.annualreviews.org

A Human-in-the-loop Approach to Robot Action Replanning through LLM Common-Sense Reasoning

Jul 28, 2025

Abstract:To facilitate the wider adoption of robotics, accessible programming tools are required for non-experts. Observational learning enables intuitive human skill transfer through hands-on demonstrations, but relying solely on visual input can be inefficient in terms of scalability and failure mitigation, especially when based on a single demonstration. This paper presents a human-in-the-loop method for enhancing the robot execution plan, automatically generated from a single RGB video, with natural language input to a Large Language Model (LLM). By including user-specified goals or critical task aspects and exploiting the LLM's common-sense reasoning, the system adjusts the vision-based plan to prevent potential failures and adapts it based on the received instructions. Experiments demonstrated the framework's intuitiveness and effectiveness in correcting vision-derived errors and adapting plans without requiring additional demonstrations. Moreover, interactive plan refinement and hallucination corrections promoted system robustness.

Exploiting Information Theory for Intuitive Robot Programming of Manual Activities

Oct 31, 2024

Abstract:Observational learning is a promising approach to enable people without expertise in programming to transfer skills to robots in a user-friendly manner, since it mirrors how humans learn new behaviors by observing others. Many existing methods focus on instructing robots to mimic human trajectories, but motion-level strategies often pose challenges in skill generalization across diverse environments. This paper proposes a novel framework that allows robots to achieve a higher-level understanding of human-demonstrated manual tasks recorded in RGB videos. By recognizing the task structure and goals, robots generalize what they observed to unseen scenarios. We ground our task representation in Shannon's Information Theory (IT), which is applied for the first time to manual tasks. IT helps extract the active scene elements and quantify the information shared between hands and objects. We exploit scene graph properties to encode the extracted interaction features in a compact structure and segment the demonstration into blocks, streamlining the generation of Behavior Trees for robot replicas. Experiments validated the effectiveness of IT to automatically generate robot execution plans from a single human demonstration. Additionally, we provide HANDSOME, an open-source dataset of HAND Skills demOnstrated by Multi-subjEcts, to promote further research and evaluation in this field.
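To make "information shared between hands and objects" concrete, the following sketch computes the Shannon mutual information between two discrete per-frame signals. It is an illustration under assumptions, not the paper's pipeline: the abstract does not say which features or estimator are used, so the binary moving/still encoding and the names `hand_states` and `cup_states` are hypothetical.

```python
import math
from collections import Counter


def entropy(samples):
    """Shannon entropy (in bits) of the empirical distribution of samples."""
    n = len(samples)
    return -sum((c / n) * math.log2(c / n) for c in Counter(samples).values())


def mutual_information(xs, ys):
    """I(X;Y) = H(X) + H(Y) - H(X,Y), estimated from paired samples."""
    if len(xs) != len(ys):
        raise ValueError("sequences must have the same length")
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


# Hypothetical per-frame states: 1 = moving, 0 = still.
hand_states = [0, 0, 1, 1, 1, 1, 0, 0]
cup_states = [0, 0, 1, 1, 1, 1, 0, 0]   # moves exactly when the hand moves
box_states = [0, 1, 0, 1, 0, 1, 0, 1]   # unrelated to the hand's motion

mi_hand_cup = mutual_information(hand_states, cup_states)
mi_hand_box = mutual_information(hand_states, box_states)
```

In this toy data the cup shares one full bit with the hand while the box shares none, which is the kind of contrast that could flag the cup as the element the hand is acting on.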

Automatic Interaction and Activity Recognition from Videos of Human Manual Demonstrations with Application to Anomaly Detection

Apr 19, 2023

Abstract:This paper presents a new method to describe spatio-temporal relations between objects and hands, to recognize both interactions and activities within video demonstrations of manual tasks. The approach exploits Scene Graphs to extract key interaction features from image sequences, encoding motion patterns and context at the same time. Additionally, the method introduces an event-based automatic video segmentation and clustering, which groups similar events and detects on the fly whether a monitored activity is executed correctly. The effectiveness of the approach was demonstrated in two multi-subject experiments, showing the ability to recognize and cluster hand-object and object-object interactions without prior knowledge of the activity, as well as to match the same activity performed by different subjects.

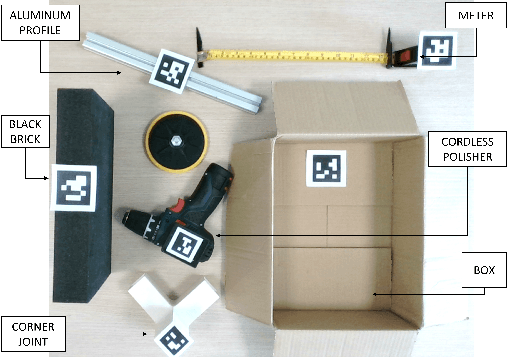

An Ergonomic Role Allocation Framework for Dynamic Human-Robot Collaborative Tasks

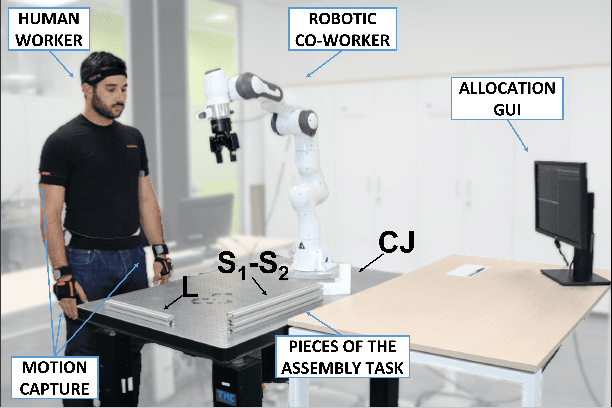

Jan 19, 2023

Abstract:By incorporating ergonomics principles into the task allocation processes, human-robot collaboration (HRC) frameworks can favour the prevention of work-related musculoskeletal disorders (WMSDs). In this context, existing offline methodologies do not account for the variability of human actions and states; therefore, planning and dynamically assigning roles in human-robot teams remains an unaddressed challenge. This study aims to create an ergonomic role allocation framework that optimises the HRC, taking into account task features and human state measurements. The presented framework consists of two main modules: the first provides the HRC task model, exploiting AND/OR Graphs (AOGs), which we adapted to solve the allocation problem; the second module describes the ergonomic risk assessment during task execution through a risk indicator and updates the AOG-related variables to influence future task allocation. The proposed framework can be combined with any time-varying ergonomic risk indicator that evaluates human cognitive and physical burden. In this work, we tested our framework in an assembly scenario, introducing a risk index named Kinematic Wear. The overall framework has been tested with a multi-subject experiment. The task allocation results and subjective evaluations, measured with questionnaires, show that high-risk actions are correctly recognised and not assigned to humans, reducing fatigue and frustration in collaborative tasks.

Dynamic Human-Robot Role Allocation based on Human Ergonomics Risk Prediction and Robot Actions Adaptation

Nov 05, 2021

Abstract:Although cobots have high potential to bring several benefits to manufacturing and logistics processes, their rapid (re-)deployment in changing environments is still limited. To enable fast adaptation to new product demands and to boost the fitness of the human workers to the allocated tasks, we propose a novel method that optimizes assembly strategies and distributes the effort among the workers in human-robot cooperative tasks. The cooperation model exploits AND/OR Graphs that we adapted to also solve the role allocation problem. The allocation algorithm considers quantitative measurements that are computed online to describe the human operator's ergonomic status and task properties. We conducted preliminary experiments to demonstrate that the proposed approach succeeds in controlling the task allocation process to ensure safe and ergonomic conditions for the human worker.
