Marta Lagomarsino

Human-Robot Interfaces and Interaction Laboratory, Istituto Italiano di Tecnologia, Genoa, Italy; Dept. of Electronics, Information and Bioengineering, Politecnico di Milano, Milan, Italy

Postural Virtual Fixtures for Ergonomic Physical Interactions with Supernumerary Robotic Bodies

Jan 30, 2026

Abstract: Conjoined collaborative robots, functioning as supernumerary robotic bodies (SRBs), can enhance human load tolerance abilities. However, in tasks involving physical interaction with humans, users may still adopt awkward, non-ergonomic postures, which can lead to discomfort or injury over time. In this paper, we propose a novel control framework that provides kinesthetic feedback to SRB users when a non-ergonomic posture is detected, offering resistance to discourage such behaviors. This approach aims to foster long-term learning of ergonomic habits and promote proper posture during physical interactions. To achieve this, a virtual fixture method is developed, integrated with a continuous, online ergonomic posture assessment framework. Additionally, to improve coordination between the operator and the SRB, which consists of a robotic arm mounted on a floating base, the position of the floating base is adjusted as needed. Experimental results demonstrate the functionality and efficacy of the ergonomics-driven control framework. They include two user studies involving practical loco-manipulation tasks with 14 subjects, which compare the proposed framework with a baseline control framework that does not account for human ergonomics.
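The resistance described in the abstract above can be illustrated with a minimal sketch. It assumes a scalar ergonomic risk score in [0, 1] and a spring-damper style resistance that activates above a threshold; the function name, the score scale, the threshold, and the gains are all hypothetical and are not taken from the paper.

```python
def fixture_force(score, velocity, threshold=0.6, stiffness=40.0, damping=5.0):
    """Resistive force (N) along one axis for a toy postural virtual fixture.

    score:    hypothetical ergonomic risk score in [0, 1] (higher is worse).
    velocity: motion along the axis, positive when moving toward a worse posture.
    All parameters are illustrative assumptions, not values from the paper.
    """
    excess = score - threshold
    if excess <= 0.0:
        # Posture is acceptable: the fixture stays transparent.
        return 0.0
    # Damp only motion that worsens the posture, never motion that recovers it.
    damping_term = damping * velocity if velocity > 0.0 else 0.0
    # Negative sign: the force opposes the direction of worsening posture.
    return -(stiffness * excess + damping_term)


if __name__ == "__main__":
    for s, v in [(0.5, 1.0), (0.8, 0.0), (0.8, 1.0), (0.8, -1.0)]:
        print(f"score={s:.1f} velocity={v:+.1f} -> force={fixture_force(s, v):.2f} N")
```

The one-sided damping term is a design choice in this sketch: it lets the user move back toward a comfortable posture without feeling additional drag.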

Information-Theoretic Detection of Bimanual Interactions for Dual-Arm Robot Plan Generation

Jan 27, 2026

Abstract: Programming by demonstration is a strategy to simplify the robot programming process for non-experts via human demonstrations. However, its adoption for bimanual tasks is an underexplored problem due to the complexity of hand coordination, which also hinders data recording. This paper presents a novel one-shot method for processing a single RGB video of a bimanual task demonstration to generate an execution plan for a dual-arm robotic system. To detect hand coordination policies, we apply Shannon's information theory to analyze the information flow between scene elements and leverage scene graph properties. The generated plan is a modular behavior tree that assumes different structures based on the desired arm coordination. We validated the effectiveness of this framework through video demonstrations by multiple subjects, which we collected and made open-source, and by exploiting data from an external, publicly available dataset. Comparisons with existing methods revealed significant improvements in generating a centralized execution plan for coordinating two-arm systems.
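To illustrate how a modular behavior tree can take different structures depending on arm coordination, here is a minimal sketch. The node classes, the action names, and the simplified `Parallel` semantics (children ticked one after another within the same tick, success only if all succeed) are assumptions made for illustration and do not reproduce the paper's plan generator.

```python
from dataclasses import dataclass, field
from typing import Callable, List

SUCCESS, FAILURE = "SUCCESS", "FAILURE"


@dataclass
class Action:
    """Leaf node wrapping a callable that returns True on success."""
    name: str
    run: Callable[[], bool]

    def tick(self, log):
        log.append(self.name)
        return SUCCESS if self.run() else FAILURE


@dataclass
class Sequence:
    """Runs children in order and stops at the first failure."""
    children: List = field(default_factory=list)

    def tick(self, log):
        for child in self.children:
            if child.tick(log) == FAILURE:
                return FAILURE
        return SUCCESS


@dataclass
class Parallel:
    """Ticks every child in the same tick; succeeds only if all succeed."""
    children: List = field(default_factory=list)

    def tick(self, log):
        results = [child.tick(log) for child in self.children]
        return SUCCESS if all(r == SUCCESS for r in results) else FAILURE


def always_ok():
    return True


# Hypothetical plan: both arms act together, then one arm acts alone.
plan = Sequence([
    Parallel([Action("left: hold bowl", always_ok),
              Action("right: grasp spoon", always_ok)]),
    Action("right: stir", always_ok),
])

if __name__ == "__main__":
    log = []
    print(plan.tick(log), log)
```

Swapping the `Parallel` node for a `Sequence` would turn the same actions into a plan in which the arms act one after the other, which is the kind of structural change the abstract refers to.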

Estimating Trust in Human-Robot Collaboration through Behavioral Indicators and Explainability

Jan 27, 2026

Abstract: Industry 5.0 focuses on human-centric collaboration between humans and robots, prioritizing safety, comfort, and trust. This study introduces a data-driven framework to assess trust using behavioral indicators. The framework employs a Preference-Based Optimization algorithm to generate trust-enhancing trajectories based on operator feedback. This feedback serves as ground truth for training machine learning models to predict trust levels from behavioral indicators. The framework was tested in a chemical industry scenario where a robot assisted a human operator in mixing chemicals. Machine learning models classified trust with over 80% accuracy, with the Voting Classifier achieving 84.07% accuracy and an AUC-ROC score of 0.90. These findings underscore the effectiveness of data-driven methods in assessing trust within human-robot collaboration, emphasizing the valuable role behavioral indicators play in predicting the dynamics of human trust.
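As a rough illustration of how a voting classifier combines several models, the sketch below implements hard (majority) voting in plain Python. The abstract does not state whether hard or soft voting was used, so hard voting is an assumption here, and the three "models" and all labels are toy data invented for the example, unrelated to the study's results.

```python
from collections import Counter


def hard_vote(predictions):
    """Majority label per sample, given one list of labels per model."""
    voted = []
    for sample_preds in zip(*predictions):
        # most_common(1) returns the label with the highest vote count.
        voted.append(Counter(sample_preds).most_common(1)[0][0])
    return voted


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Toy binary trust labels (1 = trust, 0 = no trust); purely illustrative.
model_a = [1, 0, 1, 1, 0]
model_b = [1, 1, 0, 1, 0]
model_c = [0, 0, 1, 1, 1]
y_true = [1, 0, 1, 1, 1]

if __name__ == "__main__":
    y_vote = hard_vote([model_a, model_b, model_c])
    print(y_vote, accuracy(y_true, y_vote))
```

With an odd number of binary classifiers, as here, ties cannot occur; other setups would need an explicit tie-breaking rule.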

Intuitive Programming, Adaptive Task Planning, and Dynamic Role Allocation in Human-Robot Collaboration

Nov 11, 2025

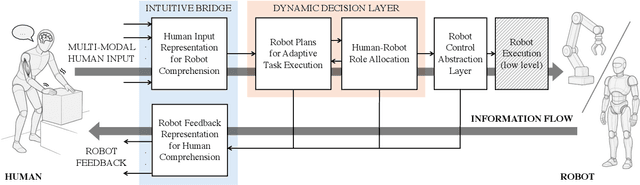

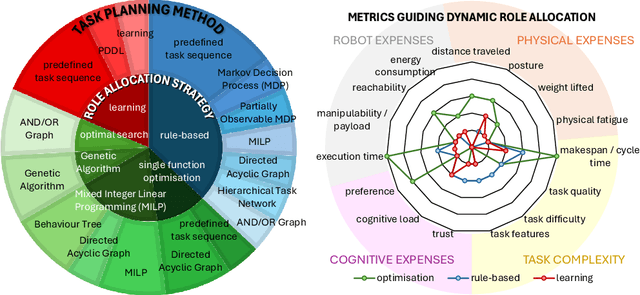

Abstract: Remarkable capabilities have been achieved by robotics and AI, mastering complex tasks and environments. Yet, humans often remain passive observers, fascinated but uncertain how to engage. Robots, in turn, cannot reach their full potential in human-populated environments without effectively modeling human states and intentions and adapting their behavior. To achieve a synergistic human-robot collaboration (HRC), a continuous information flow should be established: humans must intuitively communicate instructions, share expertise, and express needs. In parallel, robots must clearly convey their internal state and forthcoming actions to keep users informed, comfortable, and in control. This review identifies and connects key components enabling intuitive information exchange and skill transfer between humans and robots. We examine the full interaction pipeline: from the human-to-robot communication bridge translating multimodal inputs into robot-understandable representations, through adaptive planning and role allocation, to the control layer and feedback mechanisms to close the loop. Finally, we highlight trends and promising directions toward more adaptive, accessible HRC.

* Published in the Annual Review of Control, Robotics, and Autonomous Systems, Volume 9; copyright 2026 the author(s), CC BY 4.0, https://www.annualreviews.org

A Human-in-the-loop Approach to Robot Action Replanning through LLM Common-Sense Reasoning

Jul 28, 2025

Abstract: To facilitate the wider adoption of robotics, accessible programming tools are required for non-experts. Observational learning enables intuitive human skill transfer through hands-on demonstrations, but relying solely on visual input can be inefficient in terms of scalability and failure mitigation, especially when based on a single demonstration. This paper presents a human-in-the-loop method for enhancing the robot execution plan, automatically generated based on a single RGB video, with natural language input to a Large Language Model (LLM). By including user-specified goals or critical task aspects and exploiting the LLM's common-sense reasoning, the system adjusts the vision-based plan to prevent potential failures and adapts it based on the received instructions. Experiments demonstrated the framework's intuitiveness and effectiveness in correcting vision-derived errors and adapting plans without requiring additional demonstrations. Moreover, interactive plan refinement and hallucination corrections promoted system robustness.

Graph-based Online Monitoring of Train Driver States via Facial and Skeletal Features

May 09, 2025

Abstract: Driver fatigue poses a significant challenge to railway safety, with traditional systems like the dead-man switch offering limited and basic alertness checks. This study presents an online behavior-based monitoring system utilizing a customised Directed-Graph Neural Network (DGNN) to classify train drivers' states into three categories: alert, not alert, and pathological. To optimize input representations for the model, an ablation study was performed, comparing three feature configurations: skeletal-only, facial-only, and a combination of both. Experimental results show that combining facial and skeletal features yields the highest accuracy (80.88%) in the three-class model, outperforming models using only facial or skeletal features. Furthermore, this combination achieves over 99% accuracy in the binary alertness classification. Additionally, we introduced a novel dataset that, for the first time, incorporates simulated pathological conditions into train driver monitoring, broadening the scope for assessing risks related to fatigue and health. This work represents a step forward in enhancing railway safety through advanced online monitoring using vision-based technologies.

Mitigating Compensatory Movements in Prosthesis Users via Adaptive Collaborative Robotics

May 03, 2025

Abstract: Prosthesis users can regain partial limb functionality; however, full natural limb mobility is rarely restored, often resulting in compensatory movements that lead to discomfort, inefficiency, and long-term physical strain. To address this issue, we propose a novel human-robot collaboration framework to mitigate compensatory mechanisms in upper-limb prosthesis users by exploiting their residual motion capabilities while respecting task requirements. Our approach introduces a personalised mobility model that quantifies joint-specific functional limitations and the cost of compensatory movements. This model is integrated into a constrained optimisation framework that computes optimal user postures for task performance, balancing functionality and comfort. The solution guides a collaborative robot to reconfigure the task environment, promoting effective interaction. We validated the framework using a new body-powered prosthetic device for single-finger amputation, which enhances grasping capabilities through synergistic closure with the hand but imposes wrist constraints. Initial experiments with healthy subjects wearing the prosthesis as a supernumerary finger demonstrated that a robotic assistant embedding the user-specific mobility model outperformed human partners in handover tasks, both improving the efficiency of the prosthesis user's grasp and reducing compensatory movements in functioning joints. These results highlight the potential of collaborative robots as effective workplace and caregiving assistants, promoting inclusion and better integration of prosthetic devices into daily tasks.

WiFi based Human Fall and Activity Recognition using Transformer based Encoder Decoder and Graph Neural Networks

Apr 23, 2025

Abstract: Human pose estimation and action recognition have received attention due to their critical roles in healthcare monitoring, rehabilitation, and assistive technologies. In this study, we propose a novel architecture named Transformer based Encoder Decoder Network (TED Net) designed for estimating human skeleton poses from WiFi Channel State Information (CSI). TED Net integrates convolutional encoders with transformer-based attention mechanisms to capture spatiotemporal features from CSI signals. The estimated skeleton poses were used as input to a customized Directed Graph Neural Network (DGNN) for action recognition. We validated our model on two datasets: a publicly available multi-modal dataset for assessing general pose estimation, and a newly collected dataset focused on fall-related scenarios involving 20 participants. Experimental results demonstrated that TED Net outperformed existing approaches in pose estimation, and that the DGNN achieves reliable action classification using CSI-based skeletons, with performance comparable to RGB-based systems. Notably, TED Net maintains robust performance across both fall and non-fall cases. These findings highlight the potential of CSI-driven human skeleton estimation for effective action recognition, particularly for home applications such as elderly fall detection. In such settings, WiFi signals are often readily available, offering a privacy-preserving alternative to vision-based methods, which may raise concerns about continuous camera monitoring.

Exploiting Information Theory for Intuitive Robot Programming of Manual Activities

Oct 31, 2024

Abstract: Observational learning is a promising approach to enable people without expertise in programming to transfer skills to robots in a user-friendly manner, since it mirrors how humans learn new behaviors by observing others. Many existing methods focus on instructing robots to mimic human trajectories, but motion-level strategies often pose challenges in skill generalization across diverse environments. This paper proposes a novel framework that allows robots to achieve a higher-level understanding of human-demonstrated manual tasks recorded in RGB videos. By recognizing the task structure and goals, robots generalize what they observe to unseen scenarios. We ground our task representation in Shannon's Information Theory (IT), which is applied for the first time to manual tasks. IT helps extract the active scene elements and quantify the information shared between hands and objects. We exploit scene graph properties to encode the extracted interaction features in a compact structure and segment the demonstration into blocks, streamlining the generation of Behavior Trees for robot replicas. Experiments validated the effectiveness of IT to automatically generate robot execution plans from a single human demonstration. Additionally, we provide HANDSOME, an open-source dataset of HAND Skills demOnstrated by Multi-subjEcts, to promote further research and evaluation in this field.
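One standard way to quantify the information shared between two signals is Shannon mutual information. The sketch below computes it for two discrete sequences, for example per-frame motion states of a hand and an object. The discretisation into "idle"/"move" labels and the choice of mutual information as the measure are assumptions for illustration; the abstract does not specify which information-theoretic quantities the framework uses.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information I(X; Y) in bits between two equal-length sequences."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(xs)
    count_x = Counter(xs)
    count_y = Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in count_xy.items():
        p_joint = c / n
        p_indep = (count_x[x] / n) * (count_y[y] / n)
        # Each observed pair contributes p(x, y) * log2(p(x, y) / (p(x) p(y))).
        mi += p_joint * math.log2(p_joint / p_indep)
    return mi


if __name__ == "__main__":
    # Hypothetical per-frame states: the object moves exactly when the hand moves.
    hand = ["idle", "move", "move", "idle"]
    carried_object = ["idle", "move", "move", "idle"]
    # A second object whose state is unrelated to the hand in this toy sample.
    other_object = ["idle", "move", "idle", "move"]
    print(mutual_information(hand, carried_object))
    print(mutual_information(hand, other_object))
```

In this toy sample the carried object shares one full bit with the hand while the unrelated object shares none, which is the kind of contrast that could flag an object as actively involved in the interaction.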

PRO-MIND: Proximity and Reactivity Optimisation of robot Motion to tune safety limits, human stress, and productivity in INDustrial settings

Sep 10, 2024

Abstract: Despite impressive advancements in industrial collaborative robots, their potential remains largely untapped due to the difficulty in balancing human safety and comfort with fast production constraints. To help address this challenge, we present PRO-MIND, a novel human-in-the-loop framework that leverages valuable data about the human co-worker to optimise robot trajectories. By estimating human attention and mental effort, our method dynamically adjusts safety zones and enables on-the-fly alterations of the robot path to enhance human comfort and optimal stopping conditions. Moreover, we formulate a multi-objective optimisation to adapt the robot's trajectory execution time and smoothness based on the current human psycho-physical stress, estimated from heart rate variability and frantic movements. These adaptations exploit the properties of B-spline curves to preserve continuity and smoothness, which are crucial factors in improving motion predictability and comfort. Evaluation in two realistic case studies showcases the framework's ability to restrain the operators' workload and stress and to ensure their safety while enhancing human-robot productivity. Further strengths of PRO-MIND include its adaptability to each individual's specific needs and sensitivity to variations in attention, mental effort, and stress during task execution.
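The continuity property of B-splines mentioned above can be checked with a small sketch. It evaluates a uniform cubic B-spline segment from four consecutive control points using the standard basis functions, and shows that adjacent segments meet at the same point. The use of a uniform cubic spline and the 2D control points are assumptions for illustration; the abstract does not state the spline degree or knot vector used in PRO-MIND.

```python
def cubic_bspline_point(ctrl, seg, u):
    """Point on segment `seg` of a uniform cubic B-spline at local u in [0, 1].

    ctrl: list of control points (tuples of equal dimension).
    seg:  segment index; the segment uses ctrl[seg] .. ctrl[seg + 3].
    """
    p0, p1, p2, p3 = ctrl[seg:seg + 4]
    # Standard uniform cubic B-spline basis functions; they sum to 1 for any u.
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u ** 3 - 6 * u ** 2 + 4) / 6
    b2 = (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6
    b3 = u ** 3 / 6
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


# Hypothetical 2D path control points (five points give two segments).
control_points = [(0.0, 0.0), (1.0, 2.0), (3.0, 3.0), (4.0, 1.0), (6.0, 0.0)]

if __name__ == "__main__":
    end_of_first = cubic_bspline_point(control_points, 0, 1.0)
    start_of_second = cubic_bspline_point(control_points, 1, 0.0)
    print(end_of_first, start_of_second)
```

Because each segment depends on only four control points, moving one control point changes the curve only locally, which is one reason B-splines suit on-the-fly path alterations.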
