Luna Gava

Event-Driven Perception for Robotics Lab, Istituto Italiano di Tecnologia, Italy

Training slow silicon neurons to control extremely fast robots with spiking reinforcement learning

Jan 29, 2026

Abstract: Air hockey demands split-second decisions at high puck velocities, a challenge we address with a compact network of spiking neurons running on a mixed-signal analog/digital neuromorphic processor. By co-designing hardware and learning algorithms, we train the system to achieve successful puck interactions through reinforcement learning in a remarkably small number of trials. The network leverages fixed random connectivity to capture the task's temporal structure and adopts a local e-prop learning rule in the readout layer to exploit event-driven activity for fast and efficient learning. The result is real-time learning with a setup comprising a computer and the neuromorphic chip in-the-loop, enabling practical training of spiking neural networks for robotic autonomous systems. This work bridges neuroscience-inspired hardware with real-world robotic control, showing that brain-inspired approaches can tackle fast-paced interaction tasks while supporting always-on learning in intelligent machines.
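
To make the idea of "fixed random connectivity plus a local rule in the readout" concrete, here is a minimal software sketch. It is not the paper's setup: it uses a supervised regression target instead of reinforcement learning, runs in plain Python rather than on a neuromorphic chip, and every name and constant (`train_readout`, `w_teacher`, the drive range, the learning rate) is a hypothetical choice for illustration. The readout update is the commonly described e-prop form for output weights: the output error multiplied by a low-pass filtered spike trace.

```python
import random

def train_readout(n_neurons=20, n_steps=500, alpha=0.9, kappa=0.9, lr=5e-4, seed=0):
    """Train only the readout of a fixed random spiking network.

    Returns (initial, final) squared distance between the learned readout
    weights and the hypothetical teacher weights that define the target.
    """
    rng = random.Random(seed)
    # Fixed random connectivity: these weights are never updated.
    w_rec = [[rng.uniform(-0.01, 0.01) for _ in range(n_neurons)]
             for _ in range(n_neurons)]
    drive = [rng.uniform(0.4, 0.8) for _ in range(n_neurons)]  # constant input current
    w_teacher = [rng.uniform(-1.0, 1.0) for _ in range(n_neurons)]  # hypothetical target
    w_out = [0.0] * n_neurons  # the only plastic weights

    v = [0.0] * n_neurons      # membrane potentials
    z = [0] * n_neurons        # spikes emitted at the previous step
    trace = [0.0] * n_neurons  # low-pass filtered spikes

    def dist(w):
        return sum((a - b) ** 2 for a, b in zip(w, w_teacher))

    initial = dist(w_out)
    for _ in range(n_steps):
        # Leaky integrate-and-fire update with reset to zero after a spike.
        new_z = []
        for i in range(n_neurons):
            rec = sum(w_rec[i][j] * z[j] for j in range(n_neurons))
            v[i] = alpha * v[i] + drive[i] + rec
            spike = 1 if v[i] >= 1.0 else 0
            if spike:
                v[i] = 0.0
            new_z.append(spike)
        z = new_z
        # Filtered spike trace; a leaky readout with fixed weights equals
        # the weighted sum of these traces.
        trace = [kappa * t + s for t, s in zip(trace, z)]
        y = sum(w * t for w, t in zip(w_out, trace))
        y_target = sum(w * t for w, t in zip(w_teacher, trace))
        err = y - y_target
        # Local update: each weight needs only the error and its own trace.
        w_out = [w - lr * err * t for w, t in zip(w_out, trace)]
    return initial, dist(w_out)
```

Because each update uses only the output error and the presynaptic trace, no gradient has to be propagated back through the recurrent weights, which is what makes a rule of this kind attractive for on-chip, event-driven learning.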

IMA-Catcher: An IMpact-Aware Nonprehensile Catching Framework based on Combined Optimization and Learning

Jun 25, 2025

Abstract: Robotic catching of flying objects typically generates high impact forces that might lead to task failure and potential hardware damage. This is accentuated when the ratio of object mass to robot payload increases, given the strong inertial components characterizing this task. This paper aims to address this problem by proposing an implicitly impact-aware framework that accomplishes the catching task in both pre- and post-catching phases. In the first phase, a motion planner generates optimal trajectories that minimize catching forces, while in the second, the object's energy is dissipated smoothly, minimizing bouncing. In particular, in the pre-catching phase, a real-time optimal planner is responsible for generating trajectories of the end-effector that minimize the velocity difference between the robot and the object to reduce impact forces during catching. In the post-catching phase, the robot's position, velocity, and stiffness trajectories are generated based on human demonstrations when catching a series of free-falling objects with unknown masses. A hierarchical quadratic programming-based controller is used to enforce the robot's constraints (i.e., joint and torque limits) and create a stack of tasks that minimizes the reflected mass at the end-effector as a secondary objective. The initial experiments isolate the problem along one dimension to accurately study the effects of each contribution on the metrics proposed. We show how the same task, without velocity matching, would be infeasible due to excessive joint torques resulting from the impact. The addition of reflected mass minimization is then investigated, and the catching height is increased to evaluate the method's robustness. Finally, the setup is extended to catching along multiple Cartesian axes, to demonstrate its generalization in space.

* 25 pages, 17 figures, accepted by International Journal of Robotics Research (IJRR)
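
As a rough illustration of why both velocity matching and reflected-mass minimization reduce the impact, consider a textbook one-dimensional, perfectly inelastic collision between two point masses. This simplified model is an assumption made here for illustration, not the formulation used in the paper, and the function name `impact_impulse` is hypothetical.

```python
def impact_impulse(object_mass, reflected_mass, object_velocity, effector_velocity):
    """Impulse magnitude (N*s) of a perfectly inelastic 1-D impact.

    Both bodies are treated as point masses; reflected_mass stands in for
    the robot's effective mass at the end-effector along the impact axis.
    """
    if object_mass <= 0 or reflected_mass <= 0:
        raise ValueError("masses must be positive")
    # Reduced mass of the two-body system.
    reduced_mass = object_mass * reflected_mass / (object_mass + reflected_mass)
    # The impulse scales with the velocity mismatch at contact.
    return reduced_mass * abs(object_velocity - effector_velocity)
```

In this model the impulse is the product of the reduced mass and the velocity difference at contact, so it shrinks either when the end-effector moves with the object (smaller velocity difference) or when the reflected mass is lowered (smaller reduced mass).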

Fast Trajectory End-Point Prediction with Event Cameras for Reactive Robot Control

Feb 27, 2023

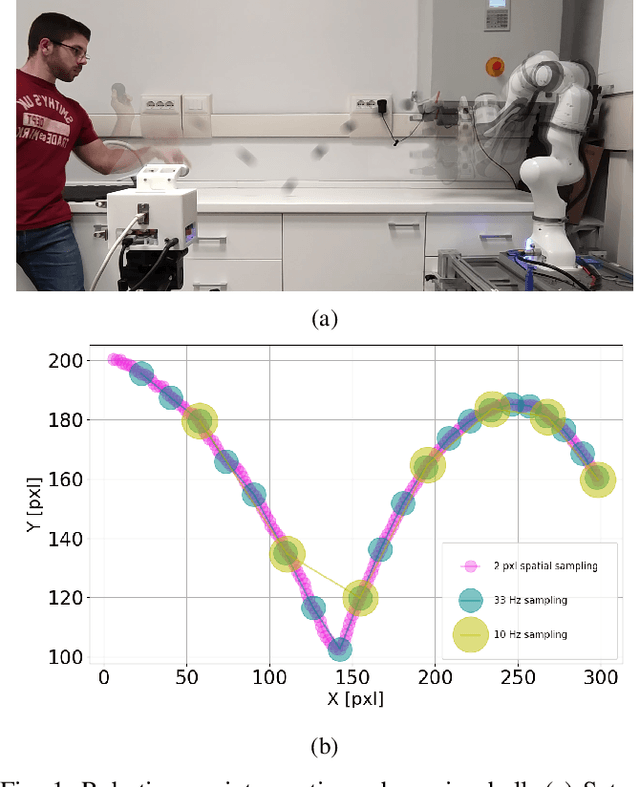

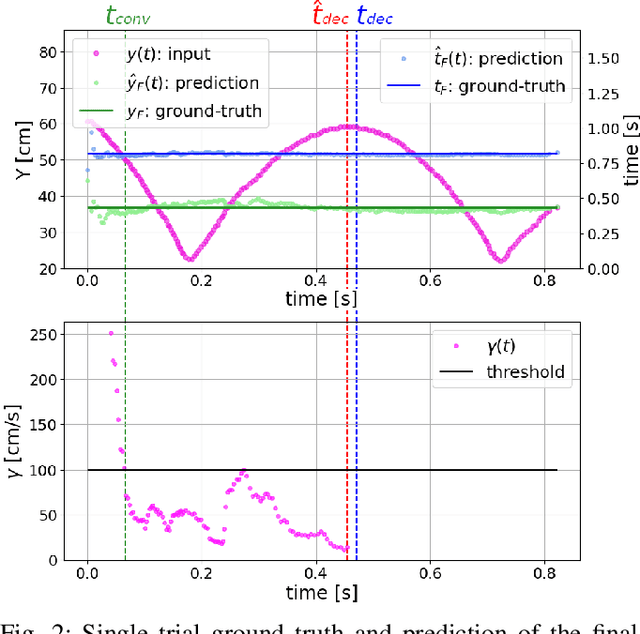

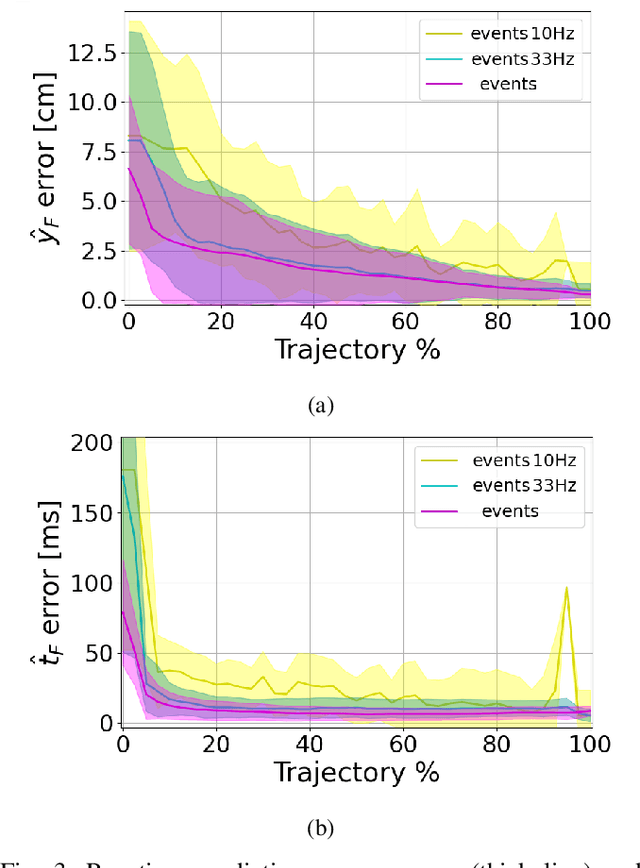

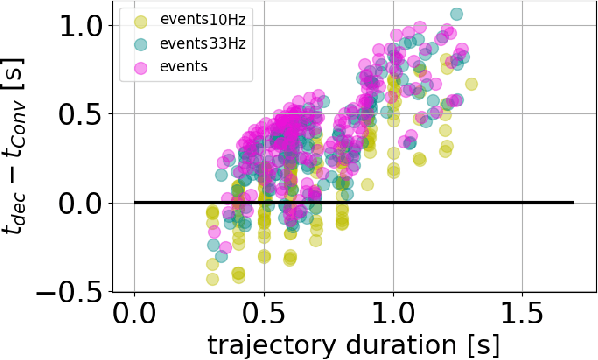

Abstract: Prediction skills can be crucial for the success of tasks where robots have limited time to act or limited joint actuation power. In such a scenario, a vision system with a fixed, possibly too low, sampling rate could lead to the loss of informative points, slowing down prediction convergence and reducing accuracy. In this paper, we propose to exploit the low latency, motion-driven sampling, and data compression properties of event cameras to overcome these issues. As a use-case, we use a Panda robotic arm to intercept a ball bouncing on a table. To predict the interception point, we adopt a Stateful LSTM network, a specific LSTM variant without fixed input length, which suits the event-driven paradigm and the problem at hand, where the length of the trajectory is not defined. We train the network in simulation to speed up the dataset acquisition and then fine-tune the models on real trajectories. Experimental results demonstrate how using a dense spatial sampling (i.e., event cameras) significantly increases the number of intercepted trajectories as compared to a fixed temporal sampling (i.e., frame-based cameras).
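
The property that makes a stateful recurrent network fit a stream of unknown length can be sketched with a single-unit LSTM in plain Python. The weights below are arbitrary placeholders and the class name `TinyLSTMCell` is hypothetical; this is not the trained model from the paper, only an illustration that carrying the hidden and cell state across chunks gives the same outputs as processing the whole trajectory at once.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class TinyLSTMCell:
    """Single-unit LSTM with scalar input; weights are arbitrary placeholders."""

    # (input weight, recurrent weight, bias) for each gate
    PARAMS = {"i": (0.5, 0.1, 0.0), "f": (0.3, -0.2, 1.0),
              "o": (0.4, 0.2, 0.0), "g": (0.8, 0.3, 0.0)}

    def __init__(self):
        self.reset()

    def reset(self):
        """Clear the state, as one would at the start of a new trajectory."""
        self.h = 0.0
        self.c = 0.0

    def _gate(self, name, x, squash):
        w_x, w_h, b = self.PARAMS[name]
        return squash(w_x * x + w_h * self.h + b)

    def step(self, x):
        # All gates read the previous hidden state before it is overwritten.
        i = self._gate("i", x, sigmoid)
        f = self._gate("f", x, sigmoid)
        o = self._gate("o", x, sigmoid)
        g = self._gate("g", x, math.tanh)
        self.c = f * self.c + i * g
        self.h = o * math.tanh(self.c)
        return self.h

def run(cell, samples):
    """Feed samples one by one, keeping the cell state between calls."""
    return [cell.step(x) for x in samples]

trajectory = [0.0, 0.2, 0.5, 0.9, 1.4, 2.0, 2.7]

whole = run(TinyLSTMCell(), trajectory)

streaming_cell = TinyLSTMCell()
streamed = run(streaming_cell, trajectory[:3]) + run(streaming_cell, trajectory[3:])
```

Here `streamed` matches `whole` because the state persists between the two calls to `run`; calling `reset()` between chunks would discard the history and change the later outputs.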

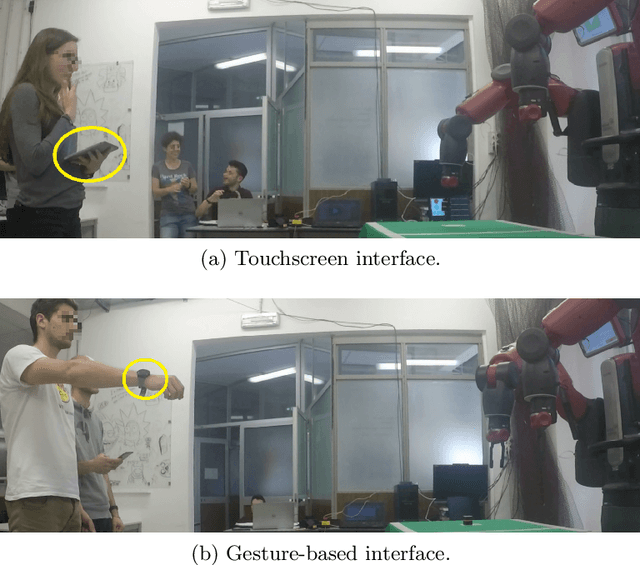

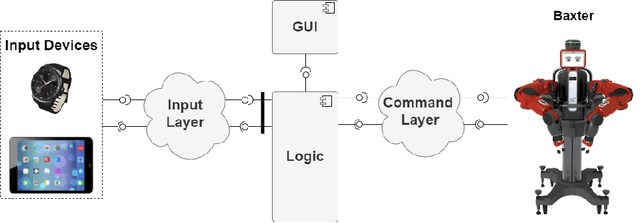

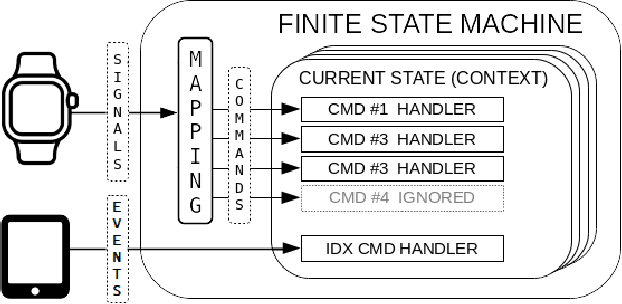

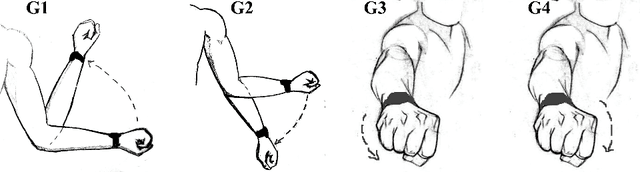

Gestural and Touchscreen Interaction for Human-Robot Collaboration: a Comparative Study

Jul 08, 2022

Abstract: Close human-robot interaction (HRI), especially in industrial scenarios, has been widely investigated for the advantages of combining human and robot skills. For an effective HRI, the validity of currently available human-machine communication media or tools should be questioned, and new communication modalities should be explored. This article proposes a modular architecture allowing human operators to interact with robots through different modalities. In particular, we implemented the architecture to handle gestural and touchscreen input, using a smartwatch and a tablet, respectively. Finally, we performed a comparative user experience study between these two modalities.

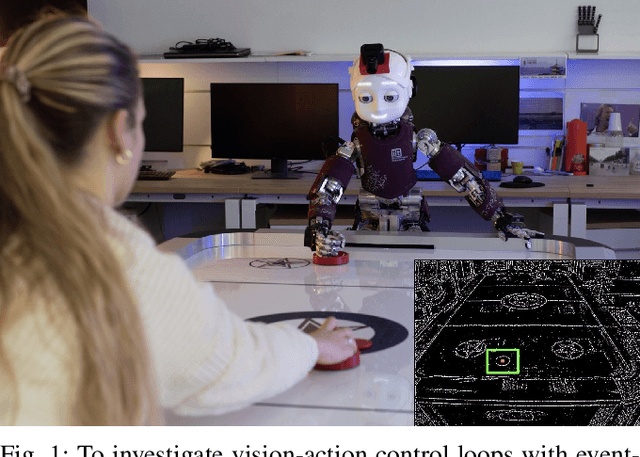

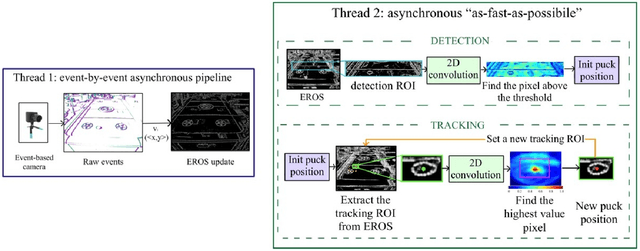

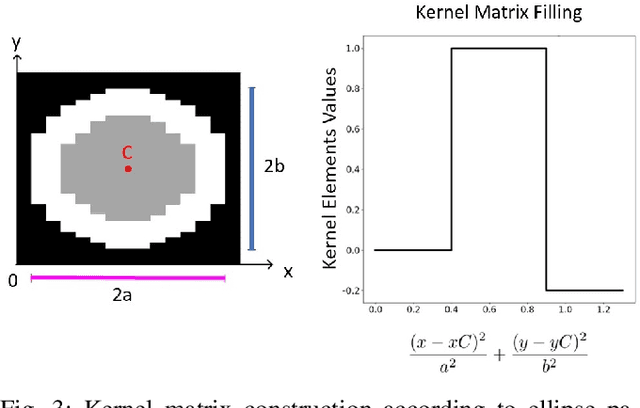

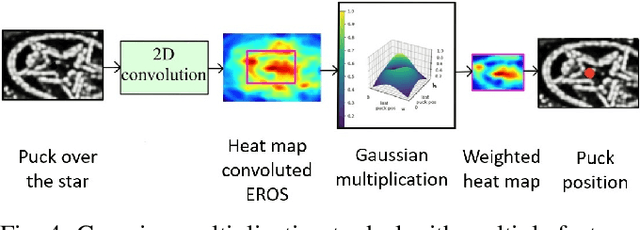

PUCK: Parallel Surface and Convolution-kernel Tracking for Event-Based Cameras

May 16, 2022

Abstract: Low latency and accuracy are fundamental requirements when vision is integrated into robots for high-speed interaction with targets, since they affect system reliability and stability. In such a scenario, the choice of the sensor and algorithms is important for the entire control loop. The technology of event cameras can guarantee fast visual sensing in dynamic environments, but requires a tracking algorithm that can keep up with the high data rate induced by the robot ego-motion while maintaining accuracy and robustness to distractors. In this paper, we introduce a novel tracking method that leverages the Exponential Reduced Ordinal Surface (EROS) data representation to decouple event-by-event processing and tracking computation. The latter is performed using convolution kernels to detect and follow a circular target moving on a plane. To benchmark state-of-the-art event-based tracking, we propose the task of tracking the air hockey puck sliding on a surface, with the future aim of controlling the iCub robot to reach the target precisely and on time. Experimental results demonstrate that our algorithm achieves the best compromise between low latency and tracking accuracy both when the robot is still and when it is moving.
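
The decoupling described in the abstract can be sketched as two independent routines: a cheap per-event surface update and a separate detection pass that can run at its own rate. The code below is a simplified illustration under stated assumptions, not the PUCK implementation: the decay factor, the neighbourhood size `k`, the one-pixel ring kernel, and the brute-force search over the whole image are all hypothetical choices.

```python
import math

def make_surface(width, height):
    """Create an empty event surface as a list of rows."""
    return [[0.0] * width for _ in range(height)]

def eros_update(surface, x, y, k=5, decay=0.8):
    """Event-by-event update: fade the k x k neighbourhood, then mark the event."""
    half = k // 2
    for yy in range(max(0, y - half), min(len(surface), y + half + 1)):
        row = surface[yy]
        for xx in range(max(0, x - half), min(len(row), x + half + 1)):
            row[xx] *= decay
    surface[y][x] = 255.0

def ring_kernel(radius):
    """Offsets (dx, dy) lying approximately on a circle of the given radius."""
    offsets = []
    for dy in range(-radius - 1, radius + 2):
        for dx in range(-radius - 1, radius + 2):
            if abs(math.hypot(dx, dy) - radius) < 0.5:
                offsets.append((dx, dy))
    return offsets

def locate_circle(surface, radius):
    """Return the (x, y) centre where the ring kernel response is largest."""
    kernel = ring_kernel(radius)
    height, width = len(surface), len(surface[0])
    best, best_pos = -1.0, None
    for cy in range(radius + 1, height - radius - 1):
        for cx in range(radius + 1, width - radius - 1):
            score = sum(surface[cy + dy][cx + dx] for dx, dy in kernel)
            if score > best:
                best, best_pos = score, (cx, cy)
    return best_pos
```

In use, `eros_update` would be called once per incoming event, while `locate_circle` would be called by a separate tracking loop on the latest surface. A practical tracker would restrict the search to a region around the previous estimate rather than scanning the full image.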
