Francesco Tassi

Human-Robot Interfaces and Interaction Lab., Istituto Italiano di Tecnologia, Italy

IMA-Catcher: An IMpact-Aware Nonprehensile Catching Framework based on Combined Optimization and Learning

Jun 25, 2025

Abstract:Robotic catching of flying objects typically generates high impact forces that might lead to task failure and hardware damage. This is accentuated when the ratio of object mass to robot payload increases, given the strong inertial components characterizing this task. This paper aims to address this problem by proposing an implicitly impact-aware framework that accomplishes the catching task in both pre- and post-catching phases. In the first phase, a motion planner generates optimal trajectories that minimize catching forces, while in the second, the object's energy is dissipated smoothly, minimizing bouncing. In particular, in the pre-catching phase, a real-time optimal planner generates end-effector trajectories that minimize the velocity difference between the robot and the object to reduce impact forces during catching. In the post-catching phase, the robot's position, velocity, and stiffness trajectories are generated based on human demonstrations of catching a series of free-falling objects with unknown masses. A hierarchical quadratic programming-based controller is used to enforce the robot's constraints (i.e., joint and torque limits) and create a stack of tasks that minimizes the reflected mass at the end-effector as a secondary objective. The initial experiments isolate the problem along one dimension to accurately study the effects of each contribution on the proposed metrics. We show how the same task, without velocity matching, would be infeasible due to excessive joint torques resulting from the impact. The addition of reflected mass minimization is then investigated, and the catching height is increased to evaluate the method's robustness. Finally, the setup is extended to catching along multiple Cartesian axes, to demonstrate its generalization in space.

* 25 pages, 17 figures, accepted by International Journal of Robotics Research (IJRR)
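
The reflected mass mentioned in the abstract above can be illustrated with the standard operational-space relation, where the effective mass along a unit direction u is 1 / (u^T J M^{-1} J^T u). The following is only a minimal sketch under that assumption, not the paper's implementation; the function name `reflected_mass` and the two-joint Jacobian and inertia values are hypothetical and chosen purely for illustration.

```python
import numpy as np

def reflected_mass(J, M, u):
    """Effective mass felt along direction u at the end-effector.

    J: (3, n) translational Jacobian, M: (n, n) joint-space inertia matrix.
    Assumes u is not in the null space of J M^{-1} J^T.
    """
    u = np.asarray(u, dtype=float)
    u = u / np.linalg.norm(u)  # normalize the direction
    # Inverse operational-space inertia: Lambda^{-1} = J M^{-1} J^T
    lambda_inv = J @ np.linalg.solve(M, J.T)
    return 1.0 / float(u @ lambda_inv @ u)

# Hypothetical 2-joint example with made-up numbers (not from the paper)
J = np.array([[1.0, 0.0],
              [0.0, 0.5],
              [0.0, 0.0]])
M = np.diag([2.0, 1.0])

m_x = reflected_mass(J, M, [1.0, 0.0, 0.0])
m_y = reflected_mass(J, M, [0.0, 1.0, 0.0])
print(m_x, m_y)
```

In this toy configuration the arm presents a smaller effective mass along x than along y, which is the kind of quantity a secondary task could try to reduce along the expected impact direction.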

Mitigating Compensatory Movements in Prosthesis Users via Adaptive Collaborative Robotics

May 03, 2025

Abstract:Prosthesis users can regain partial limb functionality; however, full natural limb mobility is rarely restored, often resulting in compensatory movements that lead to discomfort, inefficiency, and long-term physical strain. To address this issue, we propose a novel human-robot collaboration framework to mitigate compensatory mechanisms in upper-limb prosthesis users by exploiting their residual motion capabilities while respecting task requirements. Our approach introduces a personalised mobility model that quantifies joint-specific functional limitations and the cost of compensatory movements. This model is integrated into a constrained optimisation framework that computes optimal user postures for task performance, balancing functionality and comfort. The solution guides a collaborative robot to reconfigure the task environment, promoting effective interaction. We validated the framework using a new body-powered prosthetic device for single-finger amputation, which enhances grasping capabilities through synergistic closure with the hand but imposes wrist constraints. Initial experiments with healthy subjects wearing the prosthesis as a supernumerary finger demonstrated that a robotic assistant embedding the user-specific mobility model outperformed human partners in handover tasks, both improving the efficiency of the prosthesis user's grasp and reducing compensatory movements in functioning joints. These results highlight the potential of collaborative robots as effective workplace and caregiving assistants, promoting inclusion and better integration of prosthetic devices into daily tasks.

A Combined Learning and Optimization Framework to Transfer Human Whole-body Loco-manipulation Skills to Mobile Manipulators

Feb 21, 2024

Abstract:Humans' ability to smoothly switch between locomotion and manipulation is a remarkable feature of sensorimotor coordination. Learning and replication of such human-like strategies can lead to the development of more sophisticated robots capable of performing complex whole-body tasks in real-world environments. To this end, this paper proposes a combined learning and optimization framework for transferring humans' loco-manipulation soft-switching skills to mobile manipulators. The methodology starts from the collection, through a vision system, of human demonstrations of a locomotion-integrated manipulation task. Next, the wrist and pelvis motions are mapped to the mobile manipulator's End-Effector (EE) and mobile base. A kernelized movement primitive algorithm learns the wrist and pelvis trajectories and generalizes to new desired points according to task requirements. The reference trajectories are then sent to a hierarchical quadratic programming controller, where the EE and the mobile base reference trajectories are provided as the first and second priority tasks, generating feasible and optimal joint-level commands. A locomotion-integrated pick-and-place task is executed to validate the proposed approach. After a human demonstrates the task, a mobile manipulator executes the task with the same and new settings, grasping a bottle at non-zero velocity. The results showed that the proposed approach successfully transfers the human loco-manipulation skills to mobile manipulators, even with different geometry.
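
The idea of a first- and second-priority task can be sketched with classical null-space projection, a simplified stand-in for hierarchical quadratic programming that ignores inequality constraints such as joint limits. This is an assumption-laden illustration rather than the controller used in the paper; the function name `two_level_priority` and the Jacobians and target velocities are hypothetical.

```python
import numpy as np

def two_level_priority(J1, v1, J2, v2):
    """Joint velocities that track task 1 first, then task 2 in its null space.

    Unconstrained least-squares sketch; a full hierarchical QP would also
    enforce inequality constraints at every priority level.
    """
    J1_pinv = np.linalg.pinv(J1)
    q1 = J1_pinv @ v1                          # best solution for the primary task
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1    # projector onto the null space of J1
    # Correct toward task 2 using only motions that do not disturb task 1
    return q1 + np.linalg.pinv(J2 @ N1) @ (v2 - J2 @ q1)

# Hypothetical 3-DoF example with made-up task Jacobians
J1 = np.array([[1.0, 1.0, 0.0]])               # primary task (e.g. end-effector)
v1 = np.array([1.0])
J2 = np.array([[1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0]])               # secondary task (e.g. mobile base)
v2 = np.array([1.0, 0.5])

q_dot = two_level_priority(J1, v1, J2, v2)
print(q_dot)
```

Here the two tasks are compatible, so both are met exactly; when they conflict, the projection keeps the primary task unaffected and the secondary task is satisfied only as far as the remaining freedom allows.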

Impact-Friendly Object Catching at Non-Zero Velocity based on Hybrid Optimization and Learning

Sep 26, 2022

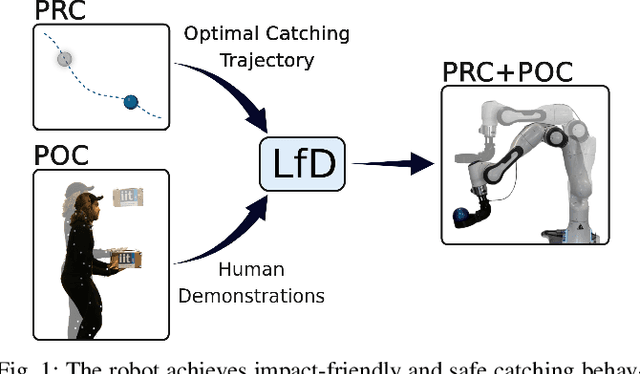

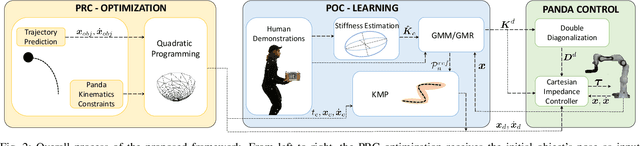

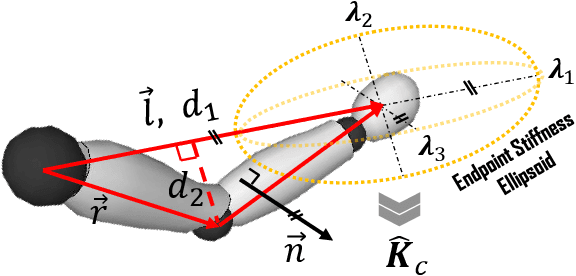

Abstract:This paper proposes a hybrid optimization and learning method for impact-friendly catching of objects at non-zero velocity. Through a constrained Quadratic Programming problem, the method generates optimal trajectories up to the contact point between the robot and the object to minimize their relative velocity and reduce the initial impact forces. Next, the generated trajectories are updated by Kernelized Movement Primitives, which are based on human catching demonstrations, to ensure a smooth transition around the catching point. In addition, the learned human variable stiffness (HVS) is sent to the robot's Cartesian impedance controller to absorb the post-impact forces and stabilize the catching position. Three experiments are conducted to compare our method, with and without HVS, against a fixed-position impedance controller (FP-IC). The results showed that the proposed methods outperform the FP-IC, while adding HVS yields better results for absorbing the post-impact forces.

Sociable and Ergonomic Human-Robot Collaboration through Action Recognition and Augmented Hierarchical Quadratic Programming

Jul 07, 2022

Abstract:The recognition of actions performed by humans and the anticipation of their intentions are important enablers to yield sociable and successful collaboration in human-robot teams. Meanwhile, robots should have the capacity to deal with multiple objectives and constraints, arising from the collaborative task or the human. In this regard, we propose vision techniques to perform human action recognition and image classification, which are integrated into an Augmented Hierarchical Quadratic Programming (AHQP) scheme to hierarchically optimize the robot's reactive behavior and human ergonomics. The proposed framework allows one to intuitively command the robot in space while a task is being executed. The experiments confirm increased human ergonomics and usability, which are fundamental parameters for reducing musculoskeletal diseases and increasing trust in automation.
