Yanlong Huang

School of Computing, University of Leeds, Leeds, UK

Orientation Learning and Adaptation towards Simultaneous Incorporation of Multiple Local Constraints

Oct 09, 2025

Abstract:Orientation learning plays a pivotal role in many tasks. However, the rotation group SO(3) is a Riemannian manifold. As a result, the distortion caused by its non-Euclidean geometry makes it difficult to incorporate local constraints, especially multiple local constraints simultaneously. To address this issue, we propose the Angle-Axis Space-based orientation representation method to solve several orientation learning problems, including orientation adaptation and minimization of angular acceleration. Specifically, we propose a weighted average mechanism in SO(3) based on the angle-axis representation method. Our main idea is to generate multiple trajectories by considering different local constraints at different basepoints. These trajectories are then fused into a smooth trajectory by our proposed weighted average mechanism, thereby incorporating multiple local constraints simultaneously. Compared with existing solutions, ours addresses the distortion issue and makes off-the-shelf Euclidean learning algorithms re-applicable in non-Euclidean space. Simulation and experimental evaluations validate that our solution can not only adapt orientations towards arbitrary desired via-points and cope with angular acceleration constraints, but also incorporate multiple local constraints simultaneously to achieve extra benefits, e.g., smaller acceleration costs.

A Reactive Framework for Whole-Body Motion Planning of Mobile Manipulators Combining Reinforcement Learning and SDF-Constrained Quadratic Programming

Mar 31, 2025

Abstract:As an important branch of embodied artificial intelligence, mobile manipulators are increasingly applied in intelligent services, but their redundant degrees of freedom also limit efficient motion planning in cluttered environments. To address this issue, this paper proposes a hybrid learning and optimization framework for reactive whole-body motion planning of mobile manipulators. We develop the Bayesian distributional soft actor-critic (Bayes-DSAC) algorithm to improve the quality of value estimation and the convergence performance of the learning. Additionally, we introduce a quadratic programming method constrained by the signed distance field to enhance the safety of the obstacle avoidance motion. We conduct experiments and make comparisons with a standard benchmark. The experimental results verify that our proposed framework significantly improves the efficiency of reactive whole-body motion planning, reduces the planning time, and improves the success rate of motion planning. Additionally, the proposed reinforcement learning method ensures a rapid learning process in the whole-body planning task. The novel framework allows mobile manipulators to adapt to complex environments more safely and efficiently.

One-Shot Robust Imitation Learning for Long-Horizon Visuomotor Tasks from Unsegmented Demonstrations

Oct 02, 2024

Abstract:In contrast to single-skill tasks, long-horizon tasks play a crucial role in our daily life, e.g., a pouring task requires a proper concatenation of reaching, grasping and pouring subtasks. As an efficient solution for transferring human skills to robots, imitation learning has achieved great progress over the last two decades. However, when learning long-horizon visuomotor skills, imitation learning often demands a large amount of semantically segmented demonstrations. Moreover, the performance of imitation learning could be susceptible to external perturbation and visual occlusion. In this paper, we exploit dynamical movement primitives and meta-learning to provide a new framework for imitation learning, called Meta-Imitation Learning with Adaptive Dynamical Primitives (MiLa). MiLa allows for learning unsegmented long-horizon demonstrations and adapting to unseen tasks with a single demonstration. MiLa can also resist external disturbances and visual occlusion during task execution. Real-world robotic experiments demonstrate the superiority of MiLa, irrespective of visual occlusion and random perturbations on robots.

Motif-Consistent Counterfactuals with Adversarial Refinement for Graph-Level Anomaly Detection

Jul 18, 2024

Abstract:Graph-level anomaly detection is significant in diverse domains. To improve detection performance, counterfactual graphs have been exploited to benefit the generalization capacity by learning causal relations. Most existing studies directly introduce perturbations (e.g., flipping edges) to generate counterfactual graphs, which tends to alter the semantics of the generated examples and push them off the data manifold, resulting in sub-optimal performance. To address these issues, we propose a novel approach, Motif-consistent Counterfactuals with Adversarial Refinement (MotifCAR), for graph-level anomaly detection. The model combines the motif of one graph, the core subgraph containing the identification (category) information, and the contextual subgraph (non-motif) of another graph to produce a raw counterfactual graph. However, the produced raw graph might be distorted and fail to satisfy the important counterfactual properties: Realism, Validity, Proximity and Sparsity. To this end, we present a Generative Adversarial Network (GAN)-based graph optimizer to refine the raw counterfactual graphs. It uses the discriminator to guide the generator to generate graphs close to realistic data, i.e., to meet the Realism property. Further, we design a motif consistency term to force the motif of the generated graphs to be consistent with the realistic graphs, meeting the Validity property. We also devise a contextual loss and a connection loss to control the contextual subgraph and the newly added links, meeting the Proximity and Sparsity properties. As a result, the model can generate high-quality counterfactual graphs. Experiments demonstrate the superiority of MotifCAR.

A Combined Learning and Optimization Framework to Transfer Human Whole-body Loco-manipulation Skills to Mobile Manipulators

Feb 21, 2024

Abstract:Humans' ability to smoothly switch between locomotion and manipulation is a remarkable feature of sensorimotor coordination. Learning and replication of such human-like strategies can lead to the development of more sophisticated robots capable of performing complex whole-body tasks in real-world environments. To this end, this paper proposes a combined learning and optimization framework for transferring human loco-manipulation soft-switching skills to mobile manipulators. The methodology starts with the collection of human demonstrations for a locomotion-integrated manipulation task through a vision system. Next, the wrist and pelvis motions are mapped to the mobile manipulator's End-Effector (EE) and mobile base. A kernelized movement primitive algorithm learns the wrist and pelvis trajectories and generalizes to new desired points according to task requirements. The reference trajectories are then sent to a hierarchical quadratic programming controller, where the EE and the mobile base reference trajectories are provided as the first and second priority tasks, generating feasible and optimal joint-level commands. A locomotion-integrated pick-and-place task is executed to validate the proposed approach. After a human demonstrates the task, a mobile manipulator executes the task with the same and new settings, grasping a bottle at non-zero velocity. The results show that the proposed approach successfully transfers the human loco-manipulation skills to mobile manipulators, even with different geometry.

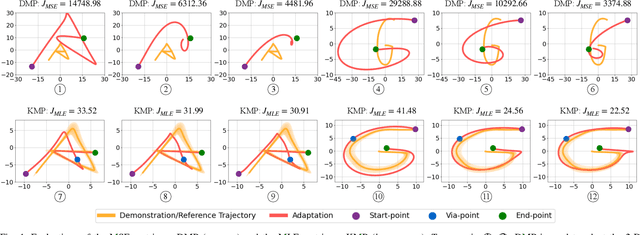

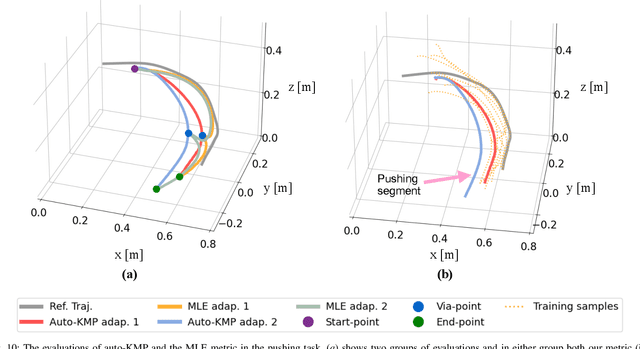

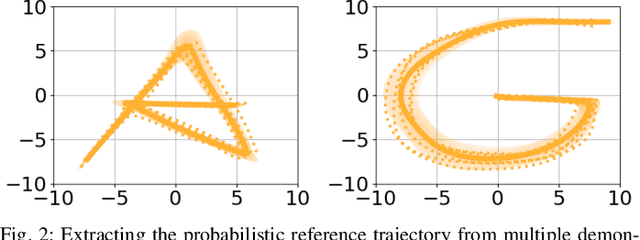

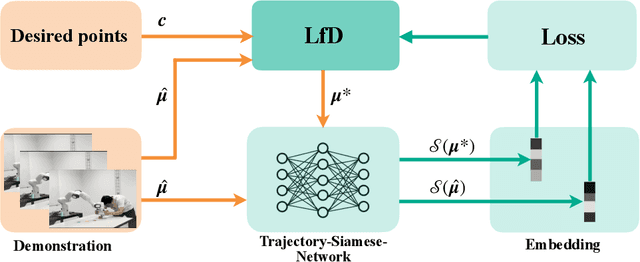

Auto-LfD: Towards Closing the Loop for Learning from Demonstrations

Oct 15, 2023

Abstract:Over the past few years, there have been numerous works towards advancing the generalization capability of robots, among which learning from demonstrations (LfD) has drawn much attention by virtue of its user-friendly and data-efficient nature. While many LfD solutions have been reported, a key question has not been properly addressed: how can we evaluate the generalization performance of LfD? For instance, when a robot draws a letter that needs to pass through new desired points, how does it ensure the new trajectory maintains a similar shape to the demonstration? This question becomes more relevant when a new task is significantly far from the demonstrated region. To tackle this issue, a user often resorts to manual tuning of the hyperparameters of an LfD approach until a satisfactory trajectory is attained. In this paper, we aim to provide closed-loop evaluative feedback for LfD and optimize LfD in an automatic fashion. Specifically, we consider dynamical movement primitives (DMP) and kernelized movement primitives (KMP) as examples and develop a generic optimization framework capable of measuring the generalization performance of DMP and KMP and auto-optimizing their hyperparameters without any human inputs. Evaluations including a peg-in-hole task and a pushing task on a real robot evidence the applicability of our framework.

A Non-parametric Skill Representation with Soft Null Space Projectors for Fast Generalization

Sep 18, 2022

Abstract:Over the last two decades, the robotics community has witnessed the emergence of various motion representations that have been used extensively, particularly in behavioral cloning, to compactly encode and generalize skills. Among these, probabilistic approaches have earned a relevant place, owing to their encoding of variations, correlations and adaptability to new task conditions. Modulating such primitives, however, is often cumbersome due to the need for parameter re-optimization, which frequently entails computationally costly operations. In this paper we derive a non-parametric movement primitive formulation that contains a null space projector. We show that such a formulation allows for fast and efficient motion generation with computational complexity O(n^2) without involving matrix inversions, whose complexity is O(n^3). This is achieved by using the null space to track secondary targets, with a precision determined by the training dataset. Using a 2D example associated with time input we show that our non-parametric solution compares favourably with a state-of-the-art parametric approach. For demonstrated skills with high-dimensional inputs we show that it permits on-the-fly adaptation as well.

iPLAN: Interactive and Procedural Layout Planning

Mar 27, 2022

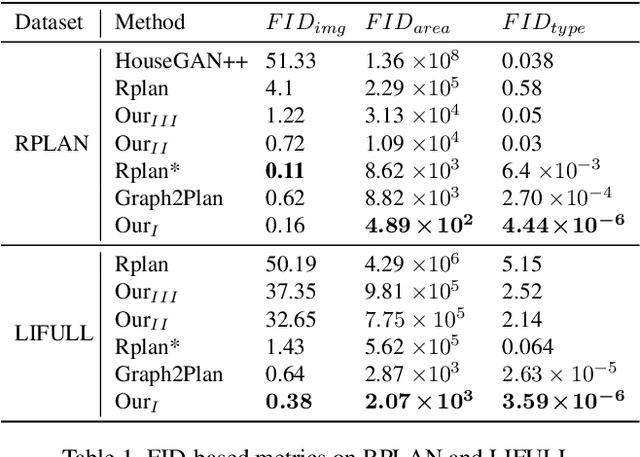

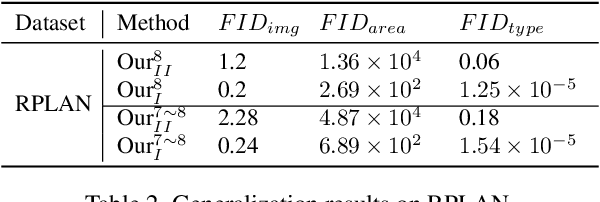

Abstract:Layout design is ubiquitous in many applications, e.g., architecture and urban planning, and involves a lengthy iterative design process. Recently, deep learning has been leveraged to automatically generate layouts via image generation, showing a huge potential to free designers from laborious routines. While automatic generation can greatly boost productivity, designer input is undoubtedly crucial. An ideal AI-aided design tool should automate repetitive routines while accepting human guidance and providing smart, proactive suggestions. However, the capability of involving humans in the loop has been largely ignored in existing methods, which are mostly end-to-end approaches. To this end, we propose a new human-in-the-loop generative model, iPLAN, which is capable of not only automatically generating layouts but also interacting with designers throughout the whole procedure, enabling humans and AI to co-evolve a sketchy idea gradually into the final design. iPLAN is evaluated on diverse datasets and compared with existing methods. The results show that iPLAN has high fidelity in producing similar layouts to those from human designers, great flexibility in accepting designer inputs and providing design suggestions accordingly, and strong generalizability when facing unseen design tasks and limited training data.

EKMP: Generalized Imitation Learning with Adaptation, Nonlinear Hard Constraints and Obstacle Avoidance

Mar 12, 2021

Abstract:As a user-friendly and straightforward solution for robot trajectory generation, imitation learning has been viewed as a vital direction in the context of robot skill learning. In contrast to unconstrained imitation learning, which ignores possible internal and external constraints arising from environments and robot kinematics/dynamics, recent works on constrained imitation learning allow for transferring human skills to unstructured scenarios, further enlarging the application domain of imitation learning. While various constraints have been studied, e.g., joint limits, obstacle avoidance and plane constraints, the problem of nonlinear hard constraints has not been well addressed. In this paper, we propose extended kernelized movement primitives (EKMP) to cope with most of the key problems in imitation learning, including nonlinear hard constraints. Specifically, EKMP is capable of learning the probabilistic features of multiple demonstrations, adapting the learned skills towards arbitrary desired points in terms of joint position and velocity, avoiding obstacles at the level of robot links, as well as satisfying arbitrary linear and nonlinear, equality and inequality hard constraints. Besides, the connections between EKMP and state-of-the-art motion planning approaches are discussed. Several evaluations including the planning of joint trajectories for a 7-DoF robotic arm are provided to verify the effectiveness of our framework.

A Linearly Constrained Nonparametric Framework for Imitation Learning

Sep 15, 2019

Abstract:In recent years, a myriad of advanced results have been reported in the community of imitation learning, ranging from parametric to non-parametric, probabilistic to non-probabilistic and Bayesian to frequentist approaches. Meanwhile, ample applications (e.g., grasping tasks and human-robot collaborations) further show the applicability of imitation learning in a wide range of domains. While much of the literature is dedicated to the learning of human skills in unconstrained environments, the problem of learning constrained motor skills has not yet received equal attention. In fact, constrained skills exist widely in robotic systems. For instance, when a robot is required to write letters on a board, its end-effector trajectory must comply with the plane constraint from the board. In this paper, we aim to tackle the problem of imitation learning with linear constraints. Specifically, we propose to exploit the probabilistic properties of multiple demonstrations, and subsequently incorporate them into a linearly constrained optimization problem, which finally leads to a non-parametric solution. In addition, a connection between our framework and the classical model predictive control is provided. Several examples including simulated writing and locomotion tasks are presented to show the effectiveness of our framework.
