Yijin Wang

DebFlow: Automating Agent Creation via Agent Debate

Mar 31, 2025

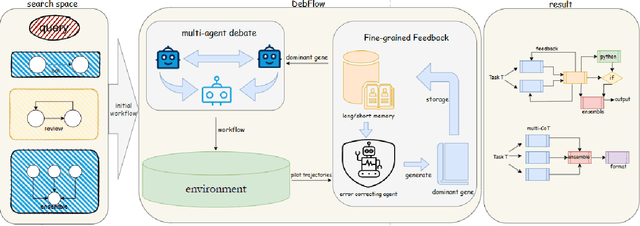

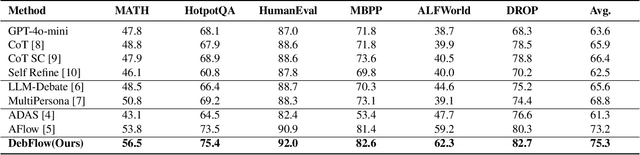

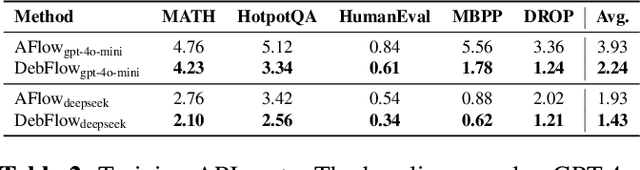

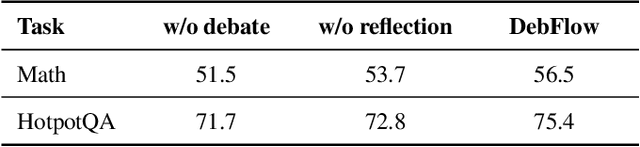

Abstract: Large language models (LLMs) have demonstrated strong potential and impressive performance in automating the generation and optimization of workflows. However, existing approaches are marked by limited reasoning capabilities, high computational demands, and significant resource requirements. To address these issues, we propose DebFlow, a framework that employs a debate mechanism to optimize workflows and integrates reflexion to improve based on previous experiences. We evaluated our method across six benchmark datasets, including HotpotQA, MATH, and ALFWorld. Our approach achieved a 3% average performance improvement over the latest baselines, demonstrating its effectiveness in diverse problem domains. In particular, during training, our framework reduces resource consumption by 37% compared to the state-of-the-art baselines. Additionally, we performed ablation studies. Removing the Debate component resulted in a 4% performance drop across two benchmark datasets, significantly greater than the 2% drop observed when the Reflection component was removed. These findings strongly demonstrate the critical role of Debate in enhancing framework performance, while also highlighting the auxiliary contribution of reflexion to overall optimization.

MARS: Memory-Enhanced Agents with Reflective Self-improvement

Mar 25, 2025

Abstract: Large language models (LLMs) have made significant advances in the field of natural language processing, but they still face challenges such as continuous decision-making, lack of long-term memory, and limited context windows in dynamic environments. To address these issues, this paper proposes an innovative framework, Memory-Enhanced Agents with Reflective Self-improvement (MARS). The MARS framework comprises three agents: the User, the Assistant, and the Checker. By integrating iterative feedback, reflective mechanisms, and a memory optimization mechanism based on the Ebbinghaus forgetting curve, it significantly enhances the agents' capabilities in handling multi-tasking and long-span information.
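The abstract does not spell out how the forgetting curve is applied, so the following is only a minimal sketch of the general idea, assuming the commonly cited exponential form R = exp(-t / S), where t is the time since a memory was last accessed and S is its strength. The function names, the dictionary fields (`last_access`, `strength`), and the pruning threshold are hypothetical and are not taken from the paper.

```python
import math


def retention(elapsed: float, strength: float) -> float:
    """Estimated retention under an exponential forgetting curve, R = exp(-elapsed / strength)."""
    if strength <= 0:
        raise ValueError("strength must be positive")
    return math.exp(-elapsed / strength)


def prune_memories(memories, now, threshold=0.3):
    """Keep only the entries whose estimated retention is at least `threshold`.

    Each memory is a dict with hypothetical keys 'last_access' and 'strength'.
    """
    return [
        m for m in memories
        if retention(now - m["last_access"], m["strength"]) >= threshold
    ]


# Example: an old, weak memory is dropped; a recent, strong one is kept.
memories = [
    {"text": "old note", "last_access": 0.0, "strength": 1.0},
    {"text": "recent note", "last_access": 9.0, "strength": 5.0},
]
kept = prune_memories(memories, now=10.0)
```

In a scheme like this, a memory that is accessed again would typically have its `last_access` refreshed and its `strength` increased, so that frequently used information decays more slowly.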

Enhancing Intent Understanding for Ambiguous Prompts through Human-Machine Co-Adaptation

Jan 25, 2025

Abstract: Modern image generation systems can produce high-quality visuals, yet user prompts often contain ambiguities, requiring multiple revisions. Existing methods struggle to address the nuanced needs of non-expert users. We propose Visual Co-Adaptation (VCA), a novel framework that iteratively refines prompts and aligns generated images with user preferences. VCA employs a fine-tuned language model with reinforcement learning and multi-turn dialogues for prompt disambiguation. Key components include the Incremental Context-Enhanced Dialogue Block for interactive clarification, the Semantic Exploration and Disambiguation Module (SESD) leveraging Retrieval-Augmented Generation (RAG) and CLIP scoring, and the Pixel Precision and Consistency Optimization Module (PPCO) for refining image details using Proximal Policy Optimization (PPO). A human-in-the-loop feedback mechanism further improves performance. Experiments show that VCA surpasses models like DALL-E 3 and Stable Diffusion, reducing dialogue rounds to 4.3, achieving a CLIP score of 0.92, and enhancing user satisfaction to 4.73/5. Additionally, we introduce a novel multi-round dialogue dataset with prompt-image pairs and user intent annotations.

Elucidating the Design Choice of Probability Paths in Flow Matching for Forecasting

Oct 04, 2024

Abstract: Flow matching has recently emerged as a powerful paradigm for generative modeling and has been extended to probabilistic time series forecasting in latent spaces. However, the impact of the specific choice of probability path model on forecasting performance remains under-explored. In this work, we demonstrate that forecasting spatio-temporal data with flow matching is highly sensitive to the selection of the probability path model. Motivated by this insight, we propose a novel probability path model designed to improve forecasting performance. Our empirical results across various dynamical system benchmarks show that our model achieves faster convergence during training and improved predictive performance compared to existing probability path models. Importantly, our approach is efficient during inference, requiring only a few sampling steps. This makes our proposed model practical for real-world applications and opens new avenues for probabilistic forecasting.
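To make the notion of a probability path concrete, here is a small sketch of one widely used baseline choice: the straight-line path x_t = (1 - t) x0 + t x1 between a source sample x0 and a target sample x1, whose conditional velocity is x1 - x0. This is not the path model proposed in the paper, which the abstract does not specify; the function names are illustrative.

```python
def linear_path(x0, x1, t):
    """Point on the straight-line path from x0 (t = 0) to x1 (t = 1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Velocity along the straight-line path; it is constant in t."""
    return [b - a for a, b in zip(x0, x1)]


# Example: halfway between the two samples.
x0 = [0.0, 0.0]
x1 = [2.0, 4.0]
midpoint = linear_path(x0, x1, 0.5)
velocity = target_velocity(x0, x1)
```

In flow matching, a network is trained to regress a velocity of this kind at points sampled along the path, so a different path definition changes both the training inputs and the regression targets.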

One-Shot Robust Imitation Learning for Long-Horizon Visuomotor Tasks from Unsegmented Demonstrations

Oct 02, 2024

Abstract: In contrast to single-skill tasks, long-horizon tasks play a crucial role in our daily life, e.g., a pouring task requires a proper concatenation of reaching, grasping and pouring subtasks. As an efficient solution for transferring human skills to robots, imitation learning has achieved great progress over the last two decades. However, when learning long-horizon visuomotor skills, imitation learning often demands a large amount of semantically segmented demonstrations. Moreover, the performance of imitation learning could be susceptible to external perturbation and visual occlusion. In this paper, we exploit dynamical movement primitives and meta-learning to provide a new framework for imitation learning, called Meta-Imitation Learning with Adaptive Dynamical Primitives (MiLa). MiLa allows for learning unsegmented long-horizon demonstrations and adapting to unseen tasks with a single demonstration. MiLa can also resist external disturbances and visual occlusion during task execution. Real-world robotic experiments demonstrate the superiority of MiLa, irrespective of visual occlusion and random perturbations on robots.

Flusion: Integrating multiple data sources for accurate influenza predictions

Jul 26, 2024

Abstract: Over the last ten years, the US Centers for Disease Control and Prevention (CDC) has organized an annual influenza forecasting challenge with the motivation that accurate probabilistic forecasts could improve situational awareness and yield more effective public health actions. Starting with the 2021/22 influenza season, the forecasting targets for this challenge have been based on hospital admissions reported in the CDC's National Healthcare Safety Network (NHSN) surveillance system. Reporting of influenza hospital admissions through NHSN began within the last few years, and as such only a limited amount of historical data are available for this signal. To produce forecasts in the presence of limited data for the target surveillance system, we augmented these data with two signals that have a longer historical record: 1) ILI+, which estimates the proportion of outpatient doctor visits where the patient has influenza; and 2) rates of laboratory-confirmed influenza hospitalizations at a selected set of healthcare facilities. Our model, Flusion, is an ensemble that combines gradient boosting quantile regression models with a Bayesian autoregressive model. The gradient boosting models were trained on all three data signals, while the autoregressive model was trained on only the target signal; all models were trained jointly on data for multiple locations. Flusion was the top-performing model in the CDC's influenza prediction challenge for the 2023/24 season. In this article we investigate the factors contributing to Flusion's success, and we find that its strong performance was primarily driven by the use of a gradient boosting model that was trained jointly on data from multiple surveillance signals and locations. These results indicate the value of sharing information across locations and surveillance signals, especially when doing so adds to the pool of available training data.
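For readers unfamiliar with quantile regression, the sketch below shows the standard pinball (quantile) loss that such models typically minimize. It is a generic illustration only: the abstract does not describe Flusion's training objective or how its component forecasts are combined, and the function names and example values here are made up.

```python
def pinball_loss(y_true: float, y_pred: float, q: float) -> float:
    """Pinball loss for a predicted q-quantile; q must lie strictly between 0 and 1."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must be in (0, 1)")
    diff = y_true - y_pred
    return max(q * diff, (q - 1.0) * diff)


def mean_pinball_loss(y_true, y_pred, q):
    """Average pinball loss over paired observations and predictions."""
    losses = [pinball_loss(t, p, q) for t, p in zip(y_true, y_pred)]
    return sum(losses) / len(losses)


# Example with illustrative numbers: for the 0.9 quantile, predicting too low
# is penalized more heavily than predicting too high by the same amount.
under = pinball_loss(10.0, 8.0, 0.9)   # prediction 2 below the observation
over = pinball_loss(8.0, 10.0, 0.9)    # prediction 2 above the observation
```

The asymmetry is what pushes the fitted value toward the requested quantile rather than toward the mean.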

Auto-LfD: Towards Closing the Loop for Learning from Demonstrations

Oct 15, 2023

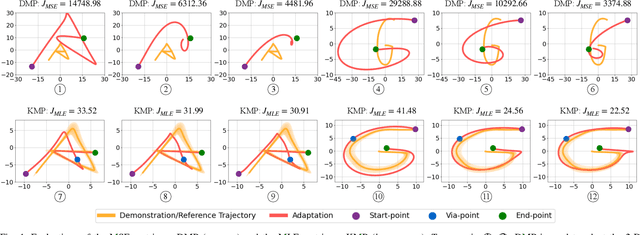

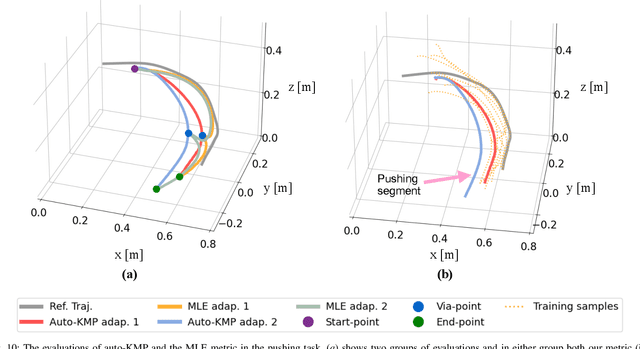

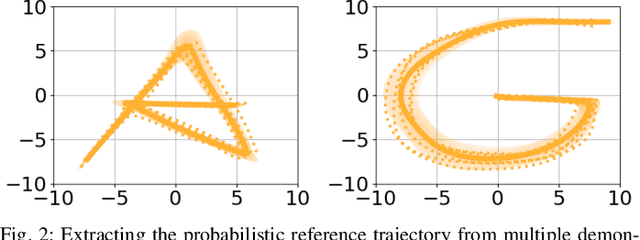

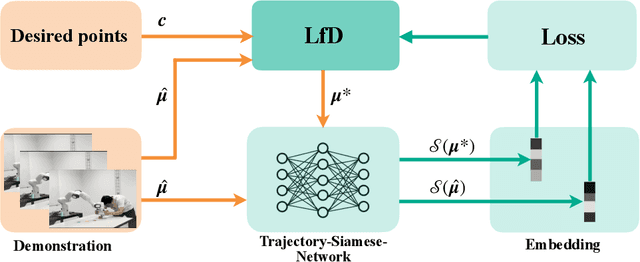

Abstract: Over the past few years, there have been numerous works towards advancing the generalization capability of robots, among which learning from demonstrations (LfD) has drawn much attention by virtue of its user-friendly and data-efficient nature. While many LfD solutions have been reported, a key question has not been properly addressed: how can we evaluate the generalization performance of LfD? For instance, when a robot draws a letter that needs to pass through new desired points, how does it ensure the new trajectory maintains a similar shape to the demonstration? This question becomes more relevant when a new task is significantly far from the demonstrated region. To tackle this issue, a user often resorts to manual tuning of the hyperparameters of an LfD approach until a satisfactory trajectory is attained. In this paper, we aim to provide closed-loop evaluative feedback for LfD and optimize LfD in an automatic fashion. Specifically, we consider dynamical movement primitives (DMP) and kernelized movement primitives (KMP) as examples and develop a generic optimization framework capable of measuring the generalization performance of DMP and KMP and auto-optimizing their hyperparameters without any human inputs. Evaluations including a peg-in-hole task and a pushing task on a real robot evidence the applicability of our framework.
