A Non-parametric Skill Representation with Soft Null Space Projectors for Fast Generalization

Paper and Code

Sep 18, 2022

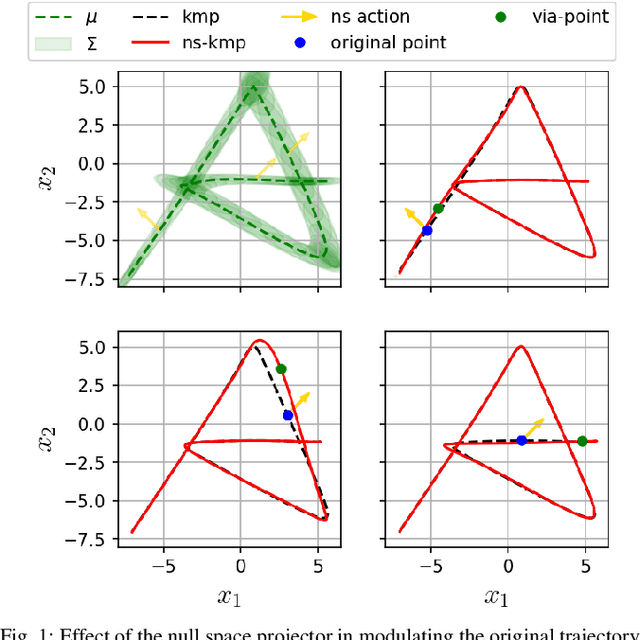

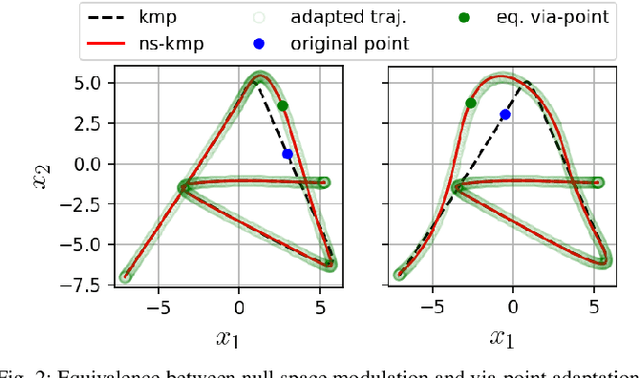

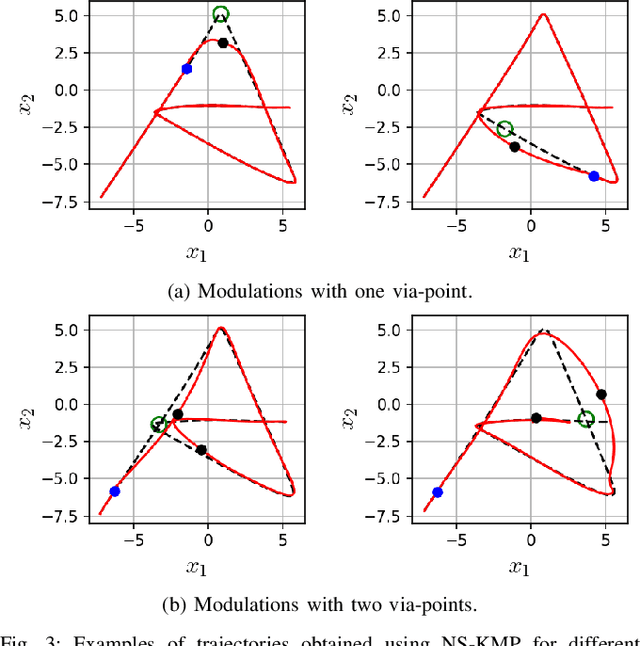

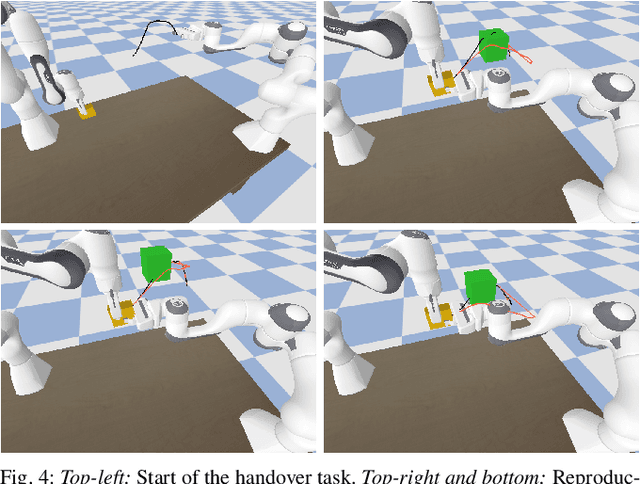

Over the last two decades, the robotics community witnessed the emergence of various motion representations that have been used extensively, particularly in behavorial cloning, to compactly encode and generalize skills. Among these, probabilistic approaches have earned a relevant place, owing to their encoding of variations, correlations and adaptability to new task conditions. Modulating such primitives, however, is often cumbersome due to the need for parameter re-optimization which frequently entails computationally costly operations. In this paper we derive a non-parametric movement primitive formulation that contains a null space projector. We show that such formulation allows for fast and efficient motion generation with computational complexity O(n2) without involving matrix inversions, whose complexity is O(n3). This is achieved by using the null space to track secondary targets, with a precision determined by the training dataset. Using a 2D example associated with time input we show that our non-parametric solution compares favourably with a state-of-the-art parametric approach. For demonstrated skills with high-dimensional inputs we show that it permits on-the-fly adaptation as well.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge