Generalized State-Dependent Exploration for Deep Reinforcement Learning in Robotics

Paper and Code

May 12, 2020

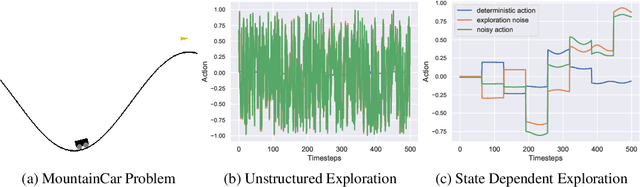

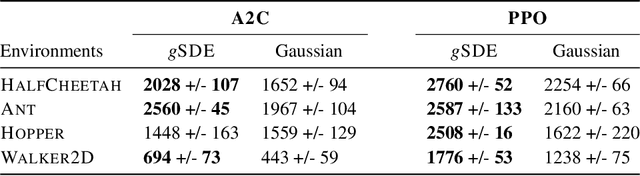

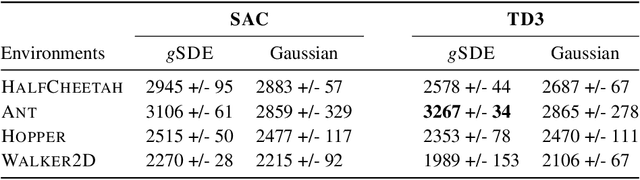

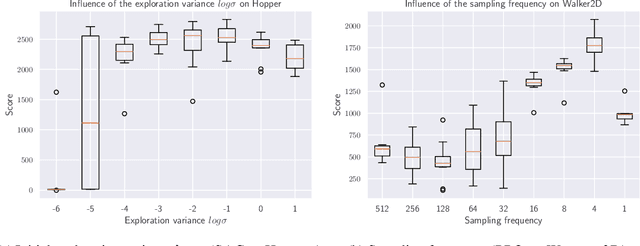

Reinforcement learning (RL) enables robots to learn skills from interactions with the real world. In practice, the unstructured step-based exploration used in Deep RL, while often very successful in simulation, leads to jerky motion patterns on real robots. The resulting shaky behavior can cause poor exploration or even damage the robot. We address these issues by adapting state-dependent exploration (SDE) to current Deep RL algorithms. To enable this adaptation, we propose three extensions to the original SDE, which lead to a new exploration method, generalized state-dependent exploration (gSDE). We evaluate gSDE both in simulation, on PyBullet continuous control tasks, and directly on a tendon-driven elastic robot. gSDE yields competitive results in simulation and outperforms unstructured exploration on the real robot. The code is available at https://github.com/DLR-RM/stable-baselines3/tree/sde.
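To make the contrast between the two exploration styles concrete, the following is a minimal sketch rather than the paper's implementation. It assumes the basic SDE formulation, in which the exploration noise is a linear function of the state with weights drawn once per episode, and it does not model the three gSDE extensions, which the abstract does not detail. The function names, the toy state trajectory, and the `roughness` measure are all hypothetical and chosen only for illustration.

```python
import math
import random


def step_based_noise(n_steps, sigma, rng):
    """Unstructured exploration: an independent Gaussian sample at every step."""
    return [rng.gauss(0.0, sigma) for _ in range(n_steps)]


def state_dependent_noise(states, sigma, rng):
    """SDE-style exploration (assumed basic form): noise = theta_eps . s,
    where the weights theta_eps are drawn once and kept for the whole episode."""
    dim = len(states[0])
    theta_eps = [rng.gauss(0.0, sigma) for _ in range(dim)]
    return [sum(w * x for w, x in zip(theta_eps, s)) for s in states]


def roughness(noise):
    """Illustrative jerkiness measure: mean absolute change between steps."""
    return sum(abs(b - a) for a, b in zip(noise, noise[1:])) / (len(noise) - 1)


rng = random.Random(0)

# Hypothetical toy episode: a slowly varying 2-D state trajectory.
states = [(math.sin(0.05 * t), math.cos(0.05 * t)) for t in range(200)]

unstructured = step_based_noise(len(states), 0.5, rng)
structured = state_dependent_noise(states, 0.5, rng)

print("step-based roughness:     ", roughness(unstructured))
print("state-dependent roughness:", roughness(structured))
```

Because the state-dependent noise is a fixed function of the state within an episode, it changes only as fast as the state does, and revisiting the same state yields the same perturbation. The step-based noise, by contrast, is redrawn independently at every step, which is the source of the jerky motion described above.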
