George Pantazis

An Efficient Risk-aware Branch MPC for Automated Driving that is Robust to Uncertain Vehicle Behaviors

Mar 27, 2024

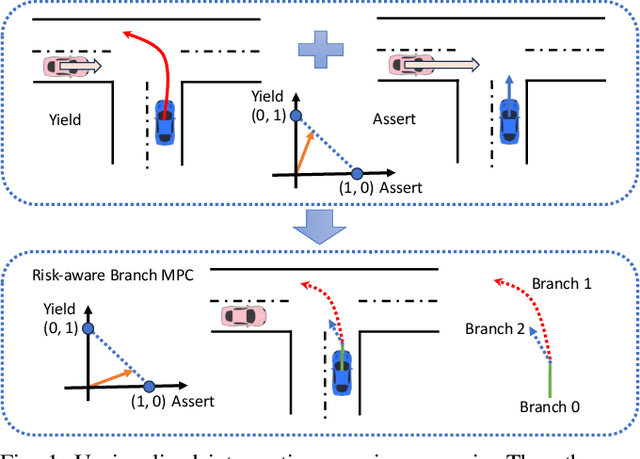

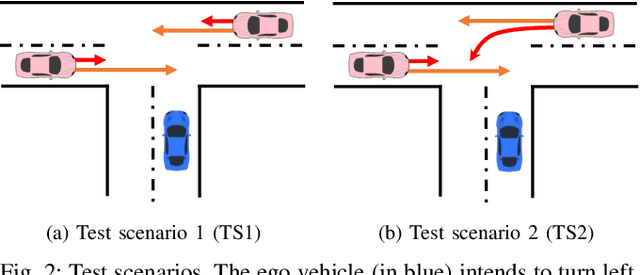

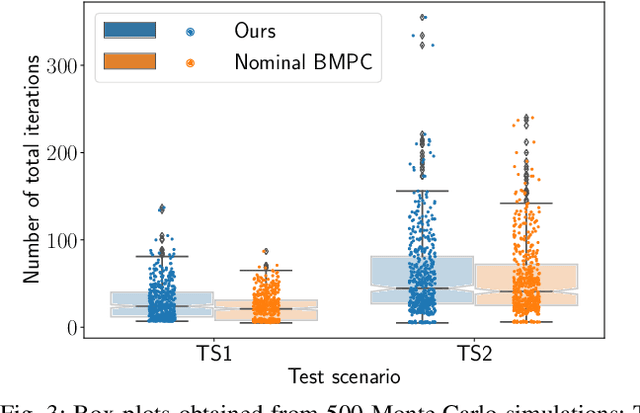

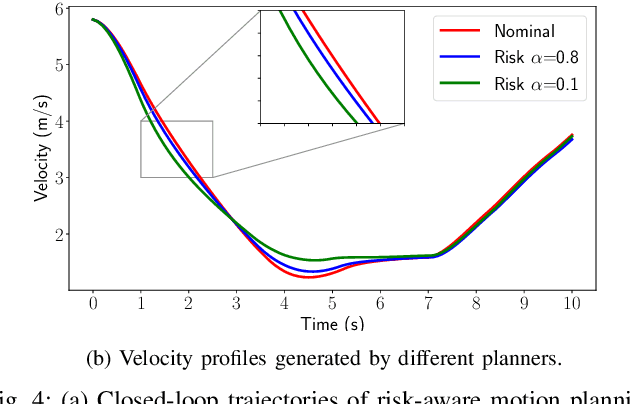

Abstract: One of the critical challenges in automated driving is ensuring the safety of automated vehicles despite the unknown behavior of other vehicles. Although motion prediction modules can generate a probability distribution over behavior modes, their probabilistic estimates are often inaccurate, which can lead to an unsafe trajectory. To overcome this challenge, we propose a risk-aware motion planning framework that accounts for the ambiguity in the estimated probability distribution. We formulate the risk-aware motion planning problem as a min-max optimization problem and develop an efficient iterative method by incorporating a regularization term in the probability update step. Through extensive numerical studies, we validate the convergence of our method and demonstrate its advantages over state-of-the-art approaches.

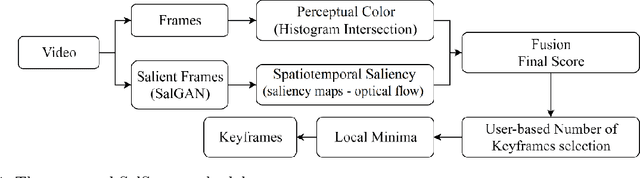

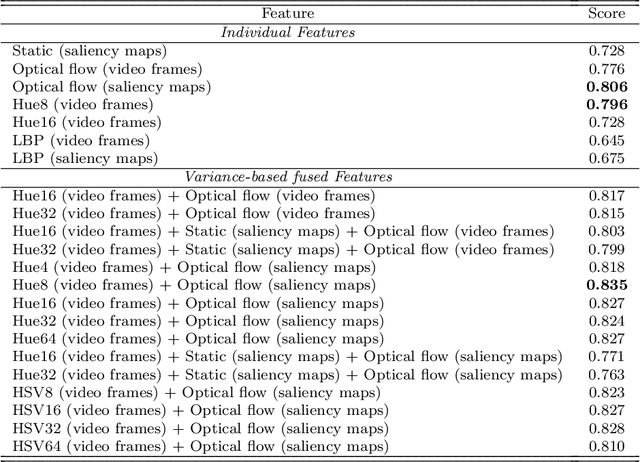

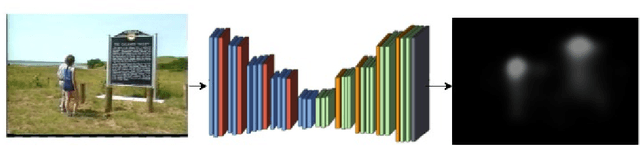

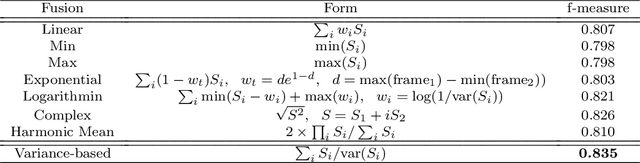

SalSum: Saliency-based Video Summarization using Generative Adversarial Networks

Nov 20, 2020

Abstract: The huge amount of video data produced daily by camera-based systems, such as surveillance, medical, and telecommunication systems, creates a need for effective video summarization (VS) methods. These methods should be capable of creating an overview of the video content. In this paper, we propose a novel VS method based on a Generative Adversarial Network (GAN) model pre-trained with human eye fixations. The main contribution of the proposed method is that it provides perceptually compatible video summaries by combining perceived color and spatiotemporal visual attention cues in an unsupervised scheme. Several fusion approaches are considered to provide robustness under uncertainty and to support personalization. The proposed method is evaluated against state-of-the-art VS approaches on the VSUMM benchmark dataset. The experimental results show that SalSum outperforms the state-of-the-art approaches, achieving the highest F-measure score on the VSUMM benchmark.
