Robert Lo

VisualWebArena: Evaluating Multimodal Agents on Realistic Visual Web Tasks

Jan 24, 2024

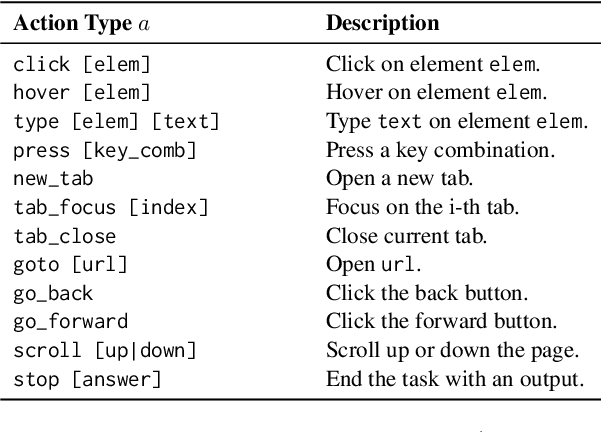

Abstract: Autonomous agents capable of planning, reasoning, and executing actions on the web offer a promising avenue for automating computer tasks. However, the majority of existing benchmarks focus on text-based agents, neglecting many natural tasks that require visual information to solve effectively. Given that most computer interfaces cater to human perception, visual information often augments textual data in ways that text-only models struggle to harness effectively. To bridge this gap, we introduce VisualWebArena, a benchmark designed to assess the performance of multimodal web agents on realistic visually grounded tasks. VisualWebArena comprises a set of diverse and complex web-based tasks that evaluate various capabilities of autonomous multimodal agents. To perform on this benchmark, agents need to accurately process image-text inputs, interpret natural language instructions, and execute actions on websites to accomplish user-defined objectives. We conduct an extensive evaluation of state-of-the-art LLM-based autonomous agents, including several multimodal models. Through extensive quantitative and qualitative analysis, we identify several limitations of text-only LLM agents, and reveal gaps in the capabilities of state-of-the-art multimodal language agents. VisualWebArena provides a framework for evaluating multimodal autonomous language agents, and offers insights towards building stronger autonomous agents for the web. Our code, baseline models, and data are publicly available at https://jykoh.com/vwa.

WebArena: A Realistic Web Environment for Building Autonomous Agents

Jul 25, 2023

Abstract: With generative AI advances, the exciting potential for autonomous agents to manage daily tasks via natural language commands has emerged. However, current agents are primarily created and tested in simplified synthetic environments, substantially limiting real-world scenario representation. In this paper, we build an environment for agent command and control that is highly realistic and reproducible. Specifically, we focus on agents that perform tasks on websites, and we create an environment with fully functional websites from four common domains: e-commerce, social forum discussions, collaborative software development, and content management. Our environment is enriched with tools (e.g., a map) and external knowledge bases (e.g., user manuals) to encourage human-like task-solving. Building upon our environment, we release a set of benchmark tasks focusing on evaluating the functional correctness of task completions. The tasks in our benchmark are diverse, long-horizon, and designed to emulate tasks that humans routinely perform on the internet. We design and implement several autonomous agents, integrating recent techniques such as reasoning before acting. The results demonstrate that solving complex tasks is challenging: our best GPT-4-based agent only achieves an end-to-end task success rate of 10.59%. These results highlight the need for further development of robust agents, show that current state-of-the-art LMs are far from perfect performance in these real-life tasks, and indicate that WebArena can be used to measure such progress. Our code, data, environment reproduction resources, and video demonstrations are publicly available at https://webarena.dev/.

Exploring the Role of the Bottleneck in Slot-Based Models Through Covariance Regularization

Jun 05, 2023

Abstract: In this project we attempt to make slot-based models with an image reconstruction objective competitive with those that use a feature reconstruction objective on real-world datasets. We propose a loss-based approach to constricting the bottleneck of slot-based models, allowing larger-capacity encoder networks to be used with Slot Attention without producing degenerate stripe-shaped masks. We find that our proposed method offers an improvement over the baseline Slot Attention model but does not reach the performance of DINOSAUR on the COCO2017 dataset. Throughout this project, we confirm the superiority of a feature reconstruction objective over an image reconstruction objective and explore the role of the architectural bottleneck in slot-based models.

LIC-GAN: Language Information Conditioned Graph Generative GAN Model

Jun 02, 2023

Abstract: Deep generative models for Natural Language data offer a new angle on the problem of graph synthesis: by optimizing differentiable models that directly generate graphs, it is possible to side-step expensive search procedures in the discrete and vast space of possible graphs. We introduce LIC-GAN, an implicit, likelihood-free generative model for small graphs that circumvents the need for expensive graph matching procedures. Our method takes as input a natural language query and, using a combination of language modelling and Generative Adversarial Networks (GANs), returns a graph that closely matches the description of the query. We combine our approach with a reward network to further enhance the graph generation with desired properties. Our experiments show that LIC-GAN does well on metrics such as PropMatch and Closeness, with scores of 0.36 and 0.48. We also show that LIC-GAN performs as well as ChatGPT, which gets scores of 0.40 and 0.42. We also conduct a few experiments to demonstrate the robustness of our method, while highlighting a few interesting caveats of the model.

Hierarchical Prompting Assists Large Language Model on Web Navigation

May 23, 2023

Abstract: Large language models (LLMs) struggle to process complicated observations in interactive decision making. To alleviate this issue, we propose a simple hierarchical prompting approach. Diverging from previous prompting approaches that always put the full observation (e.g., a web page) into the prompt, we propose to first construct an action-aware observation, which is more condensed and relevant, with a dedicated Summarizer prompt. The Actor prompt then predicts the next action based on the summarized history. While our method has broad applicability, we particularly demonstrate its efficacy in the complex domain of web navigation, where a full observation often contains redundant and irrelevant information. Our approach outperforms the previous state-of-the-art prompting mechanism with the same LLM by 6.2% on task success rate, demonstrating its potential on interactive decision making tasks with long observation traces.
