Wei Ke

Unveiling Perceptual Artifacts: A Fine-Grained Benchmark for Interpretable AI-Generated Image Detection

Jan 27, 2026

Abstract:Current AI-Generated Image (AIGI) detection approaches predominantly rely on binary classification to distinguish real from synthetic images, often lacking interpretable or convincing evidence to substantiate their decisions. This limitation stems from existing AIGI detection benchmarks, which, despite featuring a broad collection of synthetic images, remain restricted in their coverage of artifact diversity and lack detailed, localized annotations. To bridge this gap, we introduce a fine-grained benchmark towards eXplainable AI-Generated image Detection, named X-AIGD, which provides pixel-level, categorized annotations of perceptual artifacts, spanning low-level distortions, high-level semantics, and cognitive-level counterfactuals. These comprehensive annotations facilitate fine-grained interpretability evaluation and deeper insight into model decision-making processes. Our extensive investigation using X-AIGD provides several key insights: (1) Existing AIGI detectors demonstrate negligible reliance on perceptual artifacts, even at the most basic distortion level. (2) While AIGI detectors can be trained to identify specific artifacts, they still substantially base their judgment on uninterpretable features. (3) Explicitly aligning model attention with artifact regions can increase the interpretability and generalization of detectors. The data and code are available at: https://github.com/Coxy7/X-AIGD.

AlignDrive: Aligned Lateral-Longitudinal Planning for End-to-End Autonomous Driving

Jan 05, 2026

Abstract:End-to-end autonomous driving has rapidly progressed, enabling joint perception and planning in complex environments. In the planning stage, state-of-the-art (SOTA) end-to-end autonomous driving models decouple planning into parallel lateral and longitudinal predictions. While effective, this parallel design can lead to i) coordination failures between the planned path and speed, and ii) underutilization of the drive path as a prior for longitudinal planning, thus redundantly encoding static information. To address this, we propose a novel cascaded framework that explicitly conditions longitudinal planning on the drive path, enabling coordinated and collision-aware lateral and longitudinal planning. Specifically, we introduce a path-conditioned formulation that explicitly incorporates the drive path into longitudinal planning. Building on this, the model predicts longitudinal displacements along the drive path rather than full 2D trajectory waypoints. This design simplifies longitudinal reasoning and more tightly couples it with lateral planning. Additionally, we introduce a planning-oriented data augmentation strategy that simulates rare safety-critical events, such as vehicle cut-ins, by adding agents and relabeling longitudinal targets to avoid collision. Evaluated on the challenging Bench2Drive benchmark, our method sets a new SOTA, achieving a driving score of 89.07 and a success rate of 73.18%, demonstrating significantly improved coordination and safety.
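To make the idea of predicting longitudinal displacements along a drive path more concrete, the following is a minimal sketch of how such displacements could be turned back into 2D waypoints. It assumes the drive path is a polyline of (x, y) vertices and that each displacement is a distance travelled along that polyline in one time step. The function names and the polyline representation are illustrative assumptions, not the AlignDrive implementation.

```python
import math

def cumulative_arc_lengths(path):
    """Return the arc length from the path start to each vertex."""
    lengths = [0.0]
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        lengths.append(lengths[-1] + math.hypot(x1 - x0, y1 - y0))
    return lengths

def point_at_arc_length(path, s):
    """Linearly interpolate the 2D point at arc length s along the polyline."""
    lengths = cumulative_arc_lengths(path)
    s = max(0.0, min(s, lengths[-1]))  # clamp to the extent of the path
    for i in range(1, len(path)):
        if s <= lengths[i]:
            seg = lengths[i] - lengths[i - 1]
            t = 0.0 if seg == 0.0 else (s - lengths[i - 1]) / seg
            (x0, y0), (x1, y1) = path[i - 1], path[i]
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
    return path[-1]

def displacements_to_waypoints(path, displacements):
    """Map per-step longitudinal displacements to 2D waypoints on the path."""
    waypoints, s = [], 0.0
    for d in displacements:
        s += d  # accumulate distance travelled along the path
        waypoints.append(point_at_arc_length(path, s))
    return waypoints

# Hypothetical drive path: 10 m straight ahead, then a 90-degree turn.
drive_path = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
waypoints = displacements_to_waypoints(drive_path, [4.0, 4.0, 4.0])
```

Under these assumptions every waypoint lies on the drive path by construction, so the speed profile cannot drift away from the planned path, which is one way to read the coupling the abstract describes.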

Head Anchor Enhanced Detection and Association for Crowded Pedestrian Tracking

Aug 07, 2025

Abstract:Visual pedestrian tracking represents a promising research field, with extensive applications in intelligent surveillance, behavior analysis, and human-computer interaction. However, real-world applications face significant occlusion challenges. When multiple pedestrians interact or overlap, the loss of target features severely compromises the tracker's ability to maintain stable trajectories. Traditional tracking methods, which typically rely on full-body bounding box features extracted from Re-ID models and linear constant-velocity motion assumptions, often struggle in severe occlusion scenarios. To address these limitations, this work proposes an enhanced tracking framework that leverages richer feature representations and a more robust motion model. Specifically, the proposed method incorporates detection features from both the regression and classification branches of an object detector, embedding spatial and positional information directly into the feature representations. To further mitigate occlusion challenges, a head keypoint detection model is introduced, as the head is less prone to occlusion compared to the full body. In terms of motion modeling, we propose an iterative Kalman filtering approach designed to align with modern detector assumptions, integrating 3D priors to better complete motion trajectories in complex scenes. By combining these advancements in appearance and motion modeling, the proposed method offers a more robust solution for multi-object tracking in crowded environments where occlusions are prevalent.
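For readers unfamiliar with the linear constant-velocity motion model that the abstract names as the traditional baseline, here is a minimal one-dimensional Kalman filter sketch. It is not the iterative filter or the 3D priors proposed in the paper; the state layout (position, velocity), the noise values `q` and `r`, and the function names are assumptions chosen for illustration.

```python
def predict(state, cov, dt=1.0, q=1e-2):
    """Propagate (position, velocity) one step under constant velocity."""
    p, v = state
    (a, b), (c, d) = cov
    new_state = (p + v * dt, v)
    # F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(q, q)
    new_cov = (
        (a + dt * (b + c) + dt * dt * d + q, b + dt * d),
        (c + dt * d, d + q),
    )
    return new_state, new_cov

def update(state, cov, z, r=1.0):
    """Correct the state with a position measurement z (H = [1, 0])."""
    p, v = state
    (a, b), (c, d) = cov
    innovation = z - p
    s = a + r                 # innovation variance
    k0, k1 = a / s, c / s     # Kalman gain for position and velocity
    new_state = (p + k0 * innovation, v + k1 * innovation)
    # (I - K H) P
    new_cov = (
        ((1 - k0) * a, (1 - k0) * b),
        (c - k1 * a, d - k1 * b),
    )
    return new_state, new_cov

# Hypothetical target moving 2 units per frame, observed without noise.
state, cov = (0.0, 0.0), ((10.0, 0.0), (0.0, 10.0))
for t in range(1, 51):
    state, cov = predict(state, cov)
    state, cov = update(state, cov, z=2.0 * t)
```

When a target is occluded there is no measurement, so only `predict` runs and the track coasts in a straight line at its last estimated velocity, which illustrates why this assumption struggles under long occlusions.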

Multi-tracklet Tracking for Generic Targets with Adaptive Detection Clustering

Aug 07, 2025

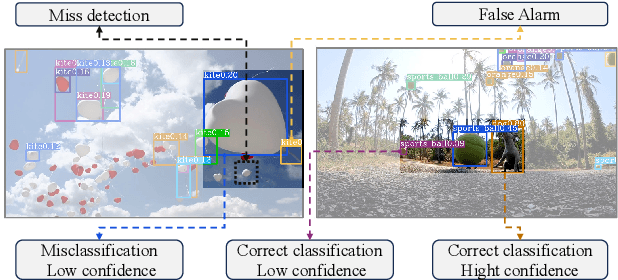

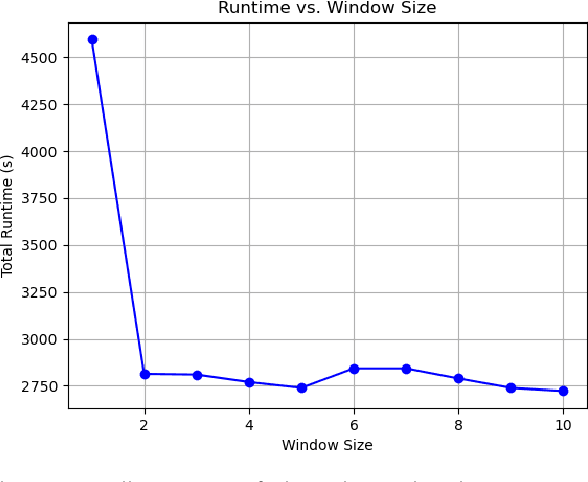

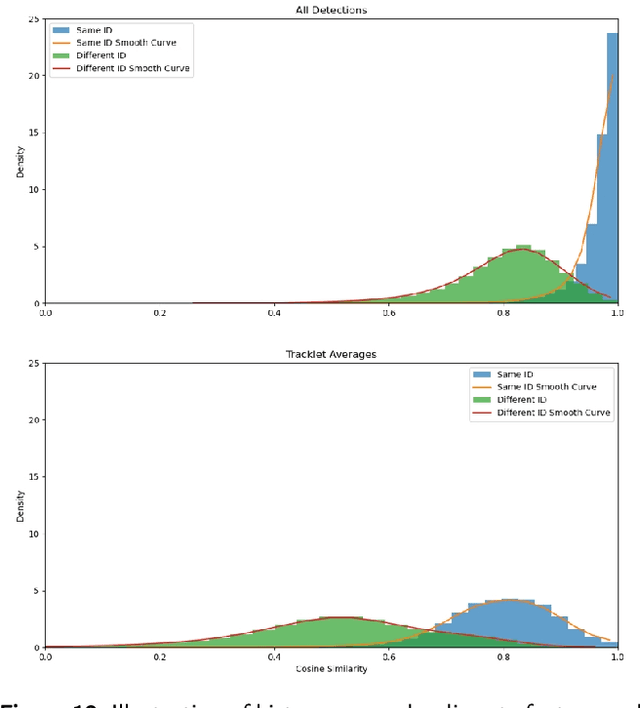

Abstract:Tracking specific targets, such as pedestrians and vehicles, has been the focus of recent vision-based multitarget tracking studies. However, in some real-world scenarios, unseen categories often challenge existing methods due to low-confidence detections, weak motion and appearance constraints, and long-term occlusions. To address these issues, this article proposes a tracklet-enhanced tracker called Multi-Tracklet Tracking (MTT) that integrates flexible tracklet generation into a multi-tracklet association framework. This framework first adaptively clusters the detection results according to their short-term spatio-temporal correlation into robust tracklets and then estimates the best tracklet partitions using multiple clues, such as location and appearance over time, to mitigate error propagation in long-term association. Finally, extensive experiments on the benchmark for generic multiple object tracking demonstrate the competitiveness of the proposed framework.
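As a rough illustration of grouping detections into short tracklets by spatio-temporal proximity, the sketch below greedily links boxes in consecutive frames when their overlap is high. This is a deliberately simple stand-in, not the adaptive clustering used by MTT; the box format `(x1, y1, x2, y2)`, the fixed `iou_threshold`, and the function names are all assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def build_tracklets(frames, iou_threshold=0.5):
    """Greedily link boxes in consecutive frames into short tracklets.

    frames: list of per-frame box lists.
    Returns a list of tracklets, each a list of (frame_index, box) pairs.
    """
    tracklets = []
    active = []  # indices of tracklets that were extended in the previous frame
    for t, boxes in enumerate(frames):
        unmatched = list(range(len(boxes)))
        next_active = []
        for idx in active:
            last_box = tracklets[idx][-1][1]
            # Pick the remaining box that overlaps most with the tracklet tail.
            best = max(unmatched, key=lambda j: iou(last_box, boxes[j]), default=None)
            if best is not None and iou(last_box, boxes[best]) >= iou_threshold:
                tracklets[idx].append((t, boxes[best]))
                unmatched.remove(best)
                next_active.append(idx)
        # Every box left over starts a new tracklet.
        for j in unmatched:
            tracklets.append([(t, boxes[j])])
            next_active.append(len(tracklets) - 1)
        active = next_active
    return tracklets

# Hypothetical detections: two objects, the second disappears in frame 2.
frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(1, 0, 11, 10), (51, 50, 61, 60)],
    [(2, 0, 12, 10)],
]
tracklets = build_tracklets(frames)
```

A tracklet that receives no match simply ends, so a long occlusion splits one object into several tracklets; re-joining them is the job of a later long-term association stage such as the one the abstract describes.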

InstructionBench: An Instructional Video Understanding Benchmark

Apr 07, 2025

Abstract:Despite progress in video large language models (Video-LLMs), research on instructional video understanding, crucial for enhancing access to instructional content, remains insufficient. To address this, we introduce InstructionBench, an Instructional video understanding Benchmark, which challenges models' advanced temporal reasoning within instructional videos characterized by their strict step-by-step flow. Employing GPT-4, we formulate Q&A pairs in open-ended and multiple-choice formats to assess both Coarse-Grained event-level and Fine-Grained object-level reasoning. Our filtering strategies exclude questions answerable purely by common-sense knowledge, focusing on visual perception and analysis when evaluating Video-LLMs. The benchmark finally contains 5k questions across over 700 videos. We evaluate the latest Video-LLMs on our InstructionBench, finding that closed-source models outperform open-source ones. However, even the best model, GPT-4o, achieves only 53.42% accuracy, indicating significant gaps in temporal reasoning. To advance the field, we also develop a comprehensive instructional video dataset with over 19k Q&A pairs from nearly 2.5k videos, using an automated data generation framework, thereby enriching the community's research resources.

Refining CLIP's Spatial Awareness: A Visual-Centric Perspective

Apr 03, 2025

Abstract:Contrastive Language-Image Pre-training (CLIP) excels in global alignment with language but exhibits limited sensitivity to spatial information, leading to strong performance in zero-shot classification tasks but underperformance in tasks requiring precise spatial understanding. Recent approaches have introduced Region-Language Alignment (RLA) to enhance CLIP's performance in dense multimodal tasks by aligning regional visual representations with corresponding text inputs. However, we find that CLIP ViTs fine-tuned with RLA suffer from notable loss in spatial awareness, which is crucial for dense prediction tasks. To address this, we propose the Spatial Correlation Distillation (SCD) framework, which preserves CLIP's inherent spatial structure and mitigates the above degradation. To further enhance spatial correlations, we introduce a lightweight Refiner that extracts refined correlations directly from CLIP before feeding them into SCD, based on an intriguing finding that CLIP naturally captures high-quality dense features. Together, these components form a robust distillation framework that enables CLIP ViTs to integrate both visual-language and visual-centric improvements, achieving state-of-the-art results across various open-vocabulary dense prediction benchmarks.

Generating Multimodal Driving Scenes via Next-Scene Prediction

Mar 19, 2025

Abstract:Generative models in Autonomous Driving (AD) enable diverse scene creation, yet existing methods fall short by only capturing a limited range of modalities, restricting the capability of generating controllable scenes for comprehensive evaluation of AD systems. In this paper, we introduce a multimodal generation framework that incorporates four major data modalities, including a novel addition of map modality. With tokenized modalities, our scene sequence generation framework autoregressively predicts each scene while managing computational demands through a two-stage approach. The Temporal AutoRegressive (TAR) component captures inter-frame dynamics for each modality while the Ordered AutoRegressive (OAR) component aligns modalities within each scene by sequentially predicting tokens in a fixed order. To maintain coherence between the map and ego-action modalities, we introduce the Action-aware Map Alignment (AMA) module, which applies a transformation based on the ego-action. Our framework effectively generates complex, realistic driving scenes over extended sequences, ensuring multimodal consistency and offering fine-grained control over scene elements.

Towards Self-Improving Systematic Cognition for Next-Generation Foundation MLLMs

Mar 16, 2025

Abstract:Despite their impressive capabilities, Multimodal Large Language Models (MLLMs) face challenges with fine-grained perception and complex reasoning. Prevalent pre-training approaches focus on enhancing perception by training on high-quality image captions due to the extremely high cost of collecting chain-of-thought (CoT) reasoning data for improving reasoning. While leveraging advanced MLLMs for caption generation enhances scalability, the outputs often lack comprehensiveness and accuracy. In this paper, we introduce Self-Improving Cognition (SIcog), a self-learning framework designed to construct next-generation foundation MLLMs by enhancing their systematic cognitive capabilities through multimodal pre-training with self-generated data. Specifically, we propose chain-of-description, an approach that improves an MLLM's systematic perception by enabling step-by-step visual understanding, ensuring greater comprehensiveness and accuracy. Additionally, we adopt a structured CoT reasoning technique to enable MLLMs to integrate in-depth multimodal reasoning. To construct a next-generation foundation MLLM with self-improved cognition, SIcog first equips an MLLM with systematic perception and reasoning abilities using minimal external annotations. The enhanced models then generate detailed captions and CoT reasoning data, which are further curated through self-consistency. This curated data is ultimately used to refine the MLLM during multimodal pre-training, facilitating next-generation foundation MLLM construction. Extensive experiments on both low- and high-resolution MLLMs across diverse benchmarks demonstrate that, with merely 213K self-generated pre-training samples, SIcog produces next-generation foundation MLLMs with significantly improved cognition, achieving benchmark-leading performance compared to prevalent pre-training approaches.

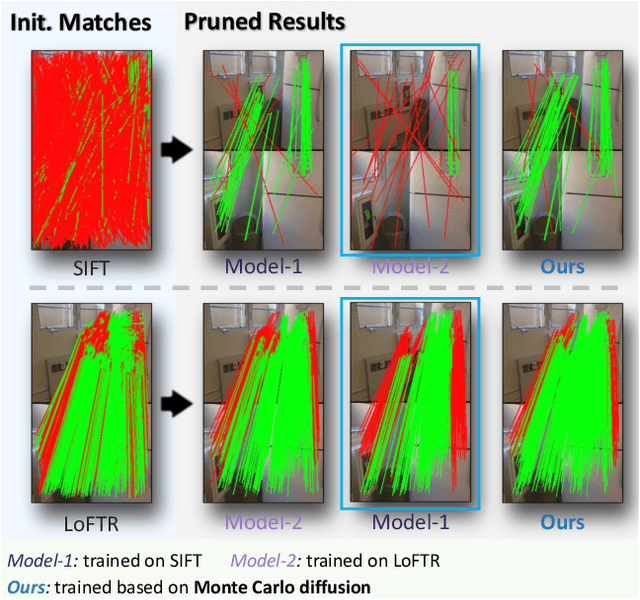

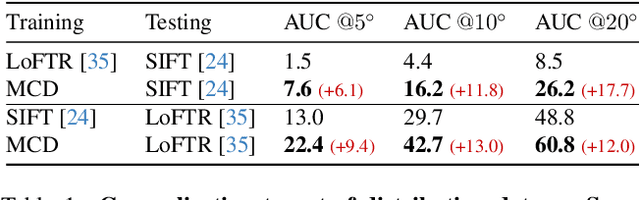

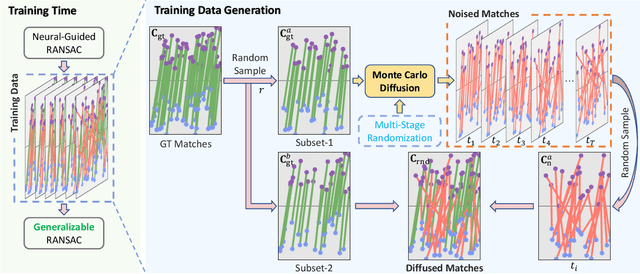

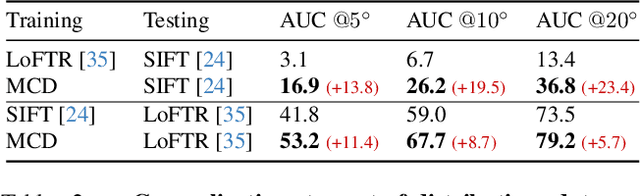

Monte Carlo Diffusion for Generalizable Learning-Based RANSAC

Mar 12, 2025

Abstract:Random Sample Consensus (RANSAC) is a fundamental approach for robustly estimating parametric models from noisy data. Existing learning-based RANSAC methods utilize deep learning to enhance the robustness of RANSAC against outliers. However, these approaches are trained and tested on the data generated by the same algorithms, leading to limited generalization to out-of-distribution data during inference. Therefore, in this paper, we introduce a novel diffusion-based paradigm that progressively injects noise into ground-truth data, simulating the noisy conditions for training learning-based RANSAC. To enhance data diversity, we incorporate Monte Carlo sampling into the diffusion paradigm, approximating diverse data distributions by introducing different types of randomness at multiple stages. We evaluate our approach in the context of feature matching through comprehensive experiments on the ScanNet and MegaDepth datasets. The experimental results demonstrate that our Monte Carlo diffusion mechanism significantly improves the generalization ability of learning-based RANSAC. We also develop extensive ablation studies that highlight the effectiveness of key components in our framework.
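As background for the classical algorithm this abstract builds on, here is a minimal sketch of plain RANSAC fitting a 2D line to data with outliers. It shows only the hand-crafted sample-and-score loop, not the learning-based variant or the Monte Carlo diffusion training scheme from the paper; the function name, the vertical-residual inlier test, and the default parameters are assumptions.

```python
import random

def ransac_line(points, iterations=200, threshold=0.5, seed=0):
    """Fit y = m*x + b by repeatedly sampling two points and counting inliers."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    best_model, best_inliers = None, []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # skip vertical pairs; this sketch only fits y = m*x + b
        m = (y2 - y1) / (x2 - x1)
        b = y1 - m * x1
        # A point is an inlier if its vertical residual is within the threshold.
        inliers = [(x, y) for x, y in points if abs(y - (m * x + b)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (m, b), inliers
    return best_model, best_inliers

# Hypothetical data: 20 points on y = 2x + 1 plus 5 gross outliers.
inlier_points = [(float(x), 2.0 * x + 1.0) for x in range(20)]
outlier_points = [(3.0, 40.0), (7.0, -20.0), (12.0, 90.0), (15.0, -5.0), (18.0, 0.0)]
model, inliers = ransac_line(inlier_points + outlier_points)
```

The uniform random sampling in this loop is the part that learning-based RANSAC methods replace with a learned guidance signal, so their behavior depends on how closely the training noise resembles the noise seen at inference.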

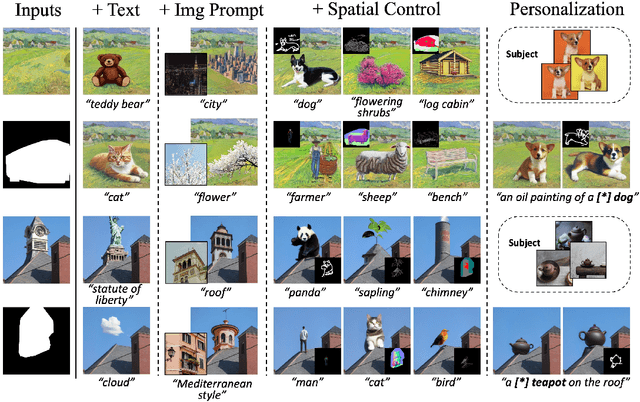

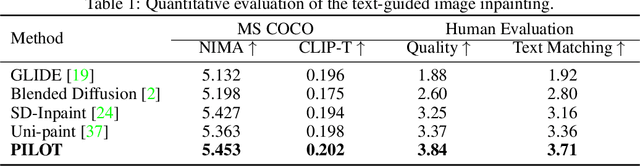

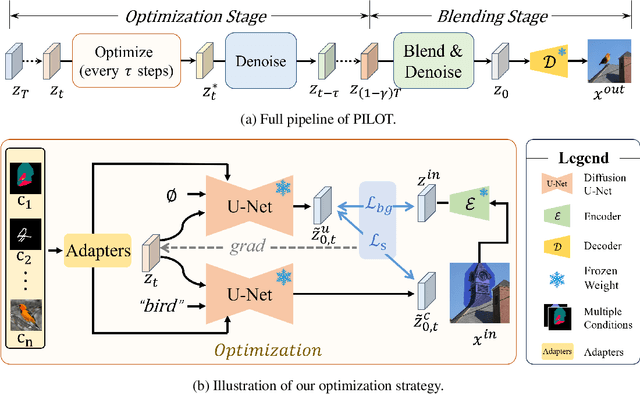

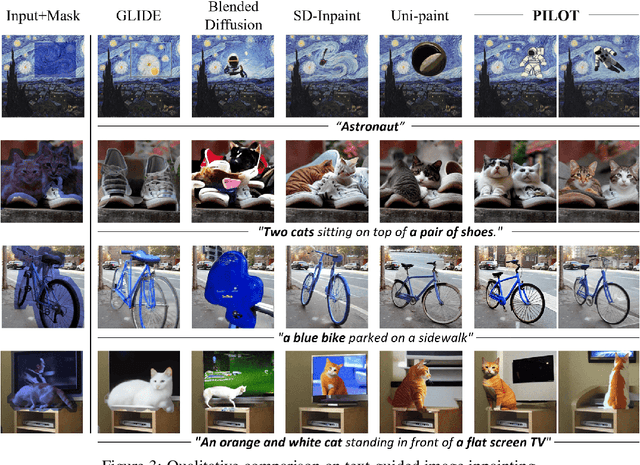

Coherent and Multi-modality Image Inpainting via Latent Space Optimization

Jul 10, 2024

Abstract:With the advancements in denoising diffusion probabilistic models (DDPMs), image inpainting has significantly evolved from merely filling information based on nearby regions to generating content conditioned on various prompts such as text, exemplar images, and sketches. However, existing methods, such as model fine-tuning and simple concatenation of latent vectors, often result in generation failures due to overfitting and inconsistency between the inpainted region and the background. In this paper, we argue that the current large diffusion models are sufficiently powerful to generate realistic images without further tuning. Hence, we introduce PILOT (inPainting vIa Latent OpTimization), an optimization approach grounded on a novel semantic centralization and background preservation loss. Our method searches latent spaces capable of generating inpainted regions that exhibit high fidelity to user-provided prompts while maintaining coherence with the background. Furthermore, we propose a strategy to balance optimization expense and image quality, significantly enhancing generation efficiency. Our method seamlessly integrates with any pre-trained model, including ControlNet and DreamBooth, making it suitable for deployment in multi-modal editing tools. Our qualitative and quantitative evaluations demonstrate that PILOT outperforms existing approaches by generating more coherent, diverse, and faithful inpainted regions in response to provided prompts.
