Ruben Vera-Rodriguez

Is Visual Realism Enough? Evaluating Gait Biometric Fidelity in Generative AI Human Animation

Dec 22, 2025

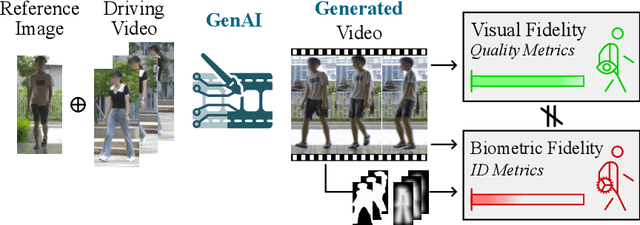

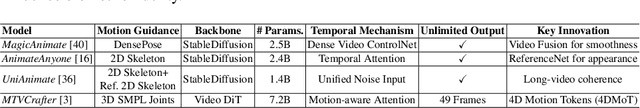

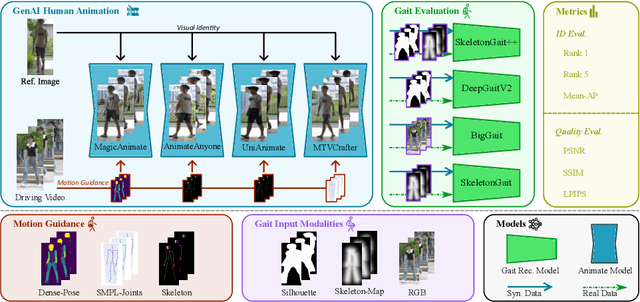

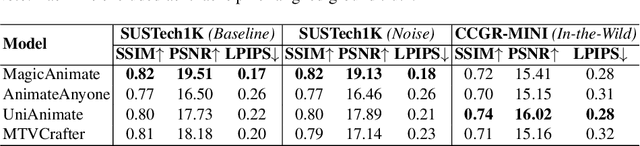

Abstract:Generative AI (GenAI) models have revolutionized animation, enabling the synthesis of humans and motion patterns with remarkable visual fidelity. However, generating truly realistic human animation remains a formidable challenge, where even minor inconsistencies can make a subject appear unnatural. This limitation is particularly critical when AI-generated videos are evaluated for behavioral biometrics, where subtle motion cues that define identity are easily lost or distorted. The present study investigates whether state-of-the-art GenAI human animation models can preserve the subtle spatio-temporal details needed for person identification through gait biometrics. Specifically, we evaluate four different GenAI models across two primary evaluation tasks to assess their ability to i) restore gait patterns from reference videos under varying conditions of complexity, and ii) transfer these gait patterns to different visual identities. Our results show that while visual quality is mostly high, biometric fidelity remains low in tasks focusing on identification, suggesting that current GenAI models struggle to disentangle identity from motion. Furthermore, through an identity transfer task, we expose a fundamental flaw in appearance-based gait recognition: when texture is disentangled from motion, identification collapses, proving current GenAI models rely on visual attributes rather than temporal dynamics.

Is It Really You? Exploring Biometric Verification Scenarios in Photorealistic Talking-Head Avatar Videos

Aug 01, 2025

Abstract:Photorealistic talking-head avatars are becoming increasingly common in virtual meetings, gaming, and social platforms. These avatars allow for more immersive communication, but they also introduce serious security risks. One emerging threat is impersonation: an attacker can steal a user's avatar, preserving their appearance and voice, which makes fraudulent use nearly impossible to detect by sight or sound alone. In this paper, we explore the challenge of biometric verification in such avatar-mediated scenarios. Our main question is whether an individual's facial motion patterns can serve as reliable behavioral biometrics to verify their identity when the avatar's visual appearance is a facsimile of its owner. To answer this question, we introduce a new dataset of realistic avatar videos created using a state-of-the-art one-shot avatar generation model, GAGAvatar, with genuine and impostor avatar videos. We also propose a lightweight, explainable spatio-temporal Graph Convolutional Network architecture with temporal attention pooling that uses only facial landmarks to model dynamic facial gestures. Experimental results demonstrate that facial motion cues enable meaningful identity verification with AUC values approaching 80%. The proposed benchmark and biometric system are available to the research community in order to bring attention to the urgent need for more advanced behavioral biometric defenses in avatar-based communication systems.

Exploring a Patch-Wise Approach for Privacy-Preserving Fake ID Detection

Apr 10, 2025

Abstract:In an increasingly digitalized world, verifying the authenticity of ID documents has become a critical challenge for real-life applications such as digital banking, crypto-exchanges, renting, etc. This study focuses on the topic of fake ID detection, covering several limitations in the field. In particular, no publicly available data from real ID documents exists, and most studies rely on proprietary in-house databases that are not available due to privacy reasons. In order to shed some light on this critical challenge, which makes it difficult to advance in the field, we explore a trade-off between privacy (i.e., amount of sensitive data available) and performance, proposing a novel patch-wise approach for privacy-preserving fake ID detection. Our proposed approach explores how privacy can be enhanced through: i) two levels of anonymization for an ID document (i.e., fully- and pseudo-anonymized), and ii) different patch size configurations, varying the amount of sensitive data visible in the patch image. Also, state-of-the-art methods such as Vision Transformers and Foundation Models are considered in the analysis. The experimental framework shows that, on an unseen database (DLC-2021), our proposal achieves 13.91% and 0% EERs at patch and ID document level, showing a good generalization to other databases. In addition to this exploration, another key contribution of our study is the release of the first publicly available database that contains 48,400 patches from both real and fake ID documents, along with the experimental framework and models, which will be available on our GitHub.
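To make the patch-level versus document-level distinction concrete, here is a minimal sketch of how an ID image might be split into patches and how per-patch scores could be combined into one document score. The function names `extract_patches` and `document_score`, the non-overlapping grid, and the mean-based fusion are all assumptions for illustration; the abstract does not specify how patches are sampled or how patch scores are aggregated.

```python
import numpy as np


def extract_patches(image, patch_size):
    """Split an H x W (x C) image into non-overlapping square patches.

    Hypothetical helper: a regular grid is assumed here; border pixels that
    do not fill a whole patch are discarded.
    """
    height, width = image.shape[:2]
    patches = []
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            patches.append(image[top:top + patch_size, left:left + patch_size])
    return patches


def document_score(patch_scores):
    """Fuse per-patch fake/real scores into one document-level score.

    Assumption: simple averaging. Other fusion rules (max, voting) are
    equally plausible.
    """
    return float(np.mean(patch_scores))


# Usage: a dummy 128 x 192 RGB image yields a 2 x 3 grid of 64-pixel patches.
dummy_id = np.zeros((128, 192, 3), dtype=np.uint8)
patches = extract_patches(dummy_id, patch_size=64)
fused = document_score([0.2, 0.4, 0.6])
```

Smaller patches expose less of the document's sensitive content per sample, which is the privacy side of the trade-off described above.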

MINT-Demo: Membership Inference Test Demonstrator

Mar 11, 2025

Abstract:We present the Membership Inference Test (MINT) Demonstrator to emphasize the need for more transparent machine learning training processes. MINT is a technique for experimentally determining whether certain data has been used during the training of machine learning models. We conduct experiments with popular face recognition models and 5 public databases containing over 22M images. Promising results of up to 89% accuracy are achieved, suggesting that it is possible to recognize whether an AI model has been trained with specific data. Finally, we present a MINT platform as a demonstrator of this technology, aimed at promoting transparency in AI training.

KVC-onGoing: Keystroke Verification Challenge

Dec 29, 2024

Abstract:This article presents the Keystroke Verification Challenge - onGoing (KVC-onGoing), on which researchers can easily benchmark their systems in a common platform using large-scale public databases, the Aalto University Keystroke databases, and a standard experimental protocol. The keystroke data consist of tweet-long sequences of variable transcript text from over 185,000 subjects, acquired through desktop and mobile keyboards simulating real-life conditions. The results on the evaluation set of KVC-onGoing have proved the high discriminative power of keystroke dynamics, reaching values as low as 3.33% Equal Error Rate (EER) and 11.96% False Non-Match Rate (FNMR) @1% False Match Rate (FMR) in the desktop scenario, and 3.61% EER and 17.44% FNMR @1% FMR in the mobile scenario, significantly improving previous state-of-the-art results. Concerning demographic fairness, the analyzed scores reflect the subjects' age and gender to various extents, not negligible in a few cases. The framework runs on CodaLab.
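For readers unfamiliar with the metrics quoted above, the following is a minimal sketch of how EER and FNMR at a fixed FMR can be computed from lists of genuine and impostor comparison scores. It assumes similarity scores (higher means more likely the same subject) and a simple threshold sweep; the function names are hypothetical and this is not the challenge's official evaluation code.

```python
import numpy as np


def error_rates(genuine, impostor):
    """Sweep thresholds and return (thresholds, FMR, FNMR).

    A comparison is accepted when score >= threshold.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    # Every observed score is a candidate threshold; +inf rejects everything.
    thresholds = np.append(np.unique(np.concatenate([genuine, impostor])), np.inf)
    fmr = np.array([(impostor >= t).mean() for t in thresholds])
    fnmr = np.array([(genuine < t).mean() for t in thresholds])
    return thresholds, fmr, fnmr


def equal_error_rate(genuine, impostor):
    """Approximate the EER as the point where FMR and FNMR are closest."""
    _, fmr, fnmr = error_rates(genuine, impostor)
    idx = np.argmin(np.abs(fmr - fnmr))
    return float((fmr[idx] + fnmr[idx]) / 2)


def fnmr_at_fmr(genuine, impostor, target_fmr=0.01):
    """Lowest FNMR among thresholds whose FMR does not exceed target_fmr."""
    _, fmr, fnmr = error_rates(genuine, impostor)
    return float(fnmr[fmr <= target_fmr].min())


# Usage with toy scores (illustrative values only, not challenge data).
genuine_scores = [0.9, 0.8, 0.3, 0.7]
impostor_scores = [0.1, 0.2, 0.75, 0.4]
eer = equal_error_rate(genuine_scores, impostor_scores)
fnmr_1pct = fnmr_at_fmr(genuine_scores, impostor_scores, target_fmr=0.01)
```

FNMR @1% FMR is typically the stricter operating point: it reports how many genuine attempts are rejected once the threshold is tight enough to let through at most 1% of impostors.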

Second FRCSyn-onGoing: Winning Solutions and Post-Challenge Analysis to Improve Face Recognition with Synthetic Data

Dec 02, 2024

Abstract:Synthetic data is gaining increasing popularity for face recognition technologies, mainly due to the privacy concerns and challenges associated with obtaining real data, including diverse scenarios, quality, and demographic groups, among others. It also offers some advantages over real data, such as the large amount of data that can be generated or the ability to customize it to adapt to specific problem-solving needs. To effectively use such data, face recognition models should also be specifically designed to exploit synthetic data to its fullest potential. In order to promote the proposal of novel Generative AI methods and synthetic data, and investigate the application of synthetic data to better train face recognition systems, we introduce the 2nd FRCSyn-onGoing challenge, based on the 2nd Face Recognition Challenge in the Era of Synthetic Data (FRCSyn), originally launched at CVPR 2024. This is an ongoing challenge that provides researchers with an accessible platform to benchmark i) the proposal of novel Generative AI methods and synthetic data, and ii) novel face recognition systems that are specifically proposed to take advantage of synthetic data. We focus on exploring the use of synthetic data both individually and in combination with real data to solve current challenges in face recognition such as demographic bias, domain adaptation, and performance constraints in demanding situations, such as age disparities between training and testing, changes in the pose, or occlusions. Very interesting findings are obtained in this second edition, including a direct comparison with the first one, in which synthetic databases were restricted to DCFace and GANDiffFace.

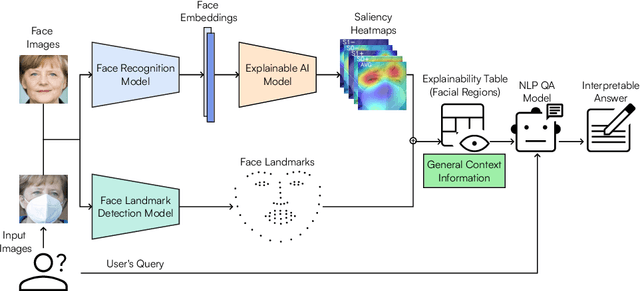

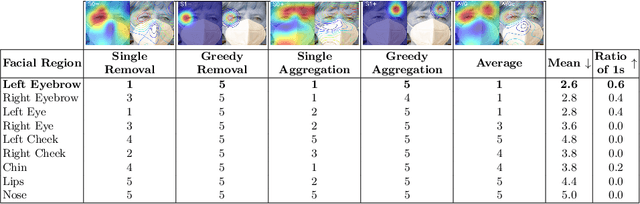

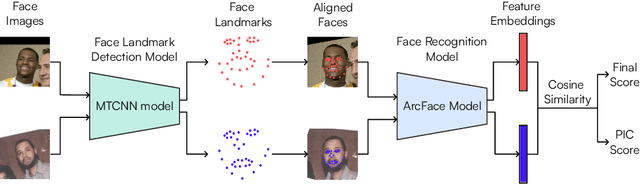

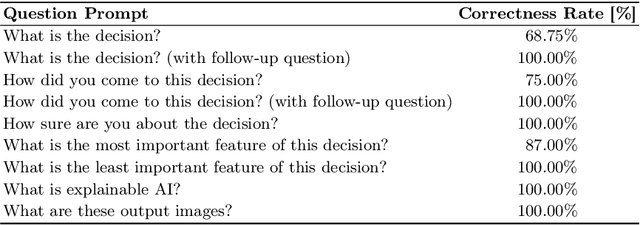

From Pixels to Words: Leveraging Explainability in Face Recognition through Interactive Natural Language Processing

Sep 24, 2024

Abstract:Face Recognition (FR) has advanced significantly with the development of deep learning, achieving high accuracy in several applications. However, the lack of interpretability of these systems raises concerns about their accountability, fairness, and reliability. In the present study, we propose an interactive framework to enhance the explainability of FR models by combining model-agnostic Explainable Artificial Intelligence (XAI) and Natural Language Processing (NLP) techniques. The proposed framework is able to accurately answer various questions of the user through an interactive chatbot. In particular, the explanations generated by our proposed method are in the form of natural language text and visual representations, which for example can describe how different facial regions contribute to the similarity measure between two faces. This is achieved through the automatic analysis of the output's saliency heatmaps of the face images and a BERT question-answering model, providing users with an interface that facilitates a comprehensive understanding of the FR decisions. The proposed approach is interactive, allowing the users to ask questions to get more precise information based on the user's background knowledge. More importantly, in contrast to previous studies, our solution does not decrease the face recognition performance. We demonstrate the effectiveness of the method through different experiments, highlighting its potential to make FR systems more interpretable and user-friendly, especially in sensitive applications where decision-making transparency is crucial.

Personalized Weight Loss Management through Wearable Devices and Artificial Intelligence

Sep 13, 2024

Abstract:Early detection of chronic and Non-Communicable Diseases (NCDs) is crucial for effective treatment during the initial stages. This study explores the application of wearable devices and Artificial Intelligence (AI) in order to predict weight loss changes in overweight and obese individuals. Using wearable data from a 1-month trial involving around 100 subjects from the AI4FoodDB database, including biomarkers, vital signs, and behavioral data, we identify key differences between those achieving weight loss (>= 2% of their initial weight) and those who do not. Feature selection techniques and classification algorithms reveal promising results, with the Gradient Boosting classifier achieving 84.44% Area Under the Curve (AUC). The integration of multiple data sources (e.g., vital signs, physical and sleep activity, etc.) enhances performance, suggesting the potential of wearable devices and AI in personalized healthcare.

Second Edition FRCSyn Challenge at CVPR 2024: Face Recognition Challenge in the Era of Synthetic Data

Apr 16, 2024

Abstract:Synthetic data is gaining increasing relevance for training machine learning models. This is mainly motivated by several factors such as the lack of real data and intra-class variability, time and errors produced in manual labeling, and in some cases privacy concerns, among others. This paper presents an overview of the 2nd edition of the Face Recognition Challenge in the Era of Synthetic Data (FRCSyn) organized at CVPR 2024. FRCSyn aims to investigate the use of synthetic data in face recognition to address current technological limitations, including data privacy concerns, demographic biases, generalization to novel scenarios, and performance constraints in challenging situations such as aging, pose variations, and occlusions. Unlike the 1st edition, in which only synthetic data from the DCFace and GANDiffFace methods was allowed to train face recognition systems, in this 2nd edition we propose new sub-tasks that allow participants to explore novel face generative methods. The outcomes of the 2nd FRCSyn Challenge, along with the proposed experimental protocol and benchmarking, contribute significantly to the application of synthetic data to face recognition.

* arXiv admin note: text overlap with arXiv:2311.10476

SDFR: Synthetic Data for Face Recognition Competition

Apr 09, 2024

Abstract:Large-scale face recognition datasets are collected by crawling the Internet and without individuals' consent, raising legal, ethical, and privacy concerns. With recent advances in generative models, several works have proposed generating synthetic face recognition datasets to mitigate concerns about web-crawled face recognition datasets. This paper presents the summary of the Synthetic Data for Face Recognition (SDFR) Competition held in conjunction with the 18th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2024) and established to investigate the use of synthetic data for training face recognition models. The SDFR competition was split into two tasks, allowing participants to train face recognition systems using new synthetic datasets and/or existing ones. In the first task, the face recognition backbone was fixed and the dataset size was limited, while the second task provided almost complete freedom on the model backbone, the dataset, and the training pipeline. The submitted models were trained on existing and also new synthetic datasets and used clever methods to improve training with synthetic data. The submissions were evaluated and ranked on a diverse set of seven benchmarking datasets. The paper gives an overview of the submitted face recognition models and reports achieved performance compared to baseline models trained on real and synthetic datasets. Furthermore, the evaluation of submissions is extended to bias assessment across different demographic groups. Lastly, an outlook on the current state of the research in training face recognition models using synthetic data is presented, and existing problems as well as potential future directions are also discussed.
