Guido Borghi

GazeD: Context-Aware Diffusion for Accurate 3D Gaze Estimation

Jan 19, 2026

Abstract:We introduce GazeD, a new 3D gaze estimation method that jointly provides 3D gaze and human pose from a single RGB image. Leveraging the ability of diffusion models to deal with uncertainty, it generates multiple plausible 3D gaze and pose hypotheses based on the 2D context information extracted from the input image. Specifically, we condition the denoising process on the 2D pose, the surroundings of the subject, and the context of the scene. With GazeD we also introduce a novel way of representing the 3D gaze by positioning it as an additional body joint at a fixed distance from the eyes. The rationale is that the gaze is usually closely related to the pose, and thus it can benefit from being jointly denoised during the diffusion process. Evaluations across three benchmark datasets demonstrate that GazeD achieves state-of-the-art performance in 3D gaze estimation, even surpassing methods that rely on temporal information. Project details will be available at https://aimagelab.ing.unimore.it/go/gazed.
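The gaze-as-joint representation mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the use of a single eye-center point, and the default distance of 1.0 are all assumptions made for illustration.

```python
import math


def gaze_to_joint(eye_center, gaze_dir, distance=1.0):
    """Place a hypothetical 'gaze joint' at a fixed distance from the eyes.

    eye_center: (x, y, z) position of the eyes (assumed to be one point).
    gaze_dir:   (x, y, z) gaze direction, not necessarily unit length.
    distance:   fixed offset along the gaze direction (assumed value).
    """
    norm = math.sqrt(sum(c * c for c in gaze_dir))
    if norm == 0.0:
        raise ValueError("gaze direction must be non-zero")
    return tuple(e + distance * g / norm for e, g in zip(eye_center, gaze_dir))


def joint_to_gaze(eye_center, gaze_joint):
    """Recover the unit gaze direction from the eye center and the gaze joint."""
    diff = [j - e for j, e in zip(gaze_joint, eye_center)]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0.0:
        raise ValueError("gaze joint coincides with the eye center")
    return tuple(d / norm for d in diff)


# Usage: encode a gaze direction as an extra joint, then decode it again.
joint = gaze_to_joint((0.0, 1.6, 0.0), (0.0, 0.0, 2.0), distance=0.5)
direction = joint_to_gaze((0.0, 1.6, 0.0), joint)
```

Because the gaze becomes one more 3D point, it can be stacked with the other body joints and processed by the same model, which is the motivation the abstract gives for denoising gaze and pose jointly.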

BRUM: Robust 3D Vehicle Reconstruction from 360 Sparse Images

Jul 16, 2025

Abstract:Accurate 3D reconstruction of vehicles is vital for applications such as vehicle inspection, predictive maintenance, and urban planning. Existing methods like Neural Radiance Fields and Gaussian Splatting have shown impressive results but remain limited by their reliance on dense input views, which hinders real-world applicability. This paper addresses the challenge of reconstructing vehicles from sparse-view inputs, leveraging depth maps and a robust pose estimation architecture to synthesize novel views and augment training data. Specifically, we enhance Gaussian Splatting by integrating a selective photometric loss, applied only to high-confidence pixels, and replacing standard Structure-from-Motion pipelines with the DUSt3R architecture to improve camera pose estimation. Furthermore, we present a novel dataset featuring both synthetic and real-world public transportation vehicles, enabling extensive evaluation of our approach. Experimental results demonstrate state-of-the-art performance across multiple benchmarks, showcasing the method's ability to achieve high-quality reconstructions even under constrained input conditions.
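The idea of a photometric loss restricted to high-confidence pixels can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not specify the error term, the confidence source, or the threshold, so the L1 difference, the per-pixel confidence list, and the 0.5 cutoff below are hypothetical choices.

```python
def selective_photometric_loss(rendered, target, confidence, threshold=0.5):
    """Mean absolute error over pixels whose confidence passes a threshold.

    rendered, target: flat lists of pixel intensities in [0, 1].
    confidence:       flat list of per-pixel confidence scores (assumed given).
    threshold:        assumed cutoff; pixels below it are ignored.
    """
    if not (len(rendered) == len(target) == len(confidence)):
        raise ValueError("inputs must have the same length")
    total = 0.0
    count = 0
    for r, t, c in zip(rendered, target, confidence):
        if c >= threshold:
            total += abs(r - t)
            count += 1
    # With no confident pixels there is nothing to supervise.
    return total / count if count else 0.0


# Usage: the second pixel has a large error but low confidence, so it is skipped.
loss = selective_photometric_loss(
    rendered=[0.2, 0.9, 0.5],
    target=[0.0, 0.0, 0.5],
    confidence=[0.9, 0.1, 0.8],
)
```

Masking the loss this way keeps unreliable pixels, for example in synthesized views, from dominating the gradient.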

TakuNet: an Energy-Efficient CNN for Real-Time Inference on Embedded UAV systems in Emergency Response Scenarios

Jan 10, 2025

Abstract:Designing efficient neural networks for embedded devices is a critical challenge, particularly in applications requiring real-time performance, such as aerial imaging with drones and UAVs for emergency responses. In this work, we introduce TakuNet, a novel light-weight architecture which employs techniques such as depth-wise convolutions and an early downsampling stem to reduce computational complexity while maintaining high accuracy. It leverages dense connections for fast convergence during training and uses 16-bit floating-point precision for optimization on embedded hardware accelerators. Experimental evaluation on two public datasets shows that TakuNet achieves near-state-of-the-art accuracy in classifying aerial images of emergency situations, despite its minimal parameter count. Real-world tests on embedded devices, namely Jetson Orin Nano and Raspberry Pi, confirm TakuNet's efficiency, achieving more than 650 fps on the 15W Jetson board, making it suitable for real-time AI processing on resource-constrained platforms and advancing the applicability of drones in emergency scenarios. The code and implementation details are publicly released.

Depth-based Privileged Information for Boosting 3D Human Pose Estimation on RGB

Sep 17, 2024

Abstract:Despite the recent advances in computer vision research, estimating the 3D human pose from single RGB images remains a challenging task, as multiple 3D poses can correspond to the same 2D projection on the image. In this context, depth data could help to disambiguate the 2D information by providing additional constraints about the distance between objects in the scene and the camera. Unfortunately, the acquisition of accurate depth data is limited to indoor spaces and usually is tied to specific depth technologies and devices, thus limiting generalization capabilities. In this paper, we propose a method able to leverage the benefits of depth information without compromising its broader applicability and adaptability in a predominantly RGB-camera-centric landscape. Our approach consists of a heatmap-based 3D pose estimator that, leveraging the paradigm of Privileged Information, is able to hallucinate depth information from the RGB frames given at inference time. More precisely, depth information is used exclusively during training by enforcing our RGB-based hallucination network to learn similar features to a backbone pre-trained only on depth data. This approach proves to be effective even when dealing with limited and small datasets. Experimental results reveal that the paradigm of Privileged Information significantly enhances the model's performance, enabling efficient extraction of depth information by using only RGB images.
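The training signal described above, where an RGB network is pushed to produce features similar to those of a depth-pretrained backbone, can be sketched as a feature-matching loss. The mean squared error, the function name, and the flat feature vectors are assumptions for illustration; the abstract does not state which distance the authors use.

```python
def feature_matching_loss(rgb_features, depth_features):
    """Mean squared error between hallucinated and depth-backbone features.

    rgb_features:   features from the RGB hallucination network (trainable).
    depth_features: features from the depth-pretrained backbone (treated as
                    fixed targets; used only at training time).
    """
    if len(rgb_features) != len(depth_features) or not rgb_features:
        raise ValueError("feature vectors must be non-empty and equal in length")
    squared = [(r - d) ** 2 for r, d in zip(rgb_features, depth_features)]
    return sum(squared) / len(squared)


# Usage: the loss shrinks as the RGB features approach the depth features.
far = feature_matching_loss([0.0, 0.0], [1.0, 1.0])
near = feature_matching_loss([0.9, 1.0], [1.0, 1.0])
```

At inference time only the RGB branch would be needed, since the depth features serve purely as a training target.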

ONOT: a High-Quality ICAO-compliant Synthetic Mugshot Dataset

Apr 17, 2024

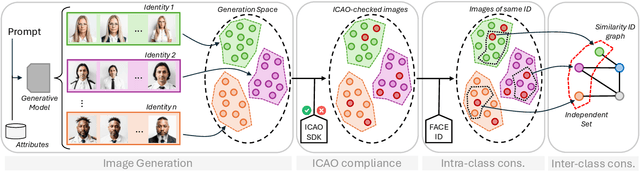

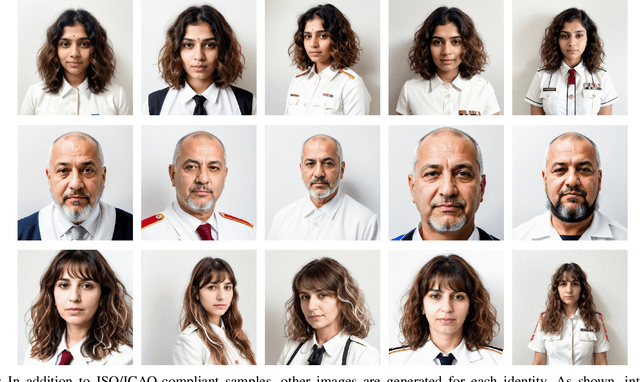

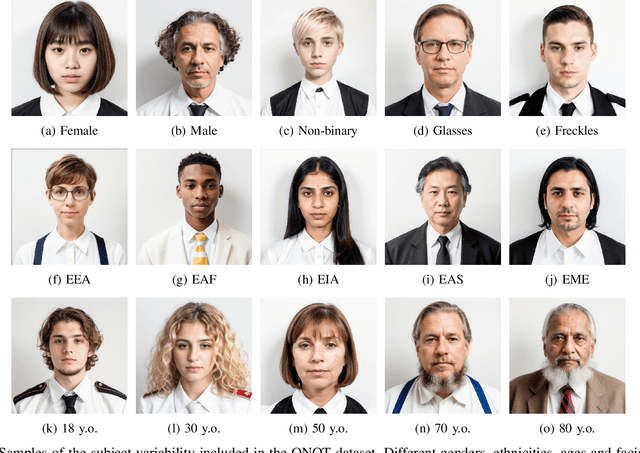

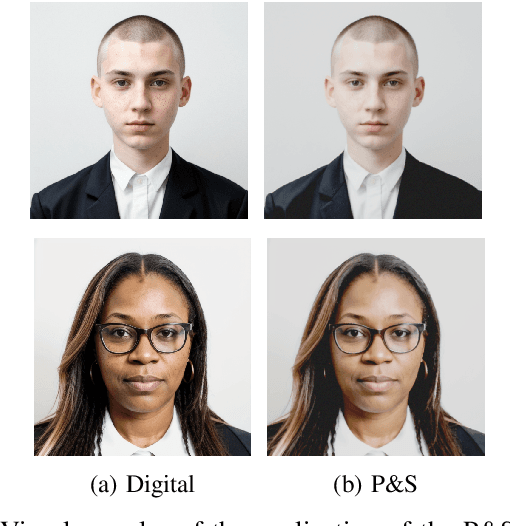

Abstract:Nowadays, state-of-the-art AI-based generative models represent a viable solution to overcome privacy issues and biases in the collection of datasets containing personal information, such as faces. Following this intuition, in this paper we introduce ONOT, a synthetic dataset specifically focused on the generation of high-quality faces in adherence to the requirements of the ISO/IEC 39794-5 standards that, following the guidelines of the International Civil Aviation Organization (ICAO), defines the interchange formats of face images in electronic Machine-Readable Travel Documents (eMRTD). The strictly controlled and varied mugshot images included in ONOT are useful in research fields related to the analysis of face images in eMRTD, such as Morphing Attack Detection and Face Quality Assessment. The dataset is publicly released, in combination with the generation procedure details in order to improve the reproducibility and enable future extensions.

Adversarial Identity Injection for Semantic Face Image Synthesis

Apr 16, 2024

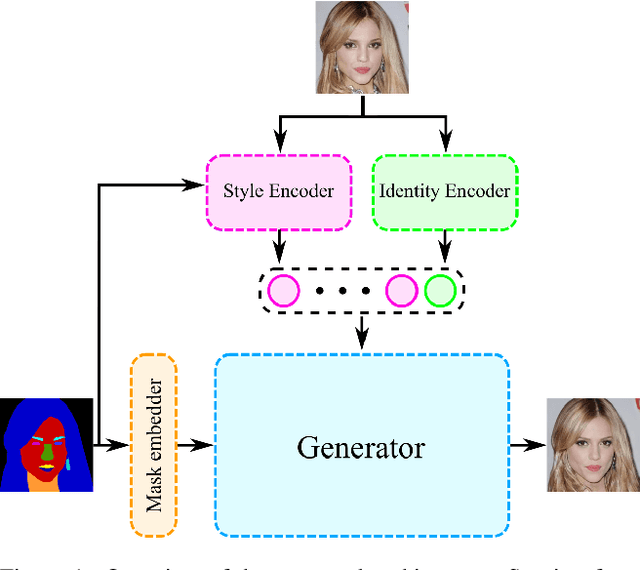

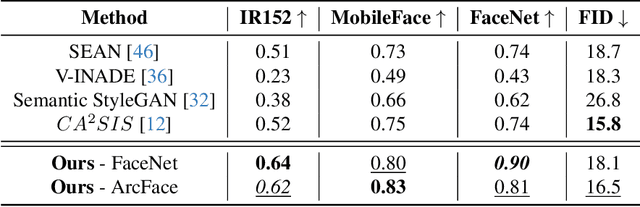

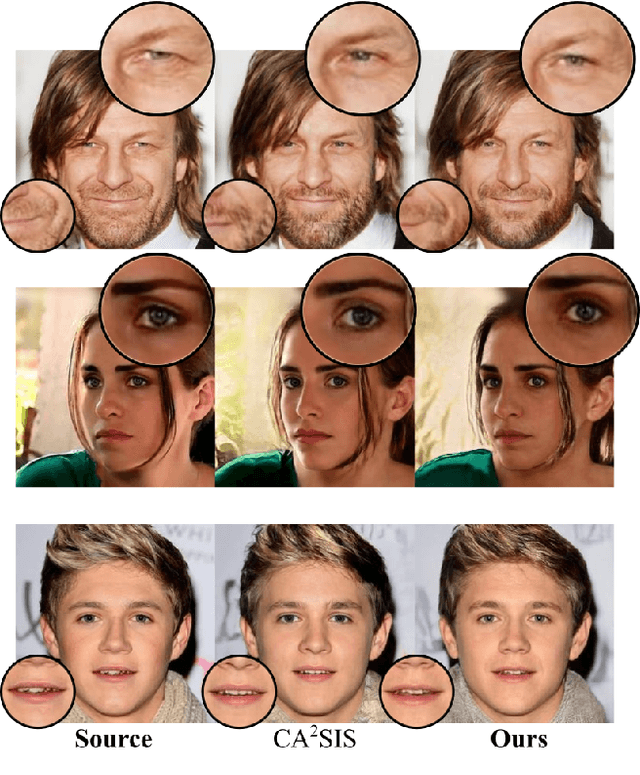

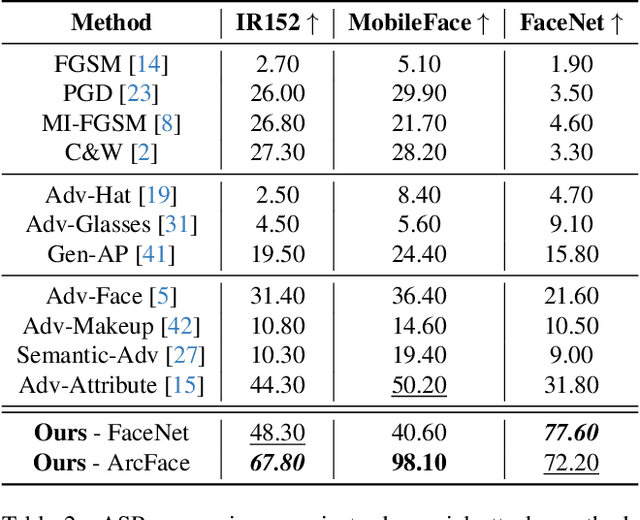

Abstract:Nowadays, deep learning models have reached incredible performance in the task of image generation. Many works in the literature address the task of face generation and editing, with human and automatic systems that struggle to distinguish what's real from generated. While most systems have reached excellent visual generation quality, they still face difficulties in preserving the identity of the starting input subject. Among all the explored techniques, Semantic Image Synthesis (SIS) methods, whose goal is to generate an image conditioned on a semantic segmentation mask, are the most promising, even though preserving the perceived identity of the input subject is not their main concern. Therefore, in this paper, we investigate the problem of identity preservation in face image generation and present an SIS architecture that exploits a cross-attention mechanism to merge identity, style, and semantic features to generate faces whose identities are as similar as possible to the input ones. Experimental results reveal that the proposed method is not only suitable for preserving the identity but is also effective in the face recognition adversarial attack, i.e. hiding a second identity in the generated faces.

Dealing with Subject Similarity in Differential Morphing Attack Detection

Apr 11, 2024

Abstract:The advent of morphing attacks has posed significant security concerns for automated Face Recognition systems, raising the pressing need for robust and effective Morphing Attack Detection (MAD) methods able to effectively address this issue. In this paper, we focus on Differential MAD (D-MAD), where a trusted live capture, usually representing the criminal, is compared with the document image to classify it as morphed or bona fide. We show these approaches based on identity features are effective when the morphed image and the live one are sufficiently diverse; unfortunately, the effectiveness is significantly reduced when the same approaches are applied to look-alike subjects or in all those cases when the similarity between the two compared images is high (e.g. comparison between the morphed image and the accomplice). Therefore, in this paper, we propose ACIdA, a modular D-MAD system, consisting of a module for the attempt type classification, and two modules for the identity and artifacts analysis on input images. Successfully addressing this task would allow broadening the D-MAD applications including, for instance, the document enrollment stage, which currently relies entirely on human evaluation, thus limiting the possibility of releasing ID documents with manipulated images, as well as the automated gates to detect both accomplices and criminals. An extensive cross-dataset experimental evaluation conducted on the introduced scenario shows that ACIdA achieves state-of-the-art results, outperforming literature competitors, while maintaining good performance in traditional D-MAD benchmarks.

V-MAD: Video-based Morphing Attack Detection in Operational Scenarios

Apr 10, 2024

Abstract:In response to the rising threat of the face morphing attack, this paper introduces and explores the potential of Video-based Morphing Attack Detection (V-MAD) systems in real-world operational scenarios. While current morphing attack detection methods primarily focus on a single or a pair of images, V-MAD is based on video sequences, exploiting the video streams often acquired by face verification tools available, for instance, at airport gates. Through this study, we show for the first time the advantages that the availability of multiple probe frames can bring to the morphing attack detection task, especially in scenarios where the quality of probe images is varied and might be affected, for instance, by pose or illumination variations. Experimental results on a real operational database demonstrate that video sequences represent valuable information for increasing the robustness and performance of morphing attack detection systems.

SDFR: Synthetic Data for Face Recognition Competition

Apr 09, 2024

Abstract:Large-scale face recognition datasets are collected by crawling the Internet and without individuals' consent, raising legal, ethical, and privacy concerns. With the recent advances in generative models, several works have proposed generating synthetic face recognition datasets to mitigate concerns in web-crawled face recognition datasets. This paper presents the summary of the Synthetic Data for Face Recognition (SDFR) Competition held in conjunction with the 18th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2024) and established to investigate the use of synthetic data for training face recognition models. The SDFR competition was split into two tasks, allowing participants to train face recognition systems using new synthetic datasets and/or existing ones. In the first task, the face recognition backbone was fixed and the dataset size was limited, while the second task provided almost complete freedom on the model backbone, the dataset, and the training pipeline. The submitted models were trained on existing and also new synthetic datasets and used clever methods to improve training with synthetic data. The submissions were evaluated and ranked on a diverse set of seven benchmarking datasets. The paper gives an overview of the submitted face recognition models and reports achieved performance compared to baseline models trained on real and synthetic datasets. Furthermore, the evaluation of submissions is extended to bias assessment across different demographic groups. Lastly, an outlook on the current state of the research in training face recognition models using synthetic data is presented, and existing problems as well as potential future directions are also discussed.

Enabling On-device Continual Learning with Binary Neural Networks

Jan 18, 2024

Abstract:On-device learning remains a formidable challenge, especially when dealing with resource-constrained devices that have limited computational capabilities. This challenge is primarily rooted in two key issues: first, the memory available on embedded devices is typically insufficient to accommodate the memory-intensive back-propagation algorithm, which often relies on floating-point precision. Second, the development of learning algorithms on models with extreme quantization levels, such as Binary Neural Networks (BNNs), is critical due to the drastic reduction in bit representation. In this study, we propose a solution that combines recent advancements in the field of Continual Learning (CL) and Binary Neural Networks to enable on-device training while maintaining competitive performance. Specifically, our approach leverages binary latent replay (LR) activations and a novel quantization scheme that significantly reduces the number of bits required for gradient computation. The experimental validation demonstrates a significant accuracy improvement in combination with a noticeable reduction in memory requirement, confirming the suitability of our approach in expanding the practical applications of deep learning in real-world scenarios.
