Michael Wang

SSHealth Team, AI for Healthcare Laboratory

A New Workflow for Materials Discovery Bridging the Gap Between Experimental Databases and Graph Neural Networks

Jan 31, 2026

Abstract:Incorporating Machine Learning (ML) into material property prediction has become a crucial step in accelerating materials discovery. A key challenge is the severe lack of training data, as many properties are too complicated to calculate with high-throughput first-principles techniques. To address this, recent research has created experimental databases from information extracted from scientific literature. However, most existing experimental databases do not provide full atomic coordinate information, which prevents them from supporting advanced ML architectures such as Graph Neural Networks (GNNs). In this work, we propose to bridge this gap through an alignment process between experimental databases and Crystallographic Information Files (CIF) from the Inorganic Crystal Structure Database (ICSD). Our approach enables the creation of a database that can fully leverage state-of-the-art model architectures for material property prediction. It also opens the door to utilizing transfer learning to improve prediction accuracy. To validate our approach, we align NEMAD with the ICSD and compare models trained on the resulting database to those trained on the original NEMAD. We demonstrate significant improvements in both Mean Absolute Error (MAE) and Correct Classification Rate (CCR) in predicting the ordering temperatures and magnetic ground states of magnetic materials, respectively.

Deploying UDM Series in Real-Life Stuttered Speech Applications: A Clinical Evaluation Framework

Sep 17, 2025

Abstract:Stuttered and dysfluent speech detection systems have traditionally suffered from a trade-off between accuracy and clinical interpretability. While end-to-end deep learning models achieve high performance, their black-box nature limits clinical adoption. This paper evaluates the Unconstrained Dysfluency Modeling (UDM) series, the current state-of-the-art framework developed by Berkeley that combines modular architecture, explicit phoneme alignment, and interpretable outputs for real-world clinical deployment. Through extensive experiments involving patients and certified speech-language pathologists (SLPs), we demonstrate that UDM achieves state-of-the-art performance (F1: 0.89 ± 0.04) while providing clinically meaningful interpretability scores (4.2/5.0). Our deployment study shows an 87% clinician acceptance rate and a 34% reduction in diagnostic time. The results provide strong evidence that UDM represents a practical pathway toward AI-assisted speech therapy in clinical environments.

Humanity's Last Exam

Jan 24, 2025

Abstract:Benchmarks are important tools for tracking the rapid advancements in large language model (LLM) capabilities. However, benchmarks are not keeping pace in difficulty: LLMs now achieve over 90% accuracy on popular benchmarks like MMLU, limiting informed measurement of state-of-the-art LLM capabilities. In response, we introduce Humanity's Last Exam (HLE), a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. HLE consists of 3,000 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading. Each question has a known solution that is unambiguous and easily verifiable, but cannot be quickly answered via internet retrieval. State-of-the-art LLMs demonstrate low accuracy and calibration on HLE, highlighting a significant gap between current LLM capabilities and the expert human frontier on closed-ended academic questions. To inform research and policymaking upon a clear understanding of model capabilities, we publicly release HLE at https://lastexam.ai.

BetaExplainer: A Probabilistic Method to Explain Graph Neural Networks

Dec 16, 2024

Abstract:Graph neural networks (GNNs) are powerful tools for conducting inference on graph data but are often seen as "black boxes" due to the difficulty of extracting meaningful subnetworks driving predictive performance. Many interpretable GNN methods exist, but they cannot quantify uncertainty in edge weights and suffer in predictive accuracy when applied to challenging graph structures. In this work, we propose BetaExplainer, which addresses these issues by using a sparsity-inducing prior to mask unimportant edges during model training. To evaluate our approach, we examine various simulated data sets with diverse real-world characteristics. Not only does this implementation provide a notion of edge importance uncertainty, it also improves upon evaluation metrics for challenging datasets compared to state-of-the-art explainer methods.

FogROS2-FT: Fault Tolerant Cloud Robotics

Dec 06, 2024

Abstract:Cloud robotics enables robots to offload complex computational tasks to cloud servers for performance and ease of management. However, cloud compute can be costly, cloud services can suffer occasional downtime, and connectivity between the robot and cloud can be prone to variations in network Quality-of-Service (QoS). We present FogROS2-FT (Fault Tolerant) to mitigate these issues by introducing a multi-cloud extension that automatically replicates independent stateless robotic services, routes requests to these replicas, and directs the first response back. With replication, robots can still benefit from cloud computations even when a cloud service provider is down or there is low QoS. Additionally, many cloud computing providers offer low-cost spot computing instances that may shut down unpredictably. Normally, these low-cost instances would be inappropriate for cloud robotics, but the fault-tolerant nature of FogROS2-FT allows them to be used reliably. We demonstrate FogROS2-FT's fault tolerance capabilities in 3 cloud-robotics scenarios in simulation (visual object detection, semantic segmentation, motion planning) and 1 physical robot experiment (scan-pick-and-place). Running on the same hardware specification, FogROS2-FT achieves motion planning with up to 2.2x cost reduction and up to a 5.53x reduction in 99th-percentile (P99) long-tail latency. FogROS2-FT reduces the P99 long-tail latency of object detection and semantic segmentation by 2.0x and 2.1x, respectively, under network slowdown and resource contention.
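The replicate-and-take-the-first-response idea described in this abstract can be illustrated with a minimal Python sketch. This is not the FogROS2-FT implementation or its API: the names `call_replica` and `first_response`, the `(name, delay_s, is_up)` replica tuples, and the simulated latencies are all hypothetical, and plain threads stand in for real cloud services.

```python
import concurrent.futures
import time


def call_replica(name, delay_s, is_up, request):
    """Hypothetical stand-in for one stateless cloud service replica."""
    if not is_up:
        raise ConnectionError(f"{name} is unavailable")
    time.sleep(delay_s)  # simulated network and compute latency
    return name, request * 2  # toy "computation" on the request


def first_response(request, replicas):
    """Send the same request to every replica and return the first success.

    `replicas` is a list of (name, delay_s, is_up) tuples (assumed format).
    """
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=len(replicas))
    futures = [
        pool.submit(call_replica, name, delay_s, is_up, request)
        for name, delay_s, is_up in replicas
    ]
    try:
        # as_completed yields futures in the order they finish.
        for future in concurrent.futures.as_completed(futures):
            try:
                return future.result()
            except ConnectionError:
                continue  # this replica failed; wait for the next one
        raise RuntimeError("all replicas failed")
    finally:
        # Do not block on slower replicas once an answer is available.
        pool.shutdown(wait=False, cancel_futures=True)


if __name__ == "__main__":
    replicas = [
        ("cloud_a", 0.2, True),   # slow replica
        ("cloud_b", 0.01, True),  # fast replica
        ("cloud_c", 0.0, False),  # replica that is down
    ]
    print(first_response(21, replicas))
```

Because the replicated services are stateless, issuing the same request to several of them is safe in this sketch; the caller simply ignores late or failed replies.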

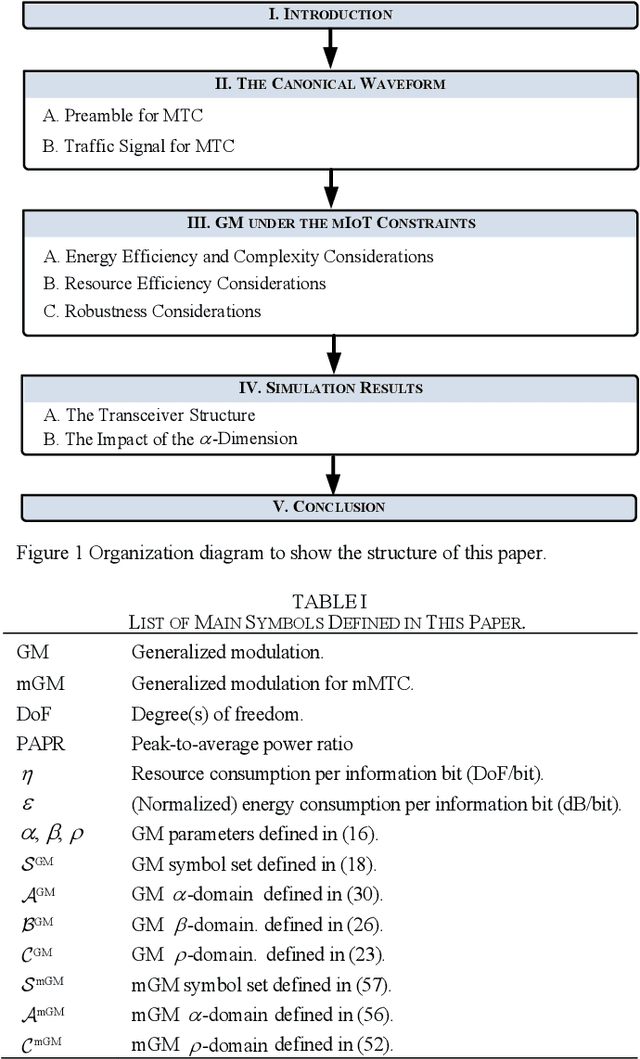

Machine-Type Communication Waveforms: An Exploration of New Dimensions

Jun 29, 2024

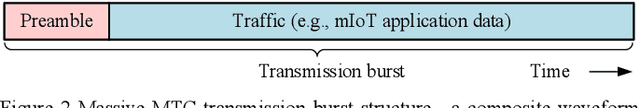

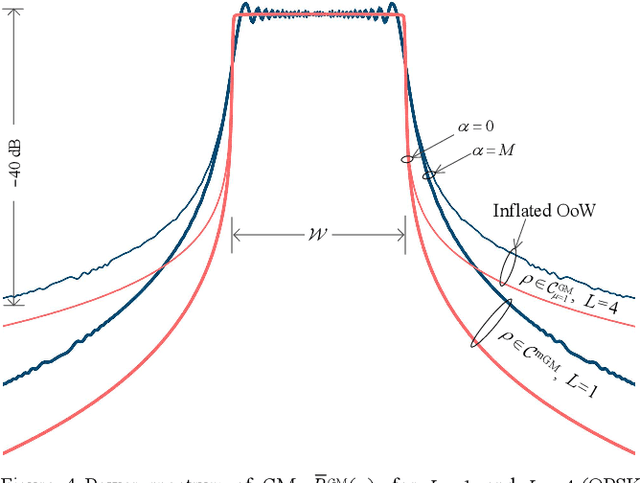

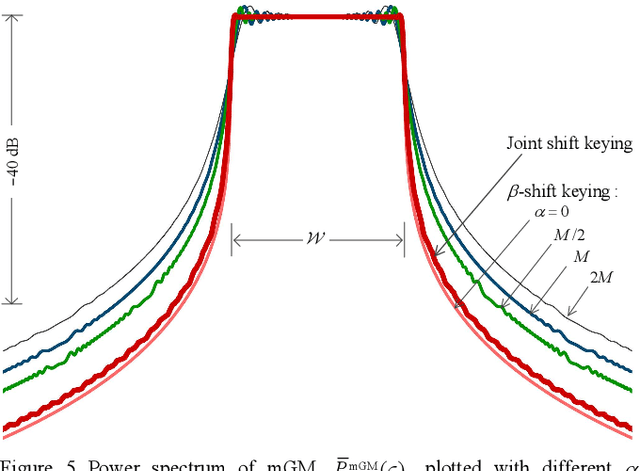

Abstract:This paper derives a generalized class of waveforms with an application to machine-type communication (MTC) while studying its underlying structural characteristics in relation to conventional modulation waveforms. First, a canonical waveform of frequency-error tolerance is identified for a unified preamble and traffic signal design, ideal for MTC use as a composite waveform, commonly known as a transmission burst. It is shown that the most widely used modulation schemes for mIoT traffic signals, e.g., FSK and LoRa modulation, are simply subsets of the canonical waveform. The intrinsic characteristics and degrees of freedom the waveform offers are then explored. Most significantly, a new waveform dimension is uncovered and exploited as additional degrees of freedom for satisfying the MTC requirements, i.e., energy and resource efficiency and robustness. The corresponding benefits are evaluated analytically and numerically in AWGN, frequency-flat, and selective channels. We demonstrate that neither FSK nor LoRa can fully address the mIoT requirements since neither fully exploits the degrees of freedom from the perspective of the generalized waveform class. Finally, a solution is devised to optimize energy and resource efficiency under various deployment environments and practical constraints while maintaining the low-complexity property.

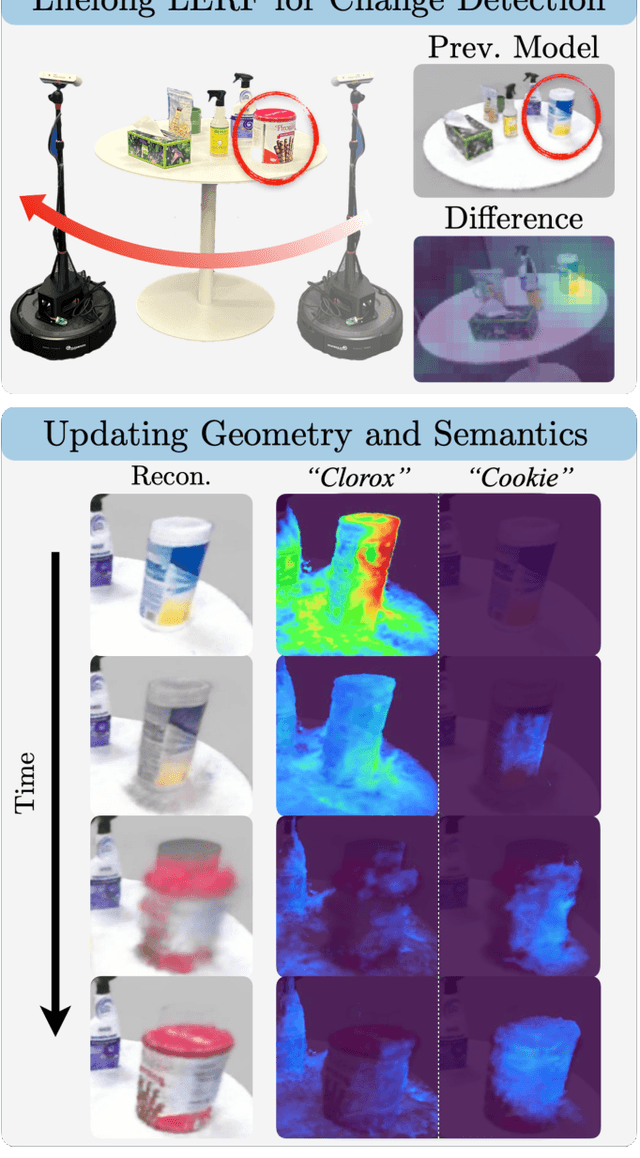

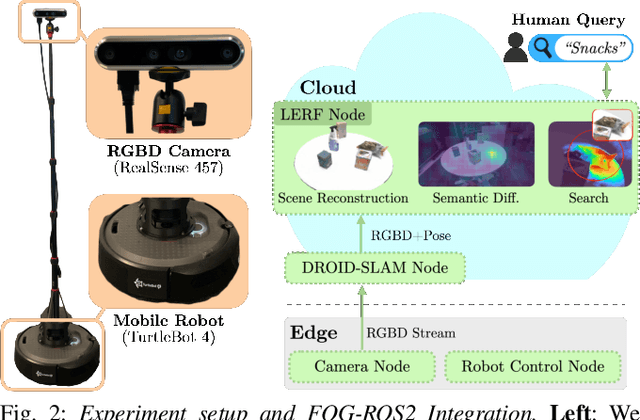

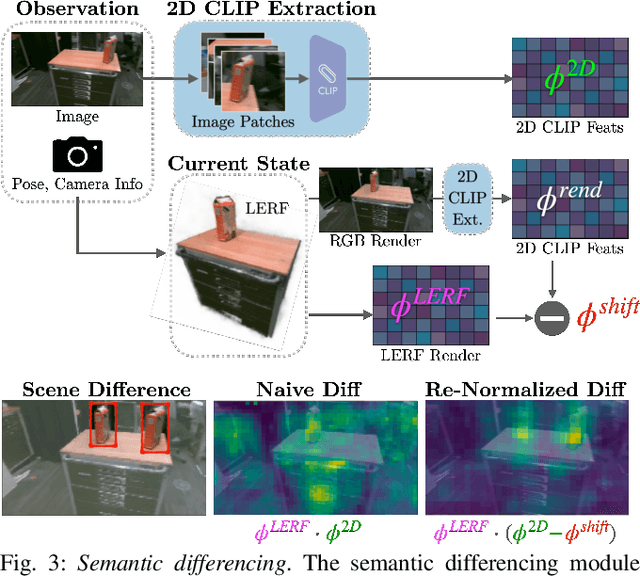

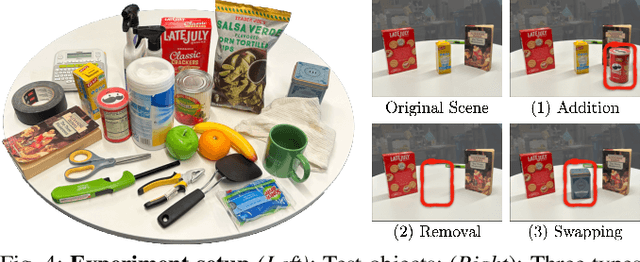

Lifelong LERF: Local 3D Semantic Inventory Monitoring Using FogROS2

Mar 15, 2024

Abstract:Inventory monitoring in homes, factories, and retail stores relies on maintaining data despite objects being swapped, added, removed, or moved. We introduce Lifelong LERF, a method that allows a mobile robot with minimal compute to jointly optimize a dense language and geometric representation of its surroundings. Lifelong LERF maintains this representation over time by detecting semantic changes and selectively updating these regions of the environment, avoiding the need to exhaustively remap. Human users can query inventory by providing natural language queries and receiving a 3D heatmap of potential object locations. To manage the computational load, we use FogROS2, a cloud robotics platform, to offload resource-intensive tasks. Lifelong LERF obtains poses from a monocular RGBD SLAM backend, and uses these poses to progressively optimize a Language Embedded Radiance Field (LERF) for semantic monitoring. Experiments with 3-5 objects arranged on a tabletop and a Turtlebot with a RealSense camera suggest that Lifelong LERF can persistently adapt to changes in objects with up to 91% accuracy.

Bringing the State-of-the-Art to Customers: A Neural Agent Assistant Framework for Customer Service Support

Feb 07, 2023

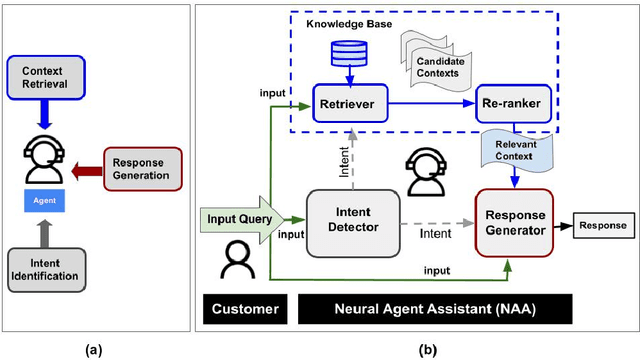

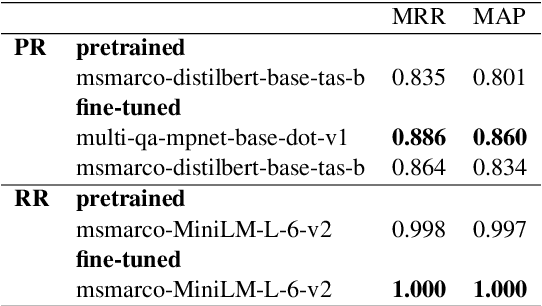

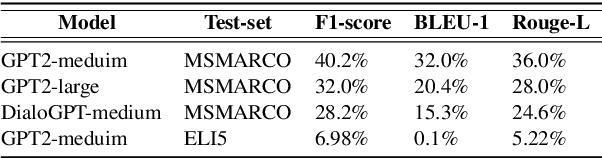

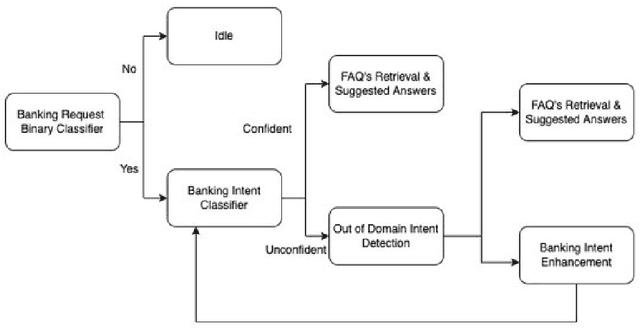

Abstract:Building Agent Assistants that can help improve customer service support requires inputs from industry users and their customers, as well as knowledge about state-of-the-art Natural Language Processing (NLP) technology. We combine expertise from academia and industry to bridge the gap and build task/domain-specific Neural Agent Assistants (NAA) with three high-level components for: (1) Intent Identification, (2) Context Retrieval, and (3) Response Generation. In this paper, we outline the pipeline of the NAA's core system and also present three case studies in which three industry partners successfully adapt the framework to find solutions to their unique challenges. Our findings suggest that a collaborative process is instrumental in spurring the development of emerging NLP models for Conversational AI tasks in industry. The full reference implementation code and results are available at https://github.com/VectorInstitute/NAA.

NeuDep: Neural Binary Memory Dependence Analysis

Oct 04, 2022

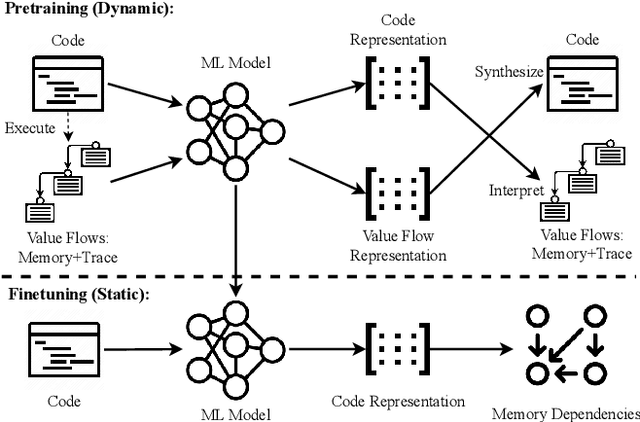

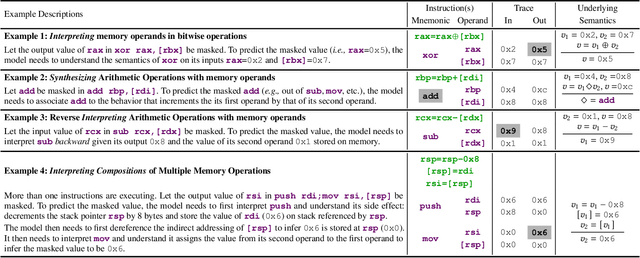

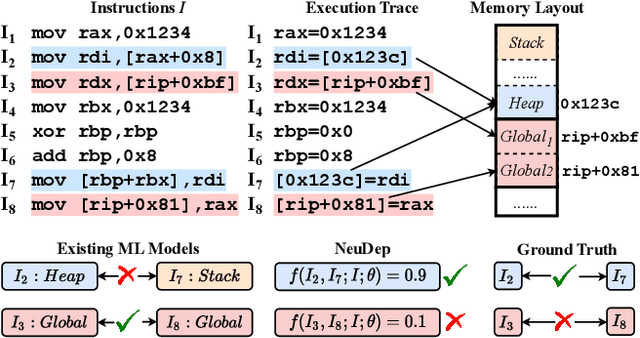

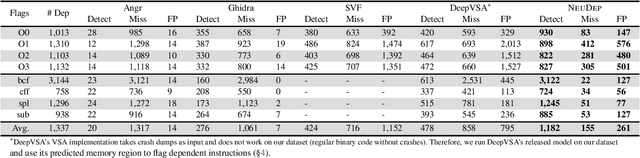

Abstract:Determining whether multiple instructions can access the same memory location is a critical task in binary analysis. It is challenging because statically computing precise alias information is undecidable in theory. The problem is aggravated at the binary level by the presence of compiler optimizations and the absence of symbols and types. Existing approaches either produce significant spurious dependencies due to conservative analysis or scale poorly to complex binaries. We present a new machine-learning-based approach to predict memory dependencies by exploiting the model's learned knowledge about how binary programs execute. Our approach features (i) a self-supervised procedure that pretrains a neural net to reason over binary code and its dynamic value flows through memory addresses, followed by (ii) supervised finetuning to infer the memory dependencies statically. To facilitate efficient learning, we develop dedicated neural architectures to encode the heterogeneous inputs (i.e., code, data values, and memory addresses from traces) with specific modules and fuse them with a composition learning strategy. We implement our approach in NeuDep and evaluate it on 41 popular software projects compiled by 2 compilers, 4 optimizations, and 4 obfuscation passes. We demonstrate that NeuDep is more precise (1.5x) and faster (3.5x) than the current state-of-the-art. Extensive probing studies on security-critical reverse engineering tasks suggest that NeuDep understands memory access patterns, learns function signatures, and is able to match indirect calls. All these tasks either assist or benefit from inferring memory dependencies. Notably, NeuDep also outperforms the current state-of-the-art on these tasks.

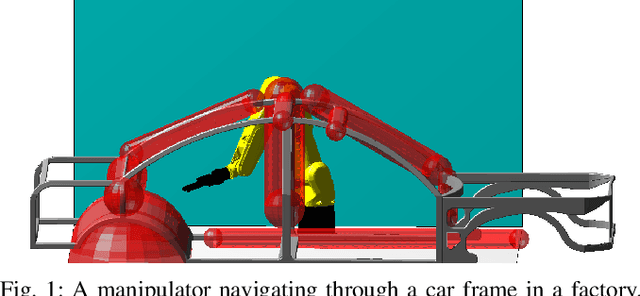

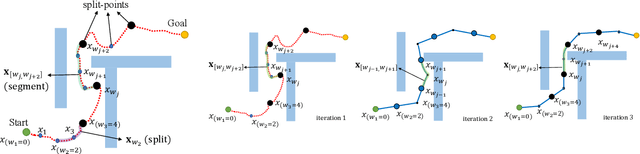

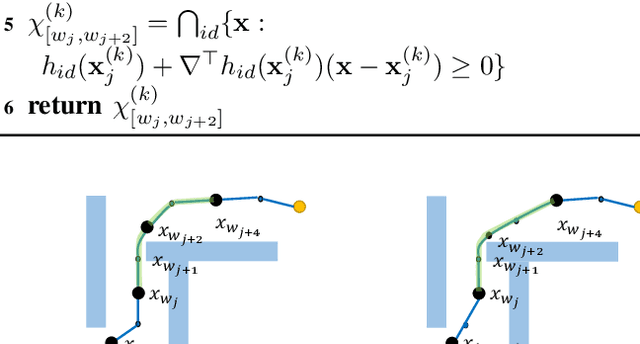

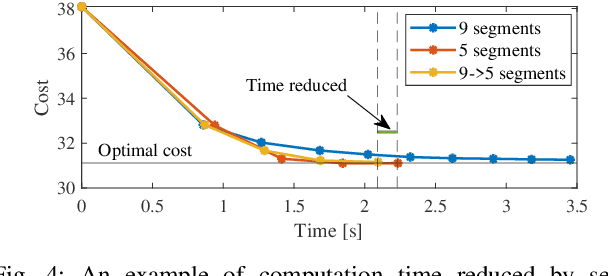

Long-Horizon Motion Planning via Sampling and Segmented Trajectory Optimization

Apr 17, 2022

Abstract:This paper presents a hybrid robot motion planner that generates long-horizon motion plans for robot navigation in environments with obstacles. We propose a hybrid planner, RRT* with segmented trajectory optimization (RRT*-sOpt), which combines the merits of sampling-based planning, optimization-based planning, and trajectory splitting to quickly produce a collision-free and dynamically feasible motion plan. When generating a plan, the RRT* layer quickly samples a semi-optimal path and sets it as an initial reference path. Then, the sOpt layer splits the reference path and performs optimization on each segment. It then splits the new trajectory again and repeats the process until the whole trajectory converges. We also propose to reduce the number of segments before convergence with the aim of further reducing computation time. Simulation results show that RRT*-sOpt benefits from the hybrid structure with trajectory splitting and performs robustly across various robot platforms and scenarios.
