Man Chen

A Mechanistic View on Video Generation as World Models: State and Dynamics

Jan 22, 2026

Abstract:Large-scale video generation models have demonstrated emergent physical coherence, positioning them as potential world models. However, a gap remains between contemporary "stateless" video architectures and classic state-centric world model theories. This work bridges this gap by proposing a novel taxonomy centered on two pillars: State Construction and Dynamics Modeling. We categorize state construction into implicit paradigms (context management) and explicit paradigms (latent compression), while dynamics modeling is analyzed through knowledge integration and architectural reformulation. Furthermore, we advocate for a transition in evaluation from visual fidelity to functional benchmarks, testing physical persistence and causal reasoning. We conclude by identifying two critical frontiers: enhancing persistence via data-driven memory and compressed fidelity, and advancing causality through latent factor decoupling and reasoning-prior integration. By addressing these challenges, the field can evolve from generating visually plausible videos to building robust, general-purpose world simulators.

A Clinician-Friendly Platform for Ophthalmic Image Analysis Without Technical Barriers

Apr 22, 2025

Abstract:Artificial intelligence (AI) shows remarkable potential in medical imaging diagnostics, but current models typically require retraining when deployed across different clinical centers, limiting their widespread adoption. We introduce GlobeReady, a clinician-friendly AI platform that enables ocular disease diagnosis without retraining/fine-tuning or technical expertise. GlobeReady achieves high accuracy across imaging modalities: 93.9-98.5% for an 11-category fundus photo dataset and 87.2-92.7% for a 15-category OCT dataset. Through training-free local feature augmentation, it addresses domain shifts across centers and populations, reaching an average accuracy of 88.9% across five centers in China, 86.3% in Vietnam, and 90.2% in the UK. The built-in confidence-quantifiable diagnostic approach further boosted accuracy to 94.9-99.4% (fundus) and 88.2-96.2% (OCT), while identifying out-of-distribution cases at 86.3% (49 CFP categories) and 90.6% (13 OCT categories). Clinicians from multiple countries rated GlobeReady highly (average 4.6 out of 5) for its usability and clinical relevance. These results demonstrate GlobeReady's robust, scalable diagnostic capability and potential to support ophthalmic care without technical barriers.

ImplicitCell: Resolution Cell Modeling of Joint Implicit Volume Reconstruction and Pose Refinement in Freehand 3D Ultrasound

Mar 09, 2025

Abstract:Freehand 3D ultrasound enables volumetric imaging by tracking a conventional ultrasound probe during freehand scanning, offering enriched spatial information that improves clinical diagnosis. However, the quality of reconstructed volumes is often compromised by tracking system noise and irregular probe movements, leading to artifacts in the final reconstruction. To address these challenges, we propose ImplicitCell, a novel framework that integrates Implicit Neural Representation (INR) with an ultrasound resolution cell model for joint optimization of volume reconstruction and pose refinement. Three distinct datasets are used for comprehensive validation, including phantom, common carotid artery, and carotid atherosclerosis. Experimental results demonstrate that ImplicitCell significantly reduces reconstruction artifacts and improves volume quality compared to existing methods, particularly in challenging scenarios with noisy tracking data. These improvements enhance the clinical utility of freehand 3D ultrasound by providing more reliable and precise diagnostic information.

Enhancing CTR Prediction in Recommendation Domain with Search Query Representation

Oct 28, 2024

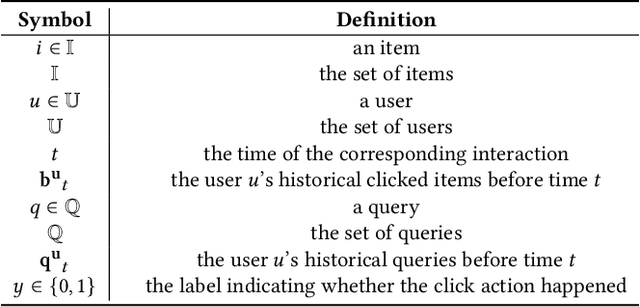

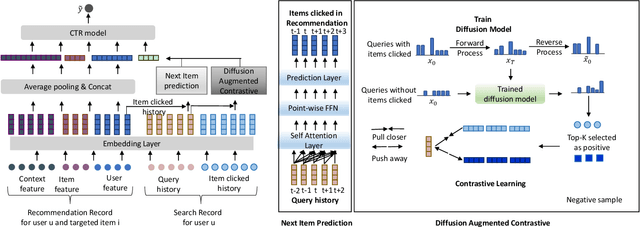

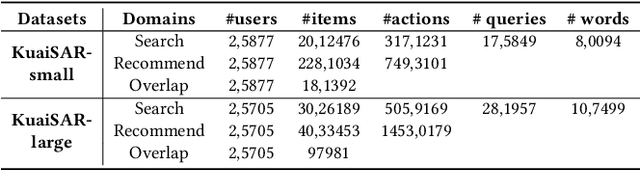

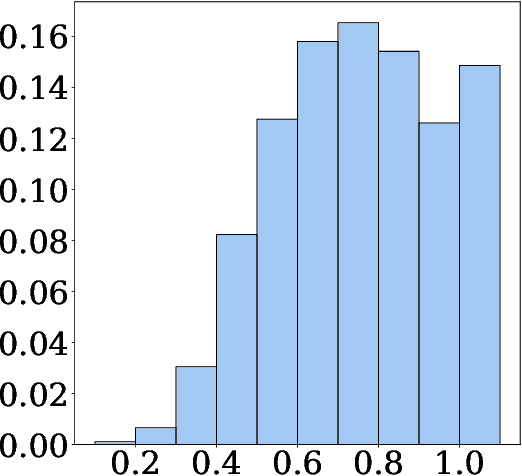

Abstract:Many platforms, such as e-commerce websites, offer both search and recommendation services simultaneously to better meet users' diverse needs. Recommendation services suggest items based on user preferences, while search services allow users to search for items before providing recommendations. Since users and items are often shared between the search and recommendation domains, there is a valuable opportunity to enhance the recommendation domain by leveraging user preferences extracted from the search domain. Existing approaches either overlook the shift in user intention between these domains or fail to capture the significant impact of learning from users' search queries on understanding their interests. In this paper, we propose a framework that learns from user search query embeddings within the context of user preferences in the recommendation domain. Specifically, user search query sequences from the search domain are used to predict the items users will click at the next time point in the recommendation domain. Additionally, the relationship between queries and items is explored through contrastive learning. To address data sparsity, a diffusion model is incorporated to infer, in a denoising manner, the positive items the user will select after searching with certain queries, which is particularly effective in preventing false positives. With this information extracted, the queries are integrated into click-through rate prediction in the recommendation domain. Experimental analysis demonstrates that our model outperforms state-of-the-art models in the recommendation domain.

* Accepted by CIKM 2024 Full Research Track
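The abstract above says the relationship between queries and items is explored through contrastive learning but does not give the loss. As a rough illustration only, the sketch below implements a generic InfoNCE-style loss in plain Python. The function name `info_nce`, the `temperature` parameter, and the pairing rule (query `i` matches item `i`, with all other items in the batch acting as negatives) are assumptions for this example and may differ from the paper's actual objective.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def info_nce(query_vecs, item_vecs, temperature=0.1):
    """Generic InfoNCE-style contrastive loss (illustrative, not the paper's code).

    Assumes query_vecs[i] and item_vecs[i] form a positive pair and every
    other item in the batch is treated as a negative for query i.
    """
    losses = []
    for i, q in enumerate(query_vecs):
        logits = [dot(q, v) / temperature for v in item_vecs]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of the positive item under a softmax.
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


if __name__ == "__main__":
    queries = [[1.0, 0.0], [0.0, 1.0]]
    aligned_items = [[1.0, 0.0], [0.0, 1.0]]
    shuffled_items = [[0.0, 1.0], [1.0, 0.0]]
    print("aligned loss:", info_nce(queries, aligned_items))
    print("shuffled loss:", info_nce(queries, shuffled_items))
```

The loss is small when each query embedding is closest to its own item and large when the pairs are mismatched, which is the property a contrastive objective relies on.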

UFLUX v2.0: A Process-Informed Machine Learning Framework for Efficient and Explainable Modelling of Terrestrial Carbon Uptake

Oct 04, 2024

Abstract:Gross Primary Productivity (GPP), the amount of carbon fixed by plants through photosynthesis, is pivotal for understanding the global carbon cycle and ecosystem functioning. Process-based models built on the knowledge of ecological processes are susceptible to biases stemming from their assumptions and approximations. These limitations potentially result in considerable uncertainties in global GPP estimation, which may pose significant challenges to our Net Zero goals. This study presents UFLUX v2.0, a process-informed model that integrates state-of-the-art ecological knowledge and advanced machine learning techniques to reduce uncertainties in GPP estimation by learning the biases between process-based models and eddy covariance (EC) measurements. In our findings, UFLUX v2.0 demonstrated a substantial improvement in model accuracy, achieving an R^2 of 0.79 with a reduced RMSE of 1.60 g C m^-2 d^-1, compared to the process-based model's R^2 of 0.51 and RMSE of 3.09 g C m^-2 d^-1. Our global GPP distribution analysis indicates that while UFLUX v2.0 and the process-based model achieved similar global total GPP (137.47 Pg C and 132.23 Pg C, respectively), they exhibited large differences in spatial distribution, particularly in latitudinal gradients. These differences are very likely due to systematic biases in the process-based model and differing sensitivities to climate and environmental conditions. This study offers improved adaptability for GPP modelling across diverse ecosystems, and further enhances our understanding of the global carbon cycle and its responses to environmental changes.
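For readers less familiar with the two accuracy metrics quoted above, the following is a minimal sketch of how R^2 and RMSE are commonly computed from paired observations and predictions. The function names and toy values are made up for illustration, and R^2 is taken here as the coefficient of determination; the abstract does not say which definition the authors used (some studies report the squared correlation instead).

```python
import math


def rmse(observed, predicted):
    """Root mean squared error, in the same units as the inputs."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Toy numbers for illustration only, not data from the study.
    observed = [2.0, 4.0, 6.0, 8.0]
    predicted = [2.5, 3.5, 6.5, 7.5]
    print("RMSE:", rmse(observed, predicted))
    print("R^2:", r_squared(observed, predicted))
```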

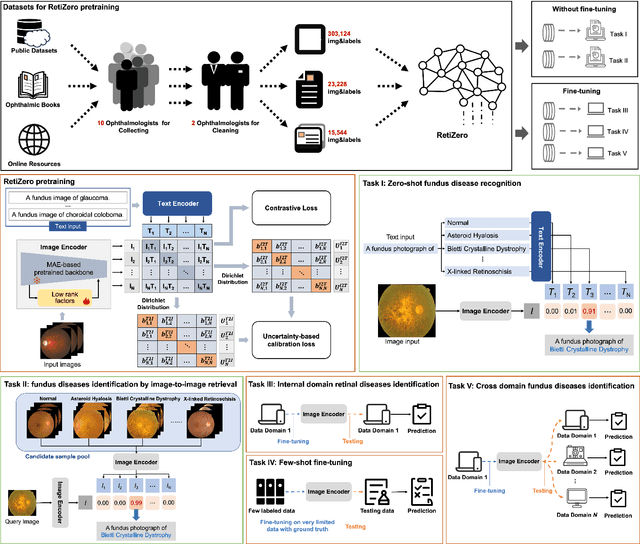

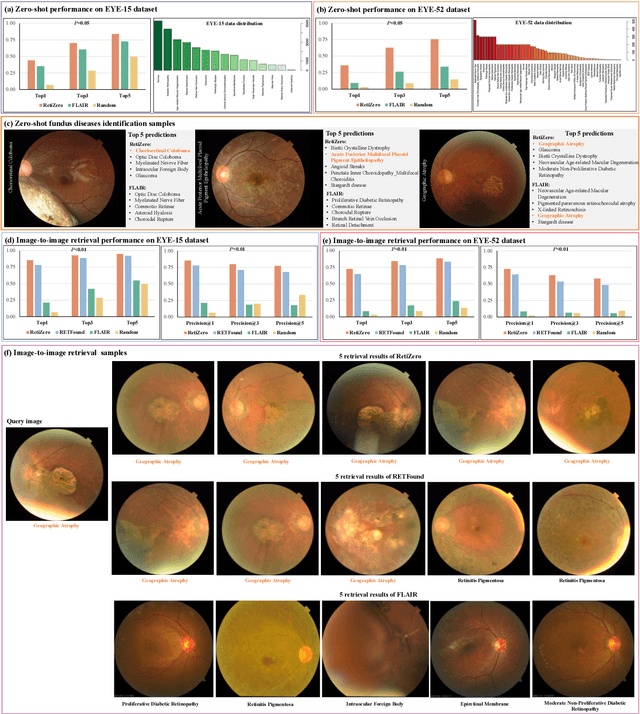

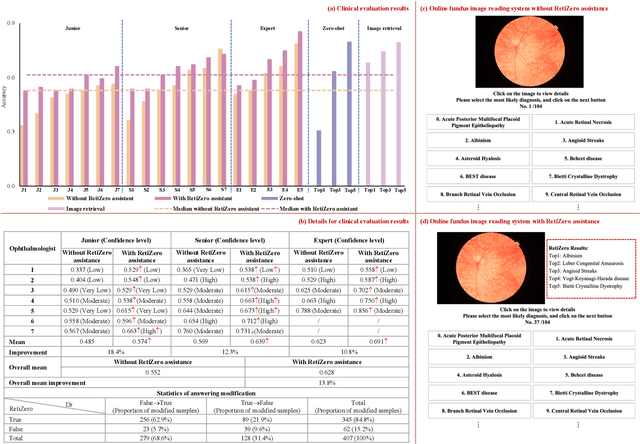

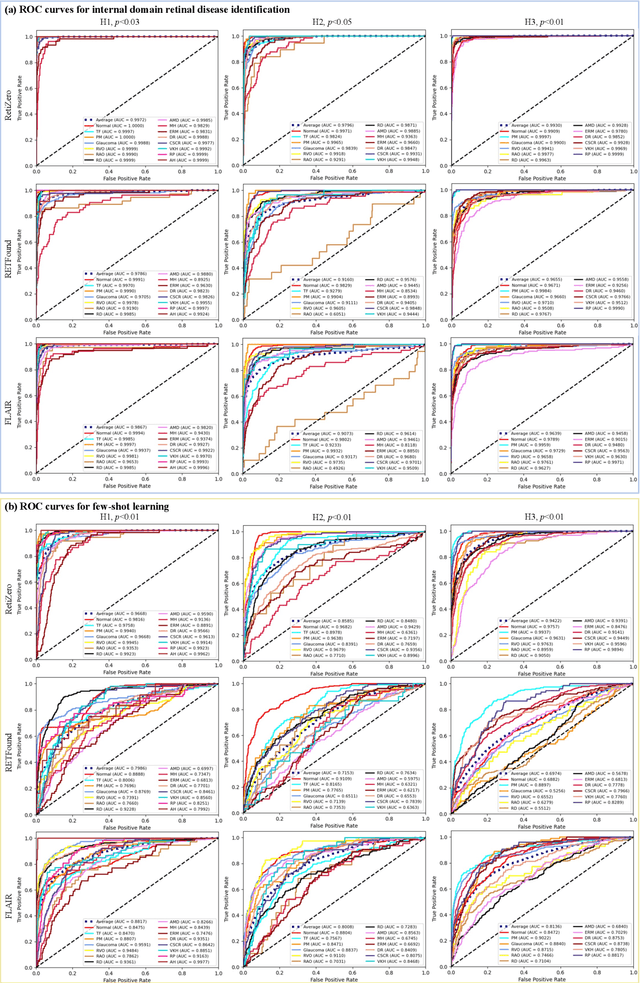

Common and Rare Fundus Diseases Identification Using Vision-Language Foundation Model with Knowledge of Over 400 Diseases

Jun 13, 2024

Abstract:Current retinal artificial intelligence models were trained using data with a limited range of disease categories and limited knowledge. In this paper, we present a retinal vision-language foundation model (RetiZero) with knowledge of over 400 fundus diseases. Specifically, we collected 341,896 fundus images paired with text descriptions from 29 publicly available datasets, 180 ophthalmic books, and online resources, encompassing over 400 fundus diseases across multiple countries and ethnicities. RetiZero achieved outstanding performance across various downstream tasks, including zero-shot retinal disease recognition, image-to-image retrieval, internal domain and cross-domain retinal disease classification, and few-shot fine-tuning. In particular, in the zero-shot scenario, RetiZero achieved Top5 scores of 0.8430 and 0.7561 on 15 and 52 fundus diseases, respectively. In the image-retrieval task, RetiZero achieved Top5 scores of 0.9500 and 0.8860 on 15 and 52 retinal diseases, respectively. Furthermore, clinical evaluations by ophthalmology experts from different countries demonstrate that RetiZero can achieve performance comparable to experienced ophthalmologists using zero-shot and image retrieval methods without requiring model retraining. These capabilities of retinal disease identification strengthen our RetiZero foundation model in clinical implementation.
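The Top5 scores above are not defined in the abstract. A common reading, assumed here, is the fraction of images whose true disease label appears among the five highest-scoring candidates. The sketch below shows that computation; `top_k_score`, the score matrix, and the labels are hypothetical and not taken from RetiZero.

```python
def top_k_score(scores, labels, k=5):
    """Fraction of samples whose true label is among the k highest scores.

    scores: list of per-sample lists, one similarity score per class.
    labels: list of true class indices, one per sample.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


if __name__ == "__main__":
    # Toy scores for three images over three classes (illustration only).
    scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
    labels = [1, 2, 0]
    for k in (1, 2, 3):
        print(f"top-{k} score:", top_k_score(scores, labels, k=k))
```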

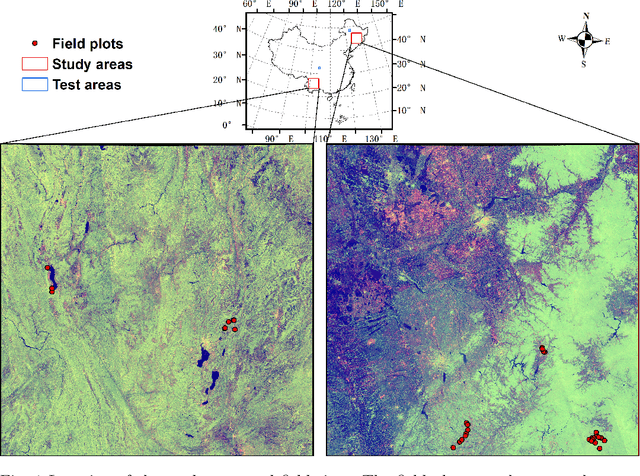

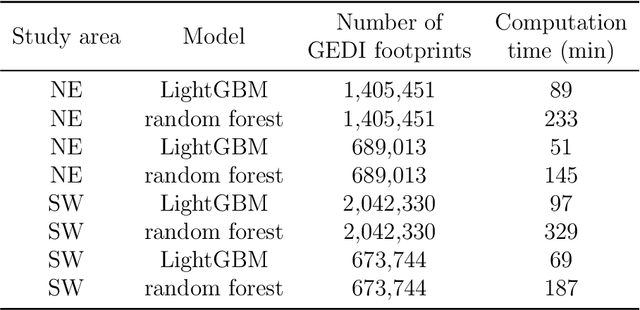

Comparing remote sensing-based forest biomass mapping approaches using new forest inventory plots in contrasting forests in northeastern and southwestern China

May 24, 2024

Abstract:Large-scale high spatial resolution aboveground biomass (AGB) maps play a crucial role in determining forest carbon stocks and how they are changing, which is instrumental in understanding the global carbon cycle and implementing policy to mitigate climate change. The advent of the new space-borne LiDAR sensor, NASA's GEDI instrument, provides unparalleled possibilities for the accurate and unbiased estimation of forest AGB at high resolution, particularly in dense and tall forests, where Synthetic Aperture Radar (SAR) and passive optical data exhibit saturation. However, GEDI is a sampling instrument, collecting dispersed footprints, and its data must be combined with that from other continuous cover satellites to create high-resolution maps, using local machine learning methods. In this study, we developed local models to estimate forest AGB from GEDI L2A data, as the models used to create GEDI L4 AGB data incorporated minimal field data from China. We then applied LightGBM and random forest regression to generate wall-to-wall AGB maps at 25 m resolution, using extensive GEDI footprints as well as Sentinel-1 data, ALOS-2 PALSAR-2 and Sentinel-2 optical data. Through a 5-fold cross-validation, LightGBM demonstrated slightly better performance than random forest across two contrasting regions. Moreover, in both regions, LightGBM was substantially faster than the random forest model, requiring roughly one-third of the computation time on the same hardware. Through validation against field data, the 25 m resolution AGB maps generated using the local models developed in this study exhibited higher accuracy compared to the GEDI L4B AGB data. In both regions, we found that error increased with slope. The trained models were tested on nearby but different regions and exhibited good performance.

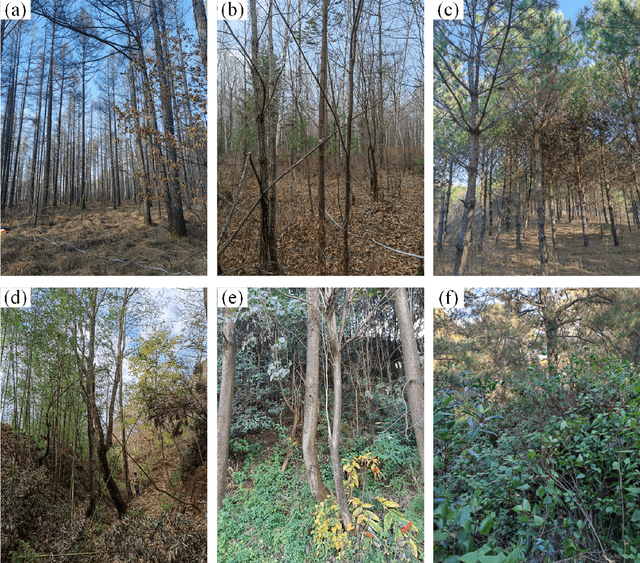

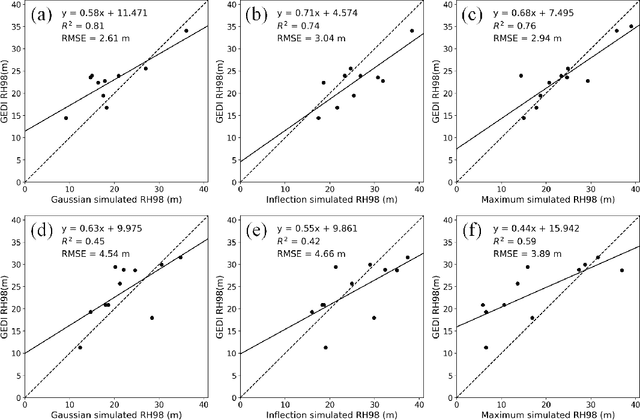

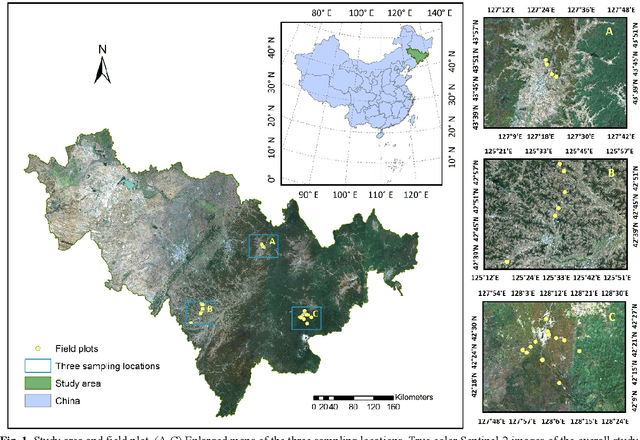

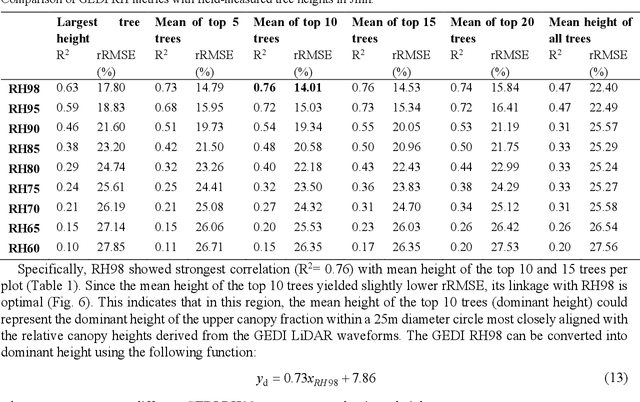

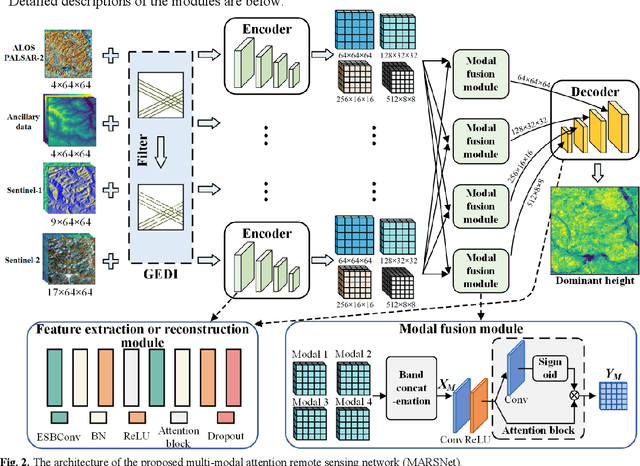

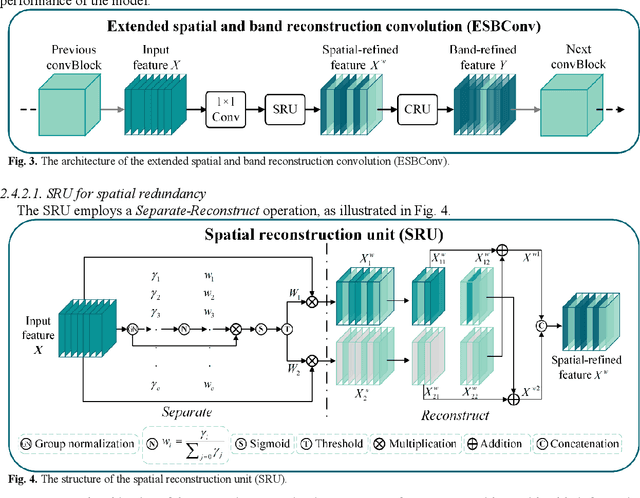

Multimodal deep learning for mapping forest dominant height by fusing GEDI with earth observation data

Nov 20, 2023

Abstract:The integration of multisource remote sensing data and deep learning models offers new possibilities for accurately mapping high spatial resolution forest height. We found that GEDI relative height (RH) metrics exhibited strong correlation with the mean height of the 10 tallest trees (dominant height) measured in situ at the corresponding footprint locations. Consequently, we proposed a novel deep learning framework termed the multi-modal attention remote sensing network (MARSNet) to estimate forest dominant height by extrapolating dominant height derived from GEDI, using Sentinel-1 data, ALOS-2 PALSAR-2 data, Sentinel-2 optical data and ancillary data. MARSNet comprises separate encoders for each remote sensing data modality to extract multi-scale features, and a shared decoder to fuse the features and estimate height. Using individual encoders for each remote sensing modality avoids interference across modalities and extracts distinct representations. To focus on the efficacious information from each dataset, we reduced the prevalent spatial and band redundancies in each remote sensing dataset by incorporating the extended spatial and band reconstruction convolution modules in the encoders. MARSNet achieved commendable performance in estimating dominant height, with an R^2 of 0.62 and RMSE of 2.82 m, outperforming the widely used random forest approach, which attained an R^2 of 0.55 and RMSE of 3.05 m. Finally, we applied the trained MARSNet model to generate wall-to-wall maps at 10 m resolution for Jilin, China. Through independent validation using field measurements, MARSNet demonstrated an R^2 of 0.58 and RMSE of 3.76 m, compared to 0.41 and 4.37 m for the random forest baseline. Our research demonstrates the effectiveness of a multimodal deep learning approach fusing GEDI with SAR and passive optical imagery for enhancing the accuracy of high resolution dominant height estimation.

Automatic Diagnosis of Carotid Atherosclerosis Using a Portable Freehand 3D Ultrasound Imaging System

Jan 08, 2023

Abstract:Objective: The objective of this study is to develop a deep-learning based detection and diagnosis technique for carotid atherosclerosis using a portable freehand 3D ultrasound (US) imaging system. Methods: A total of 127 3D carotid artery datasets were acquired using a portable 3D US imaging system. A U-Net segmentation network was first applied to extract the carotid artery on 2D transverse frames, then a novel 3D reconstruction algorithm using the fast dot projection (FDP) method with position regularization was proposed to reconstruct the carotid artery volume. Furthermore, a convolutional neural network was used to classify cases as healthy or diseased qualitatively. A 3D volume analysis, including a longitudinal reprojection algorithm and a stenosis grade measurement algorithm, was developed to obtain clinical metrics quantitatively. Results: The proposed system achieved a sensitivity of 0.714, a specificity of 0.851, and an accuracy of 0.803 in the diagnosis of carotid atherosclerosis. The automatically measured stenosis grade showed good correlation (r=0.762) with the experienced expert measurement. Conclusion: The developed technique based on 3D US imaging can be applied to the automatic diagnosis of carotid atherosclerosis. Significance: The proposed deep-learning based technique was specially designed for a portable 3D freehand US system, which can make carotid atherosclerosis examination more convenient and reduce dependence on the clinician's experience.
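The Results sentence above reports sensitivity, specificity, and accuracy. As a hedged sketch, the snippet below shows how these three quantities are usually derived from binary predictions, assuming 1 marks a diseased case and 0 a healthy one. The function name `diagnostic_metrics` and the toy labels are illustrative assumptions and do not reproduce the study's data.

```python
def diagnostic_metrics(y_true, y_pred):
    """Sensitivity, specificity and accuracy for binary labels (1 = diseased)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # diseased, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # diseased, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # healthy, flagged
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


if __name__ == "__main__":
    # Toy labels for illustration only.
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
    print(diagnostic_metrics(y_true, y_pred))
```

Sensitivity measures how many diseased cases are caught, specificity how many healthy cases are correctly cleared, and accuracy the overall share of correct calls.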
