Edward T. A. Mitchard

Comparing remote sensing-based forest biomass mapping approaches using new forest inventory plots in contrasting forests in northeastern and southwestern China

May 24, 2024

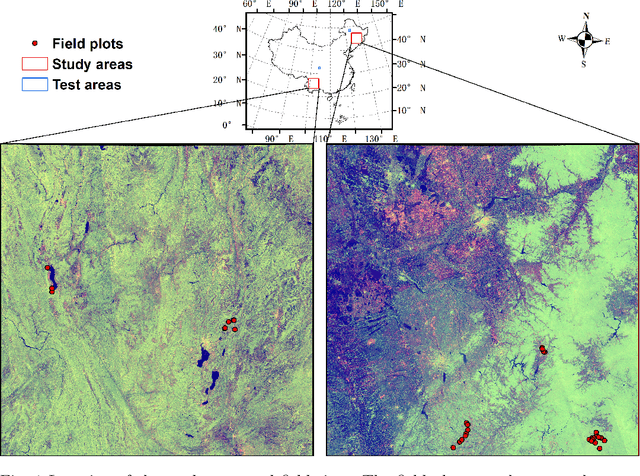

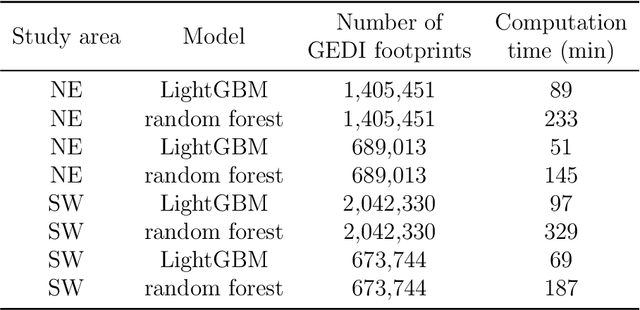

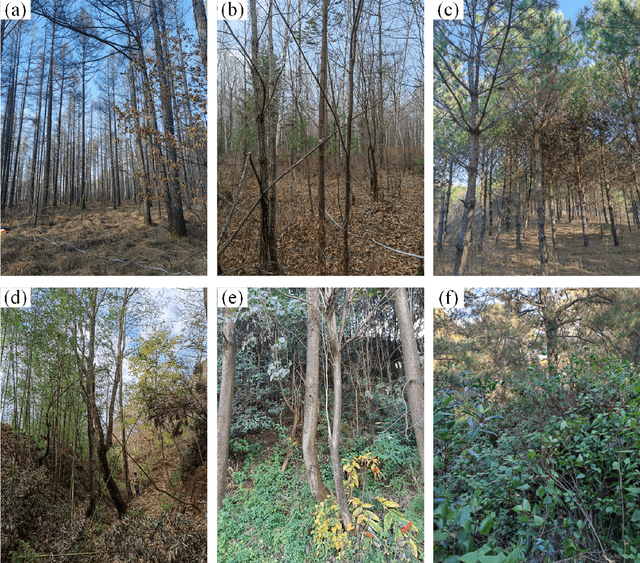

Abstract: Large-scale, high-spatial-resolution aboveground biomass (AGB) maps play a crucial role in determining forest carbon stocks and how they are changing, which is instrumental in understanding the global carbon cycle and implementing policy to mitigate climate change. The advent of the new space-borne LiDAR sensor, NASA's GEDI instrument, provides unparalleled possibilities for the accurate and unbiased estimation of forest AGB at high resolution, particularly in dense and tall forests, where Synthetic Aperture Radar (SAR) and passive optical data exhibit saturation. However, GEDI is a sampling instrument, collecting dispersed footprints, and its data must be combined with data from other continuous-cover satellites to create high-resolution maps, using local machine learning methods. In this study, we developed local models to estimate forest AGB from GEDI L2A data, as the models used to create GEDI L4 AGB data incorporated minimal field data from China. We then applied LightGBM and random forest regression to generate wall-to-wall AGB maps at 25 m resolution, using extensive GEDI footprints as well as Sentinel-1 data, ALOS-2 PALSAR-2 data and Sentinel-2 optical data. In a 5-fold cross-validation, LightGBM performed slightly better than random forest across two contrasting regions. In both regions, LightGBM was also substantially faster than the random forest model, requiring roughly one-third of the time to compute on the same hardware. When validated against field data, the 25 m resolution AGB maps generated using the local models developed in this study were more accurate than the GEDI L4B AGB data. In both regions, error increased as slope increased. The trained models were tested on nearby but different regions and exhibited good performance.
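The 5-fold cross-validation mentioned in the abstract can be illustrated with a minimal, library-free sketch. This is not the authors' pipeline: the function names, the pooled-RMSE score, and the mean-predicting placeholder model are all assumptions made for illustration. A real comparison would wrap LightGBM and random forest regressors in the same `fit` interface and run both on identical folds.

```python
import math
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validated_rmse(fit, features, targets, k=5, seed=0):
    """Return the RMSE pooled over all held-out predictions.

    `fit(train_x, train_y)` is a hypothetical interface: it must return a
    function that maps one feature row to a prediction.
    """
    squared_errors = []
    for held_out in k_fold_indices(len(targets), k, seed):
        held_out_set = set(held_out)
        train = [i for i in range(len(targets)) if i not in held_out_set]
        predict = fit([features[i] for i in train], [targets[i] for i in train])
        for i in held_out:
            squared_errors.append((predict(features[i]) - targets[i]) ** 2)
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def fit_mean_baseline(train_x, train_y):
    """Placeholder model (an assumption): always predicts the training mean."""
    mean = sum(train_y) / len(train_y)
    return lambda row: mean


# Made-up toy data: 10 samples with one feature each.
toy_x = [[float(i)] for i in range(10)]
toy_y = [2.0 * i for i in range(10)]
print(cross_validated_rmse(fit_mean_baseline, toy_x, toy_y))
```

Using the same `seed` for every model keeps the fold assignment identical, so differences in score reflect the models and not the split.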

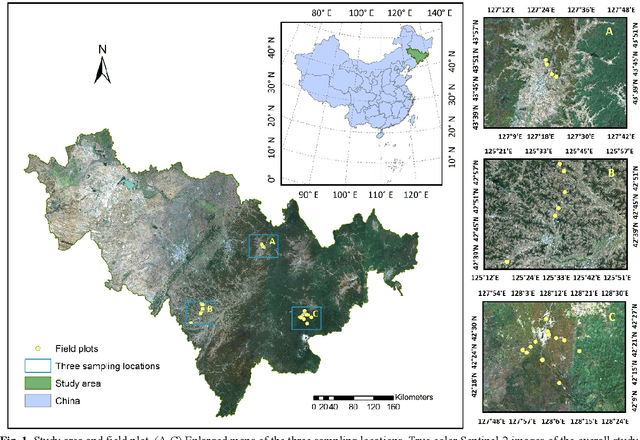

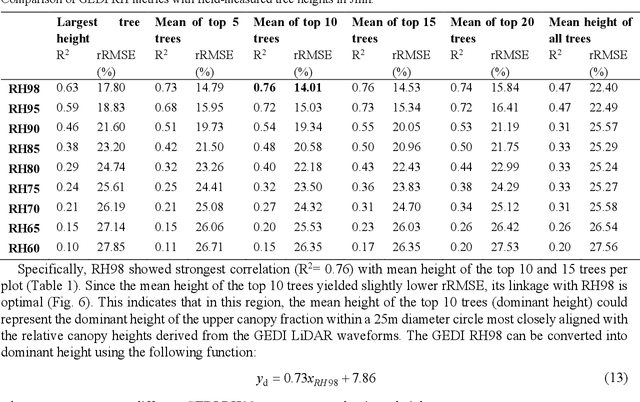

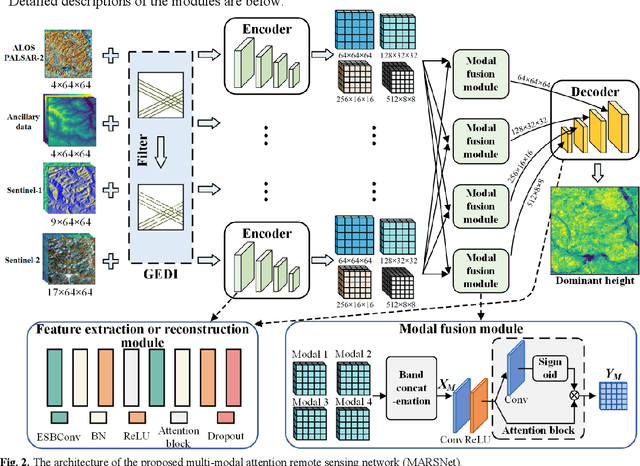

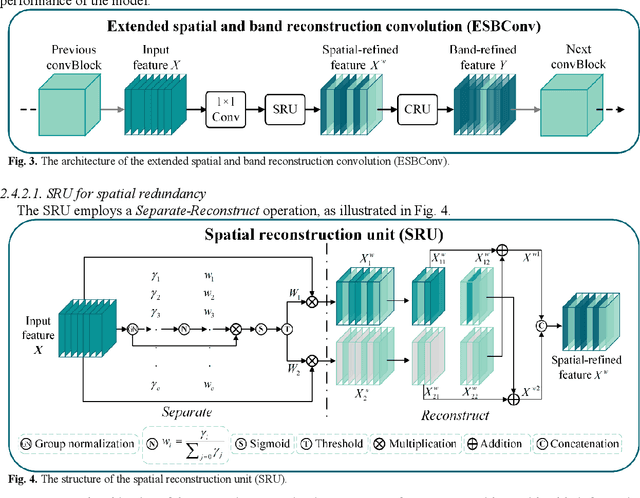

Multimodal deep learning for mapping forest dominant height by fusing GEDI with earth observation data

Nov 20, 2023

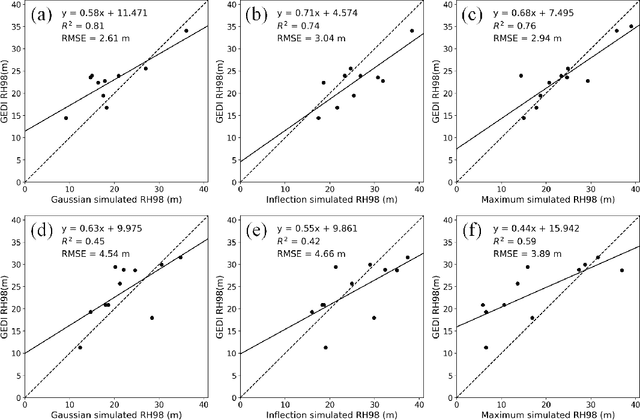

Abstract: The integration of multisource remote sensing data and deep learning models offers new possibilities for accurately mapping forest height at high spatial resolution. We found that GEDI relative height (RH) metrics exhibited strong correlation with the mean height of the 10 tallest trees (dominant height) measured in situ at the corresponding footprint locations. Consequently, we proposed a novel deep learning framework termed the multi-modal attention remote sensing network (MARSNet) to estimate forest dominant height by extrapolating dominant height derived from GEDI, using Sentinel-1 data, ALOS-2 PALSAR-2 data, Sentinel-2 optical data and ancillary data. MARSNet comprises separate encoders for each remote sensing data modality to extract multi-scale features, and a shared decoder to fuse the features and estimate height. Using an individual encoder for each remote sensing data modality avoids interference across modalities and extracts distinct representations. To focus on the useful information in each dataset, we reduced the prevalent spatial and band redundancies in each remote sensing dataset by incorporating the extended spatial and band reconstruction convolution modules in the encoders. MARSNet achieved commendable performance in estimating dominant height, with an R2 of 0.62 and RMSE of 2.82 m, outperforming the widely used random forest approach, which attained an R2 of 0.55 and RMSE of 3.05 m. Finally, we applied the trained MARSNet model to generate wall-to-wall maps at 10 m resolution for Jilin, China. In independent validation using field measurements, MARSNet achieved an R2 of 0.58 and RMSE of 3.76 m, compared to 0.41 and 4.37 m for the random forest baseline. Our research demonstrates the effectiveness of a multimodal deep learning approach fusing GEDI with SAR and passive optical imagery for enhancing the accuracy of high-resolution dominant height estimation.

Forest aboveground biomass estimation using GEDI and earth observation data through attention-based deep learning

Nov 06, 2023

Abstract: Accurate quantification of forest aboveground biomass (AGB) is critical for understanding carbon accounting in the context of climate change. In this study, we presented a novel attention-based deep learning approach for forest AGB estimation, primarily utilizing openly accessible EO data, including GEDI LiDAR data, C-band Sentinel-1 SAR data, ALOS-2 PALSAR-2 data, and Sentinel-2 multispectral data. The attention UNet (AU) model achieved markedly higher accuracy for biomass estimation compared to the conventional RF algorithm. Specifically, the AU model attained an R2 of 0.66, RMSE of 43.66 Mg ha-1, and bias of 0.14 Mg ha-1, while RF resulted in lower scores of R2 0.62, RMSE 45.87 Mg ha-1, and bias 1.09 Mg ha-1. However, the superiority of the deep learning approach was not uniformly observed across all tested models. ResNet101 only achieved an R2 of 0.50, an RMSE of 52.93 Mg ha-1, and a bias of 0.99 Mg ha-1, while the UNet reported an R2 of 0.65, an RMSE of 44.28 Mg ha-1, and a substantial bias of 1.84 Mg ha-1. Moreover, to explore the performance of AU in the absence of spatial information, fully connected (FC) layers were employed to eliminate spatial information from the remote sensing data. AU-FC achieved an intermediate R2 of 0.64, RMSE of 44.92 Mg ha-1, and bias of -0.56 Mg ha-1, outperforming RF but underperforming the AU model that uses spatial information. We also generated 10 m forest AGB maps across Guangdong for the year 2019 using AU and compared them with those produced by RF. The AGB distributions from both models showed strong agreement with similar mean values; the mean forest AGB estimated by AU was 102.18 Mg ha-1 while that of RF was 104.84 Mg ha-1. Additionally, the AGB map generated by AU provided superior spatial information. Overall, this research substantiates the feasibility of employing deep learning for biomass estimation based on satellite data.
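The three scores reported for each model (R2, RMSE, bias) can be computed as in the sketch below. The abstract does not define them, so the definitions here are assumptions: R2 is taken as the coefficient of determination, and bias as the mean of predicted minus observed AGB. The function name and the sample values are illustrative only.

```python
import math


def regression_metrics(observed, predicted):
    """Return R2, RMSE and bias for paired observed/predicted values.

    Assumed definitions (not stated in the abstract):
      r2   = 1 - SS_res / SS_tot  (coefficient of determination)
      rmse = sqrt(mean((predicted - observed)^2))
      bias = mean(predicted - observed)
    """
    n = len(observed)
    mean_observed = sum(observed) / n
    residuals = [p - o for o, p in zip(observed, predicted)]
    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((o - mean_observed) ** 2 for o in observed)
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": math.sqrt(ss_res / n),
        "bias": sum(residuals) / n,
    }


# Made-up AGB values in Mg ha-1, for illustration only.
scores = regression_metrics([100.0, 150.0, 200.0], [110.0, 140.0, 210.0])
print(scores)
```

Under these definitions a positive bias means the model overestimates on average, and a model can have a low bias while still having a high RMSE, which is why the abstract reports both.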

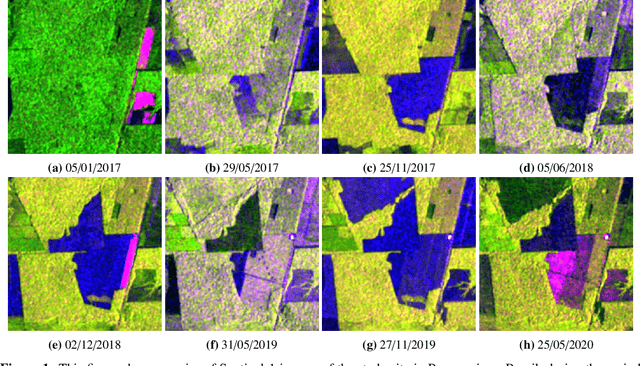

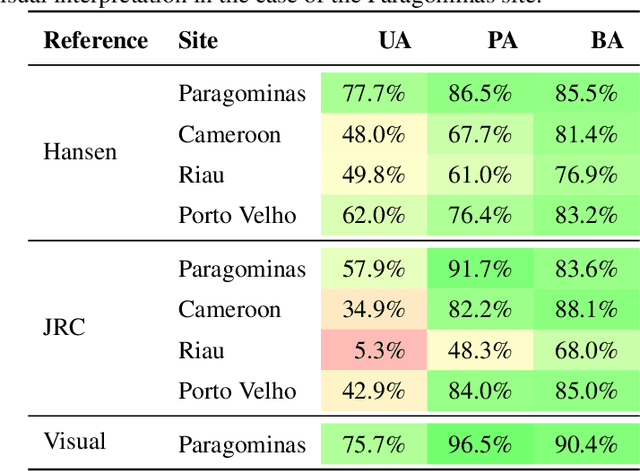

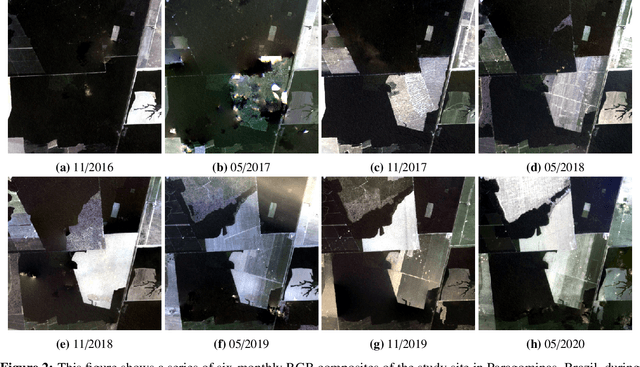

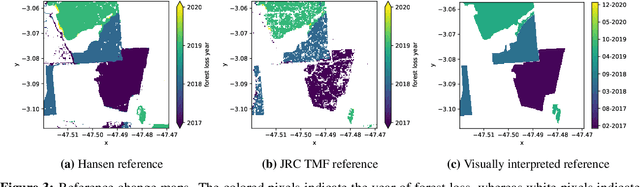

Detecting Deforestation from Sentinel-1 Data in the Absence of Reliable Reference Data

May 24, 2022

Abstract: Forests are vital for the wellbeing of our planet. Large- and small-scale deforestation across the globe is threatening the stability of our climate, forest biodiversity, and therefore the preservation of fragile ecosystems and our natural habitat as a whole. With increasing public interest in climate change issues and forest preservation, a large demand for carbon offsetting, carbon footprint ratings, and environmental impact assessments is emerging. Most often, deforestation maps are created from optical data such as Landsat and MODIS. These maps are not typically available at less than annual intervals due to persistent cloud cover in many parts of the world, especially the tropics, where most of the world's forest biomass is concentrated. Synthetic Aperture Radar (SAR) can fill this gap as it penetrates clouds. We propose and evaluate a novel method for deforestation detection in the absence of reliable reference data, which is often the largest practical hurdle. This method achieves a change detection sensitivity (producer's accuracy) of 96.5% in the study area, although false positives lead to a lower user's accuracy of about 75.7%, with a total balanced accuracy of 90.4%. The change detection accuracy is maintained when adding up to 20% noise to the reference labels. While further work is required to reduce the false positive rate, improve detection delay, and validate this method in additional circumstances, the results show that Sentinel-1 data have the potential to advance the timeliness of global deforestation monitoring.
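The accuracy figures above follow from a binary confusion matrix. The sketch below uses the common definitions: producer's accuracy as sensitivity, user's accuracy as precision, and balanced accuracy as the mean of sensitivity and specificity. Whether the abstract uses exactly these definitions is an assumption, and the function names and counts are made up for illustration.

```python
def change_detection_scores(tp, fp, fn, tn):
    """Compute accuracy measures for the 'deforested' class.

    tp/fp/fn/tn are true/false positive/negative counts.
    """
    producers = tp / (tp + fn)    # sensitivity: share of real change detected
    users = tp / (tp + fp)        # precision: share of detections that are real
    specificity = tn / (tn + fp)  # share of unchanged samples left unflagged
    return {
        "producers_accuracy": producers,
        "users_accuracy": users,
        "specificity": specificity,
        "balanced_accuracy": (producers + specificity) / 2,
    }


def implied_specificity(sensitivity, balanced_accuracy):
    """Invert balanced accuracy = (sensitivity + specificity) / 2."""
    return 2 * balanced_accuracy - sensitivity


# Made-up counts, for illustration only.
print(change_detection_scores(tp=90, fp=30, fn=10, tn=170))

# With the reported 96.5% sensitivity and 90.4% balanced accuracy, this
# definition would imply a specificity of about 0.843.
print(implied_specificity(0.965, 0.904))
```

Balanced accuracy is less affected by class imbalance than overall accuracy, which matters when deforested pixels are a small fraction of the scene.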
