M. D.

Math

ACTIVE: A Deep Model for Sperm and Impurity Detection in Microscopic Videos

Jan 15, 2023

Abstract: The accurate detection of sperms and impurities is a very challenging task, facing problems such as the small size of targets, indefinite target morphologies, low contrast and resolution of the video, and similarity of sperms and impurities. So far, the detection of sperms and impurities still largely relies on traditional image processing and detection techniques, which yield only limited performance and often require manual intervention in the detection process, thereby increasing the time cost and injecting subjective bias into the analysis. Encouraged by the successes of deep learning methods in numerous object detection tasks, here we report a deep learning model based on a Double Branch Feature Extraction Network (DBFEN) and Cross-conjugate Feature Pyramid Networks (CCFPN). DBFEN is designed to extract visual features from tiny objects with a double branch structure, and CCFPN is further introduced to fuse the features extracted by DBFEN to enhance the description of position and high-level semantic information. Our work is the first to introduce deep learning approaches to the detection of sperms and impurities. Experiments show that the highest AP50 for sperm and impurity detection is 91.13% and 59.64%, respectively, leading competing methods by a substantial margin and establishing new state-of-the-art results on this problem.

Deep learning model trained on mobile phone-acquired frozen section images effectively detects basal cell carcinoma

Nov 22, 2020

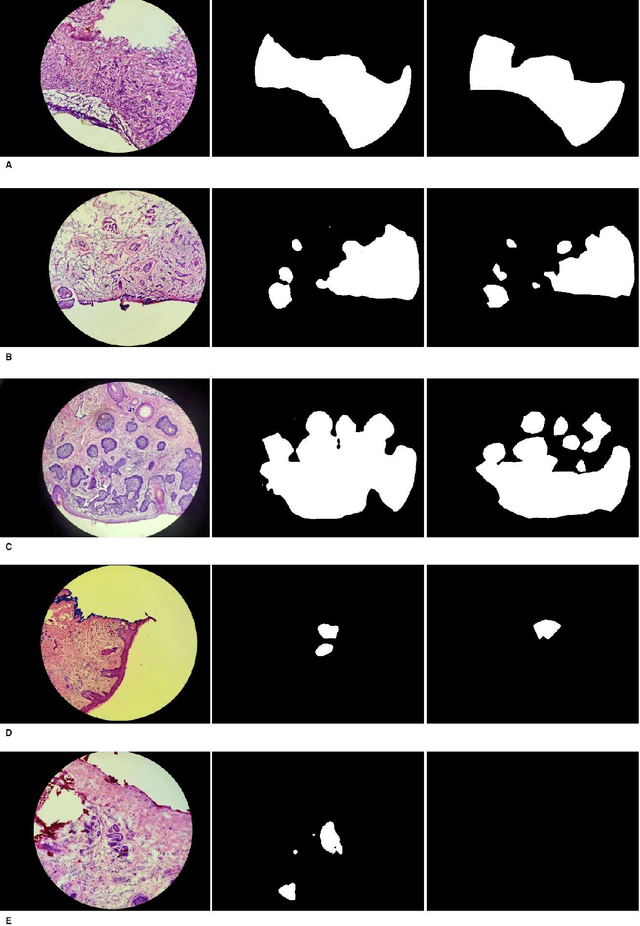

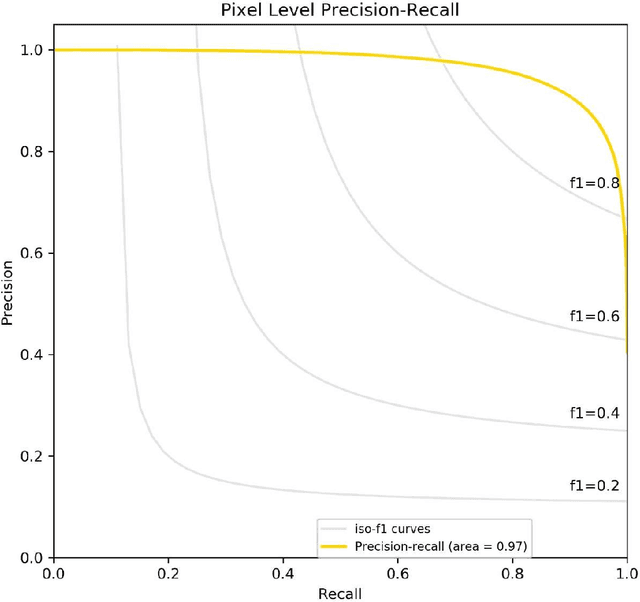

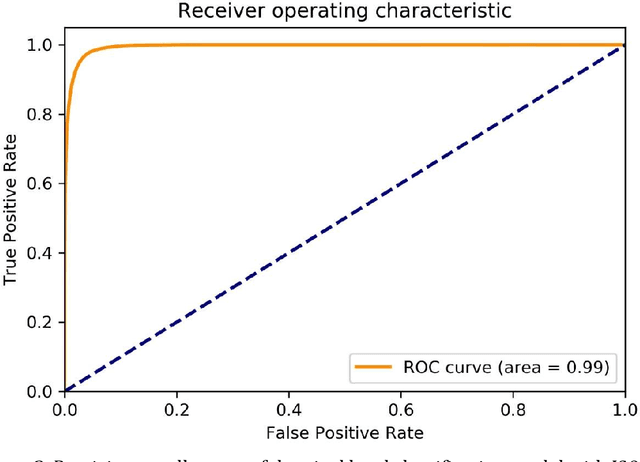

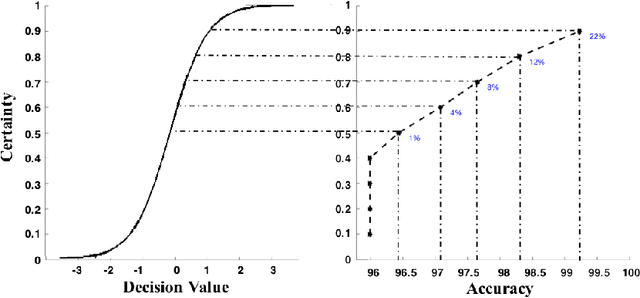

Abstract: Background: Margin assessment of basal cell carcinoma using the frozen section is a common task of pathology intraoperative consultation. Although frequently straightforward, the determination of the presence or absence of basal cell carcinoma on the tissue sections can sometimes be challenging. We explore whether a deep learning model trained on mobile phone-acquired frozen section images can have adequate performance for future deployment. Materials and Methods: One thousand two hundred and forty-one (1241) images of frozen sections performed for basal cell carcinoma margin status were acquired using mobile phones. The photos were taken at 100x magnification (10x objective). The images were downscaled from a 4032 x 3024 pixel resolution to 576 x 432 pixel resolution. The semantic segmentation algorithm Deeplab V3 with an Xception backbone was used for model training. Results: The model uses an image as input and produces a 2-dimensional black and white prediction output of the same dimensions; the areas determined to be basal cell carcinoma are displayed in white on a black background. Any output in which the number of white pixels exceeds 0.5% of the total number of pixels is deemed positive for basal cell carcinoma. On the test set, the model achieves an area under the curve of 0.99 for the receiver operating characteristic curve and 0.97 for the precision-recall curve at the pixel level. The accuracy of classification at the slide level is 96%. Conclusions: The deep learning model trained with mobile phone images shows satisfactory performance characteristics, and thus demonstrates the potential for deployment as a mobile phone app to assist in frozen section interpretation in real time.
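
The image-level decision rule described in this abstract (positive when white pixels exceed 0.5% of all pixels) can be illustrated with a minimal sketch. The function names, the list-of-rows mask representation, and the example mask are assumptions made for illustration; they are not taken from the paper's code.

```python
def white_fraction(mask):
    """Fraction of non-zero (white) pixels in a 2-D mask given as a list of rows."""
    total = sum(len(row) for row in mask)
    white = sum(1 for row in mask for px in row if px)
    return white / total


def is_positive(mask, threshold=0.005):
    """Apply the 0.5% rule: positive when the white fraction exceeds the threshold."""
    return white_fraction(mask) > threshold


# Hypothetical 576 x 432 prediction mask (432 rows, 576 columns), initially all black.
height, width = 432, 576
mask = [[0] * width for _ in range(height)]

# Paint a 40 x 40 white square: 1600 of 248832 pixels, roughly 0.64%.
for r in range(40):
    for c in range(40):
        mask[r][c] = 255

print(is_positive(mask))
```

With this hypothetical mask the white fraction is above 0.5%, so the image would be flagged as positive; a 30 x 30 square (about 0.36%) would not.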

Extracting and Learning Fine-Grained Labels from Chest Radiographs

Nov 18, 2020

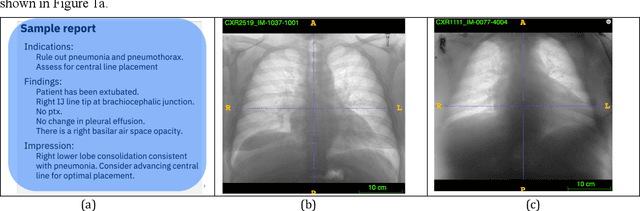

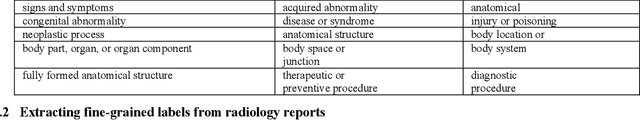

Abstract: Chest radiographs are the most common diagnostic exam in emergency rooms and intensive care units today. Recently, a number of researchers have begun working on large chest X-ray datasets to develop deep learning models for recognition of a handful of coarse finding classes such as opacities, masses and nodules. In this paper, we focus on extracting and learning fine-grained labels for chest X-ray images. Specifically, we develop a new method of extracting fine-grained labels from radiology reports by combining vocabulary-driven concept extraction with phrasal grouping in dependency parse trees for association of modifiers with findings. A total of 457 fine-grained labels depicting the largest spectrum of findings to date were selected, and sufficiently large datasets were acquired to train a new deep learning model designed for fine-grained classification. We show results that indicate a highly accurate label extraction process and reliable learning of fine-grained labels. The resulting network, to our knowledge, is the first to recognize fine-grained descriptions of findings in images covering over nine modifiers including laterality, location, severity, size and appearance.

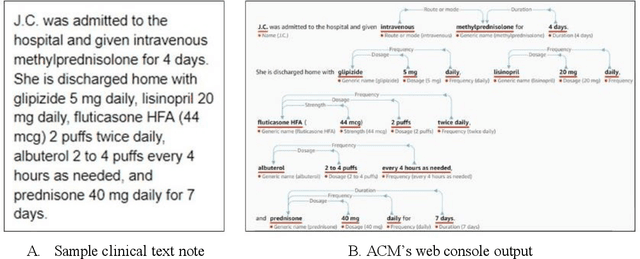

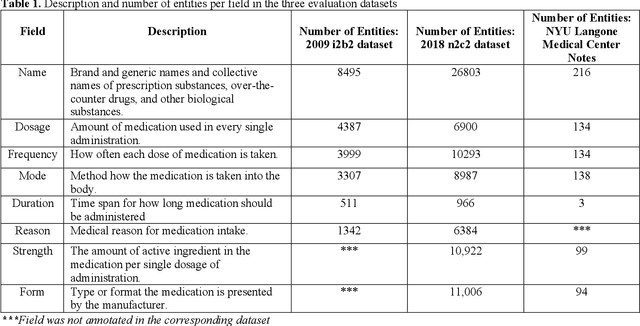

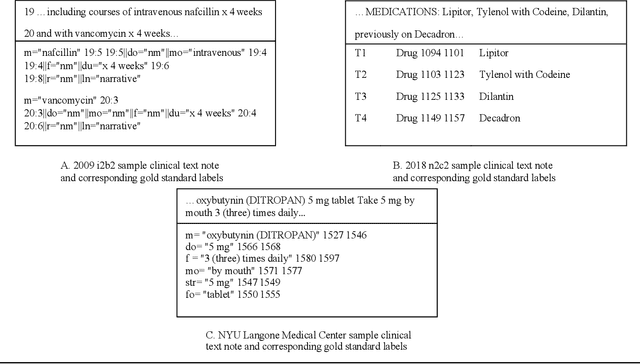

Assessment of Amazon Comprehend Medical: Medication Information Extraction

Feb 02, 2020

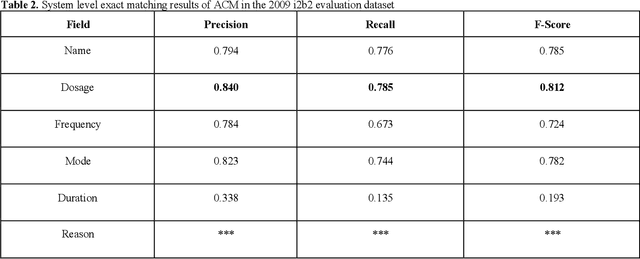

Abstract: On November 27, 2018, Amazon Web Services (AWS) released Amazon Comprehend Medical (ACM), a deep learning based system that automatically extracts clinical concepts (which include anatomy, medical conditions, protected health information (PHI), test names, treatment names, medical procedures, and medications) from clinical text notes. Uptake and trust in any new data product rely on independent validation across benchmark datasets and tools to establish and confirm the expected quality of results. This work focuses on the medication extraction task; in particular, ACM was evaluated using the official test sets from the 2009 i2b2 Medication Extraction Challenge and 2018 n2c2 Track 2: Adverse Drug Events and Medication Extraction in EHRs. Overall, ACM achieved F-scores of 0.768 and 0.828. These scores ranked the lowest when compared to the three best systems in the respective challenges. To further establish the generalizability of its medication extraction performance, a set of random internal clinical text notes from NYU Langone Medical Center was also included in this work. On this corpus, ACM achieved an F-score of 0.753.

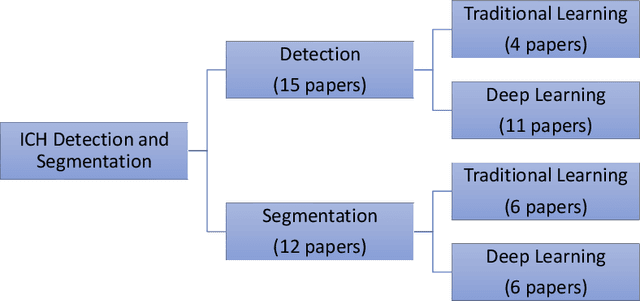

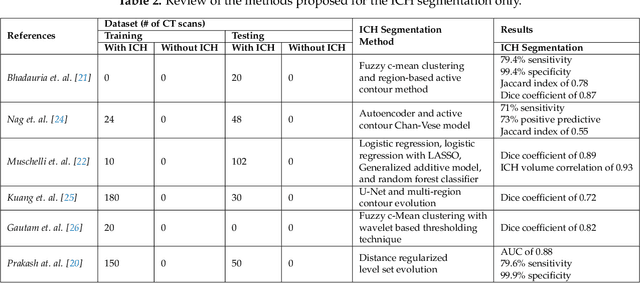

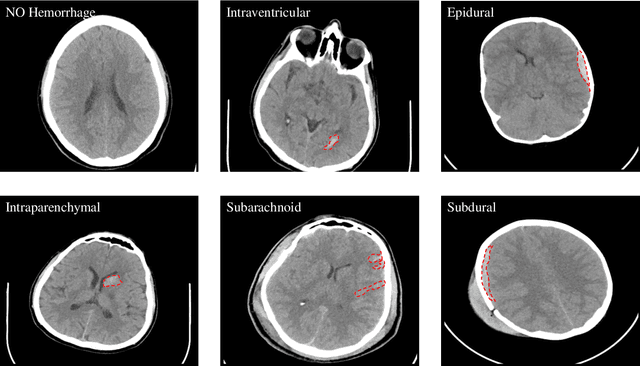

Intracranial Hemorrhage Segmentation Using Deep Convolutional Model

Nov 15, 2019

Abstract: Traumatic brain injuries could cause intracranial hemorrhage (ICH). ICH could lead to disability or death if it is not accurately diagnosed and treated in a timely manner. The current clinical protocol for diagnosing ICH is examination of Computerized Tomography (CT) scans by radiologists to detect ICH and localize its regions. However, this process relies heavily on the availability of an experienced radiologist. In this paper, we designed a study protocol to collect a dataset of 82 CT scans of subjects with traumatic brain injury. Later, the ICH regions were manually delineated in each slice by a consensus decision of two radiologists. Recently, fully convolutional networks (FCN) have been shown to be successful in medical image segmentation. We developed a deep FCN, called U-Net, to segment the ICH regions from the CT scans in a fully automated manner. The method achieved a Dice coefficient of 0.31 for the ICH segmentation based on 5-fold cross-validation. The dataset is publicly available online at the PhysioNet repository for future analysis and comparison.
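
For readers unfamiliar with the metric reported above, the Dice coefficient between a predicted mask A and a reference mask B is 2|A∩B| / (|A| + |B|). The sketch below computes it for flat binary masks; the function name, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details from the paper.

```python
def dice_coefficient(pred, truth):
    """Dice = 2|A∩B| / (|A| + |B|) for two flat binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if size_sum == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / size_sum


# Toy masks (hypothetical): 3 predicted pixels, 3 reference pixels, 2 shared.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]
print(round(dice_coefficient(pred, truth), 3))  # 2*2 / (3+3) = 0.667
```

A value of 1.0 means the two masks coincide exactly, and 0.0 means they share no foreground pixels.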

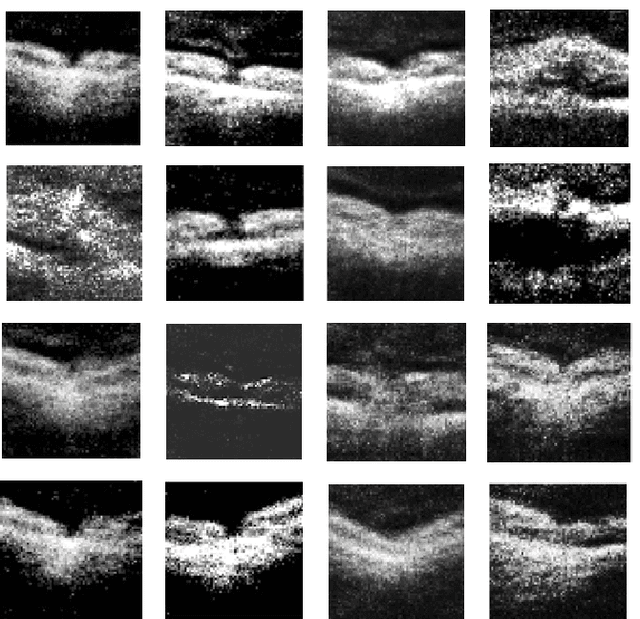

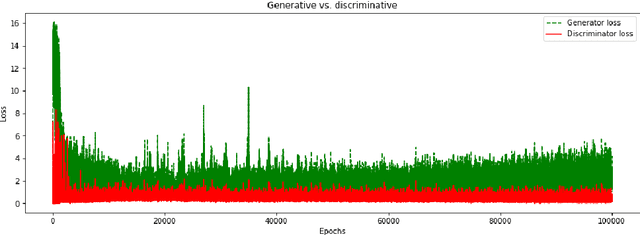

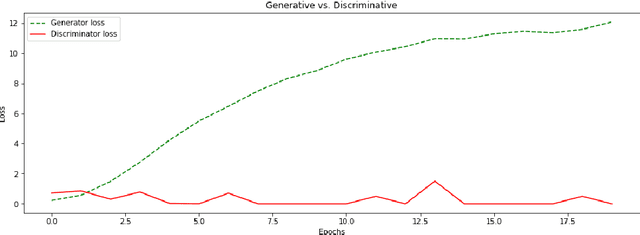

Generative Adversarial Networks Synthesize Realistic OCT Images of the Retina

Feb 18, 2019

Abstract: We report, to our knowledge, the first end-to-end application of Generative Adversarial Networks (GANs) towards the synthesis of Optical Coherence Tomography (OCT) images of the retina. Generative models have gained recent attention for the increasingly realistic images they can synthesize, given a sampling of a data type. In this paper, we apply GANs to a sampling distribution of OCTs of the retina. We observe the synthesis of realistic OCT images depicting recognizable pathology such as macular holes, choroidal neovascular membranes, myopic degeneration, cystoid macular edema, and central serous retinopathy, amongst others. This represents the first such report of its kind. Potential applications of this new technology include surgical simulation, treatment planning, disease prognostication, and accelerating the development of new drugs and surgical procedures to treat retinal disease.

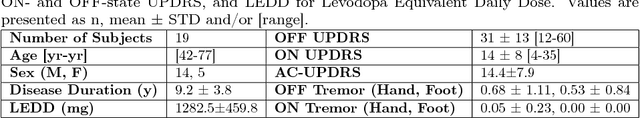

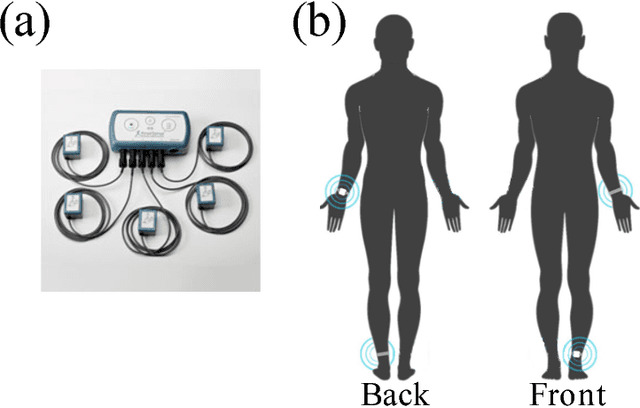

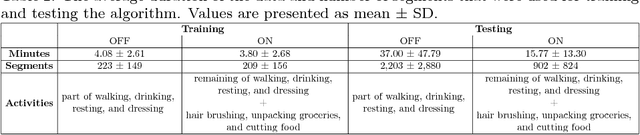

Wearable-based Mediation State Detection in Individuals with Parkinson's Disease

Sep 19, 2018

Abstract: One of the most prevalent complaints of individuals with mid-stage and advanced Parkinson's disease (PD) is the fluctuating response to their medication (i.e., ON state with maximum benefit from medication and OFF state with no benefit from medication). In order to address these motor fluctuations, the patients go through periodic clinical examination where the treating physician reviews the patients' self-report about duration in different medication states and optimizes therapy accordingly. Unfortunately, the patients' self-report can be unreliable and suffer from recall bias. There is a need for a technology-based system that can provide objective measures about the duration in different medication states that can be used by the treating physician to successfully adjust the therapy. In this paper, we developed a medication state detection algorithm to detect medication states using two wearable motion sensors. A series of significant features are extracted from the motion data and used in a classifier that is based on a support vector machine with fuzzy labeling. The developed algorithm is evaluated using a dataset with 19 PD subjects and a total duration of 1,052.24 minutes (17.54 hours). The algorithm resulted in an average classification accuracy of 90.5%, sensitivity of 94.2%, and specificity of 85.4%.
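
The three figures reported above are standard confusion-matrix quantities. The sketch below shows how they are defined for a two-class problem; the function name and the counts are hypothetical, and which medication state (ON or OFF) is treated as the positive class is an assumption, since the abstract does not say.

```python
def classification_metrics(tp, fn, tn, fp):
    """Return (accuracy, sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return accuracy, sensitivity, specificity


# Hypothetical counts of classified windows, not data from the study.
accuracy, sensitivity, specificity = classification_metrics(tp=90, fn=10, tn=80, fp=20)
print(f"accuracy={accuracy:.3f} sensitivity={sensitivity:.3f} specificity={specificity:.3f}")
```

Because accuracy weights the two classes by how often they occur, it generally falls between sensitivity and specificity, as in the reported results.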

Geared Rotationally Identical and Invariant Convolutional Neural Network Systems

Aug 10, 2018

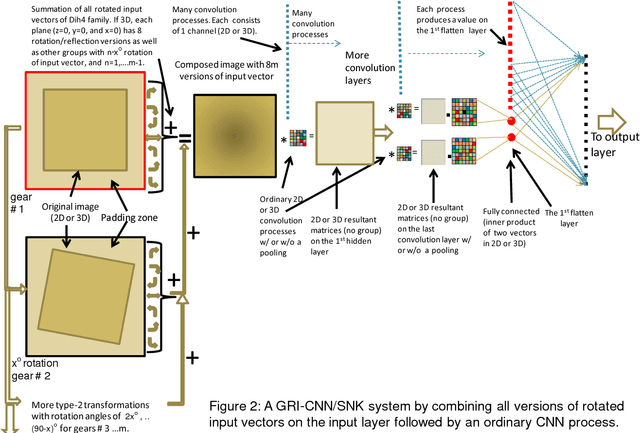

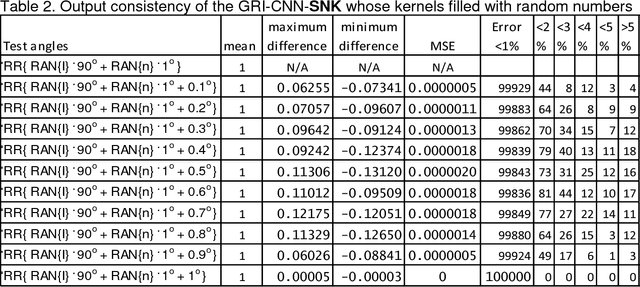

Abstract: Theorems and techniques to form different types of transformationally invariant processing and to produce the same output quantitatively based on either transformationally invariant operators or symmetric operations have recently been introduced by the authors. In this study, we further propose to compose a geared rotationally identical CNN system (GRI-CNN) with a small step angle by connecting networks of participating processes at the first flatten layer. Using an ordinary CNN structure as a base, requirements for constructing a GRI-CNN include the use of either a symmetric input vector or kernels with an angle increment that can form a complete cycle as a "gearwheel". Four basic GRI-CNN structures were studied. Each of them can produce quantitatively identical output results when the rotation angle of the input vector is evenly divisible by the step angle of the gear. Our study showed that when an input vector is rotated by an angle that does not match a step angle, the GRI-CNN can also produce a highly consistent result. With a design using an ultra-fine gear-tooth step angle (e.g., 1 degree or 0.1 degree), all four GRI-CNN systems can be constructed virtually isotropically.

Exploiting Partial Structural Symmetry For Patient-Specific Image Augmentation in Trauma Interventions

Apr 09, 2018

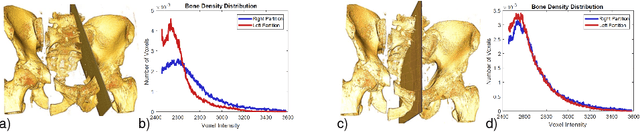

Abstract: In unilateral pelvic fracture reductions, surgeons attempt to reconstruct the bone fragments such that bilateral symmetry in the bony anatomy is restored. We propose to exploit this "structurally symmetric" nature of the pelvic bone, and provide intra-operative image augmentation to assist the surgeon in repairing dislocated fragments. The main challenge is to automatically estimate the desired plane of symmetry within the patient's pre-operative CT. We propose to estimate this plane using a non-linear optimization strategy, by minimizing Tukey's biweight robust estimator, relying on the partial symmetry of the anatomy. Moreover, a regularization term is designed to enforce the similarity of bone density histograms on both sides of this plane, relying on the biological fact that, even if injured, the dislocated bone segments remain within the body. The experimental results demonstrate the performance of the proposed method in estimating this "plane of partial symmetry" using CT images of both healthy and injured anatomy. Examples of unilateral pelvic fractures are used to show how intra-operative X-ray images could be augmented with the forward-projections of the mirrored anatomy, acting as an objective road map for fracture reduction procedures.
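
To illustrate why Tukey's biweight suits partially symmetric data, the sketch below implements the standard biweight loss, which grows for small residuals and saturates at a constant beyond a cutoff c, so grossly mismatched voxels (such as a dislocated fragment) stop influencing the fit. The tuning constant 4.685 is the conventional default for unit-variance residuals and is an assumption here; this is not the paper's full objective, which also includes the histogram regularization term.

```python
def tukey_biweight(residual, c=4.685):
    """Standard Tukey biweight loss; constant (c^2 / 6) for |residual| > c."""
    cap = c * c / 6.0
    if abs(residual) <= c:
        u = residual / c
        return cap * (1.0 - (1.0 - u * u) ** 3)
    return cap


# Small residuals are penalized roughly quadratically; large ones are capped.
for r in (0.0, 1.0, 4.685, 10.0, 100.0):
    print(r, round(tukey_biweight(r), 4))
```

The capped loss means that a residual of 10 and a residual of 100 contribute equally, which is the property a symmetry estimate needs when one side of the anatomy is fractured.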
