Bastian Bier

Learning to Avoid Poor Images: Towards Task-aware C-arm Cone-beam CT Trajectories

Sep 19, 2019

Abstract: Metal artifacts in computed tomography (CT) arise from a mismatch between the physics of image formation and the idealized assumptions made during tomographic reconstruction. These artifacts are particularly strong around metal implants, inhibiting widespread adoption of 3D cone-beam CT (CBCT) despite a clear opportunity for intra-operative verification of implant positioning, e.g. in spinal fusion surgery. On synthetic and real data, we demonstrate that much of the artifact can be avoided by acquiring better data for reconstruction in a task-aware and patient-specific manner, and describe the first step towards the envisioned task-aware CBCT protocol. The traditional short-scan CBCT trajectory is planar, with little room for scene-specific adjustment. We extend this trajectory by autonomously adjusting out-of-plane angulation. This enables C-arm source trajectories that are scene-specific in that they avoid acquiring "poor images", characterized by beam hardening, photon starvation, and noise. The recommendation of ideal out-of-plane angulation is performed on-the-fly using a deep convolutional neural network that regresses a detectability-rank derived from imaging physics.

Exploiting Partial Structural Symmetry For Patient-Specific Image Augmentation in Trauma Interventions

Apr 09, 2018

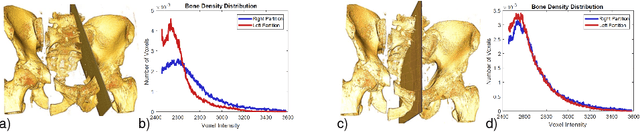

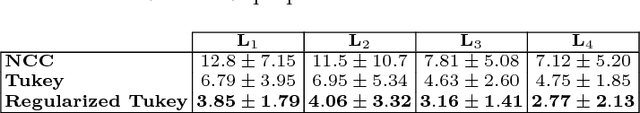

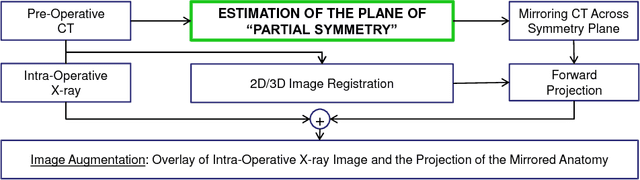

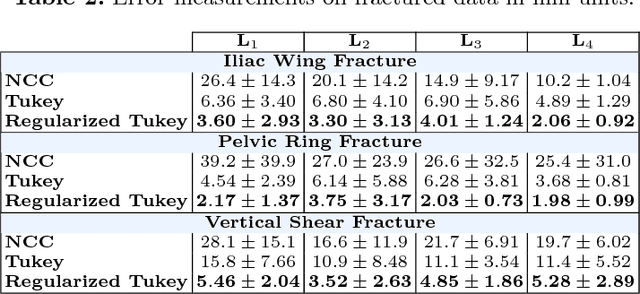

Abstract: In unilateral pelvic fracture reductions, surgeons attempt to reconstruct the bone fragments such that bilateral symmetry in the bony anatomy is restored. We propose to exploit this "structurally symmetric" nature of the pelvic bone, and provide intra-operative image augmentation to assist the surgeon in repairing dislocated fragments. The main challenge is to automatically estimate the desired plane of symmetry within the patient's pre-operative CT. We propose to estimate this plane using a non-linear optimization strategy, by minimizing Tukey's biweight robust estimator, relying on the partial symmetry of the anatomy. Moreover, a regularization term is designed to enforce the similarity of bone density histograms on both sides of this plane, relying on the biological fact that, even if injured, the dislocated bone segments remain within the body. The experimental results demonstrate the performance of the proposed method in estimating this "plane of partial symmetry" using CT images of both healthy and injured anatomy. Examples of unilateral pelvic fractures are used to show how intra-operative X-ray images could be augmented with the forward-projections of the mirrored anatomy, acting as an objective road map for fracture reduction procedures.
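As a rough illustration of the robust cost named in the abstract above, the following Python sketch evaluates Tukey's biweight loss and mirrors a point across a candidate plane. It is not the authors' implementation: the function names, the tuning constant `c = 4.685` (a common default for unit-variance residuals), and the use of intensity differences between original and mirrored samples as residuals are all assumptions made for this example. The histogram regularization term is not shown.

```python
import math


def tukey_biweight(residual, c=4.685):
    """Tukey's biweight loss rho(r); constant at c**2 / 6 once |r| exceeds c."""
    if abs(residual) > c:
        return c * c / 6.0
    u = 1.0 - (residual / c) ** 2
    return (c * c / 6.0) * (1.0 - u ** 3)


def mirror_point(p, normal, offset):
    """Reflect p across the plane {x : normal . x = offset}.

    The normal is assumed to have unit length.
    """
    d = sum(pi * ni for pi, ni in zip(p, normal)) - offset
    return tuple(pi - 2.0 * d * ni for pi, ni in zip(p, normal))


def symmetry_cost(intensity_pairs, c=4.685):
    """Sum the robust loss over (original, mirrored) intensity pairs (hypothetical residual choice)."""
    return sum(tukey_biweight(a - b, c) for a, b in intensity_pairs)
```

Because the loss saturates for large residuals, samples that have no symmetric counterpart (for example, a dislocated fragment) contribute only a bounded amount to the cost, which is why a robust estimator suits partially symmetric anatomy.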

Closing the Calibration Loop: An Inside-out-tracking Paradigm for Augmented Reality in Orthopedic Surgery

Mar 22, 2018

Abstract: In percutaneous orthopedic interventions, the surgeon attempts to reduce and fixate fractures in bony structures. The complexity of these interventions arises when the surgeon performs the challenging task of navigating surgical tools percutaneously only under the guidance of 2D interventional X-ray imaging. Moreover, the intra-operatively acquired data is only visualized indirectly on external displays. In this work, we propose a flexible Augmented Reality (AR) paradigm using optical see-through head-mounted displays. The key technical contribution of this work is the marker-less and dynamic tracking concept, which closes the calibration loop between patient, C-arm and the surgeon. This calibration is enabled using Simultaneous Localization and Mapping of the environment of the operating theater. In return, the proposed solution provides in situ visualization of pre- and intra-operative 3D medical data directly at the surgical site. We present a pre-clinical evaluation of a prototype system, and report errors for calibration and target registration. Finally, we demonstrate the usefulness of the proposed inside-out tracking system in achieving a "bull's eye" view for C-arm-guided punctures. This AR solution provides an intuitive visualization of the anatomy and can simplify the hand-eye coordination for the orthopedic surgeon.

X-ray-transform Invariant Anatomical Landmark Detection for Pelvic Trauma Surgery

Mar 22, 2018

Abstract: X-ray image guidance enables percutaneous alternatives to complex procedures. Unfortunately, the indirect view onto the anatomy, in addition to projective simplification, substantially increases the task-load for the surgeon. Additional 3D information such as knowledge of anatomical landmarks can benefit surgical decision making in complicated scenarios. Automatic detection of these landmarks in transmission imaging is challenging since image-domain features characteristic of a certain landmark change substantially depending on the viewing direction. Consequently and to the best of our knowledge, the above problem has not yet been addressed. In this work, we present a method to automatically detect anatomical landmarks in X-ray images independent of the viewing direction. To this end, a sequential prediction framework based on convolutional layers is trained on synthetically generated data of the pelvic anatomy to predict 23 landmarks in single X-ray images. View independence is contingent on training conditions and, here, is achieved on a spherical segment covering (120 × 90) degrees in LAO/RAO and CRAN/CAUD, respectively, centered around AP. On synthetic data, the proposed approach achieves a mean prediction error of 5.6 ± 4.5 mm. We demonstrate that the proposed network is immediately applicable to clinically acquired data of the pelvis. In particular, we show that our intra-operative landmark detection together with pre-operative CT enables X-ray pose estimation which, ultimately, benefits initialization of image-based 2D/3D registration.
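A figure of the form "mean ± standard deviation" for landmark prediction error, as reported in the abstract above, can be computed along the following lines. This is only a sketch: the abstract does not state how distances were measured or which standard deviation was used, so the Euclidean distance, the population standard deviation, and the function names here are assumptions.

```python
import math
from statistics import mean, pstdev


def landmark_errors(predicted, reference):
    """Euclidean distance between each predicted landmark and its reference position."""
    return [math.dist(p, r) for p, r in zip(predicted, reference)]


def summarize(errors):
    """Return (mean, population standard deviation) of the per-landmark errors."""
    return mean(errors), pstdev(errors)


# Toy coordinates in mm, invented purely for illustration.
errors = landmark_errors([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
avg, spread = summarize(errors)
```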

DeepDRR -- A Catalyst for Machine Learning in Fluoroscopy-guided Procedures

Mar 22, 2018

Abstract: Machine learning-based approaches outperform competing methods in most disciplines relevant to diagnostic radiology. Interventional radiology, however, has not yet benefited substantially from the advent of deep learning, for two reasons in particular: 1) most images acquired during the procedure are never archived and are thus not available for learning, and 2) even if they were available, annotations would be a severe challenge due to the vast amounts of data. When considering fluoroscopy-guided procedures, an interesting alternative to true interventional fluoroscopy is in silico simulation of the procedure from 3D diagnostic CT. In this case, labeling is comparatively easy and potentially readily available, yet the appropriateness of the resulting synthetic data depends on the forward model. In this work, we propose DeepDRR, a framework for fast and realistic simulation of fluoroscopy and digital radiography from CT scans, tightly integrated with the software platforms native to deep learning. We use machine learning for material decomposition and scatter estimation in 3D and 2D, respectively, combined with analytic forward projection and noise injection to achieve the required performance. On the example of anatomical landmark detection in X-ray images of the pelvis, we demonstrate that machine learning models trained on DeepDRRs generalize to unseen clinically acquired data without the need for re-training or domain adaptation. Our results are promising and promote the establishment of machine learning in fluoroscopy-guided procedures.
