Jonas Hajek

SurgT challenge: Benchmark of Soft-Tissue Trackers for Robotic Surgery

Feb 28, 2023

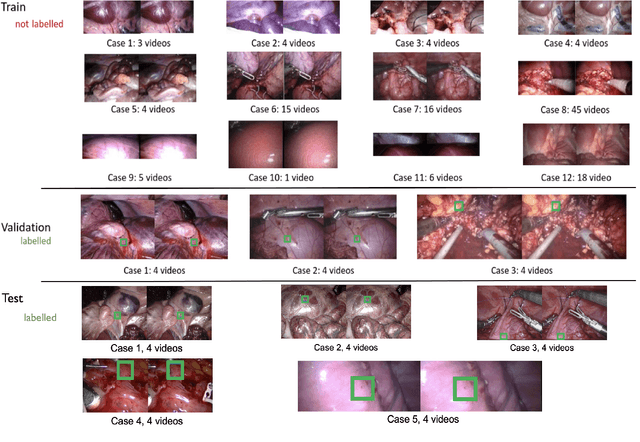

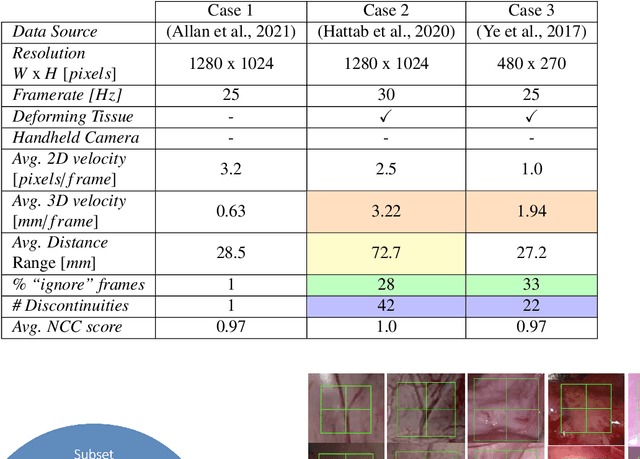

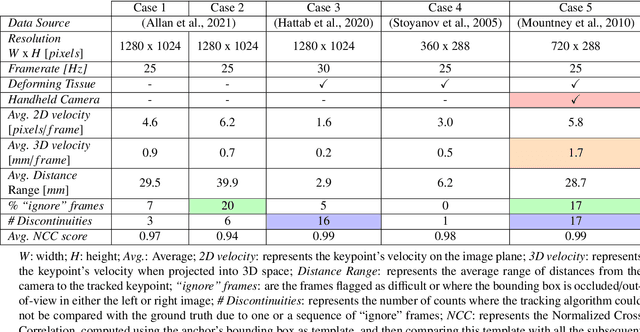

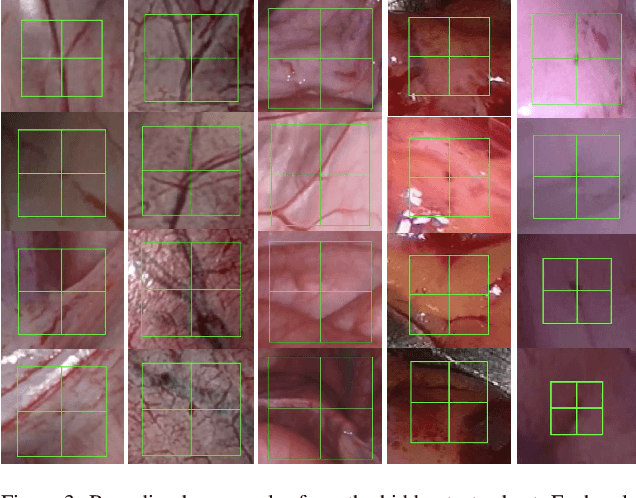

Abstract: This paper introduces the "SurgT: Surgical Tracking" challenge, which was organised in conjunction with the 25th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2022). The challenge was created for two purposes: (1) to establish the first standardised benchmark for the research community to assess soft-tissue trackers; and (2) to encourage the development of unsupervised deep learning methods, given the lack of annotated data in surgery. A dataset of 157 stereo endoscopic videos from 20 clinical cases, along with stereo camera calibration parameters, has been provided. The participants were tasked with developing algorithms to track a bounding box on stereo endoscopic videos. At the end of the challenge, the developed methods were assessed on a previously hidden test subset. This assessment uses benchmarking metrics that were purposely developed for this challenge and are now available online. The teams were ranked according to their Expected Average Overlap (EAO) score, which is a weighted average of the Intersection over Union (IoU) scores. The performance evaluation study verifies the efficacy of unsupervised deep learning algorithms in tracking soft tissue. The best-performing method achieved an EAO score of 0.583 on the test subset. The dataset and benchmarking tool created for this challenge have been made publicly available. This challenge is expected to contribute to the development of autonomous robotic surgery and other digital surgical technologies.
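For readers unfamiliar with the metric named in the abstract, the following is a minimal sketch of how IoU between two bounding boxes can be computed and how a set of IoU scores can be combined into a weighted average. The box layout `(x_min, y_min, x_max, y_max)`, the function names, and the weights are assumptions made for illustration; the abstract does not specify the weighting used by the challenge's EAO score, and this is not the official benchmarking tool.

```python
from typing import Sequence, Tuple

# Assumed box layout for this sketch: (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Width and height of the overlap region, clamped at zero if disjoint
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def weighted_average(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Generic weighted mean; the weights here are hypothetical, not the challenge's."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(s * w for s, w in zip(scores, weights)) / total


if __name__ == "__main__":
    predicted = (0.0, 0.0, 2.0, 2.0)
    ground_truth = (1.0, 1.0, 3.0, 3.0)
    print(iou(predicted, ground_truth))
    print(weighted_average([1.0, 0.5], [1.0, 1.0]))
```

In this sketch, two boxes that do not overlap score 0 and identical boxes score 1, so a higher average indicates that the tracked box stays closer to the ground truth across frames.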

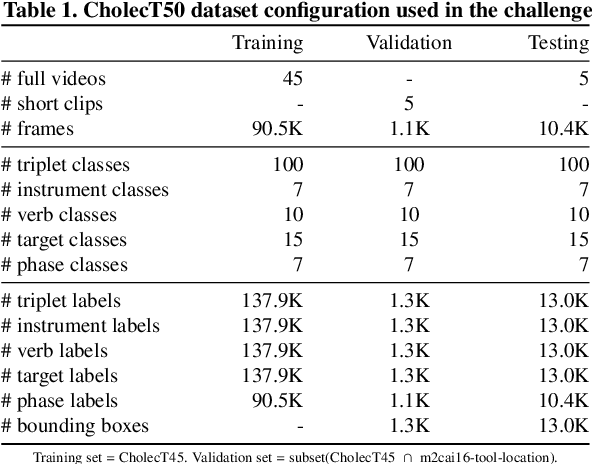

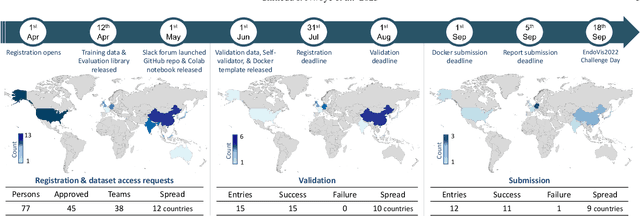

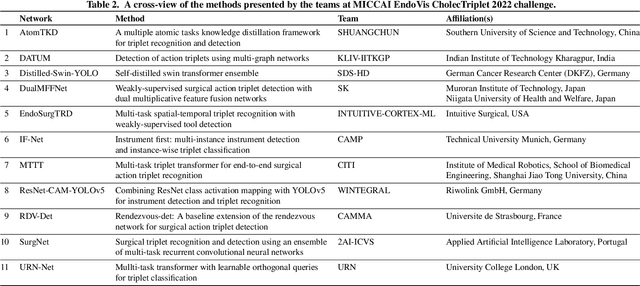

CholecTriplet2022: Show me a tool and tell me the triplet -- an endoscopic vision challenge for surgical action triplet detection

Feb 13, 2023

Abstract: Formalizing surgical activities as triplets of the used instruments, actions performed, and target anatomies is becoming a gold standard approach for surgical activity modeling. The benefit is that this formalization helps to obtain a more detailed understanding of tool-tissue interaction, which can be used to develop better Artificial Intelligence assistance for image-guided surgery. Earlier efforts and the CholecTriplet challenge introduced in 2021 have put together techniques aimed at recognizing these triplets from surgical footage. Also estimating the spatial locations of the triplets would offer more precise intraoperative context-aware decision support for computer-assisted intervention. This paper presents the CholecTriplet2022 challenge, which extends surgical action triplet modeling from recognition to detection. It includes weakly-supervised bounding box localization of every visible surgical instrument (or tool), as the key actors, and the modeling of each tool-activity in the form of an <instrument, verb, target> triplet. The paper describes a baseline method and 10 new deep learning algorithms presented at the challenge to solve the task. It also provides thorough methodological comparisons of the methods, an in-depth analysis of the obtained results, their significance, and useful insights for future research directions and applications in surgery.

Augmented Reality-based Feedback for Technician-in-the-loop C-arm Repositioning

Jun 22, 2018

Abstract: Interventional C-arm imaging is crucial to percutaneous orthopedic procedures as it enables the surgeon to monitor the progress of surgery at the anatomy level. Minimally invasive interventions require repeated acquisition of X-ray images from different anatomical views to verify tool placement. Achieving and reproducing these views often comes at the cost of increased surgical time and radiation dose to both patient and staff. This work proposes a marker-free "technician-in-the-loop" Augmented Reality (AR) solution for C-arm repositioning. The X-ray technician operating the C-arm interventionally is equipped with a head-mounted display capable of recording desired C-arm poses in 3D via an integrated infrared sensor. For C-arm repositioning to a particular target view, the recorded C-arm pose is restored as a virtual object and visualized in an AR environment, serving as a perceptual reference for the technician. We conduct experiments in a setting simulating orthopedic trauma surgery. Our proof-of-principle findings indicate that the proposed system can decrease the 2.76 X-ray images required per desired view down to zero, suggesting substantial reductions of radiation dose during C-arm repositioning. The proposed AR solution is a first step towards facilitating communication between the surgeon and the surgical staff, improving the quality of surgical image acquisition, and enabling context-aware guidance for surgery rooms of the future. The concept of technician-in-the-loop design will become relevant to various interventions considering the expected advancements of sensing and wearable computing in the near future.

Closing the Calibration Loop: An Inside-out-tracking Paradigm for Augmented Reality in Orthopedic Surgery

Mar 22, 2018

Abstract: In percutaneous orthopedic interventions, the surgeon attempts to reduce and fixate fractures in bony structures. The complexity of these interventions arises when the surgeon performs the challenging task of navigating surgical tools percutaneously under the guidance of 2D interventional X-ray imaging alone. Moreover, the intra-operatively acquired data is only visualized indirectly on external displays. In this work, we propose a flexible Augmented Reality (AR) paradigm using optical see-through head-mounted displays. The key technical contribution of this work is the marker-less and dynamic tracking concept, which closes the calibration loop between patient, C-arm and the surgeon. This calibration is enabled using Simultaneous Localization and Mapping of the environment of the operating theater. In return, the proposed solution provides in situ visualization of pre- and intra-operative 3D medical data directly at the surgical site. We demonstrate pre-clinical evaluation of a prototype system, and report errors for calibration and target registration. Finally, we demonstrate the usefulness of the proposed inside-out tracking system in achieving "bull's eye" view for C-arm-guided punctures. This AR solution provides an intuitive visualization of the anatomy and can simplify the hand-eye coordination for the orthopedic surgeon.
