Joao Cartucho

SurgRIPE challenge: Benchmark of Surgical Robot Instrument Pose Estimation

Jan 06, 2025

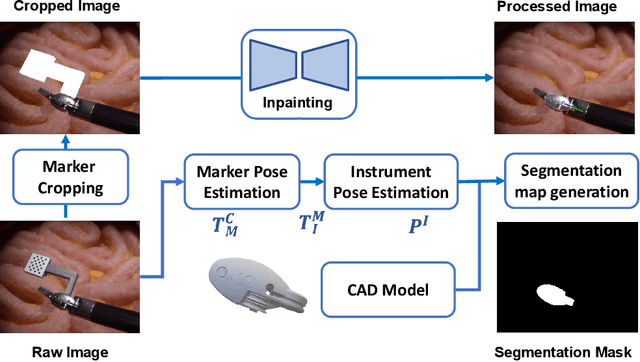

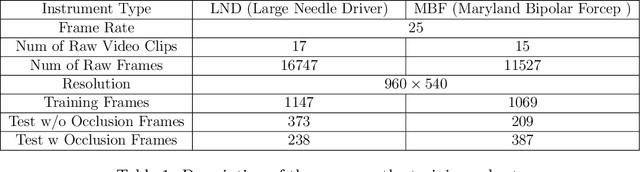

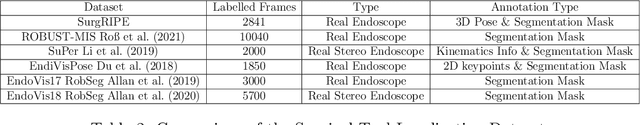

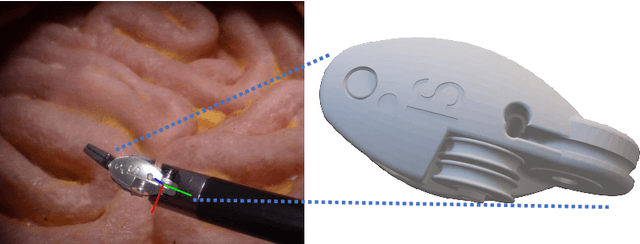

Abstract: Accurate instrument pose estimation is a crucial step towards the future of robotic surgery, enabling applications such as autonomous surgical task execution. Vision-based methods for surgical instrument pose estimation provide a practical approach to tool tracking, but they often require markers to be attached to the instruments. Recently, more research has focused on the development of markerless methods based on deep learning. However, acquiring realistic surgical data with the ground truth instrument poses required for deep learning training is challenging. To address these issues, we introduce the Surgical Robot Instrument Pose Estimation (SurgRIPE) challenge, hosted at the 26th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) in 2023. The objectives of this challenge are: (1) to provide the surgical vision community with realistic surgical video data paired with ground truth instrument poses, and (2) to establish a benchmark for evaluating markerless pose estimation methods. The challenge led to the development of several novel algorithms that showcased improved accuracy and robustness over existing methods. The performance evaluation study on the SurgRIPE dataset highlights the potential of these advanced algorithms to be integrated into robotic surgery systems, paving the way for more precise and autonomous surgical procedures. The SurgRIPE challenge has successfully established a new benchmark for the field, encouraging further research and development in surgical robot instrument pose estimation.

SurgT challenge: Benchmark of Soft-Tissue Trackers for Robotic Surgery

Feb 28, 2023

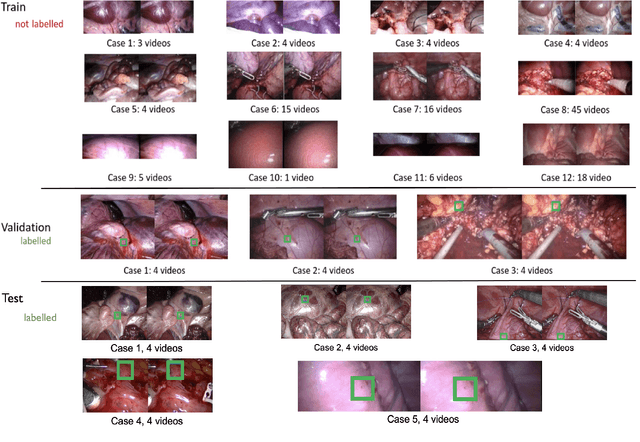

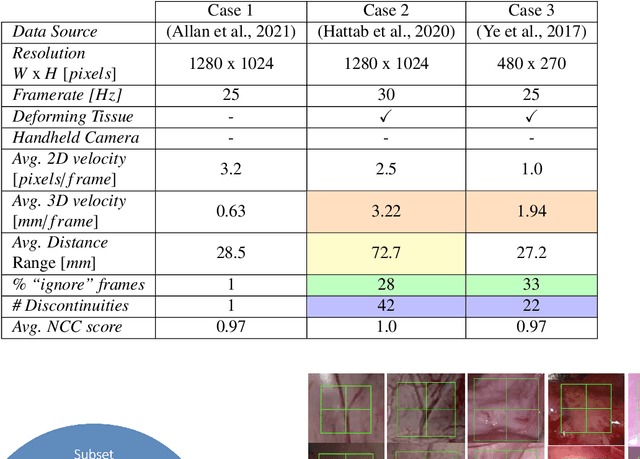

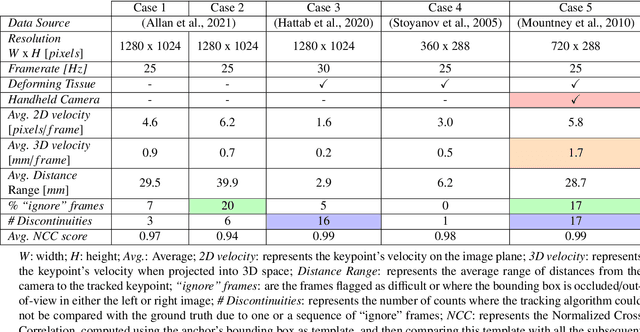

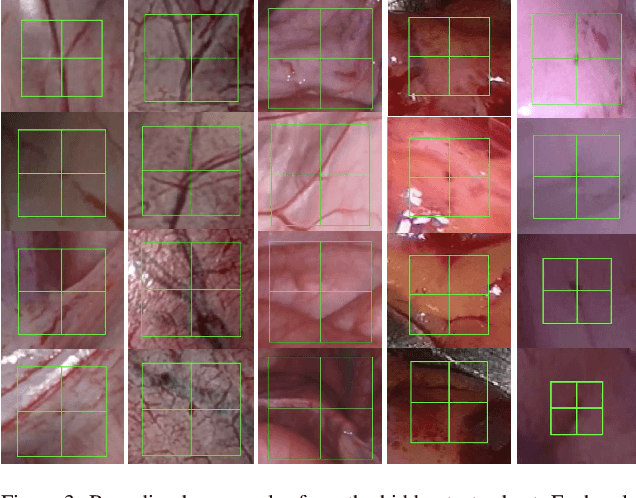

Abstract: This paper introduces the "SurgT: Surgical Tracking" challenge, which was organised in conjunction with the 25th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2022). The challenge was created for two purposes: (1) to establish the first standardised benchmark for the research community to assess soft-tissue trackers; and (2) to encourage the development of unsupervised deep learning methods, given the lack of annotated data in surgery. A dataset of 157 stereo endoscopic videos from 20 clinical cases, along with stereo camera calibration parameters, was provided. The participants were tasked with developing algorithms to track a bounding box on stereo endoscopic videos. At the end of the challenge, the developed methods were assessed on a previously hidden test subset. This assessment uses benchmarking metrics that were purposely developed for this challenge and are now available online. The teams were ranked according to their Expected Average Overlap (EAO) score, which is a weighted average of the Intersection over Union (IoU) scores. The performance evaluation study verifies the efficacy of unsupervised deep learning algorithms in tracking soft tissue. The best-performing method achieved an EAO score of 0.583 on the test subset. The dataset and benchmarking tool created for this challenge have been made publicly available. This challenge is expected to contribute to the development of autonomous robotic surgery and other digital surgical technologies.
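The abstract describes EAO as a weighted average of IoU scores without giving the weighting scheme. The sketch below only illustrates the two ingredients: IoU between two axis-aligned bounding boxes, and a generic weighted average. The `(x, y, w, h)` box layout, the function names, and the weights are assumptions made for illustration; this is not the challenge's official benchmarking tool.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes.

    Boxes are (x, y, w, h) with (x, y) the top-left corner.
    This layout is an assumption made for illustration.
    """
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def weighted_average_iou(ious, weights):
    """Generic weighted average of IoU scores.

    The actual EAO weighting is not stated in the abstract, so the
    weights here are hypothetical and supplied by the caller.
    """
    if len(ious) != len(weights):
        raise ValueError("ious and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(i * w for i, w in zip(ious, weights)) / total
```

For example, `iou((0, 0, 2, 2), (1, 1, 2, 2))` has an intersection of area 1 and a union of area 7, so the score is 1/7.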

Regularizing disparity estimation via multi task learning with structured light reconstruction

Jan 19, 2023

Abstract: 3D reconstruction is a useful tool for surgical planning and guidance. However, the lack of available medical data stunts research and development in this field, as supervised deep learning methods for accurate disparity estimation rely heavily on large datasets containing ground truth information. Alternative approaches to supervision have been explored, such as self-supervision, which can reduce or remove entirely the need for ground truth. However, no proposed alternatives have demonstrated performance capabilities close to what would be expected from a supervised setup. This work aims to alleviate this issue. In this paper, we investigate the learning of structured light projections to enhance the development of direct disparity estimation networks. We show for the first time that it is possible to accurately learn the projection of structured light on a scene, implicitly learning disparity. Secondly, we explore the use of a multi-task learning (MTL) framework for the joint training of structured light and disparity. We present results which show that MTL with structured light improves disparity training without increasing the number of model parameters. Our MTL setup outperformed the single-task learning (STL) network in every validation test. Notably, in the medical generalisation test, the STL error was 1.4 times worse than that of the best MTL performance. The benefit of using MTL is emphasised when the training data is limited. A dataset containing stereoscopic images, disparity maps and structured light projections on medical phantoms and ex vivo tissue was created for evaluation together with virtual scenes. This dataset will be made publicly available in the future.
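As background for why disparity estimation matters for 3D reconstruction: in a rectified stereo pair, depth follows from disparity as Z = f · B / d, with f the focal length in pixels, B the stereo baseline, and d the disparity in pixels. The sketch below shows this standard relation only; the function name and the example values are hypothetical and are not taken from the paper.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Depth (in mm) of a point seen by a rectified stereo pair.

    Standard pinhole relation Z = f * B / d. All parameter names are
    illustrative; the paper's own pipeline is not described here.
    """
    if disparity_px <= 0:
        # Zero or negative disparity carries no finite depth.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


# Hypothetical values: 1000 px focal length, 5 mm baseline, 50 px disparity.
example_depth_mm = depth_from_disparity(50.0, 1000.0, 5.0)
```

Because depth is inversely proportional to disparity, a fixed disparity error translates into a larger depth error for distant points than for nearby ones.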

Caveats on the first-generation da Vinci Research Kit: latent technical constraints and essential calibrations

Oct 24, 2022

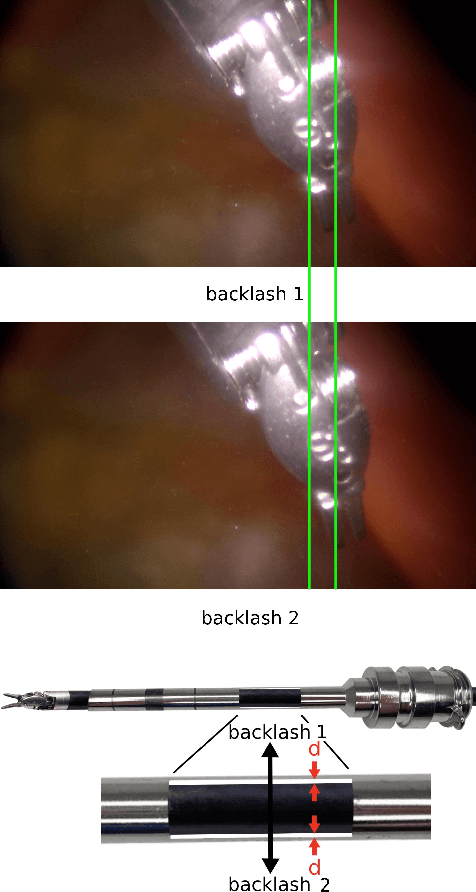

Abstract: Telesurgical robotic systems provide a well-established form of assistance in the operating theater, with evidence of growing uptake in recent years. Until now, the da Vinci surgical system (Intuitive Surgical Inc, Sunnyvale, California) has been the most widely adopted robot of this kind, with more than 6,700 systems in current clinical use worldwide. To accelerate research on robotic-assisted surgery, the retired first-generation da Vinci robots have been redeployed for research use as "da Vinci Research Kits" (dVRKs), which have been distributed to research institutions around the world to support both training and research in the sector. In the past ten years, a great amount of research on the dVRK has been carried out across a vast range of research topics. During this extensive and distributed process, technical issues buried deep within the dVRK research and development architecture have been identified, and they recur in dVRK user feedback regardless of the breadth and disparity of the research directions involved. This paper gathers and analyzes the most significant of these, with a focus on the technical constraints of the first-generation dVRK, which both existing and prospective users should be aware of before embarking on dVRK-related research. The hope is that this review will aid users in identifying and addressing common limitations of the systems promptly, thus helping to accelerate progress in the field.

Autonomous Tissue Scanning under Free-Form Motion for Intraoperative Tissue Characterisation

May 22, 2020

Abstract: In Minimally Invasive Surgery (MIS), tissue scanning with imaging probes is required for subsurface visualisation to characterise the state of the tissue. However, scanning of large tissue surfaces in the presence of deformation is a challenging task for the surgeon. Recently, robot-assisted local tissue scanning has been investigated for motion stabilisation of imaging probes to facilitate the capturing of good quality images and reduce the surgeon's cognitive load. Nonetheless, these approaches require the tissue surface to be static or to deform with periodic motion. To eliminate these assumptions, we propose a visual servoing framework for autonomous tissue scanning, able to deal with free-form tissue deformation. The 3D structure of the surgical scene is recovered and a feature-based method is proposed to estimate the motion of the tissue in real time. A desired scanning trajectory is manually defined on a reference frame and continuously updated using projective geometry to follow the tissue motion and control the movement of the robotic arm. The advantage of the proposed method is that it does not require the learning of the tissue motion prior to scanning and can deal with free-form deformation. We deployed this framework on the da Vinci surgical robot using the da Vinci Research Kit (dVRK) for Ultrasound tissue scanning. Since the framework does not rely on information from the Ultrasound data, it can be easily extended to other probe-based imaging modalities.
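The abstract says the scanning trajectory is defined on a reference frame and updated using projective geometry, without giving the exact formulation. One common way to carry 2D points from a reference image into the current image is a 3x3 homography, sketched below. Treating the update as a single homography is an assumption made for illustration, and the function name and matrix values are hypothetical; the paper's method may differ.

```python
def apply_homography(H, points):
    """Map 2D points through a 3x3 homography H (nested lists, row-major).

    Each point (x, y) is lifted to homogeneous coordinates (x, y, 1),
    multiplied by H, and divided by the resulting third coordinate.
    """
    mapped = []
    for x, y in points:
        u = H[0][0] * x + H[0][1] * y + H[0][2]
        v = H[1][0] * x + H[1][1] * y + H[1][2]
        w = H[2][0] * x + H[2][1] * y + H[2][2]
        if abs(w) < 1e-12:
            raise ValueError("point maps to infinity under H")
        mapped.append((u / w, v / w))
    return mapped


# Hypothetical trajectory on the reference frame and a pure-translation
# homography standing in for the estimated tissue motion.
reference_trajectory = [(1.0, 1.0), (2.0, 1.0), (3.0, 1.0)]
H_translation = [[1.0, 0.0, 5.0],
                 [0.0, 1.0, -2.0],
                 [0.0, 0.0, 1.0]]
updated_trajectory = apply_homography(H_translation, reference_trajectory)
```

In a tracking loop, a matrix like `H_translation` would be re-estimated from matched features at every frame, and the updated trajectory would feed the robot controller.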
