Russell Taylor

Signal or 'Noise': Human Reactions to Robot Errors in the Wild

Feb 04, 2026

Abstract:In the real world, robots frequently make errors, yet little is known about people's social responses to errors outside of lab settings. Prior work has shown that social signals are reliable and useful for error management in constrained interactions, but it is unclear if this holds in the real world - especially with a non-social robot in repeated and group interactions with successive or propagated errors. To explore this, we built a coffee robot and conducted a public field deployment ($N = 49$). We found that participants consistently expressed varied social signals in response to errors and other stimuli, particularly during group interactions. Our findings suggest that social signals in the wild are rich (with participants volunteering information about the interaction), but "noisy." We discuss lessons, benefits, and challenges for using social signals in real-world HRI.

Pun Intended: Multi-Agent Translation of Wordplay with Contrastive Learning and Phonetic-Semantic Embeddings

Jul 09, 2025

Abstract:Translating wordplay across languages presents unique challenges that have long confounded both professional human translators and machine translation systems. This research proposes a novel approach for translating puns from English to French by combining state-of-the-art large language models with specialized techniques for wordplay generation. Our methodology employs a three-stage approach. First, we establish a baseline using multiple frontier large language models with feedback based on a new contrastive learning dataset. Second, we implement a guided chain-of-thought pipeline with combined phonetic-semantic embeddings. Third, we implement a multi-agent generator-discriminator framework for evaluating and regenerating puns with feedback. Moving beyond the limitations of literal translation, our methodology's primary objective is to capture the linguistic creativity and humor of the source text wordplay, rather than simply duplicating its vocabulary. Our best runs earned first and second place in the CLEF JOKER 2025 Task 2 competition where they were evaluated manually by expert native French speakers. This research addresses a gap between translation studies and computational linguistics by implementing linguistically-informed techniques for wordplay translation, advancing our understanding of how language models can be leveraged to handle the complex interplay between semantic ambiguity, phonetic similarity, and the implicit cultural and linguistic awareness needed for successful humor.

Robot Error Awareness Through Human Reactions: Implementation, Evaluation, and Recommendations

Jan 13, 2025

Abstract:Effective error detection is crucial to prevent task disruption and maintain user trust. Traditional methods often rely on task-specific models or user reporting, which can be inflexible or slow. Recent research suggests social signals, naturally exhibited by users in response to robot errors, can enable more flexible, timely error detection. However, most studies rely on post hoc analysis, leaving their real-time effectiveness uncertain and lacking user-centric evaluation. In this work, we developed a proactive error detection system that combines user behavioral signals (facial action units and speech), user feedback, and error context for automatic error detection. In a study (N = 28), we compared our proactive system to a status quo reactive approach. Results show our system 1) reliably and flexibly detects errors, 2) detects errors faster than the reactive approach, and 3) is perceived more favorably by users than the reactive one. We discuss recommendations for enabling robot error awareness in future HRI systems.

Seamless Augmented Reality Integration in Arthroscopy: A Pipeline for Articular Reconstruction and Guidance

Oct 01, 2024

Abstract:Arthroscopy is a minimally invasive surgical procedure used to diagnose and treat joint problems. The clinical workflow of arthroscopy typically involves inserting an arthroscope into the joint through a small incision, during which surgeons navigate and operate largely by relying on their visual assessment through the arthroscope. However, the arthroscope's restricted field of view and lack of depth perception pose challenges in navigating complex articular structures and achieving surgical precision during procedures. Aiming at enhancing intraoperative awareness, we present a robust pipeline that incorporates simultaneous localization and mapping, depth estimation, and 3D Gaussian splatting to realistically reconstruct intra-articular structures solely based on monocular arthroscope video. Extending 3D reconstruction to Augmented Reality (AR) applications, our solution offers AR assistance for articular notch measurement and annotation anchoring in a human-in-the-loop manner. Compared to traditional Structure-from-Motion and Neural Radiance Field-based methods, our pipeline achieves dense 3D reconstruction and competitive rendering fidelity with explicit 3D representation in 7 minutes on average. When evaluated on four phantom datasets, our method achieves RMSE = 2.21 mm reconstruction error, PSNR = 32.86 and SSIM = 0.89 on average. Because our pipeline enables AR reconstruction and guidance directly from monocular arthroscopy without any additional data and/or hardware, our solution may hold the potential for enhancing intraoperative awareness and facilitating surgical precision in arthroscopy. Our AR measurement tool achieves accuracy within 1.59 ± 1.81 mm and the AR annotation tool achieves a mIoU of 0.721.
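
For readers unfamiliar with the metrics quoted in this abstract, the following is a minimal sketch of how RMSE, PSNR, and mean IoU are commonly defined. It is not the authors' evaluation code. The function names, the flattened-list inputs, and the `max_value=255.0` intensity range are assumptions made for illustration, and the paper may average mIoU differently (for example over frames or classes).

```python
import math


def rmse(pred, truth):
    """Root-mean-square error between two equal-length sequences."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("inputs must be non-empty and equal in length")
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred))


def psnr(pred, truth, max_value=255.0):
    """Peak signal-to-noise ratio in dB for flattened pixel intensities.

    max_value is the assumed peak intensity (255 for 8-bit images).
    """
    mse = rmse(pred, truth) ** 2
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def iou(mask_a, mask_b):
    """Intersection over union of two flattened binary masks."""
    inter = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    union = sum(1 for a, b in zip(mask_a, mask_b) if a or b)
    return inter / union if union else 1.0  # two empty masks agree fully


def mean_iou(mask_pairs):
    """Average IoU over (predicted, reference) mask pairs."""
    return sum(iou(a, b) for a, b in mask_pairs) / len(mask_pairs)
```

Higher PSNR and mIoU indicate closer agreement with the reference, while lower RMSE indicates smaller geometric error.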

Beyond the Manual Touch: Situational-aware Force Control for Increased Safety in Robot-assisted Skullbase Surgery

Jan 22, 2024

Abstract:Purpose - Skullbase surgery demands exceptional precision when removing bone in the lateral skull base. Robotic assistance can alleviate the effect of human sensory-motor limitations. However, the stiffness and inertia of the robot can significantly impact the surgeon's perception and control of the tool-to-tissue interaction forces. Methods - We present a situational-aware force control technique aimed at regulating interaction forces during robot-assisted skullbase drilling. The contextual interaction information derived from the digital twin environment is used to enhance sensory perception and suppress undesired high forces. Results - To validate our approach, we conducted initial feasibility experiments involving one medical student and two engineering students. The experiment focused on further drilling around critical structures following cortical mastoidectomy. The experiment results demonstrate that robotic assistance coupled with our proposed control scheme effectively limited undesired interaction forces when compared to robotic assistance without the proposed force control. Conclusions - The proposed force control techniques show promise in significantly reducing undesired interaction forces during robot-assisted skullbase surgery. These findings contribute to the ongoing efforts to enhance surgical precision and safety in complex procedures involving the lateral skull base.

A force-sensing surgical drill for real-time force feedback in robotic mastoidectomy

Apr 05, 2023

Abstract:Purpose: Robotic assistance in otologic surgery can reduce the task load of operating surgeons during the removal of bone around the critical structures in the lateral skull base. However, safe deployment into the anatomical passageways necessitates the development of advanced sensing capabilities to actively limit the interaction forces between the surgical tools and critical anatomy. Methods: We introduce a surgical drill equipped with a force sensor that is capable of measuring accurate tool-tissue interaction forces to enable force control and feedback to surgeons. The design, calibration and validation of the force-sensing surgical drill mounted on a cooperatively controlled surgical robot are described in this work. Results: The force measurements on the tip of the surgical drill are validated with raw-egg drilling experiments, where a force sensor mounted below the egg serves as ground truth. The average root mean square error (RMSE) for points and path drilling experiments are 41.7 (± 12.2) mN and 48.3 (± 13.7) mN respectively. Conclusions: The force-sensing prototype measures forces with sub-millinewton resolution and the results demonstrate that the calibrated force-sensing drill generates accurate force measurements with minimal error compared to the measured drill forces. The development of such sensing capabilities is crucial for the safe use of robotic systems in a clinical context.

Modeling Human Response to Robot Errors for Timely Error Detection

Aug 01, 2022

Abstract:In human-robot collaboration, robot errors are inevitable -- damaging user trust, willingness to work together, and task performance. Prior work has shown that people naturally respond to robot errors socially and that in social interactions it is possible to use human responses to detect errors. However, there is little exploration in the domain of non-social, physical human-robot collaboration such as assembly and tool retrieval. In this work, we investigate how people's organic, social responses to robot errors may be used to enable timely automatic detection of errors in physical human-robot interactions. We conducted a data collection study to obtain facial responses to train a real-time detection algorithm and a case study to explore the generalizability of our method with different task settings and errors. Our results show that natural social responses are effective signals for timely detection and localization of robot errors even in non-social contexts and that our method is robust across a variety of task contexts, robot errors, and user responses. This work contributes to robust error detection without detailed task specifications.

Surgical Data Science -- from Concepts to Clinical Translation

Oct 30, 2020

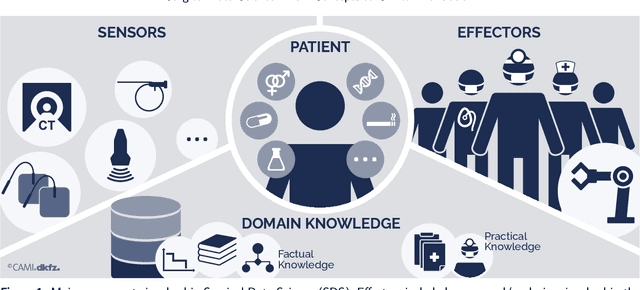

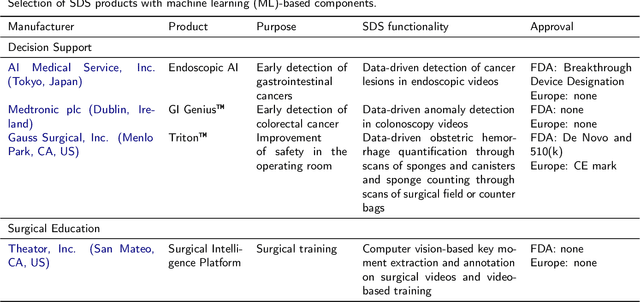

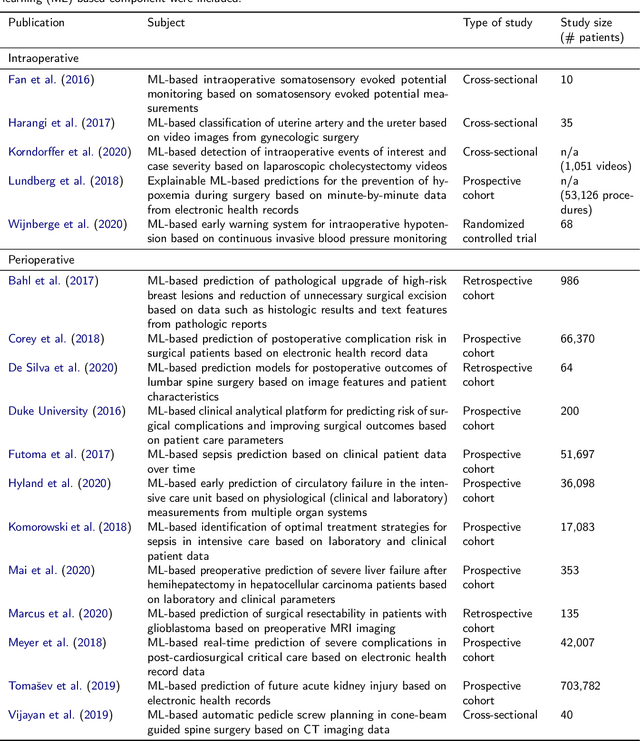

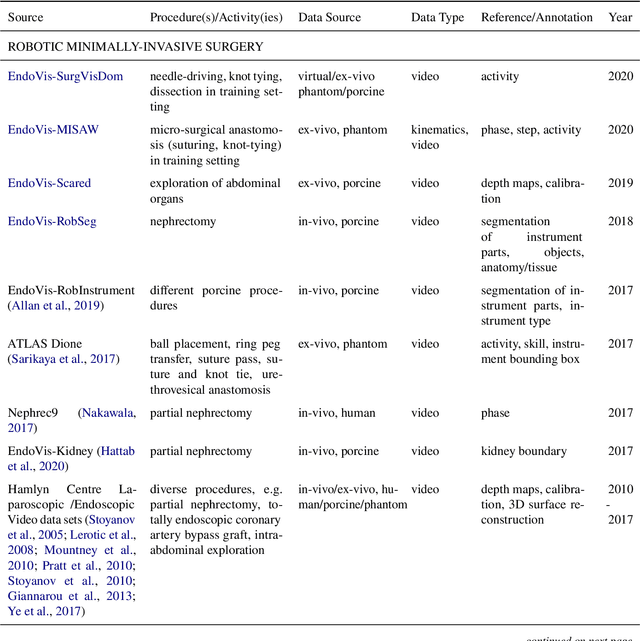

Abstract:Recent developments in data science in general and machine learning in particular have transformed the way experts envision the future of surgery. Surgical data science is a new research field that aims to improve the quality of interventional healthcare through the capture, organization, analysis and modeling of data. While an increasing number of data-driven approaches and clinical applications have been studied in the fields of radiological and clinical data science, translational success stories are still lacking in surgery. In this publication, we shed light on the underlying reasons and provide a roadmap for future advances in the field. Based on an international workshop involving leading researchers in the field of surgical data science, we review current practice, key achievements and initiatives as well as available standards and tools for a number of topics relevant to the field, namely (1) technical infrastructure for data acquisition, storage and access in the presence of regulatory constraints, (2) data annotation and sharing and (3) data analytics. Drawing from this extensive review, we present current challenges for technology development and (4) describe a roadmap for faster clinical translation and exploitation of the full potential of surgical data science.

The Role of Robotics in Infectious Disease Crises

Oct 19, 2020

Abstract:The recent coronavirus pandemic has highlighted the many challenges faced by the healthcare, public safety, and economic systems when confronted with a surge in patients that require intensive treatment and a population that must be quarantined or shelter in place. The most obvious and pressing challenge is taking care of acutely ill patients while managing spread of infection within the care facility, but this is just the tip of the iceberg if we consider what could be done to prepare in advance for future pandemics. Beyond the obvious need for strengthening medical knowledge and preparedness, there is a complementary need to anticipate and address the engineering challenges associated with infectious disease emergencies. Robotic technologies are inherently programmable, and robotic systems have been adapted and deployed, to some extent, in the current crisis for such purposes as transport, logistics, and disinfection. As technical capabilities advance and as the installed base of robotic systems increases in the future, they could play a much more significant role in future crises. This report is the outcome of a virtual workshop co-hosted by the National Academy of Engineering (NAE) and the Computing Community Consortium (CCC) held on July 9-10, 2020. The workshop consisted of over forty participants including representatives from the engineering/robotics community, clinicians, critical care workers, public health and safety experts, and emergency responders. It identifies key challenges faced by healthcare responders and the general population and then identifies robotic/technological responses to these challenges. Then it identifies the key research/knowledge barriers that need to be addressed in developing effective, scalable solutions. Finally, the report ends with the following recommendations on how to implement this strategy.

A Learning-based Method for Online Adjustment of C-arm Cone-Beam CT Source Trajectories for Artifact Avoidance

Aug 14, 2020

Abstract:During spinal fusion surgery, screws are placed close to critical nerves suggesting the need for highly accurate screw placement. Verifying screw placement on high-quality tomographic imaging is essential. C-arm Cone-beam CT (CBCT) provides intraoperative 3D tomographic imaging which would allow for immediate verification and, if needed, revision. However, the reconstruction quality attainable with commercial CBCT devices is insufficient, predominantly due to severe metal artifacts in the presence of pedicle screws. These artifacts arise from a mismatch between the true physics of image formation and an idealized model thereof assumed during reconstruction. Prospectively acquiring views onto anatomy that are least affected by this mismatch can, therefore, improve reconstruction quality. We propose to adjust the C-arm CBCT source trajectory during the scan to optimize reconstruction quality with respect to a certain task, i.e. verification of screw placement. Adjustments are performed on-the-fly using a convolutional neural network that regresses a quality index for possible next views given the current x-ray image. Adjusting the CBCT trajectory to acquire the recommended views results in non-circular source orbits that avoid poor images, and thus, data inconsistencies. We demonstrate that convolutional neural networks trained on realistically simulated data are capable of predicting quality metrics that enable scene-specific adjustments of the CBCT source trajectory. Using both realistically simulated data and real CBCT acquisitions of a semi-anthropomorphic phantom, we show that tomographic reconstructions of the resulting scene-specific CBCT acquisitions exhibit improved image quality particularly in terms of metal artifacts. Since the optimization objective is implicitly encoded in a neural network, the proposed approach overcomes the need for 3D information at run-time.
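
The on-the-fly adjustment described in this abstract can be read as a greedy next-view selection loop: score each possible next view, move to the best one, and repeat. The sketch below illustrates only that loop and is not the authors' implementation. In the paper a convolutional neural network regresses the quality index from the current x-ray image; here `toy_quality` is a hypothetical stand-in that scores view geometry directly, and the `(rotation, tilt)` view encoding, step sizes, and all function names are assumptions.

```python
def select_trajectory(start_view, candidates_for, predict_quality, num_views):
    """Greedily build a source trajectory, one view at a time.

    candidates_for(view)         -> list of reachable next views
    predict_quality(view, cand)  -> predicted quality index (higher is better)
    """
    trajectory = [start_view]
    current = start_view
    while len(trajectory) < num_views:
        candidates = candidates_for(current)
        if not candidates:
            break
        # Pick the candidate with the highest predicted quality index.
        current = max(candidates, key=lambda view: predict_quality(current, view))
        trajectory.append(current)
    return trajectory


def toy_candidates(view, step_deg=10, tilts_deg=(-10, 0, 10)):
    """Hypothetical candidate set: advance the rotation, try a few tilts."""
    rotation, _ = view
    return [(rotation + step_deg, tilt) for tilt in tilts_deg]


def toy_quality(current, candidate):
    """Hypothetical stand-in for the learned quality regressor.

    Pretends that in-plane views (tilt 0) suffer most from metal artifacts
    and mildly penalizes large tilt changes between consecutive views.
    """
    return abs(candidate[1]) - 0.1 * abs(candidate[1] - current[1])


trajectory = select_trajectory((0, 0), toy_candidates, toy_quality, num_views=5)
```

With these toy scores the selected views leave the tilt-0 plane after the first step, which mirrors the non-circular orbits mentioned in the abstract. The actual behavior depends entirely on the trained network and the scene.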
