Anand Malpani

Surgical Data Science -- from Concepts to Clinical Translation

Oct 30, 2020

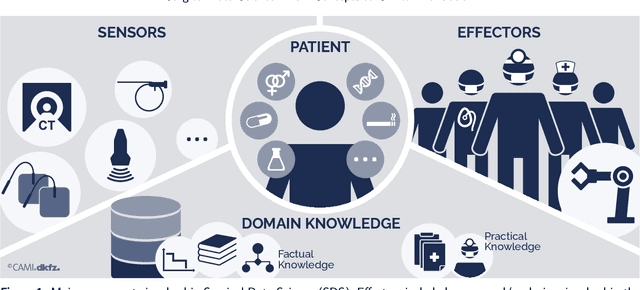

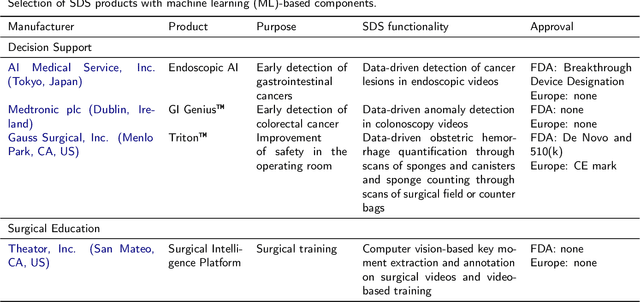

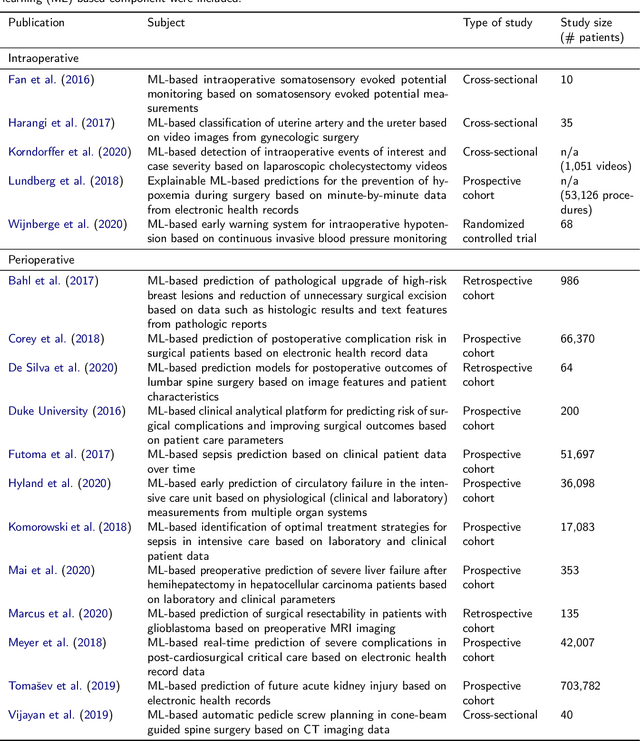

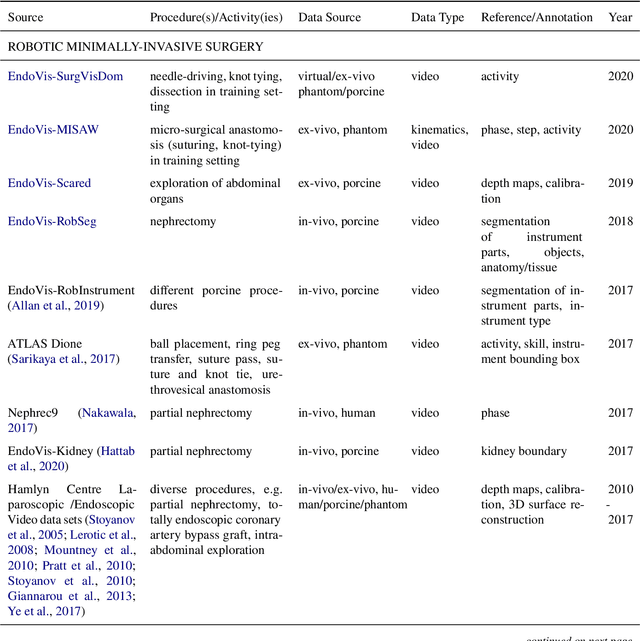

Abstract: Recent developments in data science in general and machine learning in particular have transformed the way experts envision the future of surgery. Surgical data science is a new research field that aims to improve the quality of interventional healthcare through the capture, organization, analysis and modeling of data. While an increasing number of data-driven approaches and clinical applications have been studied in the fields of radiological and clinical data science, translational success stories are still lacking in surgery. In this publication, we shed light on the underlying reasons and provide a roadmap for future advances in the field. Based on an international workshop involving leading researchers in the field of surgical data science, we review current practice, key achievements and initiatives as well as available standards and tools for a number of topics relevant to the field, namely (1) technical infrastructure for data acquisition, storage and access in the presence of regulatory constraints, (2) data annotation and sharing and (3) data analytics. Drawing from this extensive review, we present current challenges for technology development and (4) describe a roadmap for faster clinical translation and exploitation of the full potential of surgical data science.

Real-time Teaching Cues for Automated Surgical Coaching

Apr 24, 2017

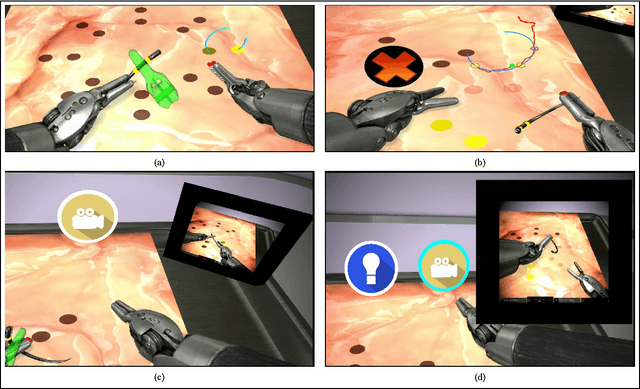

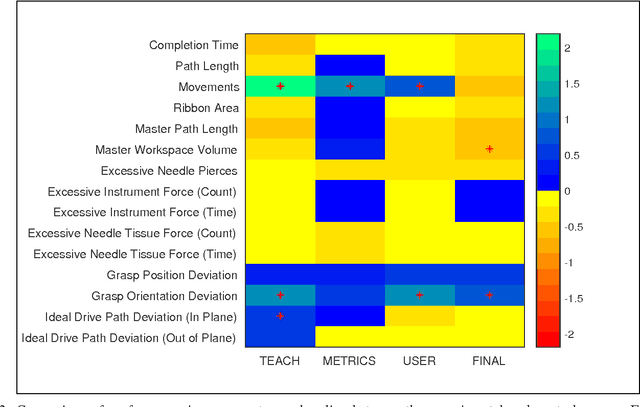

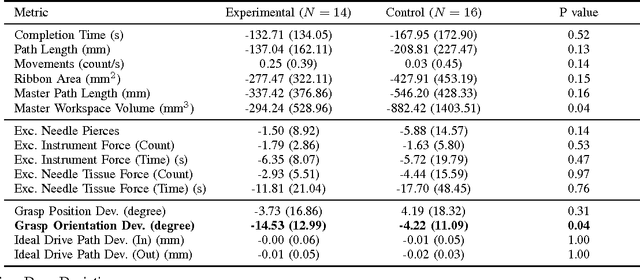

Abstract: With the introduction of new technologies in the operating room like the da Vinci Surgical System, training surgeons to use them effectively and efficiently is crucial in the delivery of better patient care. Coaching by an expert surgeon is effective in teaching relevant technical skills, but current methods to deliver effective coaching are limited and not scalable. We present a virtual reality simulation-based framework for automated virtual coaching in surgical education. We implement our framework within the da Vinci Skills Simulator. We provide three coaching modes ranging from a hands-on teacher (continuous guidance) to a hands-off guide (assistance upon request). We present six teaching cues targeted at critical learning elements of a needle passing task, which are shown to the user based on the coaching mode. These cues are graphical overlays which guide the user, inform them about sub-par performance, and show relevant video demonstrations. We evaluated our framework in a pilot randomized controlled trial with 16 subjects in each arm. In a post-study questionnaire, participants reported high comprehension of feedback, and perceived improvement in performance. After three practice repetitions of the task, the control arm (independent learning) showed better motion efficiency whereas the experimental arm (received real-time coaching) had better performance of learning elements (as per the ACS Resident Skills Curriculum). We observed statistically higher improvement in the experimental group based on one of the metrics (related to needle grasp orientation). In conclusion, we developed an automated coach that provides real-time cues for surgical training and demonstrated its feasibility.

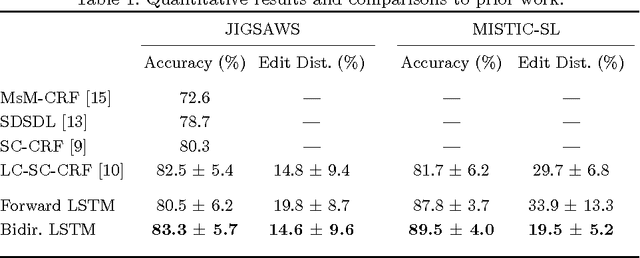

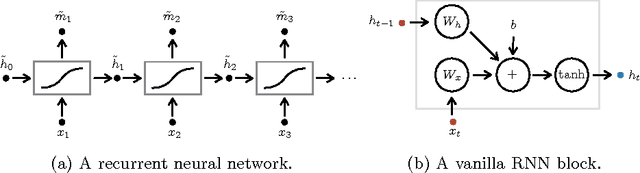

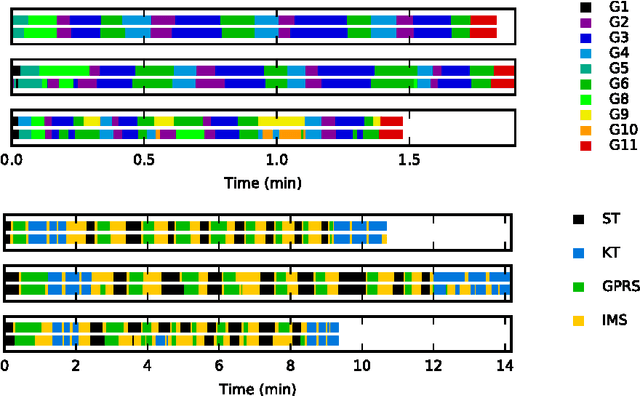

Recognizing Surgical Activities with Recurrent Neural Networks

Jun 22, 2016

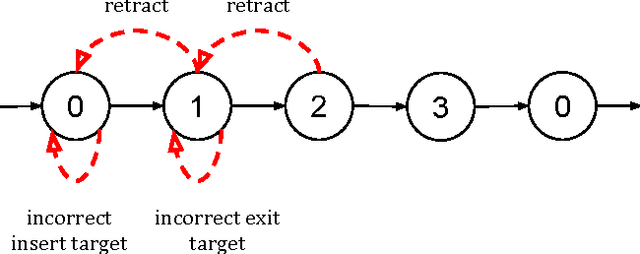

Abstract: We apply recurrent neural networks to the task of recognizing surgical activities from robot kinematics. Prior work in this area focuses on recognizing short, low-level activities, or gestures, and has been based on variants of hidden Markov models and conditional random fields. In contrast, we work on recognizing both gestures and longer, higher-level activities, or maneuvers, and we model the mapping from kinematics to gestures/maneuvers with recurrent neural networks. To our knowledge, we are the first to apply recurrent neural networks to this task. Using a single model and a single set of hyperparameters, we match state-of-the-art performance for gesture recognition and advance state-of-the-art performance for maneuver recognition, in terms of both accuracy and edit distance. Code is available at https://github.com/rdipietro/miccai-2016-surgical-activity-rec .
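The abstract above reports results in terms of accuracy and edit distance. As a rough illustration of what these two metrics measure on per-frame activity labels, here is a minimal sketch. It assumes frame-wise accuracy and an unnormalized Levenshtein distance over collapsed segment labels; the paper's exact definitions (for example, any normalization) are not given in the abstract, and the function names and the `G1`/`G2`/`G3` labels are hypothetical.

```python
from itertools import groupby


def frame_accuracy(predicted, reference):
    """Fraction of frames whose predicted label matches the reference."""
    if len(predicted) != len(reference):
        raise ValueError("sequences must have the same length")
    if not reference:
        raise ValueError("sequences must be non-empty")
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


def collapse_segments(labels):
    """Collapse runs of identical frame labels into one label per segment."""
    return [label for label, _ in groupby(labels)]


def edit_distance(a, b):
    """Levenshtein distance between two label sequences."""
    previous = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        current = [i]
        for j, y in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,             # delete x
                current[j - 1] + 1,          # insert y
                previous[j - 1] + (x != y),  # substitute, or match at no cost
            ))
        previous = current
    return previous[-1]


def segment_edit_distance(predicted, reference):
    """Edit distance between segment-level label sequences (assumed form)."""
    return edit_distance(collapse_segments(predicted),
                         collapse_segments(reference))


# Hypothetical per-frame labels, for illustration only.
reference = ["G1", "G1", "G1", "G2", "G2", "G3", "G3"]
predicted = ["G1", "G1", "G2", "G2", "G2", "G1", "G3"]

print(frame_accuracy(predicted, reference))         # 5 of 7 frames match
print(segment_edit_distance(predicted, reference))  # 1
```

In this toy example, accuracy penalizes the two mislabeled frames, while the edit distance counts only the one spurious `G1` segment, so the two metrics capture different kinds of error (frame-level mistakes versus errors in the ordering of activities).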
