Hisashi Ishida

A Digital Twin for Telesurgery under Intermittent Communication

Nov 20, 2024

Abstract:Telesurgery is an effective way to deliver service from expert surgeons to areas without immediate access to specialized resources. However, many of these areas, such as rural districts or battlefields, might be subject to communication problems, especially latency and intermittent periods of communication outage. This challenge motivates the use of a digital twin for the surgical system, where a simulation would mirror the robot hardware and surgical environment in the real world. The surgeon would then be able to interact with the digital twin during a communication outage, followed by a recovery strategy on the real robot upon reestablishing communication. This paper builds the digital twin for the da Vinci surgical robot, with a buffering and replay strategy that reduces the mean task completion time by 23% when compared to the baseline, for a peg transfer task subject to intermittent communication outage.
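The abstract does not spell out how the buffering and replay strategy works, so the following is only a minimal sketch of the general idea, under stated assumptions: commands issued while the link is down are queued in order and sent to the real robot once communication returns. The class `BufferedTeleop`, the position-only `Pose` type, and the `send_to_robot` callback are all hypothetical names chosen for illustration, not the paper's implementation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Tuple

# Hypothetical setpoint type: tool position (x, y, z) in metres.
Pose = Tuple[float, float, float]


@dataclass
class TimedCommand:
    t: float    # time the surgeon issued the command, in seconds
    pose: Pose  # commanded tool position


class BufferedTeleop:
    """Forward commands while the link is up; buffer them during an outage."""

    def __init__(self, send_to_robot: Callable[[Pose], None]) -> None:
        self.send_to_robot = send_to_robot
        self.buffer: Deque[TimedCommand] = deque()
        self.link_up = True

    def command(self, t: float, pose: Pose) -> None:
        if self.link_up:
            self.send_to_robot(pose)
        else:
            # Assumption: the digital twin executes the command locally;
            # this sketch only records it for later replay.
            self.buffer.append(TimedCommand(t, pose))

    def set_link(self, up: bool) -> int:
        """Update the link state; on recovery, replay buffered commands
        in the order they were issued. Returns the number replayed."""
        self.link_up = up
        replayed = 0
        if up:
            while self.buffer:
                self.send_to_robot(self.buffer.popleft().pose)
                replayed += 1
        return replayed


# Usage: one command goes through live, two are buffered, then replayed.
received: List[Pose] = []
teleop = BufferedTeleop(received.append)
teleop.command(0.0, (0.00, 0.00, 0.10))
teleop.set_link(False)
teleop.command(0.1, (0.01, 0.00, 0.10))
teleop.command(0.2, (0.02, 0.00, 0.10))
replayed_count = teleop.set_link(True)
```

A real recovery strategy would also have to reconcile the twin's state with the physical scene before replaying; this sketch ignores that step.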

Improving the realism of robotic surgery simulation through injection of learning-based estimated errors

Jun 11, 2024

Abstract:The development of algorithms for automation of subtasks during robotic surgery can be accelerated by the availability of realistic simulation environments. In this work, we focus on one aspect of the realism of a surgical simulator, which is the positional accuracy of the robot. In current simulators, robots have perfect or near-perfect accuracy, which is not representative of their physical counterparts. We therefore propose a pair of neural networks, trained on data collected from a physical robot, to estimate both the controller error and the kinematic and non-kinematic error. These error estimates are then injected within the simulator to produce a simulated robot that has the characteristic performance of the physical robot. In this scenario, we believe it is sufficient for the estimated error used in the simulation to have a statistically similar distribution to the actual error of the physical robot. This is less stringent, and therefore more tenable, than the requirement for error compensation of a physical robot, where the estimated error should equal the actual error. Our results demonstrate that error injection reduces the mean position and orientation differences between the simulated and physical robots from 5.0 mm / 3.6 deg to 1.3 mm / 1.7 deg, respectively, which represents reductions by factors of 3.8 and 2.1.

* 6 page paper
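The error-injection idea in the abstract above can be illustrated with a small sketch. It is not the authors' method: the paper uses a pair of trained neural networks, whereas this example substitutes a zero-mean Gaussian sampler as a stand-in error model. The function names and the 1.0 mm standard deviation are assumptions made purely for illustration.

```python
import math
import random
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]  # position in millimetres


def make_gaussian_error_model(sigma_mm: float, seed: int) -> Callable[[Vec3], Vec3]:
    """Stand-in for the learned estimators (an assumption, not the paper's
    networks): returns zero-mean Gaussian position error in mm and ignores
    the commanded position."""
    rng = random.Random(seed)

    def model(commanded: Vec3) -> Vec3:
        return (
            rng.gauss(0.0, sigma_mm),
            rng.gauss(0.0, sigma_mm),
            rng.gauss(0.0, sigma_mm),
        )

    return model


def inject_error(ideal: Vec3, error_model: Callable[[Vec3], Vec3]) -> Vec3:
    """Add the estimated error to the simulator's ideal position."""
    e = error_model(ideal)
    return (ideal[0] + e[0], ideal[1] + e[1], ideal[2] + e[2])


# Usage: the simulated robot no longer lands exactly on the ideal position.
ideal_position: Vec3 = (100.0, 50.0, -20.0)
model = make_gaussian_error_model(sigma_mm=1.0, seed=0)
simulated_position = inject_error(ideal_position, model)
offset_mm = math.dist(ideal_position, simulated_position)
```

Because the goal stated in the abstract is a statistically similar error distribution rather than an exact per-sample match, a sampler of this shape is enough to show where the estimate enters the simulation loop.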

Haptic-Assisted Collaborative Robot Framework for Improved Situational Awareness in Skull Base Surgery

Jan 22, 2024

Abstract:Skull base surgery is a demanding field in which surgeons operate in and around the skull while avoiding critical anatomical structures including nerves and vasculature. While image-guided surgical navigation is the prevailing standard, limitations still exist: it requires personalized planning and depends on the irreplaceable role of a skilled surgeon. This paper presents a collaboratively controlled robotic system tailored for assisted drilling in skull base surgery. Our central hypothesis posits that this collaborative system, enriched with haptic assistive modes to enforce virtual fixtures, holds the potential to significantly enhance surgical safety, streamline efficiency, and alleviate the physical demands on the surgeon. The paper describes the intricate system development work required to enable these virtual fixtures through haptic assistive modes. To validate our system's performance and effectiveness, we conducted initial feasibility experiments involving a medical student and two experienced surgeons. The experiment focused on drilling around critical structures following cortical mastoidectomy, utilizing dental stone phantom and cadaveric models. Our experimental results demonstrate that our proposed haptic feedback mechanism enhances the safety of drilling around critical structures compared to systems lacking haptic assistance. With the aid of our system, surgeons were able to safely skeletonize the critical structures without breaching any critical structure even under obstructed view of the surgical site.

Integrating 3D Slicer with a Dynamic Simulator for Situational Aware Robotic Interventions

Jan 22, 2024

Abstract:Image-guided robotic interventions represent a transformative frontier in surgery, blending advanced imaging and robotics for improved precision and outcomes. This paper addresses the critical need for integrating open-source platforms to enhance situational awareness in image-guided robotic research. We present an open-source toolset that seamlessly combines a physics-based constraint formulation framework, AMBF, with a state-of-the-art imaging platform application, 3D Slicer. Our toolset facilitates the creation of highly customizable interactive digital twins that incorporate processing and visualization of medical imaging, robot kinematics, and scene dynamics for real-time robot control. Through a feasibility study, we showcase real-time synchronization of a physical robotic interventional environment in both 3D Slicer and AMBF, highlighting low-latency updates and improved visualization.

Beyond the Manual Touch: Situational-aware Force Control for Increased Safety in Robot-assisted Skullbase Surgery

Jan 22, 2024

Abstract:Purpose - Skullbase surgery demands exceptional precision when removing bone in the lateral skull base. Robotic assistance can alleviate the effect of human sensory-motor limitations. However, the stiffness and inertia of the robot can significantly impact the surgeon's perception and control of the tool-to-tissue interaction forces. Methods - We present a situational-aware force control technique aimed at regulating interaction forces during robot-assisted skullbase drilling. The contextual interaction information derived from the digital twin environment is used to enhance sensory perception and suppress undesired high forces. Results - To validate our approach, we conducted initial feasibility experiments involving a medical student and two engineering students. The experiment focused on further drilling around critical structures following cortical mastoidectomy. The experiment results demonstrate that robotic assistance coupled with our proposed control scheme effectively limited undesired interaction forces when compared to robotic assistance without the proposed force control. Conclusions - The proposed force control techniques show promise in significantly reducing undesired interaction forces during robot-assisted skullbase surgery. These findings contribute to the ongoing efforts to enhance surgical precision and safety in complex procedures involving the lateral skull base.

Improving Surgical Situational Awareness with Signed Distance Field: A Pilot Study in Virtual Reality

Mar 03, 2023

Abstract:The introduction of image-guided surgical navigation (IGSN) has greatly benefited technically demanding surgical procedures by providing real-time support and guidance to the surgeon during surgery. To develop effective IGSN, a careful selection of the information provided to the surgeon is needed. However, identifying optimal feedback modalities is challenging due to the broad array of available options. To address this problem, we have developed an open-source library that facilitates the development of multimodal navigation systems in a wide range of surgical procedures relying on medical imaging data. To provide guidance, our system calculates the minimum distance between the surgical instrument and the anatomy and then presents this information to the user through different mechanisms. The real-time performance of our approach is achieved by calculating Signed Distance Fields at initialization from segmented anatomical volumes. Using this framework, we developed a multimodal surgical navigation system to help surgeons navigate anatomical variability in a skull-base surgery simulation environment. Three different feedback modalities were explored: visual, auditory, and haptic. To evaluate the proposed system, a pilot user study was conducted in which four clinicians performed mastoidectomy procedures with and without guidance. Each condition was assessed using objective performance and subjective workload metrics. This pilot user study showed improvements in procedural safety without additional time or workload. These results demonstrate our pipeline's successful use case in the context of mastoidectomy.
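To make the Signed Distance Field idea concrete, here is a minimal sketch, assuming a tiny 2D binary segmentation mask rather than a real 3D anatomical volume. It precomputes the field once by brute force, after which each distance query is a constant-time lookup. The function name, the grid, and the sign convention (negative inside the anatomy, positive outside, in voxel units) are assumptions for illustration and are not taken from the authors' library.

```python
import math
from typing import List

Grid = List[List[int]]  # 1 = anatomy voxel, 0 = free space


def signed_distance_field(mask: Grid) -> List[List[float]]:
    """Brute-force SDF in voxel units: positive outside the anatomy,
    negative inside. Assumes the mask contains both labels."""
    rows, cols = len(mask), len(mask[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    inside = [p for p in cells if mask[p[0]][p[1]] == 1]
    outside = [p for p in cells if mask[p[0]][p[1]] == 0]
    sdf = [[0.0] * cols for _ in range(rows)]
    for r, c in cells:
        if mask[r][c] == 1:
            # Inside: negative distance to the nearest free-space voxel.
            sdf[r][c] = -min(math.dist((r, c), q) for q in outside)
        else:
            # Outside: distance to the nearest anatomy voxel.
            sdf[r][c] = min(math.dist((r, c), q) for q in inside)
    return sdf


# Usage: a 5x5 mask with a single anatomy voxel at the centre.
mask: Grid = [[0] * 5 for _ in range(5)]
mask[2][2] = 1
sdf = signed_distance_field(mask)  # computed once, at initialization

# At run time, the tool-to-anatomy distance is a plain lookup.
tool_voxel = (2, 4)
distance_to_anatomy = sdf[tool_voxel[0]][tool_voxel[1]]
```

The brute-force construction is quadratic in the number of voxels and is only practical for toy grids; the point of the sketch is the split between a costly one-time precomputation and cheap per-frame queries.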

Virtual Fixture Assistance for Suturing in Robot-Aided Pediatric Endoscopic Surgery

Sep 09, 2019

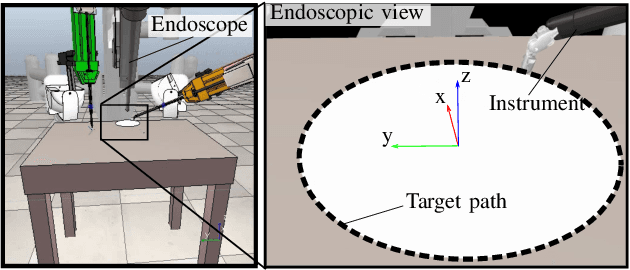

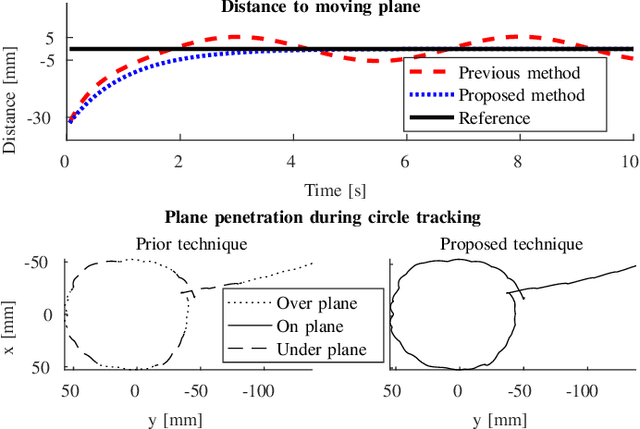

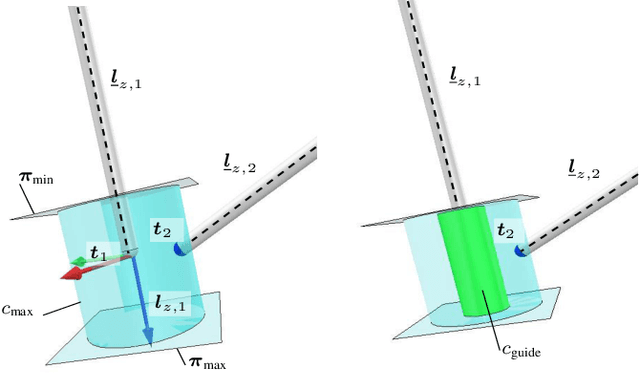

Abstract:The limited workspace in pediatric endoscopic surgery makes surgical suturing one of the most difficult tasks. During suturing, surgeons have to prevent collisions between tools and also collisions with the surrounding tissues. Surgical robots have been shown to be effective in adult laparoscopy, but assistance for suturing in constrained workspaces has not yet been fully explored. In this letter, we propose guidance virtual fixtures to enhance the performance and the safety of suturing while generating the required task constraints using constrained optimization and Cartesian force feedback. We propose two guidance methods, looping virtual fixtures and a trajectory guidance cylinder, both based on dynamic geometric elements. In simulations and experiments with a physical robot, we show that the proposed methods achieve more precise and safer looping in robot-assisted pediatric endoscopy.
