Mamoru Mitsuishi

PEg TRAnsfer Workflow recognition challenge report: Does multi-modal data improve recognition?

Feb 11, 2022

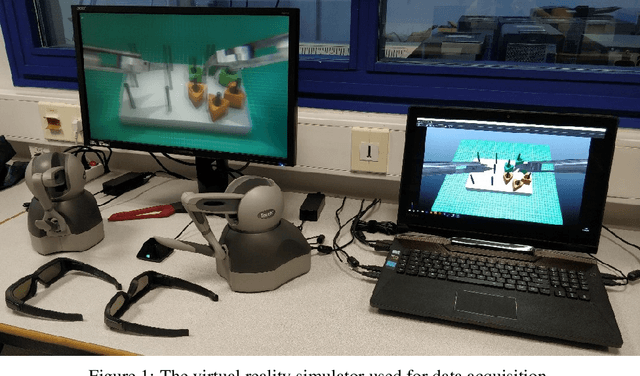

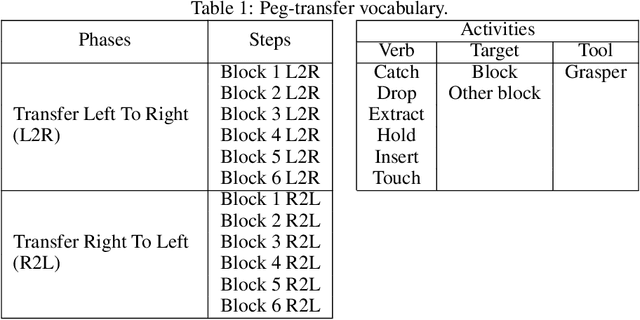

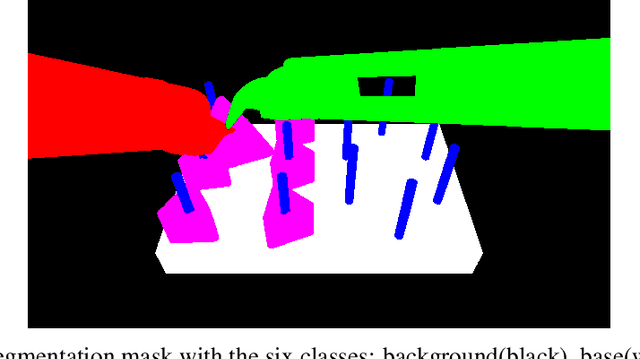

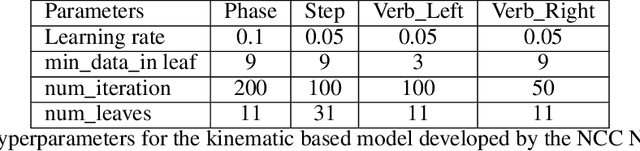

Abstract: This paper presents the design and results of the "PEg TRAnsfer Workflow recognition" (PETRAW) challenge, whose objective was to develop surgical workflow recognition methods based on one or several modalities, among video, kinematic, and segmentation data, in order to study their added value. The PETRAW challenge provided a data set of 150 peg transfer sequences performed on a virtual simulator. This data set was composed of videos, kinematics, semantic segmentation, and workflow annotations, which described the sequences at three different granularity levels: phase, step, and activity. Five tasks were proposed to the participants: three of them were related to the recognition of all granularities with one of the available modalities, while the others addressed the recognition with a combination of modalities. Average application-dependent balanced accuracy (AD-Accuracy) was used as the evaluation metric to take unbalanced classes into account and because it is more clinically relevant than a frame-by-frame score. Seven teams participated in at least one task and four of them in all tasks. The best results were obtained by combining the video and kinematic data, with an AD-Accuracy between 90% and 93% for the four teams who participated in all tasks. The improvement of the video/kinematic-based methods over the unimodal ones was significant for all of the teams. However, the difference in testing execution time between the video/kinematic-based and the kinematic-based methods has to be taken into consideration. Is it relevant to spend 20 to 200 times more computing time for less than 3% of improvement? The PETRAW data set is publicly available at www.synapse.org/PETRAW to encourage further research in surgical workflow recognition.
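To illustrate why a balanced score is preferred over a frame-by-frame score when classes are unbalanced, the following is a minimal sketch of plain balanced accuracy (the mean of per-class recall). It is not the challenge's AD-Accuracy implementation: the application-dependent part of that metric is not described in this abstract and is not reproduced here. The function name `balanced_accuracy` and the labels `"idle"` and `"transfer"` are hypothetical.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    if not y_true:
        raise ValueError("label sequences must not be empty")
    total = defaultdict(int)    # frames per ground-truth class
    correct = defaultdict(int)  # correctly predicted frames per class
    for truth_label, predicted_label in zip(y_true, y_pred):
        total[truth_label] += 1
        if truth_label == predicted_label:
            correct[truth_label] += 1
    recalls = [correct[label] / total[label] for label in total]
    return sum(recalls) / len(recalls)


# Hypothetical sequence: 8 frames of a frequent class, 2 of a rare one.
truth = ["idle"] * 8 + ["transfer"] * 2
# A degenerate predictor that always outputs the frequent class.
pred = ["idle"] * 10

frame_accuracy = sum(t == p for t, p in zip(truth, pred)) / len(truth)
print(frame_accuracy)                  # 0.8
print(balanced_accuracy(truth, pred))  # 0.5
```

The degenerate predictor looks acceptable frame by frame but scores no better than chance once every class is weighted equally.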

Autonomous Coordinated Control of the Light Guide for Positioning in Vitreoretinal Surgery

Jul 26, 2021

Abstract: Vitreoretinal surgery is challenging even for expert surgeons owing to the delicate target tissues and the diminutive 7-mm-diameter workspace in the retina. In addition to improved dexterity and accuracy, robot assistance allows for (partial) task automation. In this work, we propose a strategy to automate the motion of the light guide with respect to the surgical instrument. This automation allows the instrument's shadow to always be inside the microscopic view, which is an important cue for the accurate positioning of the instrument in the retina. We show simulations and experiments demonstrating that the proposed strategy is effective in a 700-point grid in the retina of a surgical phantom.

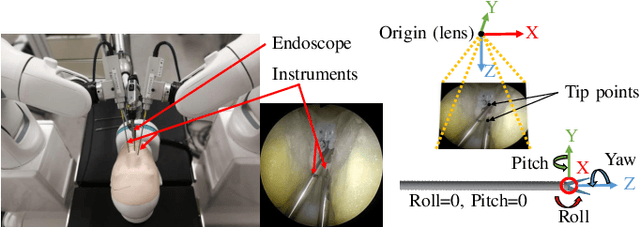

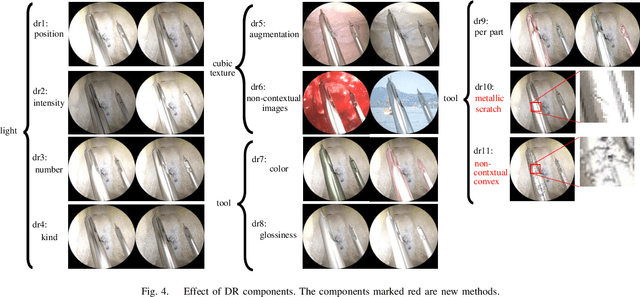

MBAPose: Mask and Bounding-Box Aware Pose Estimation of Surgical Instruments with Photorealistic Domain Randomization

Mar 15, 2021

Abstract: Surgical robots are controlled using a priori models based on robots' geometric parameters, which are calibrated before the surgical procedure. One of the challenges in using robots in real surgical settings is that parameters change over time, consequently deteriorating control accuracy. In this context, our group has been investigating online calibration strategies without added sensors. In one step toward that goal, we have developed an algorithm to estimate the pose of the instruments' shafts in endoscopic images. In this study, we build upon that earlier work and propose a new framework to more precisely estimate the pose of a rigid surgical instrument. Our strategy is based on a novel pose estimation model called MBAPose and the use of synthetic training data. Our experiments demonstrated an improvement of 21% for translation error and 26% for orientation error on synthetic test data with respect to our previous work. Results with real test data provide a baseline for further research.

SmartArm: Suturing Feasibility of a Surgical Robotic System on a Neonatal Chest Model

Jan 04, 2021

Abstract: Commercially available surgical-robot technology currently addresses many surgical scenarios for adult patients. This same technology cannot be used to the benefit of neonate patients given the considerably smaller workspace. Medically relevant procedures regarding neonate patients include minimally invasive surgery to repair congenital esophagus disorders, which entail the suturing of the fragile esophagus within the narrow neonate cavity. In this work, we explore the use of the SmartArm robotic system in a feasibility study using a neonate chest and esophagus model. We show that a medically inexperienced operator can perform two-throw knots inside the neonate chest model using the robotic system.

* Accepted on T-MRB 2021, 4 pages

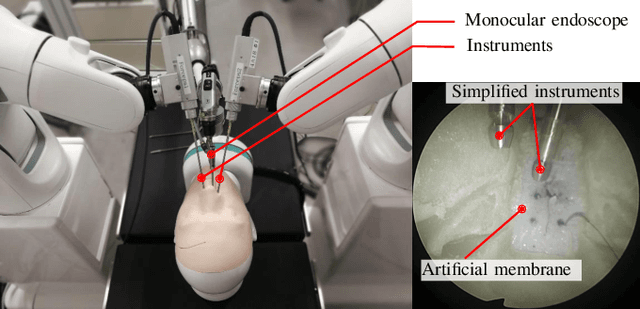

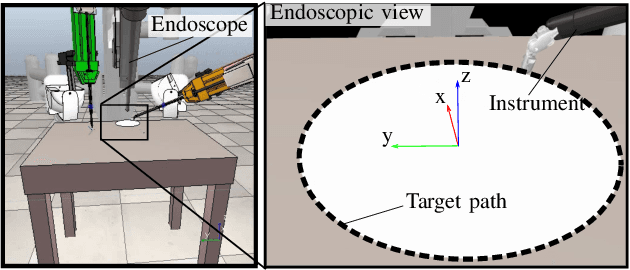

Single-Shot Pose Estimation of Surgical Robot Instruments' Shafts from Monocular Endoscopic Images

Mar 03, 2020

Abstract: Surgical robots are used to perform minimally invasive surgery and alleviate much of the burden imposed on surgeons. Our group has developed a surgical robot to aid in the removal of tumors at the base of the skull via access through the nostrils. To avoid injuring the patients, a collision-avoidance algorithm that depends on having an accurate model for the poses of the instruments' shafts is used. Given that the model's parameters can change over time owing to interactions between instruments and other disturbances, the online estimation of the poses of the instruments' shafts is essential. In this work, we propose a new method to estimate the pose of the surgical instruments' shafts using a monocular endoscope. Our method is based on the use of an automatically annotated training dataset and an improved pose-estimation deep-learning architecture. In preliminary experiments, we show that, by using artificial images, our method can surpass state-of-the-art vision-based markerless pose estimation techniques (providing an error decrease of 55% in position estimation, 64% in pitch, and 69% in yaw).

* Accepted on ICRA 2020, 7 pages

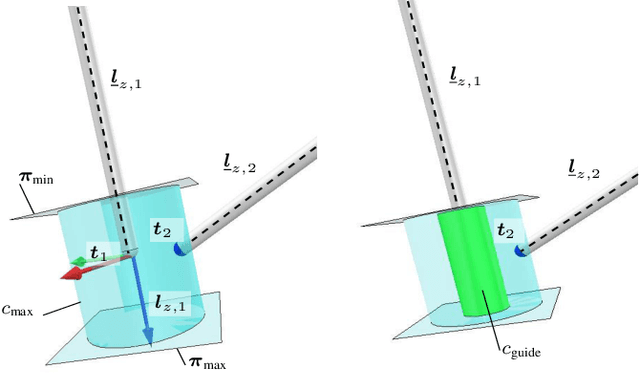

Virtual Fixture Assistance for Suturing in Robot-Aided Pediatric Endoscopic Surgery

Sep 09, 2019

Abstract: The limited workspace in pediatric endoscopic surgery makes surgical suturing one of the most difficult tasks. During suturing, surgeons have to prevent collisions between tools and also collisions with the surrounding tissues. Surgical robots have been shown to be effective in adult laparoscopy, but assistance for suturing in constrained workspaces has not yet been fully explored. In this letter, we propose guidance virtual fixtures to enhance the performance and the safety of suturing while generating the required task constraints using constrained optimization and Cartesian force feedback. We propose two guidance methods, looping virtual fixtures and a trajectory guidance cylinder, both based on dynamic geometric elements. In simulations and experiments with a physical robot, we show that the proposed methods achieve more precise and safer looping in robot-assisted pediatric endoscopy.

A Unified Framework for the Teleoperation of Surgical Robots in Constrained Workspaces

Feb 27, 2019

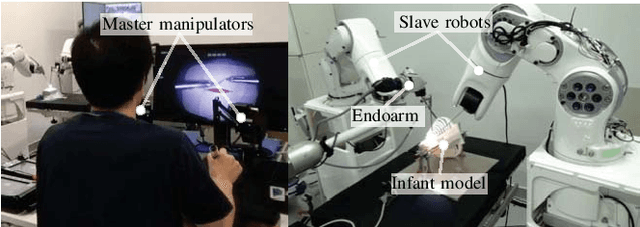

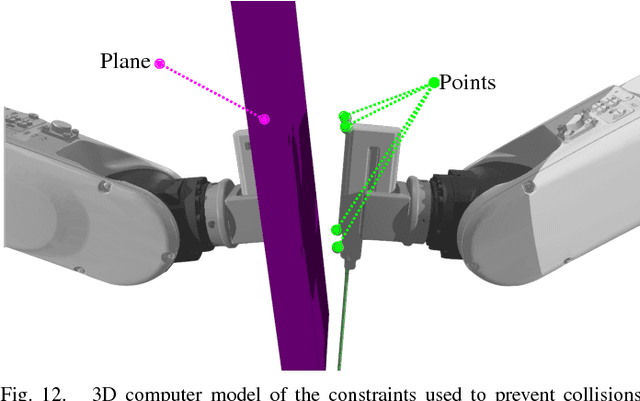

Abstract: In adult laparoscopy, robot-aided surgery is a reality in thousands of operating rooms worldwide, owing to the increased dexterity provided by the robotic tools. Many robots and robot control techniques have been developed to aid in more challenging scenarios, such as pediatric surgery and microsurgery. However, the prevalence of case-specific solutions, particularly those focused on non-redundant robots, reduces the reproducibility of the initial results in more challenging scenarios. In this paper, we propose a general framework for the control of surgical robots in constrained workspaces under teleoperation, regardless of the robot geometry. Our technique is divided into a slave-side constrained optimization algorithm, which provides virtual fixtures, and Cartesian impedance on the master side, which provides force feedback. Experiments with two robotic systems, one redundant and one non-redundant, show that smooth teleoperation can be achieved in adult laparoscopy and infant surgery.

* Accepted on ICRA 2019, 7 pages

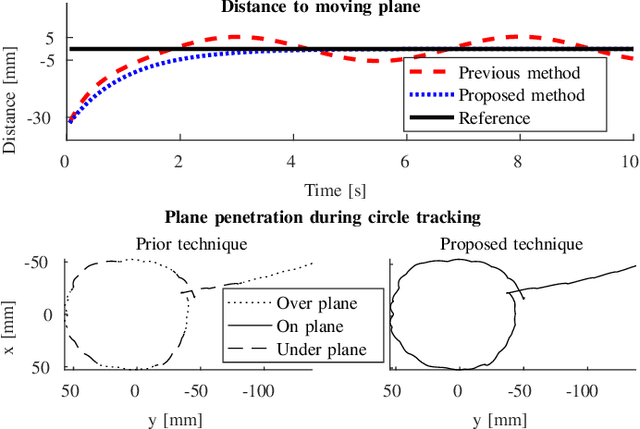

Dynamic Active Constraints for Surgical Robots using Vector Field Inequalities

Nov 21, 2018

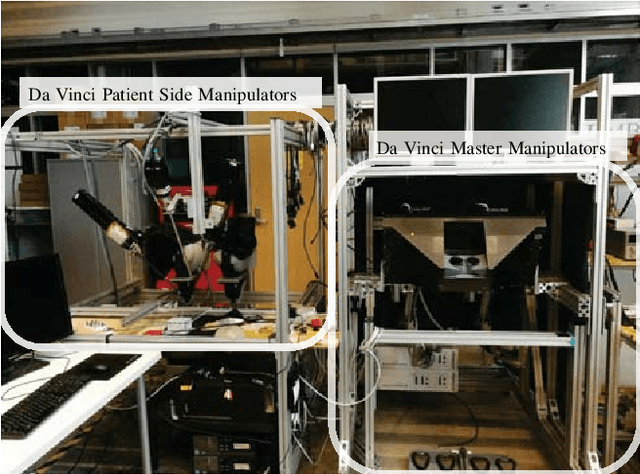

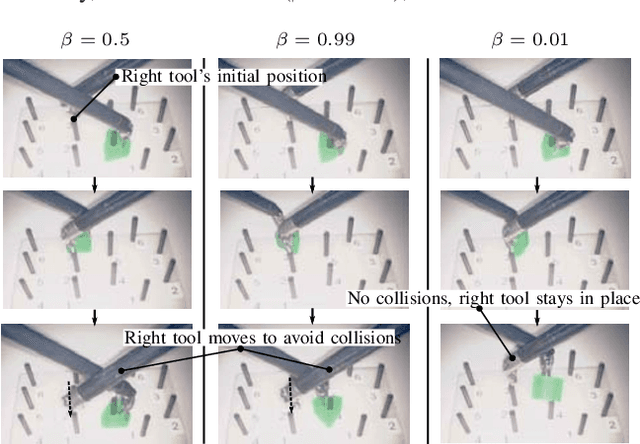

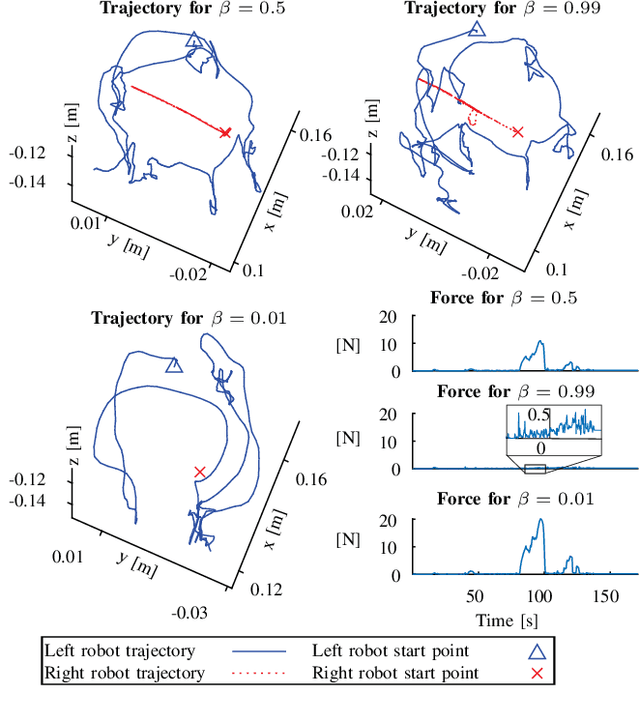

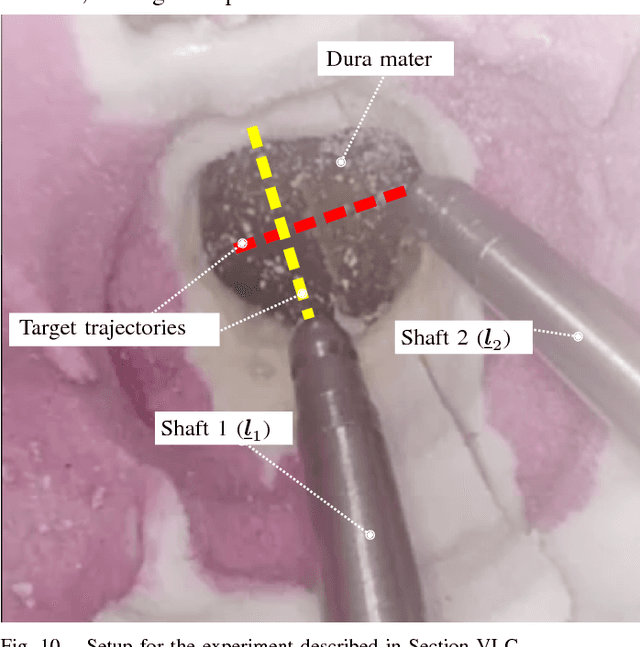

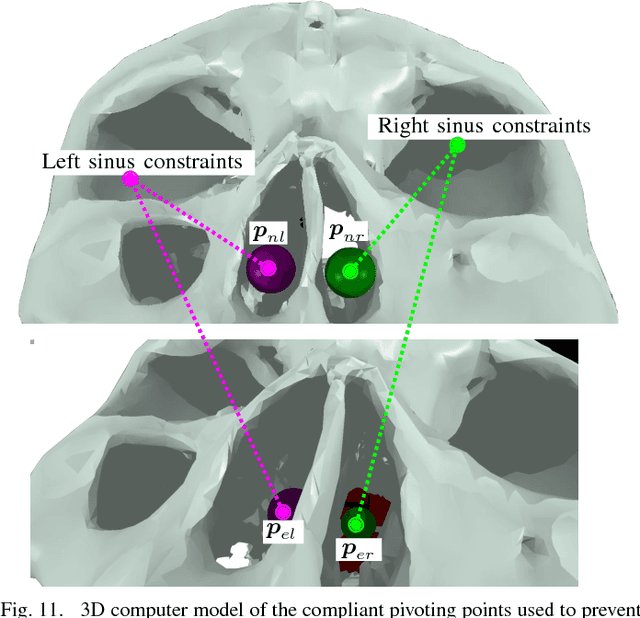

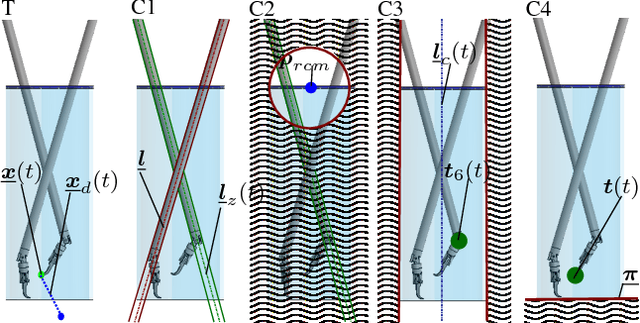

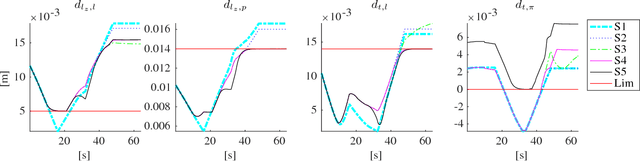

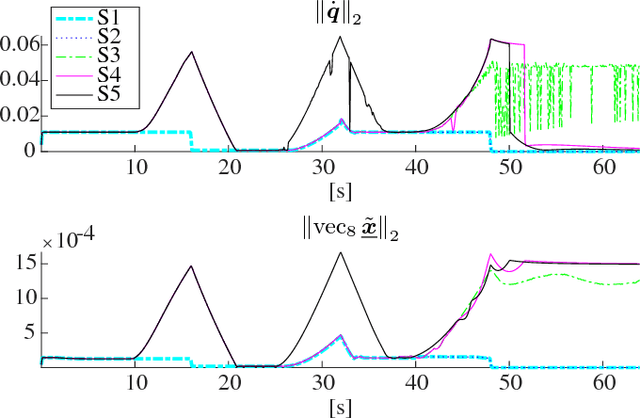

Abstract: Robotic assistance allows surgeons to perform dexterous and tremor-free procedures, but robotic aid is still underrepresented in procedures with constrained workspaces, such as deep brain neurosurgery and endonasal surgery. In these procedures, surgeons have restricted vision to areas near the surgical tooltips, which increases the risk of unexpected collisions between the shafts of the instruments and their surroundings. In this work, our vector-field-inequalities method is extended to provide dynamic active constraints to any number of robots and moving objects sharing the same workspace. The method is evaluated with experiments and simulations in which robot tools have to avoid collisions autonomously and in real time, in a constrained endonasal surgical environment. Simulations show that with our method the combined trajectory error of two robotic systems is optimal. Experiments using a real robotic system show that the method can autonomously prevent collisions between the moving robots themselves and between the robots and the environment. Moreover, the framework is also successfully verified under teleoperation with tool-tissue interactions.

Active Constraints using Vector Field Inequalities for Surgical Robots

Apr 11, 2018

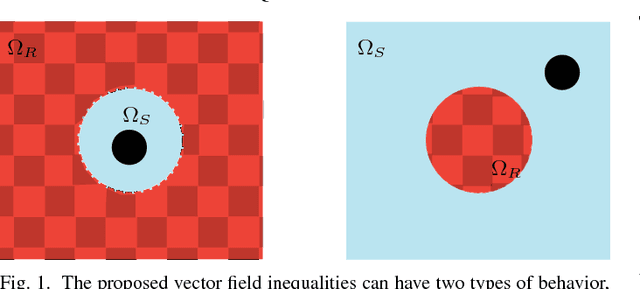

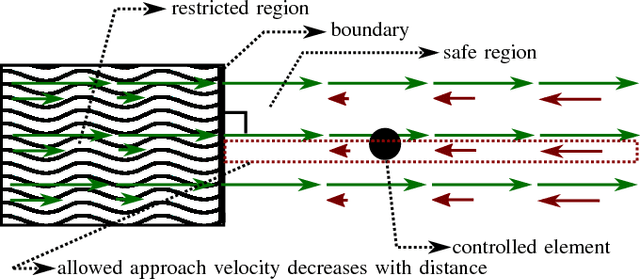

Abstract: Robotic assistance allows surgeons to perform dexterous and tremor-free procedures, but is still underrepresented in deep brain neurosurgery and endonasal surgery where the workspace is constrained. In these conditions, the vision of surgeons is restricted to areas near the surgical tool tips, which increases the risk of unexpected collisions between the shafts of the instruments and their surroundings, in particular in areas outside the surgical field-of-view. Active constraints can be used to prevent the tools from entering restricted zones and thus avoid collisions. In this paper, a vector field inequality is proposed that guarantees that tools do not enter restricted zones. Moreover, in contrast with early techniques, the proposed method limits the tool approach velocity in the direction of the forbidden zone boundary, guaranteeing smooth behavior and leaving tangential velocities undisturbed. The proposed method is evaluated in simulations featuring two eight-degree-of-freedom manipulators that were custom-designed for deep neurosurgery. The results show that both manipulator-manipulator and manipulator-boundary collisions can be avoided using the vector field inequalities.
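The idea of limiting only the approach velocity toward a forbidden-zone boundary can be sketched for a single point tool and a planar boundary. This is a simplified illustration, not the paper's implementation, which the abstract describes for multi-degree-of-freedom manipulators. The inequality used here, "approach rate >= -gain * distance", is an assumed form of such a constraint, and the function name `limit_approach_velocity`, the `gain` parameter, and the example plane are all hypothetical.

```python
def limit_approach_velocity(position, velocity, plane_point, plane_normal, gain):
    """Clip only the velocity component that approaches the plane too fast.

    Assumes plane_normal is a unit vector pointing into the safe region and
    that the tool currently lies on the safe side of the plane.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    offset = [p - q for p, q in zip(position, plane_point)]
    distance = dot(plane_normal, offset)         # signed distance to the plane
    approach_rate = dot(plane_normal, velocity)  # time derivative of distance
    lower_bound = -gain * distance               # assumed inequality bound

    if approach_rate >= lower_bound:
        return list(velocity)                    # constraint already satisfied

    # Add just enough velocity along the normal to meet the bound; the
    # tangential component of the velocity is left unchanged.
    correction = lower_bound - approach_rate
    return [v + correction * n for v, n in zip(velocity, plane_normal)]


# Hypothetical example: tool 0.1 above the plane z = 0, moving sideways and down.
safe_velocity = limit_approach_velocity(
    position=[0.0, 0.0, 0.1],
    velocity=[1.0, 0.0, -1.0],
    plane_point=[0.0, 0.0, 0.0],
    plane_normal=[0.0, 0.0, 1.0],
    gain=2.0,
)
print(safe_velocity)  # sideways motion kept, downward speed reduced to about 0.2
```

Because the bound shrinks with the distance, the allowed approach speed decays smoothly to zero at the boundary instead of stopping the tool abruptly.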

* Accepted on ICRA 2018, 8 pages
