Nimesh Nagururu

Beyond the Manual Touch: Situational-aware Force Control for Increased Safety in Robot-assisted Skullbase Surgery

Jan 22, 2024

Abstract: Purpose - Skullbase surgery demands exceptional precision when removing bone in the lateral skull base. Robotic assistance can alleviate the effect of human sensory-motor limitations. However, the stiffness and inertia of the robot can significantly impact the surgeon's perception and control of the tool-to-tissue interaction forces. Methods - We present a situational-aware force control technique aimed at regulating interaction forces during robot-assisted skullbase drilling. The contextual interaction information derived from the digital twin environment is used to enhance sensory perception and suppress undesired high forces. Results - To validate our approach, we conducted initial feasibility experiments involving one medical student and two engineering students. The experiment focused on further drilling around critical structures following cortical mastoidectomy. The experimental results demonstrate that robotic assistance coupled with our proposed control scheme effectively limited undesired interaction forces when compared to robotic assistance without the proposed force control. Conclusions - The proposed force control techniques show promise in significantly reducing undesired interaction forces during robot-assisted skullbase surgery. These findings contribute to the ongoing efforts to enhance surgical precision and safety in complex procedures involving the lateral skull base.

Haptic-Assisted Collaborative Robot Framework for Improved Situational Awareness in Skull Base Surgery

Jan 22, 2024

Abstract: Skull base surgery is a demanding field in which surgeons operate in and around the skull while avoiding critical anatomical structures including nerves and vasculature. While image-guided surgical navigation is the prevailing standard, limitations remain: it requires personalized planning and does not replace the role of a skilled surgeon. This paper presents a collaboratively controlled robotic system tailored for assisted drilling in skull base surgery. Our central hypothesis is that this collaborative system, enriched with haptic assistive modes to enforce virtual fixtures, has the potential to significantly enhance surgical safety, streamline efficiency, and alleviate the physical demands on the surgeon. The paper describes the system development work required to enable these virtual fixtures through haptic assistive modes. To validate our system's performance and effectiveness, we conducted initial feasibility experiments involving a medical student and two experienced surgeons. The experiment focused on drilling around critical structures following cortical mastoidectomy, utilizing dental stone phantom and cadaveric models. Our experimental results demonstrate that our proposed haptic feedback mechanism enhances the safety of drilling around critical structures compared to systems lacking haptic assistance. With the aid of our system, surgeons were able to safely skeletonize the critical structures without breaching any of them, even under an obstructed view of the surgical site.

Fully Immersive Virtual Reality for Skull-base Surgery: Surgical Training and Beyond

Feb 27, 2023

Abstract: Purpose: A fully immersive virtual reality system (FIVRS), where surgeons can practice procedures on virtual anatomies, is a scalable and cost-effective alternative to cadaveric training. The fully digitized virtual surgeries can also be used to assess the surgeon's skills automatically using metrics that are otherwise hard to collect in reality. Thus, we present FIVRS, a virtual reality (VR) system designed for skull-base surgery, which combines high-fidelity surgical simulation software with a real hardware setup. Methods: FIVRS integrates software and hardware features to allow surgeons to use normal clinical workflows for VR. FIVRS uses advanced rendering designs and drilling algorithms for realistic surgery. We also design a head-mounted display with ergonomics similar to that of surgical microscopes. A wide range of digitized data from VR surgery is recorded, including eye gaze, motion, force, and video of the surgery for post-analysis. A user-friendly interface is also designed to ease the learning curve of using FIVRS. Results: We present results from a user study involving surgeons to showcase the efficacy of FIVRS and its generated data. Conclusion: We present FIVRS, a fully immersive VR system for skull base surgery. FIVRS features a realistic software simulation coupled with modern hardware for improved realism. The system is completely open-source and provides feature-rich data in an industry-standard format.

Twin-S: A Digital Twin for Skull-base Surgery

Nov 21, 2022

Abstract: Purpose: Digital twins are virtual interactive models of the real world, exhibiting identical behavior and properties. In surgical applications, computational analysis from digital twins can be used, for example, to enhance situational awareness. Methods: We present a digital twin framework for skull-base surgeries, named Twin-S, which can be integrated within various image-guided interventions seamlessly. Twin-S combines high-precision optical tracking and real-time simulation. We rely on rigorous calibration routines to ensure that the digital twin representation precisely mimics all real-world processes. Twin-S models and tracks the critical components of skull-base surgery, including the surgical tool, patient anatomy, and surgical camera. Significantly, Twin-S updates and reflects real-world drilling of the anatomical model at frame rate. Results: We extensively evaluate the accuracy of Twin-S, which achieves an average error of 1.39 mm during the drilling process. We further illustrate how segmentation masks derived from the continuously updated digital twin can augment the surgical microscope view in a mixed reality setting, where bone requiring ablation is highlighted to provide surgeons additional situational awareness. Conclusion: We present Twin-S, a digital twin environment for skull-base surgery. Twin-S tracks and updates the virtual model in real time given measurements from modern tracking technologies. Future research on complementing optical tracking with higher-precision vision-based approaches may further increase the accuracy of Twin-S.

Virtual Reality for Synergistic Surgical Training and Data Generation

Nov 15, 2021

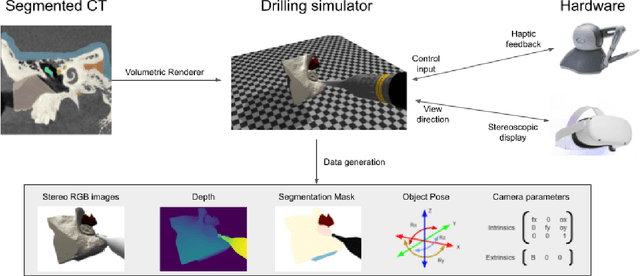

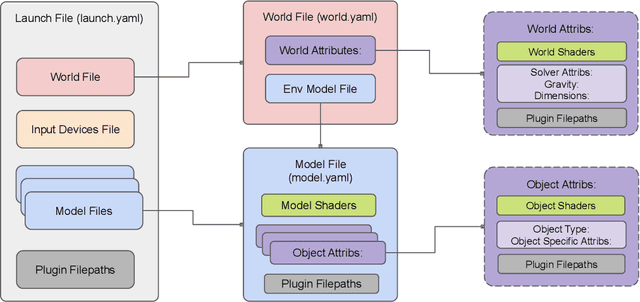

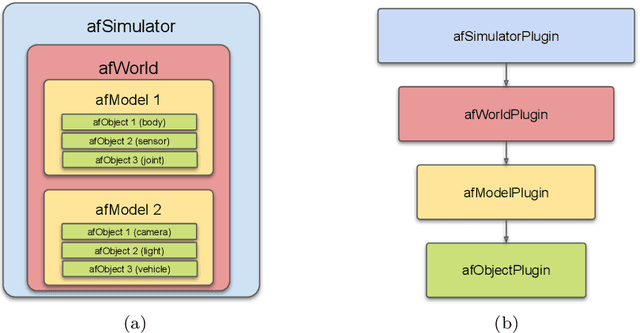

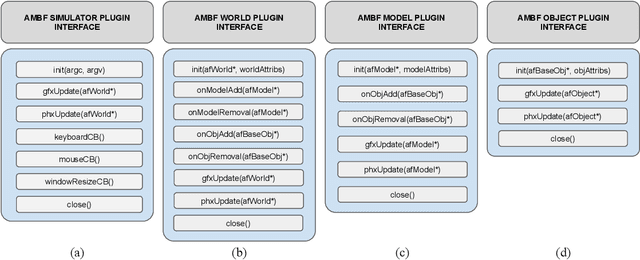

Abstract: Surgical simulators not only allow planning and training of complex procedures, but also offer the ability to generate structured data for algorithm development, which may be applied in image-guided computer assisted interventions. While there have been efforts to develop either training platforms for surgeons or data generation engines, these two features, to our knowledge, have not been offered together. We present our developments of a cost-effective and synergistic framework, named Asynchronous Multibody Framework Plus (AMBF+), which generates data for downstream algorithm development simultaneously with users practicing their surgical skills. AMBF+ offers stereoscopic display on a virtual reality (VR) device and haptic feedback for immersive surgical simulation. It can also generate diverse data such as object poses and segmentation maps. AMBF+ is designed with a flexible plugin setup which allows for unobtrusive extension for simulation of different surgical procedures. We show one use case of AMBF+ as a virtual drilling simulator for lateral skull-base surgery, where users can actively modify the patient anatomy using a virtual surgical drill. We further demonstrate how the data generated can be used for validating and training downstream computer vision algorithms.
