Javad Fotouhi

From Perspective X-ray Imaging to Parallax-Robust Orthographic Stitching

Mar 05, 2020

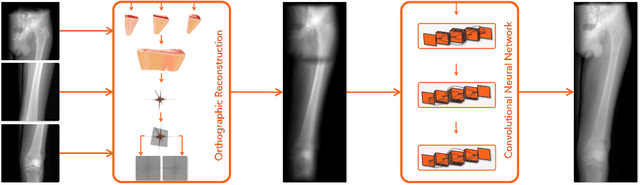

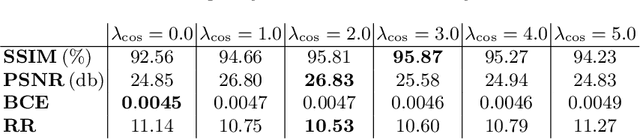

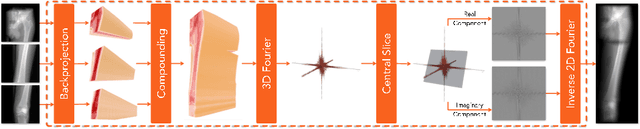

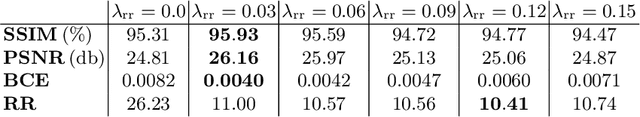

Abstract: Stitching images acquired under perspective projective geometry is a relevant topic in computer vision with multiple applications ranging from smartphone panoramas to the construction of digital maps. Image stitching is an equally prominent challenge in medical imaging, where the limited field-of-view captured by single images prohibits holistic analysis of patient anatomy. The barrier that prevents straightforward mosaicing of 2D images is depth mismatch due to parallax. In this work, we leverage the Fourier slice theorem to aggregate information from multiple transmission images in parallax-free domains using fundamental principles of X-ray image formation. The semantics of the stitched image are restored using a novel deep learning strategy that exploits similarity measures designed around frequency, as well as dense and sparse spatial image content. Our pipeline not only stitches images but also provides orthographic reconstruction that enables metric measurements of clinically relevant quantities directly on the 2D image plane.
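As a small illustration of the Fourier slice theorem named in this abstract, the sketch below checks its discrete 2D form with NumPy: the 1D Fourier transform of a parallel (orthographic) projection equals the zero-frequency row of the image's 2D Fourier transform. The function names and the random toy image are assumptions made for illustration; this is not the authors' stitching pipeline, which operates on perspective X-ray data.

```python
import numpy as np

def projection_spectrum(image: np.ndarray) -> np.ndarray:
    """1D Fourier transform of the parallel projection along axis 0."""
    projection = image.sum(axis=0)  # discrete line integrals down each column
    return np.fft.fft(projection)

def central_slice(image: np.ndarray) -> np.ndarray:
    """Zero-frequency row of the 2D Fourier transform (the central slice)."""
    return np.fft.fft2(image)[0, :]

# Toy data (hypothetical): any real-valued 2D array works.
rng = np.random.default_rng(0)
toy_image = rng.random((64, 48))

# Largest absolute difference between the two spectra; expected to be
# at the level of floating-point round-off.
max_gap = np.max(np.abs(projection_spectrum(toy_image) - central_slice(toy_image)))
```

The identity holds exactly only for parallel-beam projections, which is one way to see why an orthographic domain is free of the parallax that affects perspective images.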

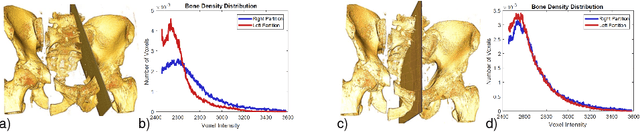

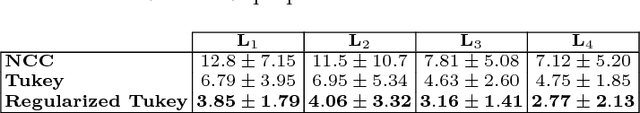

Exploring Partial Intrinsic and Extrinsic Symmetry in 3D Medical Imaging

Mar 04, 2020

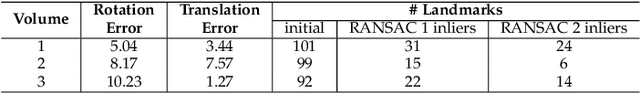

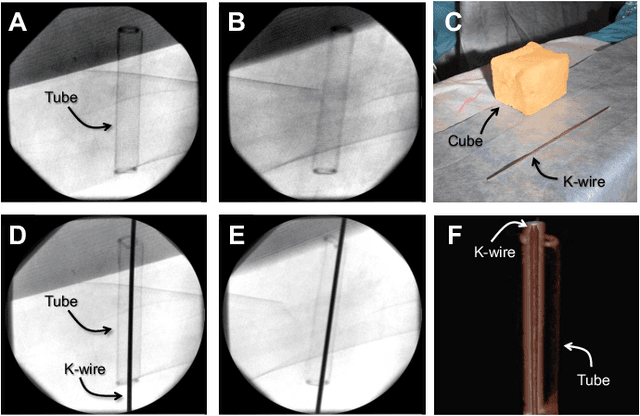

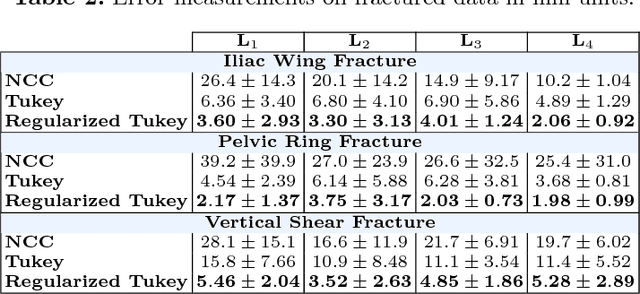

Abstract: We present a novel methodology to detect imperfect bilateral symmetry in CT of human anatomy. In this paper, the structurally symmetric nature of the pelvic bone is explored and is used to provide interventional image augmentation for treatment of unilateral fractures in patients with traumatic injuries. The mathematical basis of our solution is the incorporation of attributes and characteristics that satisfy the properties of intrinsic and extrinsic symmetry and are robust to outliers. In the first step, feature points that satisfy intrinsic symmetry are automatically detected in the Möbius space defined on the CT data. These features are then pruned via a two-stage RANSAC to attain correspondences that also satisfy extrinsic symmetry. Then, a disparity function based on Tukey's biweight robust estimator is introduced and minimized to identify a symmetry plane parametrization that yields maximum contralateral similarity. Finally, a novel regularization term is introduced to enhance similarity between bone density histograms across the partial symmetry plane, relying on the important biological observation that, even if injured, the dislocated bone segments remain within the body. Our extensive evaluations on various cases of common fracture types demonstrate the validity of the novel concepts and the robustness and accuracy of the proposed method.
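For readers unfamiliar with Tukey's biweight, the sketch below gives the standard textbook form of its loss function, which grows like a squared error for small residuals and saturates at a constant beyond a cutoff `c`, so gross outliers (for example, dislocated fragments) stop influencing the fit. The function name and the default `c = 4.685` (a common tuning constant for unit-scale residuals) are assumptions; the abstract does not state how the authors parametrize or scale the estimator.

```python
import numpy as np

def tukey_biweight(residuals, c=4.685):
    """Tukey's biweight loss rho(r), evaluated elementwise.

    rho(r) = (c^2 / 6) * (1 - (1 - (r / c)^2)^3)  for |r| <= c
    rho(r) = c^2 / 6                              for |r| >  c
    The cutoff c is an assumed default, not a value from the paper.
    """
    r = np.asarray(residuals, dtype=float)
    saturated = c**2 / 6.0
    inside = saturated * (1.0 - (1.0 - (r / c) ** 2) ** 3)
    return np.where(np.abs(r) <= c, inside, saturated)

# Small residuals are penalized smoothly; large ones all cost the same.
losses = tukey_biweight(np.array([0.0, 1.0, 2.0, 50.0, 500.0]))
```

Because the loss is bounded, a minimizer built on it is driven by the voxels that agree across the candidate plane and is not dominated by those that do not.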

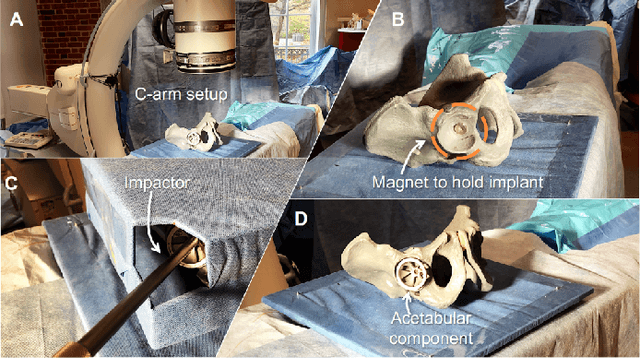

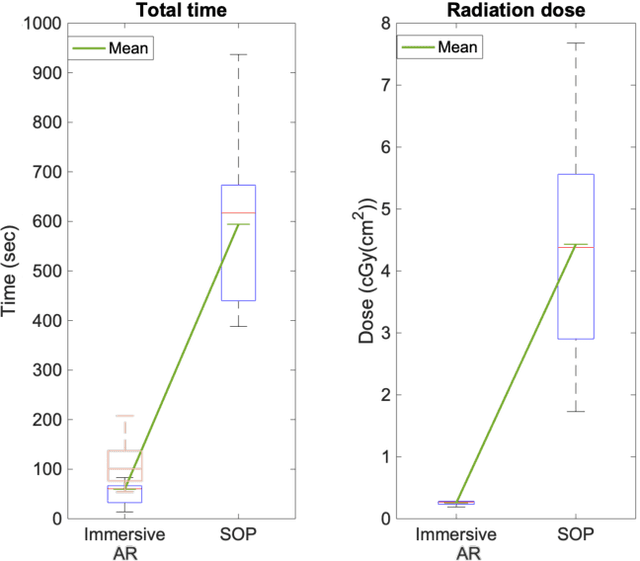

Spatiotemporal-Aware Augmented Reality: Redefining HCI in Image-Guided Therapy

Mar 04, 2020

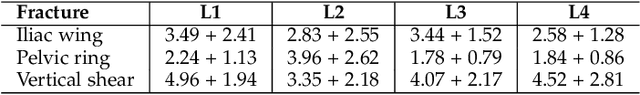

Abstract: Suboptimal interaction with patient data and challenges in mastering 3D anatomy based on ill-posed 2D interventional images are essential concerns in image-guided therapies. Augmented reality (AR) has been introduced in the operating rooms in the last decade; however, in image-guided interventions, it has often only been considered as a visualization device improving traditional workflows. As a consequence, the technology is gaining only a minimum of the maturity that it requires to redefine procedures, user interfaces, and interactions. The main contribution of this paper is to reveal how exemplary workflows are redefined by taking full advantage of head-mounted displays when entirely co-registered with the imaging system at all times. The proposed AR landscape is enabled by co-localizing the users and the imaging devices via the operating room environment and exploiting all involved frustums to move spatial information between different bodies. The system's awareness of the geometric and physical characteristics of X-ray imaging allows the redefinition of different human-machine interfaces. We demonstrate that this AR paradigm is generic, and can benefit a wide variety of procedures. Our system achieved an error of $4.76\pm2.91$ mm for placing a K-wire in a fracture management procedure, and yielded errors of $1.57\pm1.16^\circ$ and $1.46\pm1.00^\circ$ in the abduction and anteversion angles, respectively, for total hip arthroplasty. We hope that our holistic approach towards improving the interface of surgery not only augments the surgeon's capabilities but also augments the surgical team's experience in carrying out an effective intervention with reduced complications and provides novel approaches of documenting procedures for training purposes.

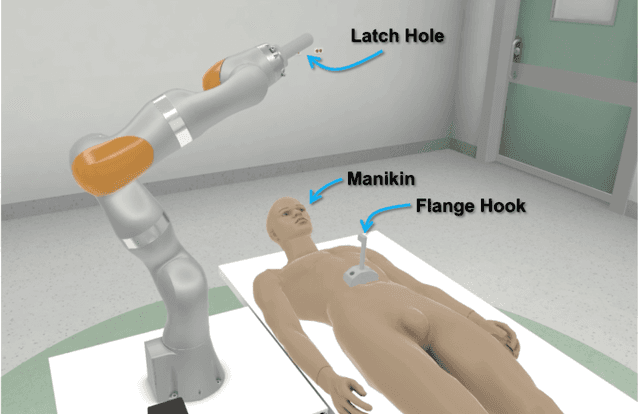

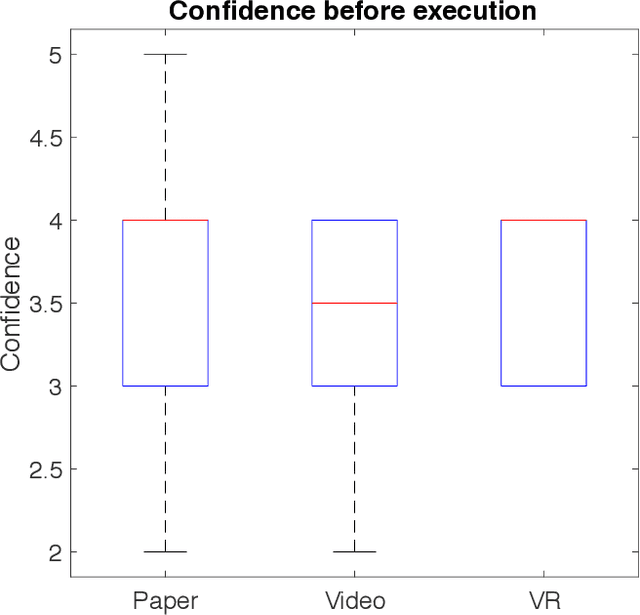

On the Effectiveness of Virtual Reality-based Training for Robotic Setup

Mar 03, 2020

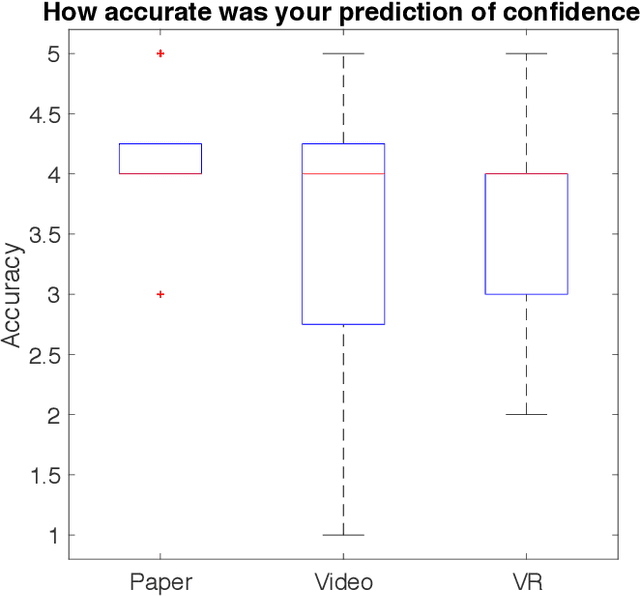

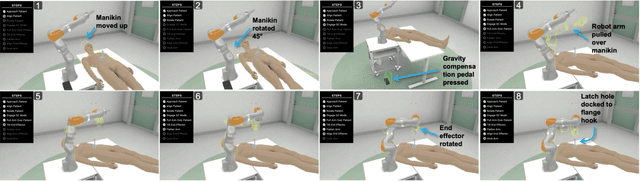

Abstract: Virtual Reality (VR) is rapidly increasing in popularity as a teaching tool. It allows for the creation of a highly immersive, three-dimensional virtual environment intended to simulate real-life environments. With more robots saturating industries from manufacturing to healthcare, there is a need to train end-users on how to set up, operate, tear down, and troubleshoot the robot. Even though VR has become widely used in training surgeons on the psychomotor skills associated with operating the robot, little research has been done to see how the benefits of VR could translate to teaching the bedside staff tasked with supporting the robot during the full end-to-end surgical procedure. We trained 30 participants on how to set up a robotic arm in an environment mimicking clinical setup. We divided these participants equally into 3 groups, with one group trained with paper-based instructions, one with video-based instructions, and one with VR-based instructions. We then compared and contrasted these three different training methods. VR-based and paper-based training were strongly favored over video-based training. VR-trained participants achieved slightly higher fidelity of individual robotic joint angles, suggesting better comprehension of the spatial awareness skills necessary to achieve desired arm positioning. In addition, VR resulted in higher reproducibility of setup fidelity and more consistency in user confidence levels as compared to paper and video-based training.

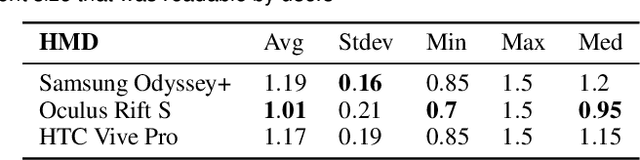

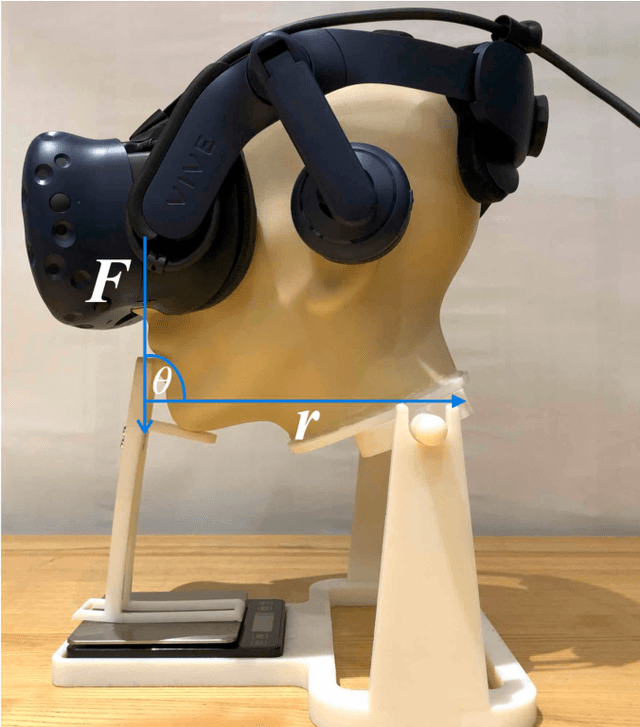

A Comparative Analysis of Virtual Reality Head-Mounted Display Systems

Dec 05, 2019

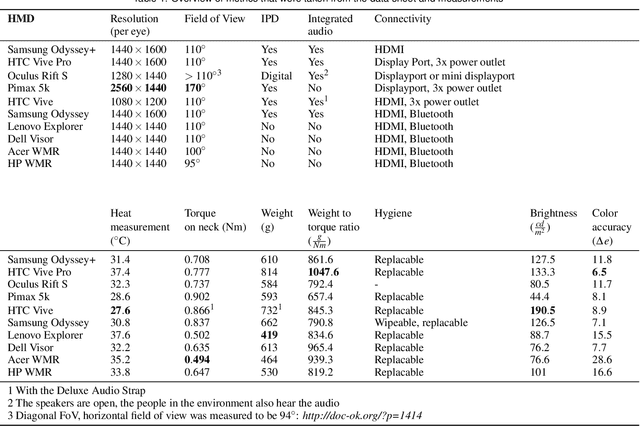

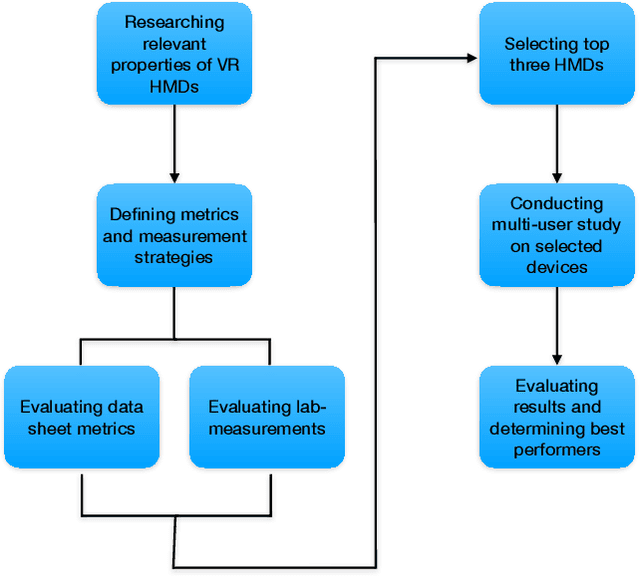

Abstract: With recent advances in Virtual Reality (VR) technology, the deployment of such technology will dramatically increase in non-entertainment environments, such as professional education and training, manufacturing, service, or low frequency/high risk scenarios. Clinical education is an area that especially stands to benefit from VR technology due to the complexity, high cost, and difficult logistics. The effectiveness of the deployment of VR systems is subject to factors that may not necessarily be considered for devices targeting the entertainment market. In this work, we systematically compare a wide range of VR Head-Mounted Display (HMD) technologies and designs by defining a new set of metrics that are 1) relevant to most generic VR solutions and 2) of paramount importance for VR-based education and training. We evaluated ten HMDs based on various criteria, including neck strain, heat development, and color accuracy. Other metrics such as text readability, comfort, and contrast perception were evaluated in a multi-user study on three selected HMDs, namely Oculus Rift S, HTC Vive Pro and Samsung Odyssey+. Results indicate that the HTC Vive Pro performs best with regard to comfort, display quality and compatibility with glasses.

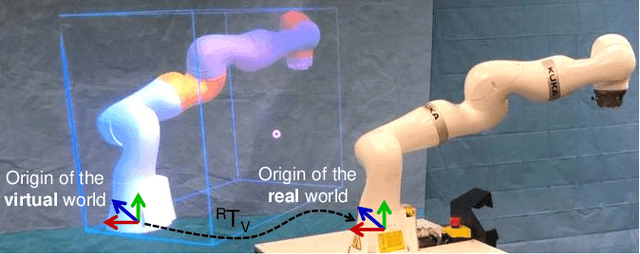

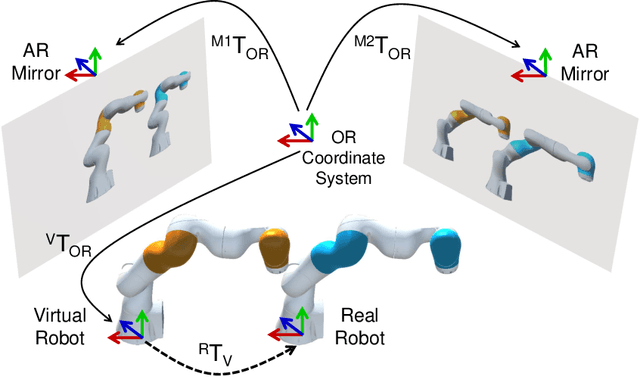

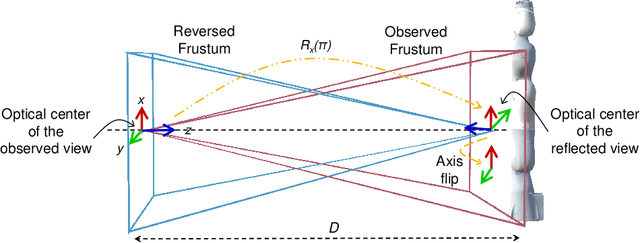

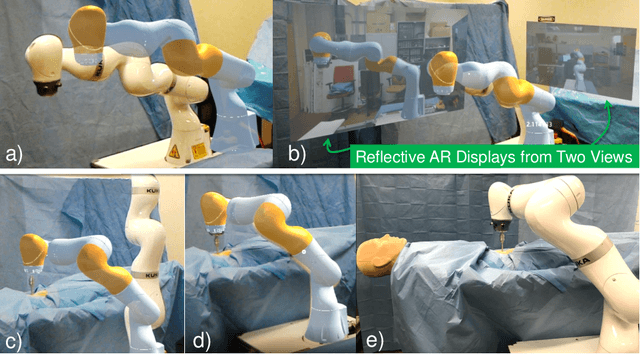

Reflective-AR Display: An Interaction Methodology for Virtual-Real Alignment in Medical Robotics

Jul 23, 2019

Abstract: Robot-assisted minimally invasive surgery has been shown to improve patient outcomes, as well as reduce complications and recovery time for several clinical applications. However, increasingly configurable robotic arms require careful setup by surgical staff to maximize anatomical reach and avoid collisions. Furthermore, safety regulations prevent automatically driving robotic arms to this optimal positioning. We propose a Head-Mounted Display (HMD) based augmented reality (AR) guidance system for optimal surgical arm setup. In this case, the staff equipped with HMD aligns the robot with its planned virtual counterpart. The main challenge, however, is the perspective ambiguity that hinders such a collaborative robotic solution. To overcome this challenge, we introduce a novel registration concept for intuitive alignment of such AR content by providing a multi-view AR experience via reflective-AR displays that show the augmentations from multiple viewpoints. Using this system, operators can visualize different perspectives simultaneously while actively adjusting the pose to determine the registration transformation that most closely superimposes the virtual onto the real. The experimental results demonstrate improvement in the interactive alignment of a virtual and real robot when using a reflective-AR display. We also present measurements from configuring a robotic manipulator in a simulated trocar placement surgery using the AR guidance methodology.

Augmented Reality-based Feedback for Technician-in-the-loop C-arm Repositioning

Jun 22, 2018

Abstract: Interventional C-arm imaging is crucial to percutaneous orthopedic procedures as it enables the surgeon to monitor the progress of surgery on the anatomy level. Minimally invasive interventions require repeated acquisition of X-ray images from different anatomical views to verify tool placement. Achieving and reproducing these views often comes at the cost of increased surgical time and radiation dose to both patient and staff. This work proposes a marker-free "technician-in-the-loop" Augmented Reality (AR) solution for C-arm repositioning. The X-ray technician operating the C-arm interventionally is equipped with a head-mounted display capable of recording desired C-arm poses in 3D via an integrated infrared sensor. For C-arm repositioning to a particular target view, the recorded C-arm pose is restored as a virtual object and visualized in an AR environment, serving as a perceptual reference for the technician. We conduct experiments in a setting simulating orthopedic trauma surgery. Our proof-of-principle findings indicate that the proposed system can decrease the 2.76 X-ray images required per desired view down to zero, suggesting substantial reductions of radiation dose during C-arm repositioning. The proposed AR solution is a first step towards facilitating communication between the surgeon and the surgical staff, improving the quality of surgical image acquisition, and enabling context-aware guidance for surgery rooms of the future. The concept of technician-in-the-loop design will become relevant to various interventions considering the expected advancements of sensing and wearable computing in the near future.

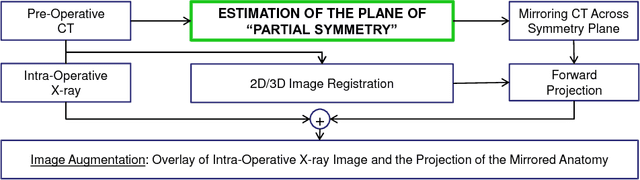

Exploiting Partial Structural Symmetry For Patient-Specific Image Augmentation in Trauma Interventions

Apr 09, 2018

Abstract: In unilateral pelvic fracture reductions, surgeons attempt to reconstruct the bone fragments such that bilateral symmetry in the bony anatomy is restored. We propose to exploit this "structurally symmetric" nature of the pelvic bone, and provide intra-operative image augmentation to assist the surgeon in repairing dislocated fragments. The main challenge is to automatically estimate the desired plane of symmetry within the patient's pre-operative CT. We propose to estimate this plane using a non-linear optimization strategy, by minimizing Tukey's biweight robust estimator, relying on the partial symmetry of the anatomy. Moreover, a regularization term is designed to enforce the similarity of bone density histograms on both sides of this plane, relying on the biological fact that, even if injured, the dislocated bone segments remain within the body. The experimental results demonstrate the performance of the proposed method in estimating this "plane of partial symmetry" using CT images of both healthy and injured anatomy. Examples of unilateral pelvic fractures are used to show how intra-operative X-ray images could be augmented with the forward-projections of the mirrored anatomy, acting as an objective road-map for fracture reduction procedures.
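The mirroring step mentioned in this abstract rests on a simple geometric operation: reflecting 3D points across a plane. The sketch below shows that operation for a plane written as $\hat{n} \cdot x = d$ with unit normal $\hat{n}$. The function name and the plane parametrization are assumptions chosen for illustration; the abstract does not specify how the authors represent the symmetry plane or resample the CT volume.

```python
import numpy as np

def mirror_points(points, normal, offset):
    """Reflect 3D points across the plane {x : n_hat . x = offset}.

    points: array-like of shape (N, 3) or (3,)
    normal: plane normal (normalized internally to n_hat)
    offset: signed distance of the plane from the origin along n_hat
    Returns an array of shape (N, 3).
    """
    n_hat = np.asarray(normal, dtype=float)
    n_hat = n_hat / np.linalg.norm(n_hat)
    p = np.atleast_2d(np.asarray(points, dtype=float))
    signed_dist = p @ n_hat - offset           # distance of each point to the plane
    return p - 2.0 * signed_dist[:, None] * n_hat

# Hypothetical example: reflect across the plane x = 0 (a sagittal-like plane).
original = np.array([[1.0, 2.0, 3.0], [-4.0, 0.5, 0.0]])
mirrored = mirror_points(original, normal=[1.0, 0.0, 0.0], offset=0.0)
```

Applying the reflection twice returns the original points, which makes a convenient sanity check for any estimated plane parameters.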

Closing the Calibration Loop: An Inside-out-tracking Paradigm for Augmented Reality in Orthopedic Surgery

Mar 22, 2018

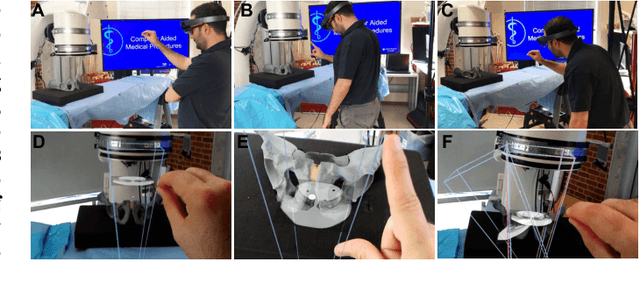

Abstract: In percutaneous orthopedic interventions, the surgeon attempts to reduce and fixate fractures in bony structures. The complexity of these interventions arises when the surgeon performs the challenging task of navigating surgical tools percutaneously only under the guidance of 2D interventional X-ray imaging. Moreover, the intra-operatively acquired data is only visualized indirectly on external displays. In this work, we propose a flexible Augmented Reality (AR) paradigm using optical see-through head-mounted displays. The key technical contribution of this work is the marker-less and dynamic tracking concept which closes the calibration loop between patient, C-arm and the surgeon. This calibration is enabled using Simultaneous Localization and Mapping of the environment of the operating theater. In return, the proposed solution provides in situ visualization of pre- and intra-operative 3D medical data directly at the surgical site. We demonstrate pre-clinical evaluation of a prototype system, and report errors for calibration and target registration. Finally, we demonstrate the usefulness of the proposed inside-out tracking system in achieving "bull's eye" view for C-arm-guided punctures. This AR solution provides an intuitive visualization of the anatomy and can simplify the hand-eye coordination for the orthopedic surgeon.

X-ray-transform Invariant Anatomical Landmark Detection for Pelvic Trauma Surgery

Mar 22, 2018

Abstract: X-ray image guidance enables percutaneous alternatives to complex procedures. Unfortunately, the indirect view onto the anatomy, in addition to projective simplification, substantially increases the task-load for the surgeon. Additional 3D information such as knowledge of anatomical landmarks can benefit surgical decision making in complicated scenarios. Automatic detection of these landmarks in transmission imaging is challenging since image-domain features characteristic of a certain landmark change substantially depending on the viewing direction. Consequently, and to the best of our knowledge, the above problem has not yet been addressed. In this work, we present a method to automatically detect anatomical landmarks in X-ray images independent of the viewing direction. To this end, a sequential prediction framework based on convolutional layers is trained on synthetically generated data of the pelvic anatomy to predict 23 landmarks in single X-ray images. View independence is contingent on training conditions and, here, is achieved on a spherical segment covering (120 x 90) degrees in LAO/RAO and CRAN/CAUD, respectively, centered around AP. On synthetic data, the proposed approach achieves a mean prediction error of $5.6\pm4.5$ mm. We demonstrate that the proposed network is immediately applicable to clinically acquired data of the pelvis. In particular, we show that our intra-operative landmark detection together with pre-operative CT enables X-ray pose estimation which, ultimately, benefits initialization of image-based 2D/3D registration.
