Alex Johnson

Augment Yourself: Mixed Reality Self-Augmentation Using Optical See-through Head-mounted Displays and Physical Mirrors

Jul 06, 2020

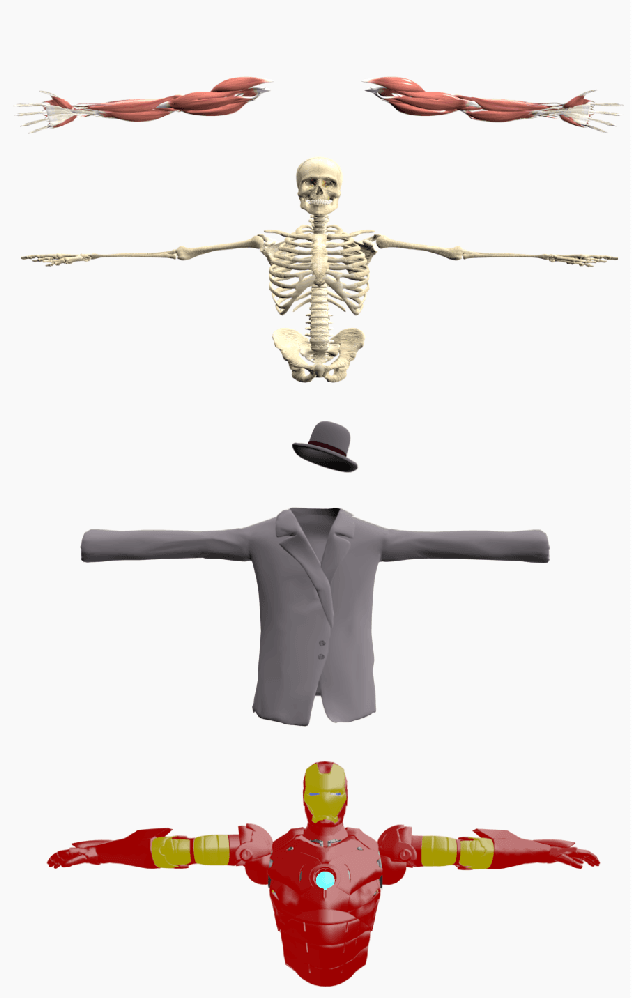

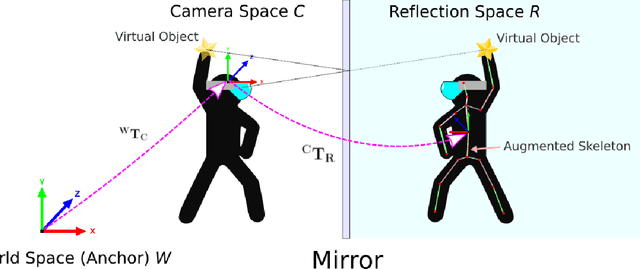

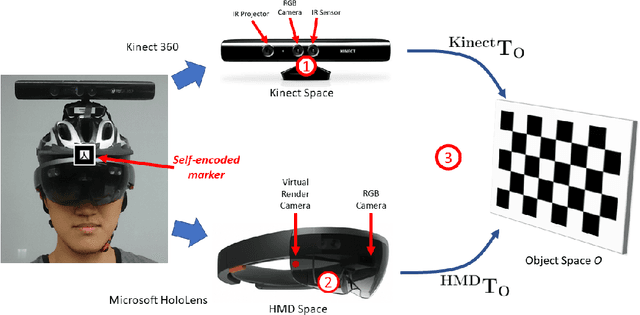

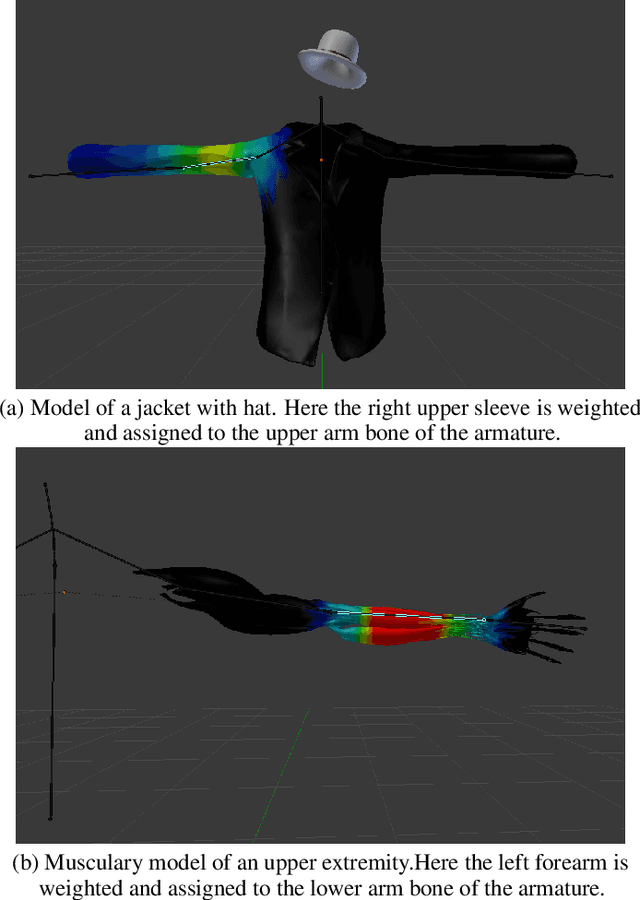

Abstract: Optical see-through head-mounted displays (OST HMDs) are one of the key technologies for merging virtual objects and physical scenes to provide an immersive mixed reality (MR) environment to their users. A fundamental limitation of HMDs is that users themselves cannot be augmented conveniently because, in a casual posture, only the distal upper extremities are within the field of view of the HMD. Consequently, most MR applications that are centered around the user, such as virtual dressing rooms or learning of body movements, cannot be realized with HMDs. In this paper, we propose a novel concept and prototype system that combines OST HMDs and physical mirrors to enable self-augmentation and provide an immersive MR environment centered around the user. Our system, to the best of our knowledge the first of its kind, estimates the user's pose in the virtual image generated by the mirror using an RGBD camera attached to the HMD and anchors virtual objects to the reflection rather than to the user directly. We evaluate our system quantitatively with respect to calibration accuracy and infrared signal degradation effects due to the mirror, and show its potential in applications where large mirrors are already an integral part of the facility. In particular, we demonstrate its use for virtual fitting rooms, gaming applications, anatomy learning, and personal fitness. In contrast to competing devices such as LCD-equipped smart mirrors, the proposed system consists of only an HMD with an RGBD camera and thus does not require a prepared environment, making it very flexible and generic. In future work, we will investigate how the system can be optimally used for physical rehabilitation and personal training as a promising application.
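The geometry behind anchoring content to a reflection can be illustrated with a small sketch. It is not the authors' pipeline (the abstract says the system estimates the pose in the mirror image directly from RGBD data); it only shows where the virtual image of a 3D point lies for an ideal planar mirror. The function name `reflect_point` and the plane parameters are hypothetical, chosen for illustration.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def reflect_point(point: Sequence[float],
                  plane_point: Sequence[float],
                  plane_normal: Sequence[float]) -> Vec3:
    """Return the mirror image of `point` across an ideal planar mirror.

    The mirror is described by any point on its surface (`plane_point`)
    and a normal vector (`plane_normal`, need not be unit length).
    Both are assumptions of this sketch, not values from the paper.
    """
    norm = math.sqrt(sum(c * c for c in plane_normal))
    if norm == 0.0:
        raise ValueError("plane_normal must be non-zero")
    n = [c / norm for c in plane_normal]
    # Signed distance from the point to the mirror plane.
    dist = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    # Move the point twice that distance along the normal.
    x, y, z = (p - 2.0 * dist * c for p, c in zip(point, n))
    return (x, y, z)


# A joint 2 m in front of a mirror placed at z = 3 appears 2 m behind it.
virtual_joint = reflect_point((0.0, 0.0, 1.0), (0.0, 0.0, 3.0), (0.0, 0.0, 1.0))
```

Under this idealization, a virtual object anchored at `virtual_joint` would appear attached to the user's reflection when viewed through the HMD.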

Exploring Partial Intrinsic and Extrinsic Symmetry in 3D Medical Imaging

Mar 04, 2020

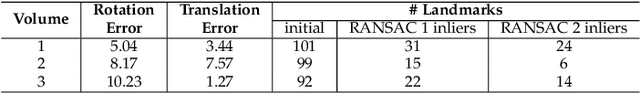

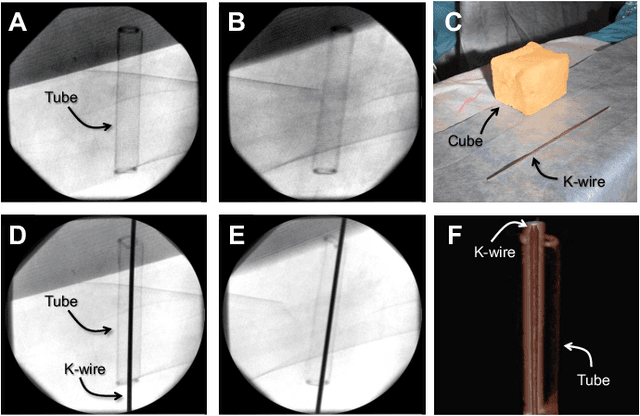

Abstract: We present a novel methodology to detect imperfect bilateral symmetry in CT of human anatomy. In this paper, the structurally symmetric nature of the pelvic bone is explored and used to provide interventional image augmentation for the treatment of unilateral fractures in patients with traumatic injuries. The mathematical basis of our solution is the incorporation of attributes and characteristics that satisfy the properties of intrinsic and extrinsic symmetry and are robust to outliers. In the first step, feature points that satisfy intrinsic symmetry are automatically detected in the Möbius space defined on the CT data. These features are then pruned via a two-stage RANSAC to attain correspondences that also satisfy extrinsic symmetry. Then, a disparity function based on Tukey's biweight robust estimator is introduced and minimized to identify a symmetry plane parametrization that yields maximum contralateral similarity. Finally, a novel regularization term is introduced to enhance similarity between bone density histograms across the partial symmetry plane, relying on the important biological observation that, even if injured, the dislocated bone segments remain within the body. Our extensive evaluations on various cases of common fracture types demonstrate the validity of the novel concepts and the robustness and accuracy of the proposed method.
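To make the role of Tukey's biweight concrete, the following is a minimal sketch of a robust disparity score for a candidate symmetry plane. It is a simplification under stated assumptions: it scores point correspondences rather than the CT intensities the abstract implies, and the names `tukey_biweight` and `symmetry_disparity`, as well as the tuning constant `c = 4.685` (a common default for this estimator), are illustrative choices, not taken from the paper.

```python
import math
from typing import Iterable, Sequence, Tuple

Point = Sequence[float]


def tukey_biweight(residual: float, c: float = 4.685) -> float:
    """Tukey's biweight loss: roughly quadratic near zero, constant beyond c."""
    if abs(residual) > c:
        return c * c / 6.0
    u = 1.0 - (residual / c) ** 2
    return (c * c / 6.0) * (1.0 - u ** 3)


def symmetry_disparity(pairs: Iterable[Tuple[Point, Point]],
                       normal: Point, offset: float,
                       c: float = 4.685) -> float:
    """Sum of robust losses for the plane {x : normal . x = offset}.

    Each pair (p, q) is a hypothesized left/right correspondence; p is
    reflected across the plane and compared with q.
    """
    norm = math.sqrt(sum(a * a for a in normal))
    n = [a / norm for a in normal]
    d = offset / norm
    total = 0.0
    for p, q in pairs:
        signed = sum(a * b for a, b in zip(p, n)) - d
        mirrored = [a - 2.0 * signed * b for a, b in zip(p, n)]
        total += tukey_biweight(math.dist(mirrored, q), c)
    return total
```

Because the loss saturates at `c * c / 6`, a grossly mismatched pair (for example, a displaced fracture fragment) contributes a bounded penalty, so a minimizer over `normal` and `offset` is not dragged toward outliers.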

Spatiotemporal-Aware Augmented Reality: Redefining HCI in Image-Guided Therapy

Mar 04, 2020

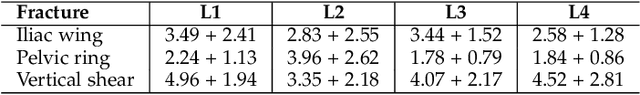

Abstract: Suboptimal interaction with patient data and challenges in mastering 3D anatomy based on ill-posed 2D interventional images are essential concerns in image-guided therapies. Augmented reality (AR) has been introduced in operating rooms in the last decade; however, in image-guided interventions, it has often only been considered as a visualization device improving traditional workflows. As a consequence, the technology has gained only minimal maturity, short of what it requires to redefine procedures, user interfaces, and interactions. The main contribution of this paper is to reveal how exemplary workflows are redefined by taking full advantage of head-mounted displays when entirely co-registered with the imaging system at all times. The proposed AR landscape is enabled by co-localizing the users and the imaging devices via the operating room environment and exploiting all involved frustums to move spatial information between different bodies. The system's awareness of the geometric and physical characteristics of X-ray imaging allows the redefinition of different human-machine interfaces. We demonstrate that this AR paradigm is generic and can benefit a wide variety of procedures. Our system achieved an error of $4.76\pm2.91$ mm for placing K-wire in a fracture management procedure, and yielded errors of $1.57\pm1.16^\circ$ and $1.46\pm1.00^\circ$ in the abduction and anteversion angles, respectively, for total hip arthroplasty. We hope that our holistic approach towards improving the interface of surgery not only augments the surgeon's capabilities but also augments the surgical team's experience in carrying out an effective intervention with reduced complications, and provides novel approaches to documenting procedures for training purposes.

On-the-fly Augmented Reality for Orthopaedic Surgery Using a Multi-Modal Fiducial

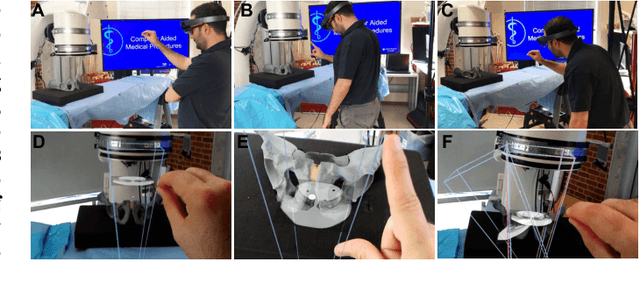

Jan 04, 2018

Abstract: Fluoroscopic X-ray guidance is a cornerstone for percutaneous orthopaedic surgical procedures. However, two-dimensional observations of the three-dimensional anatomy suffer from the effects of projective simplification. Consequently, many X-ray images from various orientations need to be acquired for the surgeon to accurately assess the spatial relations between the patient's anatomy and the surgical tools. In this paper, we present an on-the-fly surgical support system that provides guidance using augmented reality and can be used in quasi-unprepared operating rooms. The proposed system builds upon a multi-modality marker and a simultaneous localization and mapping technique to co-calibrate an optical see-through head-mounted display to a C-arm fluoroscopy system. Then, annotations on the 2D X-ray images can be rendered as virtual objects in 3D, providing surgical guidance. We quantitatively evaluate the components of the proposed system and, finally, design a feasibility study on a semi-anthropomorphic phantom. The accuracy of our system was comparable to the traditional image-guided technique while substantially reducing the number of acquired X-ray images as well as procedure time. Our promising results encourage further research on the interaction between virtual and real objects, which we believe will directly benefit the proposed method. Further, we would like to explore the capabilities of our on-the-fly augmented reality support system in a larger study directed towards common orthopaedic interventions.
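As a rough illustration of what co-calibrating two devices through a shared marker involves, the sketch below chains rigid transforms: if the same marker's pose is known in both the C-arm frame and the HMD frame, the C-arm-to-HMD transform follows by composition. This is a generic textbook construction, not the authors' implementation; all names (`invert_rigid`, `hmd_from_carm`, the example poses) are hypothetical, and the SLAM-based tracking mentioned in the abstract is not modeled.

```python
from typing import List

Matrix = List[List[float]]  # 4x4 homogeneous rigid transform


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t: Matrix) -> Matrix:
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]],
            [0.0, 0.0, 0.0, 1.0]]


def hmd_from_carm(hmd_from_marker: Matrix, carm_from_marker: Matrix) -> Matrix:
    """Map C-arm coordinates to HMD coordinates via the shared marker."""
    return matmul(hmd_from_marker, invert_rigid(carm_from_marker))


def translation(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical marker poses (in mm) as seen from each device.
T = hmd_from_carm(translation(10.0, 0.0, 50.0), translation(0.0, 0.0, 100.0))
```

With such a transform, a 3D point defined in the C-arm frame (for instance, one derived from an X-ray annotation) can be expressed in HMD coordinates for rendering.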

* S. Andress, A. Johnson, M. Unberath, and A. Winkler have contributed equally and are listed in alphabetical order

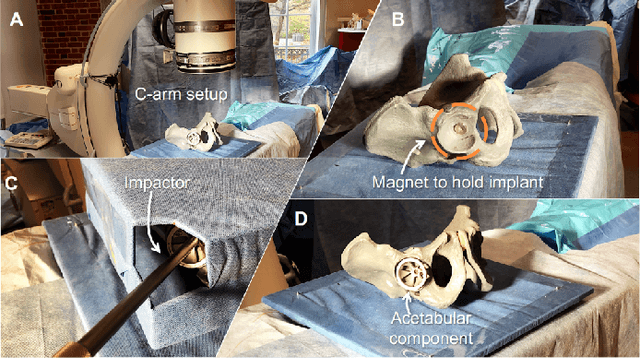

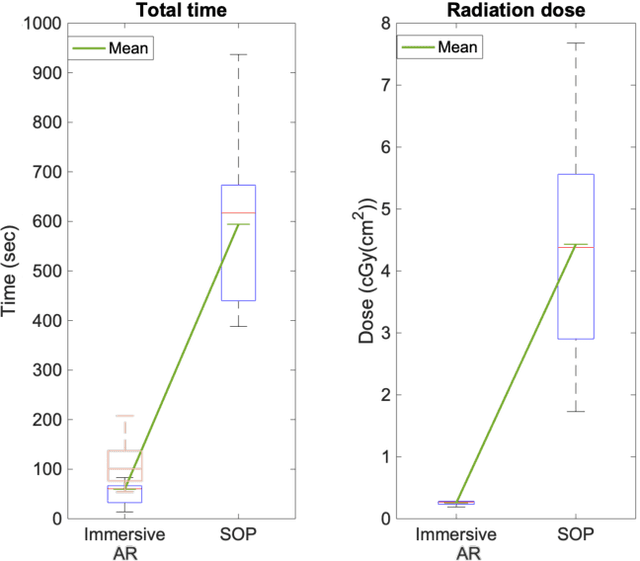

Plan in 2D, execute in 3D: An augmented reality solution for cup placement in total hip arthroplasty

Jan 04, 2018

Abstract: Reproducibly achieving proper implant alignment is a critical step in total hip arthroplasty (THA) procedures that has been shown to substantially affect patient outcome. In current practice, correct alignment of the acetabular cup is verified in C-arm X-ray images that are acquired in an anterior-posterior (AP) view. Favorable surgical outcome is, therefore, heavily dependent on the surgeon's experience in understanding the 3D orientation of a hemispheric implant from 2D AP projection images. This work proposes an easy-to-use intra-operative component planning system based on two C-arm X-ray images, combined with a 3D augmented reality (AR) visualization that simplifies impactor and cup placement according to the planning by providing a real-time RGBD data overlay. We evaluate the feasibility of our system in a user study comprising four orthopedic surgeons at the Johns Hopkins Hospital, and report errors in translation, anteversion, and abduction as low as 1.98 mm, 1.10 degrees, and 0.53 degrees, respectively. The promising performance of this AR solution shows that deploying this system could eliminate the need for excessive radiation, simplify the intervention, and enable reproducibly accurate placement of acetabular implants.
