Arian Mehrfard

Performance Evaluation of Deep Learning-Based State Estimation: A Comparative Study of KalmanNet

Nov 25, 2024

Abstract: Kalman filters (KFs) are fundamental to real-time state estimation applications, including the radar-based tracking systems used in modern driver assistance and safety technologies. For a linear dynamical system with Gaussian noise, the KF is the optimal estimator. However, real-world systems often deviate from these assumptions. This deviation, combined with the success of deep learning across many disciplines, has prompted the exploration of data-driven approaches that leverage deep learning for filtering applications. These learned state estimators are often reported to outperform traditional model-based systems. In this work, one prevalent model, KalmanNet, was selected and evaluated on automotive radar data to assess its performance under real-world conditions and to compare it with an interacting multiple model (IMM) filter. The evaluation is based on raw and normalized errors as well as the state uncertainty. The results demonstrate that KalmanNet is outperformed by the IMM filter and indicate that, while data-driven methods such as KalmanNet show promise, their current lack of reliability and robustness makes them unsuitable for safety-critical applications.
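
The abstract does not specify the motion models or which normalized error metric the study uses. As a hedged illustration only, this pure-Python sketch runs a scalar Kalman filter on a simulated linear-Gaussian random walk (the setting in which the KF is optimal) and scores it with the normalized estimation error squared (NEES), one common normalized error for filters. The random-walk model, the noise variances `q` and `r`, and the function names `kalman_1d` and `nees` are hypothetical choices; this is neither KalmanNet nor the IMM filter from the study.

```python
import random


def kalman_1d(measurements, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter (hypothetical illustration) for a random-walk state
    x_k = x_{k-1} + w_k observed as z_k = x_k + v_k, with w ~ N(0, q), v ~ N(0, r)."""
    x, p = x0, p0
    estimates, variances = [], []
    for z in measurements:
        # Predict: the random-walk model keeps the mean and inflates the variance by q.
        p = p + q
        # Update: the gain weighs the prior variance against the measurement noise r.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
        variances.append(p)
    return estimates, variances


def nees(truth, estimates, variances):
    """Per-step normalized estimation error squared, scalar case: e_k^2 / P_k."""
    return [(t - x) ** 2 / p for t, x, p in zip(truth, estimates, variances)]


# Simulate ground truth and noisy measurements (assumed noise levels).
random.seed(0)
q, r = 0.01, 0.25
truth, zs, x = [], [], 0.0
for _ in range(2000):
    x += random.gauss(0.0, q ** 0.5)
    truth.append(x)
    zs.append(x + random.gauss(0.0, r ** 0.5))

est, var = kalman_1d(zs, q, r)
avg_nees = sum(nees(truth, est, var)) / len(truth)
print(f"mean NEES: {avg_nees:.2f}")  # expected to be near 1.0 for a consistent filter
```

For a filter whose reported variance matches its actual error, each scalar NEES value follows a chi-square distribution with one degree of freedom, so the mean should sit near 1. A mean well above 1 signals an overconfident filter, and a mean well below 1 signals an overly conservative variance estimate.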

Uncertainty-Aware Shape Estimation of a Surgical Continuum Manipulator in Constrained Environments using Fiber Bragg Grating Sensors

May 11, 2024

Abstract: Continuum Dexterous Manipulators (CDMs) are well-suited tools for minimally invasive surgery due to their inherent dexterity and reachability. Nonetheless, their flexible structure and nonlinear curvature pose significant challenges for shape-based feedback control. Fiber Bragg Grating (FBG) sensors have shown great potential for shape sensing: they can be used to estimate the CDM's tip position, after which the shape is reconstructed using optimization algorithms. This optimization, however, is under-constrained and may be ill-posed for complex shapes, causing it to fall into local minima. In this work, we introduce a novel method that directly estimates a CDM's shape from FBG sensor wavelengths using a deep neural network. In addition, we propose integrating uncertainty estimation to address the critical issue of uncertainty in neural network predictions. Neural network predictions are unreliable when the input sample lies outside the training distribution or is corrupted by noise. Recognizing such deviations is crucial when integrating neural networks into surgical robotics, as inaccurate estimates can pose serious risks to the patient. We present a robust method that not only improves precision over existing techniques for FBG-based shape estimation but also incorporates a mechanism to quantify the model's confidence through uncertainty estimation. We validate the uncertainty estimation through extensive experiments, demonstrating its effectiveness and reliability on out-of-distribution (OOD) data and adding a layer of safety and precision to minimally invasive surgical robotics.

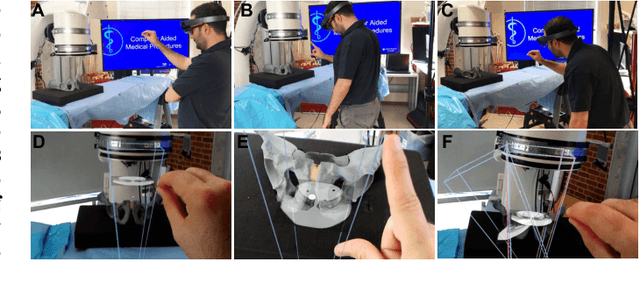

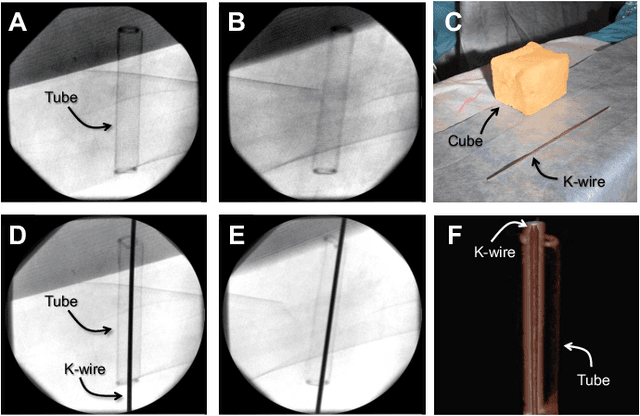

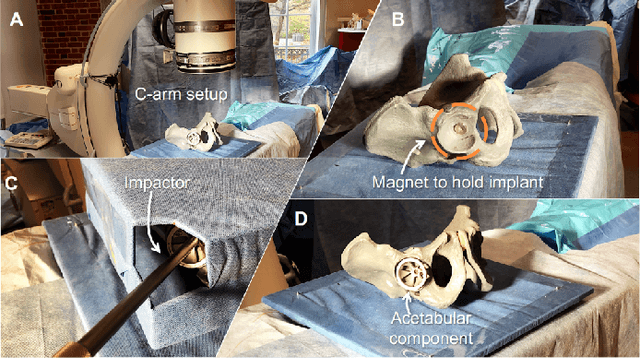

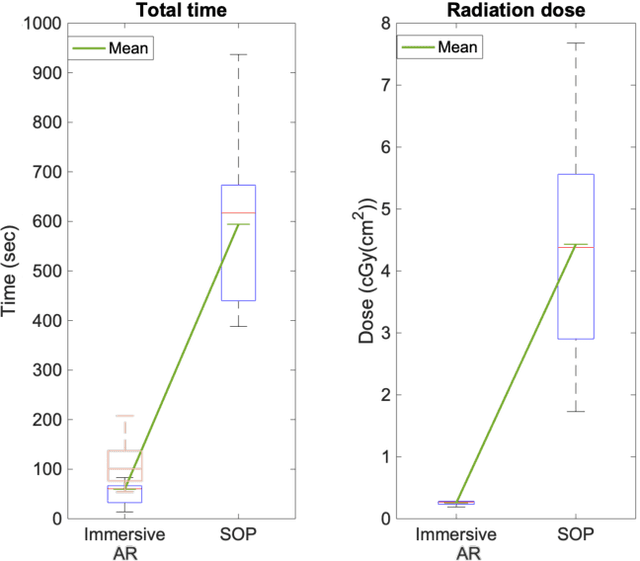

Spatiotemporal-Aware Augmented Reality: Redefining HCI in Image-Guided Therapy

Mar 04, 2020

Abstract: Suboptimal interaction with patient data and the challenge of mastering 3D anatomy from ill-posed 2D interventional images are essential concerns in image-guided therapies. Augmented reality (AR) has been introduced into operating rooms over the last decade; however, in image-guided interventions it has often been considered only as a visualization device that improves traditional workflows. As a consequence, the technology has gained only minimal maturity toward redefining procedures, user interfaces, and interactions. The main contribution of this paper is to reveal how exemplary workflows are redefined by taking full advantage of head-mounted displays that are co-registered with the imaging system at all times. The proposed AR landscape is enabled by co-localizing the users and the imaging devices via the operating room environment and by exploiting all involved frustums to move spatial information between different bodies. The system's awareness of the geometric and physical characteristics of X-ray imaging allows different human-machine interfaces to be redefined. We demonstrate that this AR paradigm is generic and can benefit a wide variety of procedures. Our system achieved an error of $4.76\pm2.91$ mm for placing a K-wire in a fracture management procedure, and yielded errors of $1.57\pm1.16^\circ$ and $1.46\pm1.00^\circ$ in the abduction and anteversion angles, respectively, for total hip arthroplasty. We hope that our holistic approach to improving the interface of surgery not only augments the surgeon's capabilities but also improves the surgical team's experience in carrying out an effective intervention with reduced complications, and provides novel approaches to documenting procedures for training purposes.

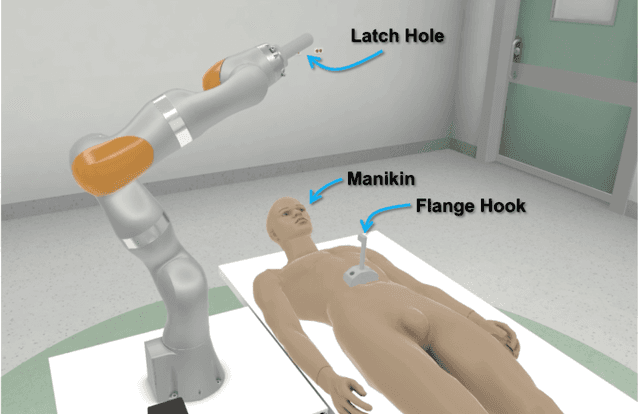

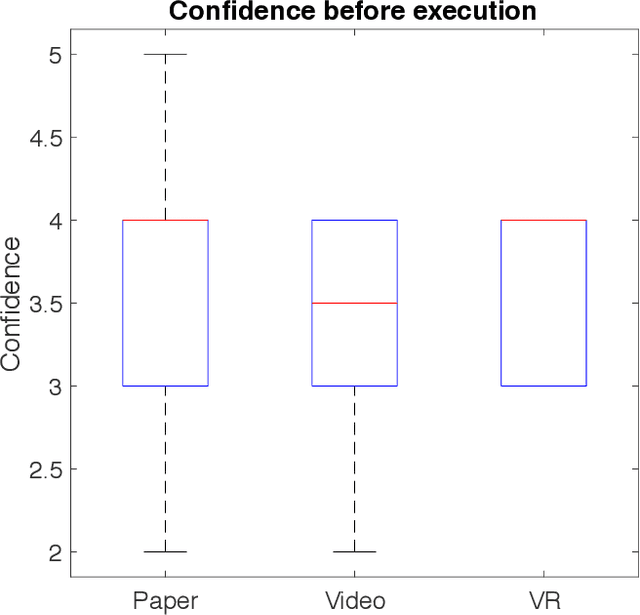

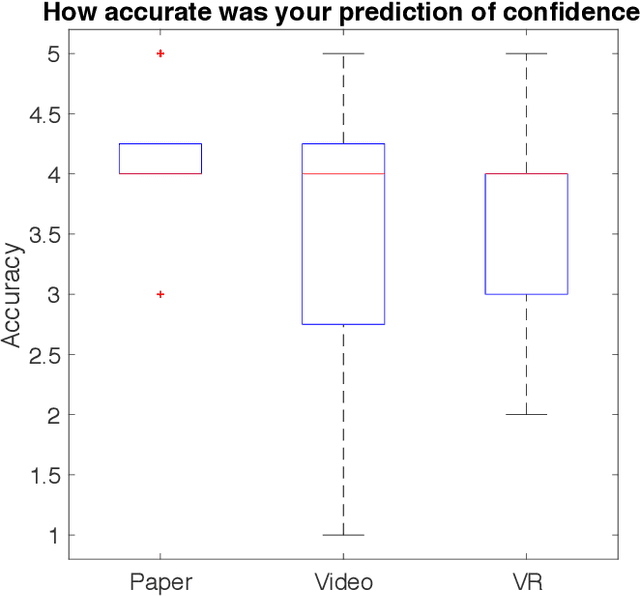

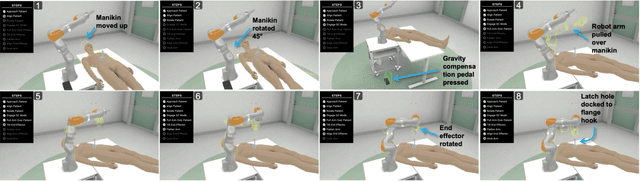

On the Effectiveness of Virtual Reality-based Training for Robotic Setup

Mar 03, 2020

Abstract: Virtual Reality (VR) is rapidly increasing in popularity as a teaching tool. It allows for the creation of a highly immersive, three-dimensional virtual environment intended to simulate real-life environments. As robots saturate industries from manufacturing to healthcare, there is a need to train end users to set up, operate, tear down, and troubleshoot them. Even though VR has become widely used to train surgeons in the psychomotor skills associated with operating the robot, little research has examined whether these benefits translate to teaching the bedside staff tasked with supporting the robot throughout the end-to-end surgical procedure. We trained 30 participants to set up a robotic arm in an environment mimicking a clinical setup. We divided the participants equally into three groups, trained with paper-based, video-based, and VR-based instructions, respectively, and then compared the three training methods. VR-based and paper-based training were strongly favored over video-based training. VR-trained participants achieved slightly higher fidelity of individual robotic joint angles, suggesting better comprehension of the spatial awareness skills necessary to achieve the desired arm positioning. In addition, VR resulted in higher reproducibility of setup fidelity and more consistent user confidence levels compared with paper-based and video-based training.

A Comparative Analysis of Virtual Reality Head-Mounted Display Systems

Dec 05, 2019

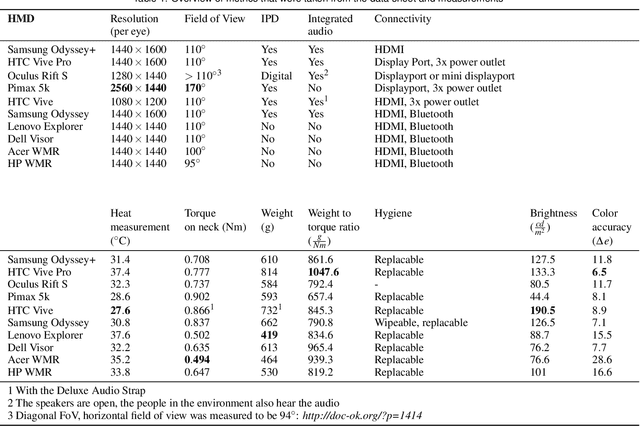

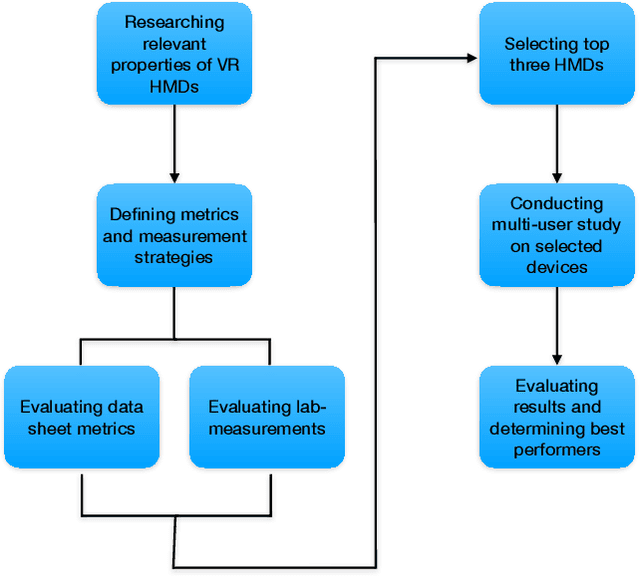

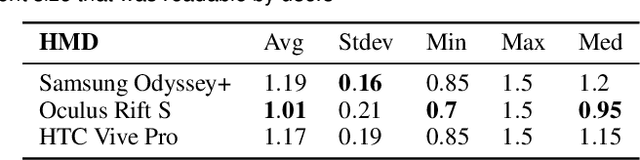

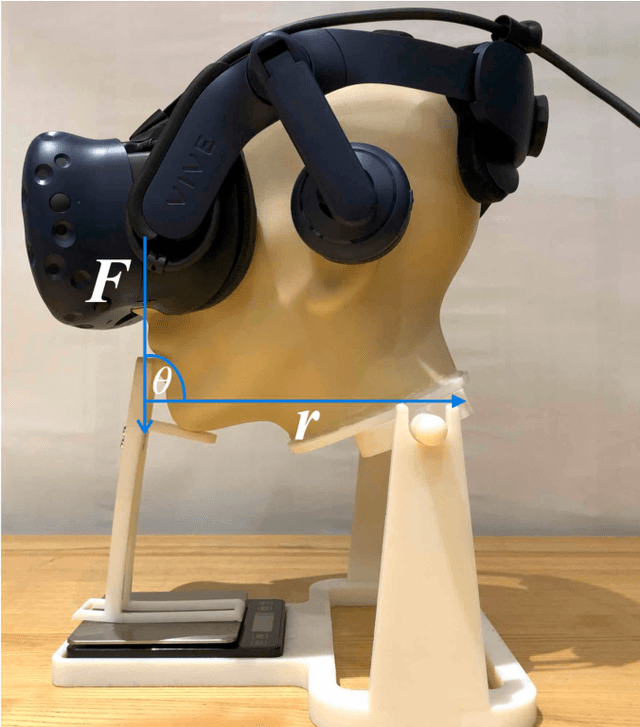

Abstract: With recent advances in Virtual Reality (VR) technology, the deployment of such systems will increase dramatically in non-entertainment environments, such as professional education and training, manufacturing, service, or low-frequency/high-risk scenarios. Clinical education especially stands to benefit from VR technology due to its complexity, high cost, and difficult logistics. The effectiveness of deployed VR systems depends on factors that are not necessarily considered for devices targeting the entertainment market. In this work, we systematically compare a wide range of VR head-mounted display (HMD) technologies and designs by defining a new set of metrics that are 1) relevant to most generic VR solutions and 2) of paramount importance for VR-based education and training. We evaluated ten HMDs on various criteria, including neck strain, heat development, and color accuracy. Other metrics, such as text readability, comfort, and contrast perception, were evaluated in a multi-user study on three selected HMDs, namely the Oculus Rift S, HTC Vive Pro, and Samsung Odyssey+. Results indicate that the HTC Vive Pro performs best with regard to comfort, display quality, and compatibility with glasses.

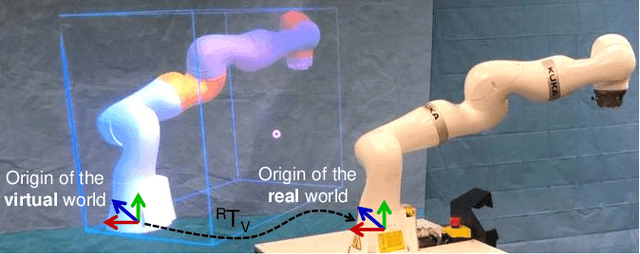

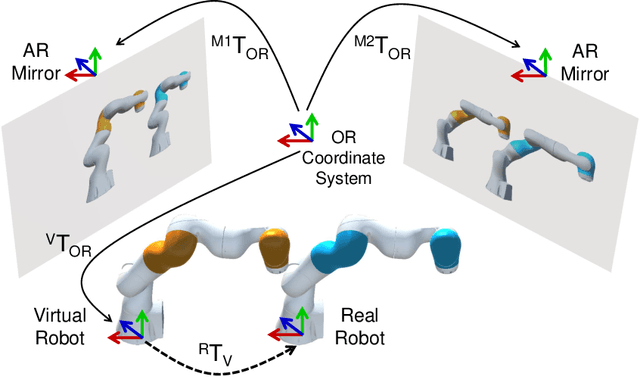

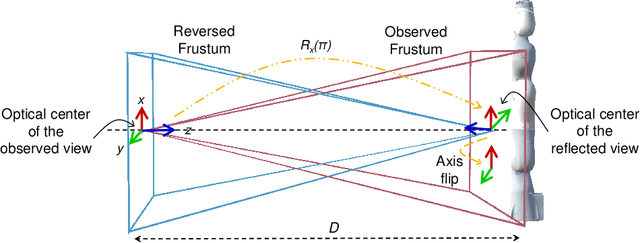

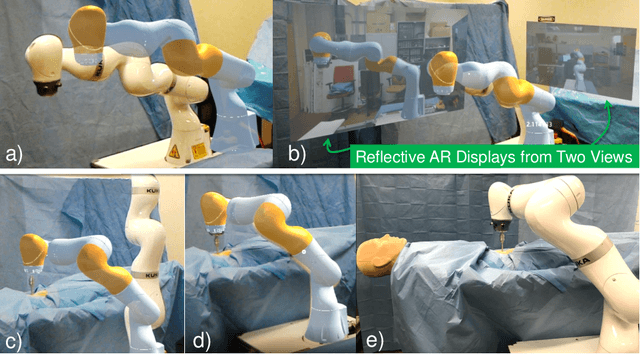

Reflective-AR Display: An Interaction Methodology for Virtual-Real Alignment in Medical Robotics

Jul 23, 2019

Abstract: Robot-assisted minimally invasive surgery has been shown to improve patient outcomes and to reduce complications and recovery time for several clinical applications. However, increasingly configurable robotic arms require careful setup by surgical staff to maximize anatomical reach and avoid collisions. Furthermore, safety regulations prevent robotic arms from being driven automatically to this optimal position. We propose a Head-Mounted Display (HMD) based augmented reality (AR) guidance system for optimal surgical arm setup, in which staff wearing the HMD align the robot with its planned virtual counterpart. The main challenge, however, is the perspective ambiguity that hinders such collaborative robotic solutions. To overcome this challenge, we introduce a novel registration concept for intuitive alignment of such AR content, providing a multi-view AR experience via reflective-AR displays that show the augmentations from multiple viewpoints. Using this system, operators can visualize different perspectives simultaneously while actively adjusting the pose to determine the registration transformation that most closely superimposes the virtual robot onto the real one. The experimental results demonstrate improved interactive alignment of virtual and real robots when using a reflective-AR display. We also present measurements from configuring a robotic manipulator in a simulated trocar placement surgery using the AR guidance methodology.
