Golchehr Amirkhani

Uncertainty-Aware Shape Estimation of a Surgical Continuum Manipulator in Constrained Environments using Fiber Bragg Grating Sensors

May 11, 2024

Abstract: Continuum Dexterous Manipulators (CDMs) are well-suited tools for minimally invasive surgery due to their inherent dexterity and reachability. Nonetheless, their flexible structure and non-linear curvature pose significant challenges for shape-based feedback control. The use of Fiber Bragg Grating (FBG) sensors for shape sensing has shown great potential in estimating the CDM's tip position and subsequently reconstructing the shape using optimization algorithms. This optimization, however, is under-constrained and may be ill-posed for complex shapes, falling into local minima. In this work, we introduce a novel method capable of directly estimating a CDM's shape from FBG sensor wavelengths using a deep neural network. In addition, we propose the integration of uncertainty estimation to address the critical issue of uncertainty in neural network predictions. Neural network predictions are unreliable when the input sample is outside the training distribution or corrupted by noise. Recognizing such deviations is crucial when integrating neural networks within surgical robotics, as inaccurate estimations can pose serious risks to the patient. We present a robust method that not only improves upon the precision of existing techniques for FBG-based shape estimation but also incorporates a mechanism to quantify the model's confidence through uncertainty estimation. We validate the uncertainty estimation through extensive experiments, demonstrating its effectiveness and reliability on out-of-distribution (OOD) data, adding a layer of safety and precision to minimally invasive surgical robotics.

Bimanual Manipulation of Steady Hand Eye Robots with Adaptive Sclera Force Control: Cooperative vs. Teleoperation Strategies

Feb 28, 2024

Abstract: Performing intricate eye microsurgery, such as retinal vein cannulation (RVC), a potential treatment for retinal vein occlusion (RVO), is very challenging to do safely without the assistance of a surgical robotic system. The main limitation is the surgeon's physiological hand tremor. Robot-assisted eye surgery technology may resolve the problems of hand tremor and fatigue and improve the safety and precision of RVC. The Steady-Hand Eye Robot (SHER) is an admittance-based robotic system that can filter out hand tremor and enables ophthalmologists to manipulate a surgical instrument inside the eye cooperatively. However, the admittance-based cooperative control mode does not address crucial safety considerations, such as minimizing the contact force between the surgical instrument and the sclera surface to prevent tissue damage. An adaptive sclera force control algorithm was proposed to address this limitation using an FBG-based force-sensing tool to measure and minimize the tool-sclera interaction force. Additionally, features like haptic feedback or hand motion scaling, which can improve the safety and precision of surgery, require a teleoperation control framework. We implemented a bimanual adaptive teleoperation (BMAT) control mode using SHER 2.0 and SHER 2.1 and compared its performance with a bimanual adaptive cooperative (BMAC) mode. Both BMAT and BMAC modes were tested in sitting and standing postures during a vessel-following experiment under a surgical microscope. It is shown, for the first time to the best of our knowledge in robot-assisted retinal surgery, that integrating the adaptive sclera force control algorithm with the bimanual teleoperation framework enables surgeons to safely perform bimanual telemanipulation of the eye without over-stretching it, even in the absence of registration between the two robots.

Cooperative vs. Teleoperation Control of the Steady Hand Eye Robot with Adaptive Sclera Force Control: A Comparative Study

Dec 04, 2023

Abstract: A surgeon's physiological hand tremor can significantly impact the outcome of delicate and precise retinal surgery, such as retinal vein cannulation (RVC) and epiretinal membrane peeling. Robot-assisted eye surgery technology provides ophthalmologists with advanced capabilities such as hand tremor cancellation, hand motion scaling, and safety constraints that enable them to perform these otherwise challenging and high-risk surgeries with high precision and safety. The Steady-Hand Eye Robot (SHER) in cooperative control mode can filter out the surgeon's hand tremor. However, another important safety feature, minimizing the contact force between the surgical instrument and the sclera surface to avoid tissue damage, cannot be provided in this control mode. Other capabilities, such as hand motion scaling and haptic feedback, also require a teleoperation control framework. In this work, for the first time, we implemented a teleoperation control mode incorporating an adaptive sclera force control algorithm, using a PHANTOM Omni haptic device and a force-sensing surgical instrument equipped with Fiber Bragg Grating (FBG) sensors attached to the SHER 2.1 end-effector. This adaptive sclera force control algorithm allows the robot to dynamically minimize the tool-sclera contact force. Moreover, for the first time, we compared the performance of the proposed adaptive teleoperation mode with the cooperative mode by conducting a vessel-following experiment inside an eye phantom under a microscope.

Using an Improved Output Feedback MPC Approach for Developing a Haptic Virtual Training System

Mar 12, 2023

Abstract: Haptic training simulators generally consist of three major components, namely a human operator, a haptic interface, and a virtual environment. Appropriate dynamic modeling of each of these components can have far-reaching implications for improving the whole system's performance in terms of transparency, similarity to the real environment, and stability. In this paper, we developed a virtual-based haptic training simulator for Endoscopic Sinus Surgery (ESS) by dynamically characterizing the phenomenological sinus tissue fracture in the virtual environment, using an input-constrained linear parametric variable model. A parallel robot manipulator equipped with a calibrated force sensor is employed as the haptic interface. A lumped five-parameter single-degree-of-freedom mass-stiffness-damping impedance model is assigned to the operator's arm dynamics. A robust online output feedback quasi-min-max model predictive control (MPC) framework is proposed to stabilize the system during switching between the piecewise linear dynamics of the virtual environment. The simulations and the experimental results demonstrate the effectiveness of the proposed control algorithm in terms of robustness and convergence to the desired impedance quantities.

Design and Fabrication of a Fiber Bragg Grating Shape Sensor for Shape Reconstruction of a Continuum Manipulator

Mar 07, 2023

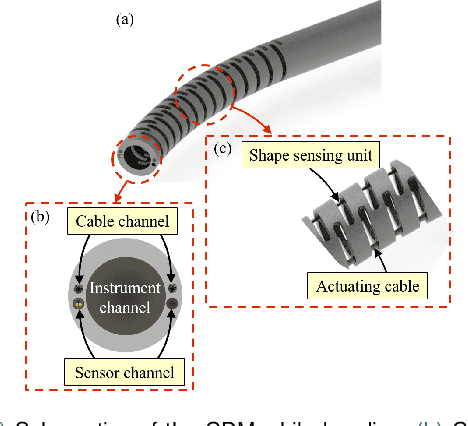

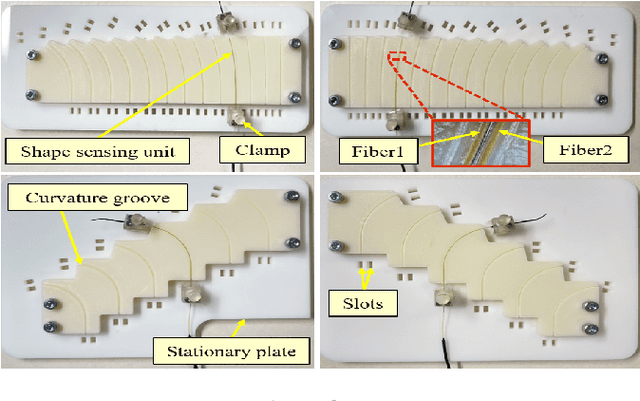

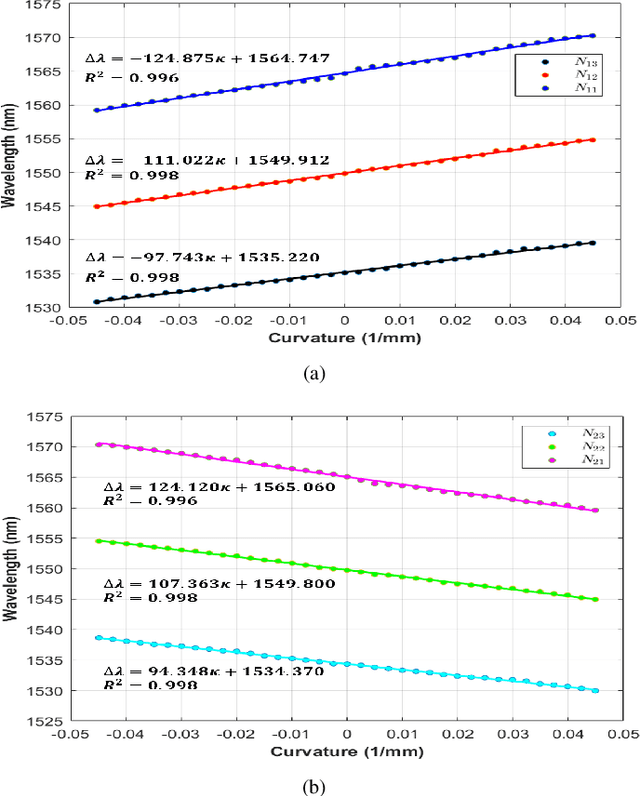

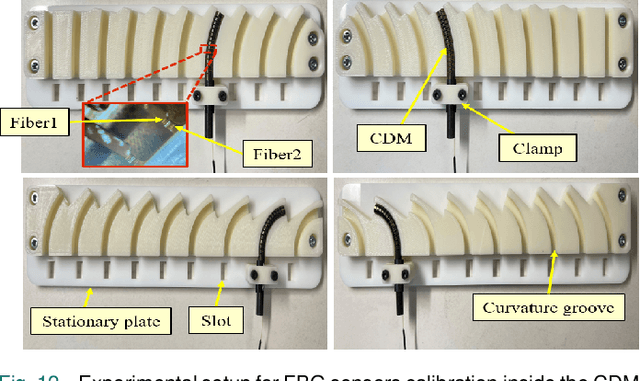

Abstract: Continuum dexterous manipulators (CDMs) are suitable for performing tasks in a constrained environment due to their high dexterity and maneuverability. Despite the inherent advantages of CDMs in minimally invasive surgery, real-time control of a CDM's shape during non-constant curvature bending is still challenging. This study presents a novel approach for the design and fabrication of a large-deflection fiber Bragg grating (FBG) shape sensor embedded within the lumens inside the walls of a CDM with a large instrument channel. The shape sensor consisted of two fibers, each with three FBG nodes. A shape-sensing model was introduced to reconstruct the centerline of the CDM based on FBG wavelengths. Different experiments, including shape sensor tests and CDM shape reconstruction tests, were conducted to assess the overall accuracy of the shape sensing. The FBG sensor evaluation results revealed a linear curvature-wavelength relationship with a large curvature detection of 0.045 mm at a 90-degree bending angle and a sensitivity of up to 5.50 nm/mm in each bending direction. The CDM's shape reconstruction experiments in a free environment demonstrated a shape tracking accuracy of 0.216 ± 0.126 mm for positive/negative deflections. The CDM shape reconstruction error for three cases of bending with obstacles was observed to be 0.436 ± 0.370 mm for the proximal case, 0.485 ± 0.418 mm for the middle case, and 0.312 ± 0.261 mm for the distal case. This study indicates the adequate performance of the FBG sensor and the effectiveness of the model for tracking the shape of the large-deflection CDM with non-constant curvature bending for minimally invasive orthopaedic applications.
