Mojtaba Esfandiari

A Deep Learning-Driven Autonomous System for Retinal Vein Cannulation: Validation Using a Chicken Embryo Model

Jul 29, 2025

Abstract: Retinal vein cannulation (RVC) is a minimally invasive microsurgical procedure for treating retinal vein occlusion (RVO), a leading cause of vision impairment. However, the small size and fragility of retinal veins, coupled with the need for high-precision, tremor-free needle manipulation, create significant technical challenges. These limitations highlight the need for robotic assistance to improve accuracy and stability. This study presents an automated robotic system with a top-down microscope and B-scan optical coherence tomography (OCT) imaging for precise depth sensing. Deep learning-based models enable real-time needle navigation, contact detection, and vein puncture recognition, using a chicken embryo model as a surrogate for human retinal veins. The system autonomously detects needle position and puncture events with 85% accuracy. The experiments demonstrate notable reductions in navigation and puncture times compared to manual methods. Our results demonstrate the potential of integrating advanced imaging and deep learning to automate microsurgical tasks, providing a pathway for safer and more reliable RVC procedures with enhanced precision and reproducibility.

An Extended Generalized Prandtl-Ishlinskii Hysteresis Model for I2RIS Robot

Apr 16, 2025

Abstract: Retinal surgery requires extreme precision due to constrained anatomical spaces in the human retina. To help surgeons achieve this level of accuracy, the Improved Integrated Robotic Intraocular Snake (I2RIS) with dexterous capability has been developed. However, such flexible tendon-driven robots often suffer from hysteresis problems, which significantly challenge precise control and positioning. In particular, we observed multi-stage hysteresis phenomena in the small-scale I2RIS. In this paper, we propose an Extended Generalized Prandtl-Ishlinskii (EGPI) model to increase the fitting accuracy of the hysteresis. The model incorporates a novel switching mechanism that enables it to describe multi-stage hysteresis in the regions of monotonic input. Experimental validation on I2RIS data demonstrates that the EGPI model outperforms the conventional Generalized Prandtl-Ishlinskii (GPI) model in terms of RMSE, NRMSE, and MAE across multiple motor input directions. The EGPI model in our study highlights the potential in modeling multi-stage hysteresis in minimally invasive flexible robots.
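
For readers unfamiliar with the three fitting metrics named in the abstract above, the following is a minimal sketch of how RMSE, NRMSE, and MAE are commonly computed between a measured signal and a model prediction. The function names are illustrative, and normalizing NRMSE by the range of the measured signal is an assumption: the abstract does not state which normalization the authors use.

```python
import math


def rmse(measured, predicted):
    """Root-mean-square error between two equal-length sequences."""
    squared = [(p - m) ** 2 for m, p in zip(measured, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def nrmse(measured, predicted):
    """RMSE divided by the range of the measured signal.

    Assumption: range normalization. Other conventions (mean or
    standard deviation of the measured signal) are also in use.
    """
    span = max(measured) - min(measured)
    return rmse(measured, predicted) / span


def mae(measured, predicted):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - m) for m, p in zip(measured, predicted)) / len(measured)
```

Lower values of all three indicate a closer fit. RMSE penalizes large deviations more heavily than MAE, so reporting both gives a rough sense of whether the error is spread evenly or concentrated in a few points.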

Deep Learning-Enhanced Robotic Subretinal Injection with Real-Time Retinal Motion Compensation

Apr 04, 2025

Abstract: Subretinal injection is a critical procedure for delivering therapeutic agents to treat retinal diseases such as age-related macular degeneration (AMD). However, retinal motion caused by physiological factors such as respiration and heartbeat significantly impacts precise needle positioning, increasing the risk of retinal pigment epithelium (RPE) damage. This paper presents a fully autonomous robotic subretinal injection system that integrates intraoperative optical coherence tomography (iOCT) imaging and deep learning-based motion prediction to synchronize needle motion with retinal displacement. A Long Short-Term Memory (LSTM) neural network is used to predict internal limiting membrane (ILM) motion, outperforming a Fast Fourier Transform (FFT)-based baseline model. Additionally, a real-time registration framework aligns the needle tip position with the robot's coordinate frame. Then, a dynamic proportional speed control strategy ensures smooth and adaptive needle insertion. Experimental validation in both simulation and ex vivo open-sky porcine eyes demonstrates precise motion synchronization and successful subretinal injections. The experiment achieves a mean tracking error below 16.4 µm in pre-insertion phases. These results show the potential of AI-driven robotic assistance to improve the safety and accuracy of retinal microsurgery.

Model Predictive Path Integral Control of I2RIS Robot Using RBF Identifier and Extended Kalman Filter

Mar 18, 2025

Abstract: Modeling and controlling cable-driven snake robots is a challenging problem due to nonlinear mechanical properties such as hysteresis, variable stiffness, and unknown friction between the actuation cables and the robot body. This challenge is more significant for snake robots in ophthalmic surgery applications, such as the Improved Integrated Robotic Intraocular Snake (I2RIS), given its small size and lack of embedded sensory feedback. Data-driven models take advantage of global function approximations, reducing the difficulty and computational cost associated with complicated analytical models. However, their performance might deteriorate on new data unseen in the training phase. Therefore, adding an adaptation mechanism might improve these models' performance during snake robots' interactions with unknown environments. In this work, we applied a model predictive path integral (MPPI) controller on a data-driven model of the I2RIS based on the Gaussian mixture model (GMM) and Gaussian mixture regression (GMR). To analyze the performance of the MPPI in unseen robot-tissue interaction situations, unknown external disturbances and environmental loads are simulated and added to the GMM-GMR model. These uncertainties of the robot model are then identified online using a radial basis function (RBF) whose weights are updated using an extended Kalman filter (EKF). Simulation results demonstrated the robustness of the optimal control solutions of the MPPI algorithm and its computational superiority over a conventional model predictive control (MPC) algorithm.

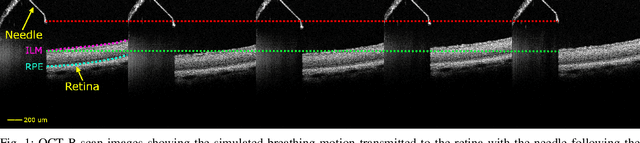

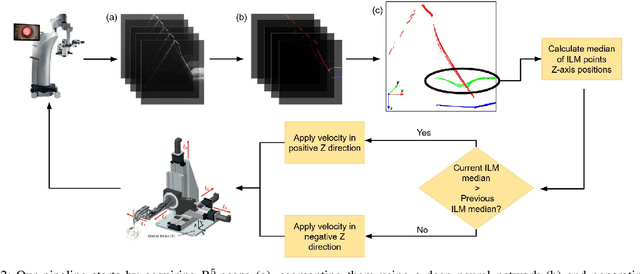

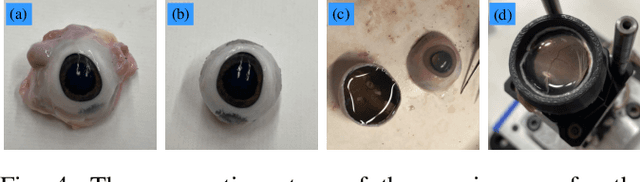

Towards Motion Compensation in Autonomous Robotic Subretinal Injections

Nov 27, 2024

Abstract: Exudative (wet) age-related macular degeneration (AMD) is a leading cause of vision loss in older adults, typically treated with intravitreal injections. Emerging therapies, such as subretinal injections of stem cells, gene therapy, small molecules, or RPE cells, require precise delivery to avoid damaging delicate retinal structures. Autonomous robotic systems can potentially offer the necessary precision for these procedures. This paper presents a novel approach for motion compensation in robotic subretinal injections, utilizing real-time Optical Coherence Tomography (OCT). The proposed method leverages B5-scans, a rapid acquisition of small-volume OCT data, for dynamic tracking of retinal motion along the Z-axis, compensating for physiological movements such as breathing and heartbeat. Validation experiments on ex vivo porcine eyes revealed challenges in maintaining a consistent tool-to-retina distance, with deviations of up to 200 µm for 100 µm amplitude motions and over 80 µm for 25 µm amplitude motions over one minute. Subretinal injections faced additional difficulties, with horizontal shifts causing the needle to move off-target and inject into the vitreous. These results highlight the need for improved motion prediction and horizontal stability to enhance the accuracy and safety of robotic subretinal procedures.

Real-time Deformation-aware Control for Autonomous Robotic Subretinal Injection under iOCT Guidance

Nov 10, 2024

Abstract: Robotic platforms provide repeatable and precise tool positioning that significantly enhances retinal microsurgery. Integration of such systems with intraoperative optical coherence tomography (iOCT) enables image-guided robotic interventions, allowing advanced treatments, such as injecting therapeutic agents into the subretinal space, to be performed autonomously. Yet, tissue deformations due to tool-tissue interactions are a major challenge in autonomous iOCT-guided robotic subretinal injection, impacting correct needle positioning and, thus, the outcome of the procedure. This paper presents a novel method for autonomous subretinal injection under iOCT guidance that considers tissue deformations during the insertion procedure. This is achieved through real-time segmentation and 3D reconstruction of the surgical scene from densely sampled iOCT B-scans, which we refer to as B5-scans, to monitor the position of the instrument relative to a virtual target layer defined at a relative position between the ILM and RPE. Our experiments on ex-vivo porcine eyes demonstrate dynamic adjustment of the insertion depth and overall improved accuracy in needle positioning compared to previous autonomous insertion approaches. Compared to a 35% success rate in subretinal bleb generation with previous approaches, our proposed method reliably and robustly created subretinal blebs in all our experiments.

Bimanual Manipulation of Steady Hand Eye Robots with Adaptive Sclera Force Control: Cooperative vs. Teleoperation Strategies

Feb 28, 2024

Abstract: Performing intricate eye microsurgery, such as retinal vein cannulation (RVC), as a potential treatment for retinal vein occlusion (RVO), without the assistance of a surgical robotic system is very challenging to do safely. The main limitation has to do with the physiological hand tremor of surgeons. Robot-assisted eye surgery technology may resolve the problems of hand tremors and fatigue and improve the safety and precision of RVC. The Steady-Hand Eye Robot (SHER) is an admittance-based robotic system that can filter out hand tremors and enables ophthalmologists to manipulate a surgical instrument inside the eye cooperatively. However, the admittance-based cooperative control mode does not address crucial safety considerations, such as minimizing contact force between the surgical instrument and the sclera surface to prevent tissue damage. An adaptive sclera force control algorithm was proposed to address this limitation using an FBG-based force-sensing tool to measure and minimize the tool-sclera interaction force. Additionally, features like haptic feedback or hand motion scaling, which can improve the safety and precision of surgery, require a teleoperation control framework. We implemented a bimanual adaptive teleoperation (BMAT) control mode using SHER 2.0 and SHER 2.1 and compared its performance with a bimanual adaptive cooperative (BMAC) mode. Both BMAT and BMAC modes were tested in sitting and standing postures during a vessel-following experiment under a surgical microscope. It is shown, for the first time to the best of our knowledge in robot-assisted retinal surgery, that integrating the adaptive sclera force control algorithm with the bimanual teleoperation framework enables surgeons to safely perform bimanual telemanipulation of the eye without over-stretching it, even in the absence of registration between the two robots.

Cooperative vs. Teleoperation Control of the Steady Hand Eye Robot with Adaptive Sclera Force Control: A Comparative Study

Dec 04, 2023

Abstract: A surgeon's physiological hand tremor can significantly impact the outcome of delicate and precise retinal surgery, such as retinal vein cannulation (RVC) and epiretinal membrane peeling. Robot-assisted eye surgery technology provides ophthalmologists with advanced capabilities such as hand tremor cancellation, hand motion scaling, and safety constraints that enable them to perform these otherwise challenging and high-risk surgeries with high precision and safety. The Steady-Hand Eye Robot (SHER) with cooperative control mode can filter out the surgeon's hand tremor. However, another important safety feature, namely minimizing the contact force between the surgical instrument and the sclera surface to avoid tissue damage, cannot be met in this control mode. Also, other capabilities, such as hand motion scaling and haptic feedback, require a teleoperation control framework. In this work, for the first time, we implemented a teleoperation control mode incorporated with an adaptive sclera force control algorithm using a PHANTOM Omni haptic device and a force-sensing surgical instrument equipped with Fiber Bragg Grating (FBG) sensors attached to the SHER 2.1 end-effector. This adaptive sclera force control algorithm allows the robot to dynamically minimize the tool-sclera contact force. Moreover, for the first time, we compared the performance of the proposed adaptive teleoperation mode with the cooperative mode by conducting a vessel-following experiment inside an eye phantom under a microscope.

Human-Robot Interaction in Retinal Surgery: A Comparative Study of Serial and Parallel Cooperative Robots

Apr 01, 2023

Abstract: Cooperative robots for intraocular surgery allow surgeons to perform vitreoretinal surgery with high precision and stability. Several robot structural designs have shown capabilities to perform these surgeries. This research investigates the comparative performance of a serial and parallel cooperative-controlled robot in completing a retinal vessel-following task, with a focus on human-robot interaction performance and user experience. Our results indicate that despite differences in robot structure and interaction forces and torques, the two robots exhibited similar levels of performance in terms of general robot-to-patient interaction and average operating time. These findings have implications for the development and implementation of surgical robotics, suggesting that both serial and parallel cooperative-controlled robots can be effective for vitreoretinal surgery tasks.

Using an Improved Output Feedback MPC Approach for Developing a Haptic Virtual Training System

Mar 12, 2023

Abstract: Haptic training simulators generally consist of three major components, namely a human operator, a haptic interface, and a virtual environment. Appropriate dynamic modeling of each of these components can have far-reaching implications for improving the whole system's performance in terms of transparency, similarity to the real environment, and stability. In this paper, we developed a virtual-based haptic training simulator for Endoscopic Sinus Surgery (ESS) by doing a dynamic characterization of the phenomenological sinus tissue fracture in the virtual environment, using an input-constrained linear parametric variable model. A parallel robot manipulator equipped with a calibrated force sensor is employed as a haptic interface. A lumped five-parameter single-degree-of-freedom mass-stiffness-damping impedance model is assigned to the operator's arm dynamics. A robust online output feedback quasi-min-max model predictive control (MPC) framework is proposed to stabilize the system during the switching between the piecewise linear dynamics of the virtual environment. The simulations and the experimental results demonstrate the effectiveness of the proposed control algorithm in terms of robustness and convergence to the desired impedance quantities.
