Muhammad Hadi

Cooperative vs. Teleoperation Control of the Steady Hand Eye Robot with Adaptive Sclera Force Control: A Comparative Study

Dec 04, 2023

Abstract:A surgeon's physiological hand tremor can significantly impact the outcome of delicate and precise retinal surgery, such as retinal vein cannulation (RVC) and epiretinal membrane peeling. Robot-assisted eye surgery technology provides ophthalmologists with advanced capabilities such as hand tremor cancellation, hand motion scaling, and safety constraints that enable them to perform these otherwise challenging and high-risk surgeries with high precision and safety. The Steady-Hand Eye Robot (SHER) in cooperative control mode can filter out the surgeon's hand tremor, but another important safety feature, minimizing the contact force between the surgical instrument and the sclera surface to avoid tissue damage, cannot be provided in this control mode. Other capabilities, such as hand motion scaling and haptic feedback, also require a teleoperation control framework. In this work, for the first time, we implemented a teleoperation control mode incorporating an adaptive sclera force control algorithm, using a PHANTOM Omni haptic device and a force-sensing surgical instrument equipped with Fiber Bragg Grating (FBG) sensors attached to the SHER 2.1 end-effector. This adaptive sclera force control algorithm allows the robot to dynamically minimize the tool-sclera contact force. Moreover, for the first time, we compared the performance of the proposed adaptive teleoperation mode with the cooperative mode by conducting a vessel-following experiment inside an eye phantom under a microscope.

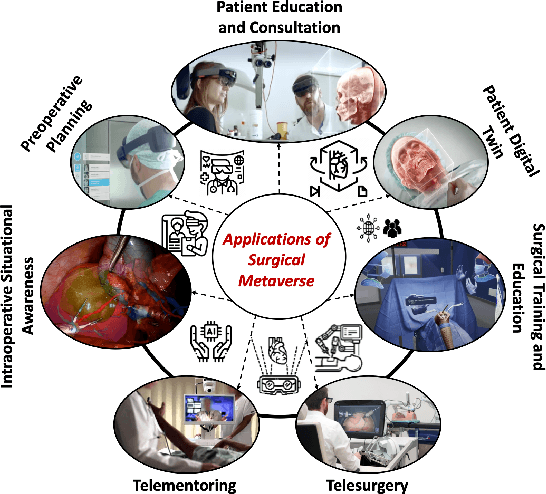

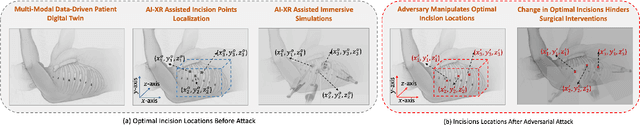

Can We Revitalize Interventional Healthcare with AI-XR Surgical Metaverses?

Mar 25, 2023

Abstract:Recent advancements in technology, particularly in machine learning (ML), deep learning (DL), and the metaverse, offer great potential for revolutionizing surgical science. The combination of artificial intelligence and extended reality (AI-XR) technologies has the potential to create a surgical metaverse, a virtual environment where surgeries can be planned and performed. This paper aims to provide insight into the various potential applications of an AI-XR surgical metaverse and the challenges that must be addressed to bring its full potential to fruition. It is important for the community to focus on these challenges to fully realize the potential of AI-XR surgical metaverses. Furthermore, to emphasize the need for secure and robust AI-XR surgical metaverses and to demonstrate the real-world implications of security threats to them, we present a case study in which an "immersive surgical attack" on incision point localization is performed in the context of preoperative planning in a surgical metaverse.

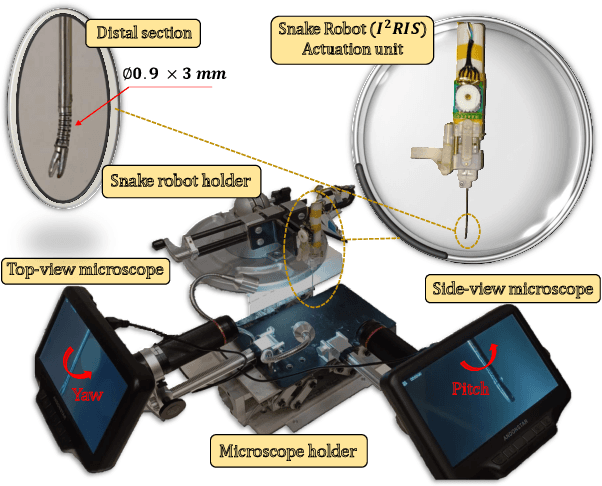

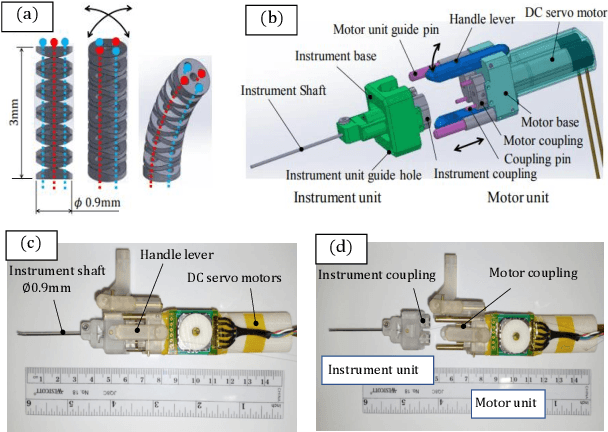

A Data-Driven Model with Hysteresis Compensation for I2RIS Robot

Mar 10, 2023

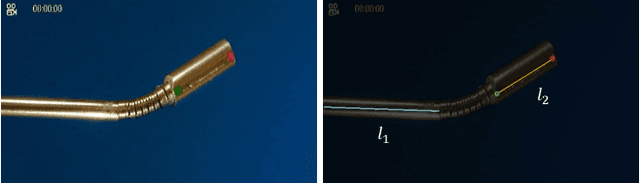

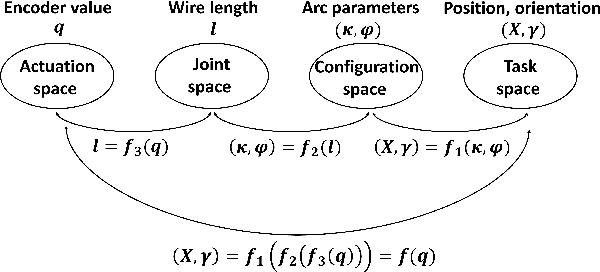

Abstract:Retinal microsurgery is a high-precision surgery performed on an exceedingly delicate tissue. It now requires extensively trained and highly skilled surgeons. Given the restricted range of instrument motion in the confined intraocular space, and the additional restriction imposed by instrument contact with the sclera, snake-like robots may prove to be a promising technology to provide surgeons with greater flexibility, dexterity, space access, and positioning accuracy during retinal procedures requiring high precision and advantageous tooltip approach angles, such as retinal vein cannulation and epiretinal membrane peeling. Kinematics modeling of these robots is an essential step toward accurate position control. However, as opposed to conventional manipulators, modeling of these robots does not follow a straightforward method due to their complex mechanical structure and actuation mechanisms. In wire-driven snake-like robots especially, the hysteresis problem due to the wire tension condition can have a significant impact on positioning accuracy. In this paper, we propose an experimental kinematics model with a hysteresis compensation algorithm using the probabilistic Gaussian mixture model (GMM) and Gaussian mixture regression (GMR) approach. Experimental results on the two-degree-of-freedom (DOF) integrated robotic intraocular snake (I2RIS) show that the proposed model provides 0.4 deg accuracy, which is an overall improvement of 60% and 70% for the yaw and pitch degrees of freedom, respectively, compared to a previous model of this robot.
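As a rough illustration of the Gaussian mixture regression step mentioned in the abstract above, the sketch below computes the conditional mean E[y | x] from an already-fitted one-input, one-output mixture. It is not the authors' model: the function names, the dictionary layout, and the idea of mapping a scalar actuator input `x` to a scalar bending angle `y` are assumptions made here for illustration, and the abstract does not specify the paper's actual inputs, outputs, or number of components.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D normal distribution with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def gmr_predict(x, components):
    """Gaussian mixture regression: conditional mean E[y | x].

    `components` is a hypothetical list of dicts, one per mixture component,
    with keys: weight, mean_x, mean_y, var_x, cov_xy (joint Gaussian over x, y).
    """
    # Responsibility of each component for the query input x (unnormalized).
    resp = [c["weight"] * gaussian_pdf(x, c["mean_x"], c["var_x"]) for c in components]
    total = sum(resp)
    if total == 0.0:
        raise ValueError("query point is too far from every mixture component")

    y = 0.0
    for r, c in zip(resp, components):
        # Conditional mean of y given x under this single Gaussian component.
        cond_mean = c["mean_y"] + c["cov_xy"] / c["var_x"] * (x - c["mean_x"])
        # Blend the per-component predictions by normalized responsibility.
        y += (r / total) * cond_mean
    return y


# Made-up parameters, purely to show the call shape.
example_components = [
    {"weight": 0.5, "mean_x": -1.0, "mean_y": -10.0, "var_x": 1.0, "cov_xy": 0.0},
    {"weight": 0.5, "mean_x": 1.0, "mean_y": 10.0, "var_x": 1.0, "cov_xy": 0.0},
]
predicted_angle = gmr_predict(0.5, example_components)
```

In practice the mixture parameters would first be fitted to recorded input/output data (for example with expectation-maximization); only the regression step is shown here.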
