Hunaid Vohra

TEMSET-24K: Densely Annotated Dataset for Indexing Multipart Endoscopic Videos using Surgical Timeline Segmentation

Feb 10, 2025

Abstract:Indexing endoscopic surgical videos is vital in surgical data science, forming the basis for systematic retrospective analysis and clinical performance evaluation. Despite its significance, current video analytics rely on manual indexing, a time-consuming process. Advances in computer vision, particularly deep learning, offer automation potential, yet progress is limited by the lack of publicly available, densely annotated surgical datasets. To address this, we present TEMSET-24K, an open-source dataset comprising 24,306 trans-anal endoscopic microsurgery (TEMS) video micro-clips. Each clip is meticulously annotated by clinical experts using a novel hierarchical labeling taxonomy encompassing phase, task, and action triplets, capturing intricate surgical workflows. To validate this dataset, we benchmarked deep learning models, including transformer-based architectures. Our in silico evaluation demonstrates high accuracy (up to 0.99) and F1 scores (up to 0.99) for key phases like Setup and Suturing. The STALNet model, tested with ConvNeXt, ViT, and SWIN V2 encoders, consistently segmented well-represented phases. TEMSET-24K provides a critical benchmark, propelling state-of-the-art solutions in surgical data science.

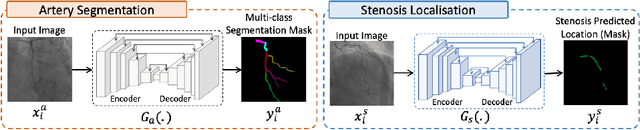

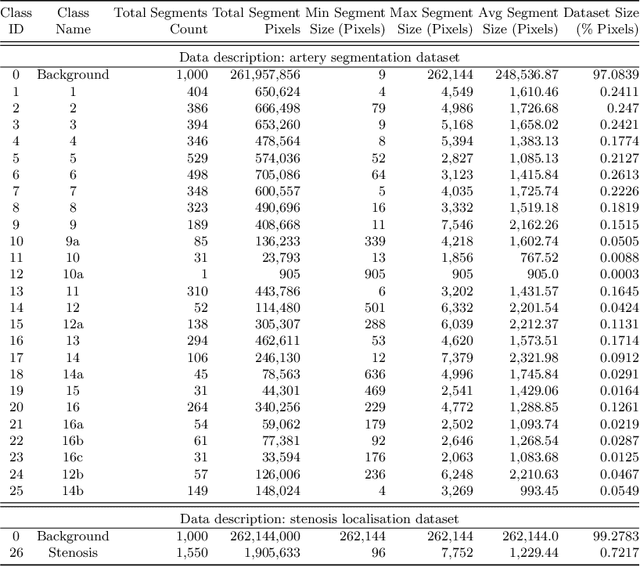

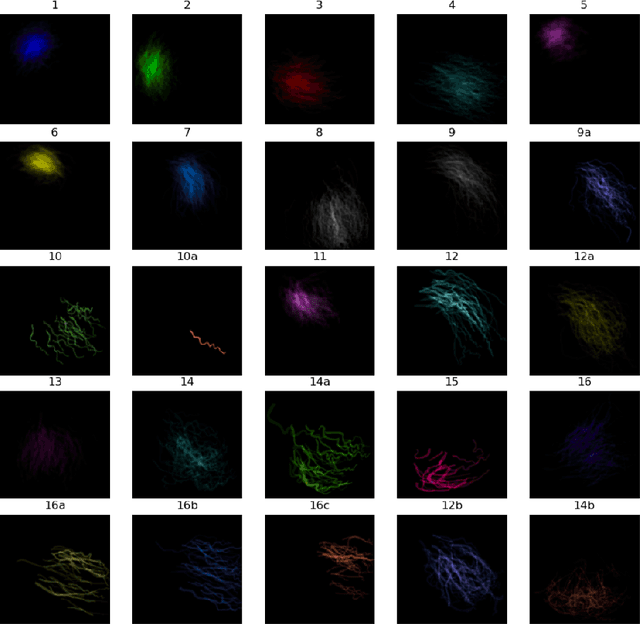

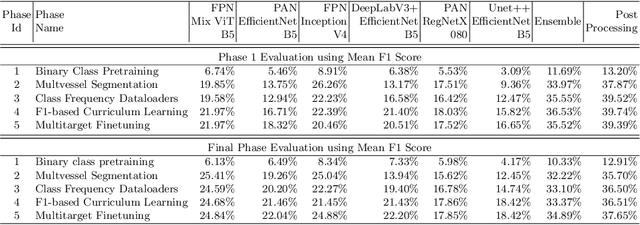

Multivessel Coronary Artery Segmentation and Stenosis Localisation using Ensemble Learning

Oct 27, 2023

Abstract:Coronary angiography analysis is a common clinical task performed by cardiologists to diagnose coronary artery disease (CAD) through an assessment of atherosclerotic plaque accumulation. This study introduces an end-to-end machine learning solution developed for the MICCAI 2023 Automatic Region-based Coronary Artery Disease diagnostics using x-ray angiography imagEs (ARCADE) challenge, which aims to benchmark solutions for multivessel coronary artery segmentation and potential stenotic lesion localisation from X-ray coronary angiograms. We adopted a robust baseline model training strategy to progressively improve performance, comprising five successive stages: binary class pretraining, multivessel segmentation, fine-tuning using class frequency weighted dataloaders, fine-tuning using an F1-based curriculum learning strategy (F1-CLS), and finally multi-target angiogram view classifier-based collective adaptation. Unlike many other medical imaging procedures, this task exhibits a notable degree of interobserver variability. Our ensemble model combines the outputs from six baseline models using a weighted ensembling approach, which our analysis shows doubles the predictive accuracy of the proposed solution. The final prediction was further refined by correcting misclassified blobs. Our solution achieved a mean F1 score of 37.69% for coronary artery segmentation and 39.41% for stenosis localisation, placing our team 5th on both leaderboards. This work demonstrates the potential of automated tools to aid CAD diagnosis, guide interventions, and improve the accuracy of stent injections in clinical settings.

Robust Surgical Tools Detection in Endoscopic Videos with Noisy Data

Jul 03, 2023

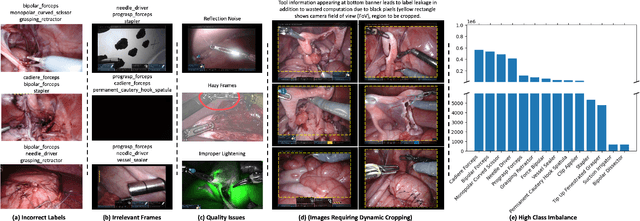

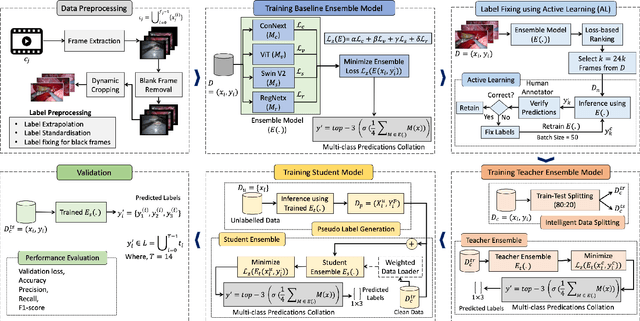

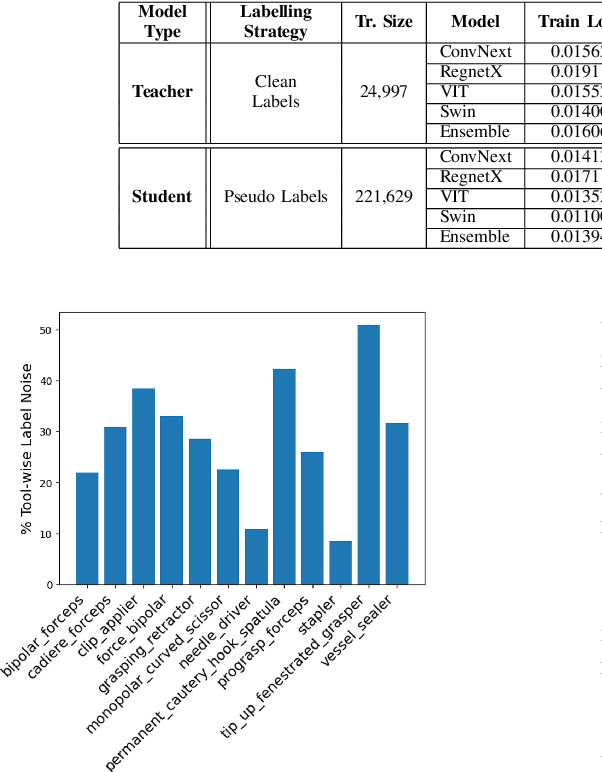

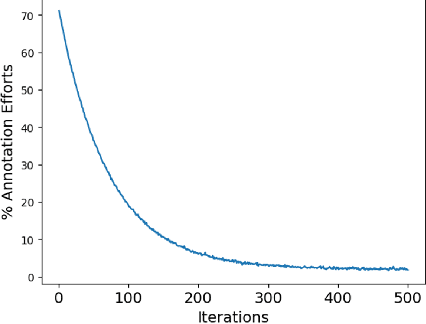

Abstract:Over the past few years, surgical data science has attracted substantial interest from the machine learning (ML) community. Various studies have demonstrated the efficacy of emerging ML techniques in analysing surgical data, particularly recordings of procedures, for digitizing clinical and non-clinical functions like preoperative planning, context-aware decision-making, and operating skill assessment. However, this field is still in its infancy and lacks representative, well-annotated datasets for training robust models in intermediate ML tasks. Moreover, existing datasets suffer from inaccurate labels, hindering the development of reliable models. In this paper, we propose a systematic methodology for developing robust models for surgical tool detection using noisy data. Our methodology introduces two key innovations: (1) an intelligent active learning strategy for minimal dataset identification and label correction by human experts; and (2) an ensembling strategy for a student-teacher model-based self-training framework to achieve the robust classification of 14 surgical tools in a semi-supervised fashion. Furthermore, we employ weighted data loaders to handle difficult class labels and address class imbalance issues. On noisy labels, the proposed methodology achieves an average F1-score of 85.88% for the ensemble model-based self-training with class weights, and 80.88% without class weights. Our proposed method also significantly outperforms existing approaches.

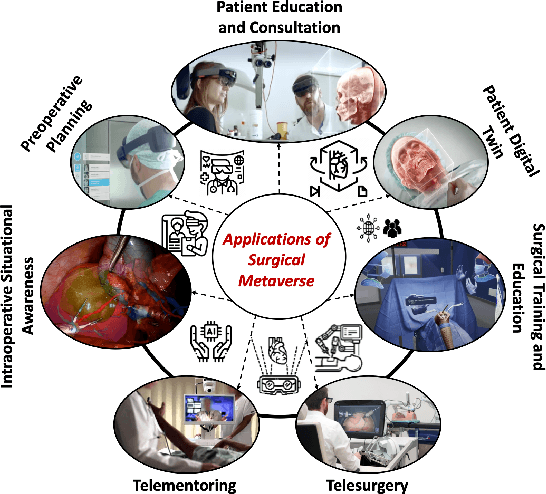

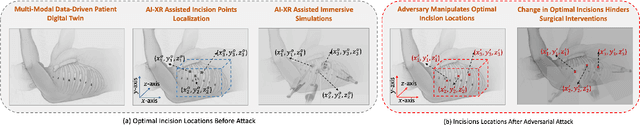

Can We Revitalize Interventional Healthcare with AI-XR Surgical Metaverses?

Mar 25, 2023

Abstract:Recent advancements in technology, particularly in machine learning (ML), deep learning (DL), and the metaverse, offer great potential for revolutionizing surgical science. The combination of artificial intelligence and extended reality (AI-XR) technologies has the potential to create a surgical metaverse, a virtual environment where surgeries can be planned and performed. This paper aims to provide insight into the various potential applications of an AI-XR surgical metaverse and the challenges that must be addressed to bring its full potential to fruition. It is important for the community to focus on these challenges to fully realize the potential of AI-XR surgical metaverses. Furthermore, to emphasize the need for secure and robust AI-XR surgical metaverses and to demonstrate the real-world implications of security threats to them, we present a case study in which an "immersive surgical attack" on incision point localization is performed in the context of preoperative planning in a surgical metaverse.
