Michael Sommersperger

Towards Motion Compensation in Autonomous Robotic Subretinal Injections

Nov 27, 2024

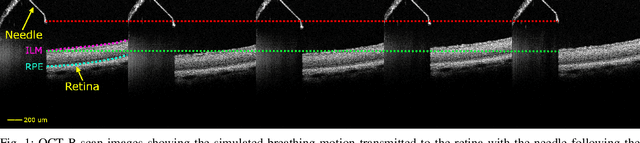

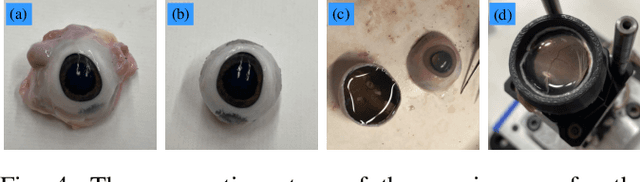

Abstract: Exudative (wet) age-related macular degeneration (AMD) is a leading cause of vision loss in older adults, typically treated with intravitreal injections. Emerging therapies, such as subretinal injections of stem cells, gene therapy, small molecules or RPE cells, require precise delivery to avoid damaging delicate retinal structures. Autonomous robotic systems can potentially offer the necessary precision for these procedures. This paper presents a novel approach for motion compensation in robotic subretinal injections, utilizing real-time Optical Coherence Tomography (OCT). The proposed method leverages B$^{5}$-scans, a rapid acquisition of small-volume OCT data, for dynamic tracking of retinal motion along the Z-axis, compensating for physiological movements such as breathing and heartbeat. Validation experiments on ex vivo porcine eyes revealed challenges in maintaining a consistent tool-to-retina distance, with deviations of up to 200 µm for 100 µm amplitude motions and over 80 µm for 25 µm amplitude motions over one minute. Subretinal injections faced additional difficulties, with horizontal shifts causing the needle to move off-target and inject into the vitreous. These results highlight the need for improved motion prediction and horizontal stability to enhance the accuracy and safety of robotic subretinal procedures.

Real-time Deformation-aware Control for Autonomous Robotic Subretinal Injection under iOCT Guidance

Nov 10, 2024

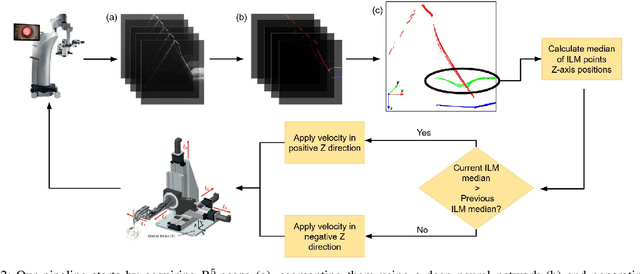

Abstract: Robotic platforms provide repeatable and precise tool positioning that significantly enhances retinal microsurgery. Integration of such systems with intraoperative optical coherence tomography (iOCT) enables image-guided robotic interventions, making it possible to autonomously perform advanced treatments such as injecting therapeutic agents into the subretinal space. Yet, tissue deformations due to tool-tissue interactions are a major challenge in autonomous iOCT-guided robotic subretinal injection, impacting correct needle positioning and, thus, the outcome of the procedure. This paper presents a novel method for autonomous subretinal injection under iOCT guidance that considers tissue deformations during the insertion procedure. This is achieved through real-time segmentation and 3D reconstruction of the surgical scene from densely sampled iOCT B-scans, which we refer to as B5-scans, to monitor the positioning of the instrument relative to a virtual target layer defined at a relative position between the ILM and RPE. Our experiments on ex-vivo porcine eyes demonstrate dynamic adjustment of the insertion depth and overall improved accuracy in needle positioning compared to previous autonomous insertion approaches. Compared to a 35% success rate in subretinal bleb generation with previous approaches, our proposed method reliably and robustly created subretinal blebs in all our experiments.
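The idea of a virtual target layer placed at a relative position between the ILM and RPE can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the convention that 0.0 means the ILM and 1.0 means the RPE, and the use of scalar depths in micrometres along the insertion axis are all assumptions made for illustration.

```python
def virtual_target_depth(ilm_depth_um, rpe_depth_um, relative_position):
    """Depth of a virtual layer placed between ILM and RPE.

    Assumed convention (hypothetical): relative_position = 0.0 is the ILM,
    1.0 is the RPE; depths are in micrometres along the insertion axis.
    """
    if not 0.0 <= relative_position <= 1.0:
        raise ValueError("relative_position must lie in [0, 1]")
    return ilm_depth_um + relative_position * (rpe_depth_um - ilm_depth_um)


def remaining_insertion_um(needle_tip_depth_um, ilm_depth_um, rpe_depth_um,
                           relative_position):
    """Signed distance from the needle tip to the virtual target layer.

    Positive values mean the tip is still above the target.
    """
    target = virtual_target_depth(ilm_depth_um, rpe_depth_um, relative_position)
    return target - needle_tip_depth_um


# Before deformation: ILM at 100 um, RPE at 300 um, target halfway between.
print(remaining_insertion_um(150.0, 100.0, 300.0, 0.5))
# After the tissue is pushed down by 40 um, the target moves with the layers.
print(remaining_insertion_um(150.0, 140.0, 340.0, 0.5))
```

Because the target is defined relative to the segmented layers rather than at a fixed depth, recomputing it for each new segmentation lets the remaining insertion distance follow the tissue as it deforms.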

SpecstatOR: Speckle statistics-based iOCT Segmentation Network for Ophthalmic Surgery

Apr 30, 2024

Abstract: This paper presents an innovative approach to intraoperative Optical Coherence Tomography (iOCT) image segmentation in ophthalmic surgery, leveraging statistical analysis of speckle patterns to incorporate statistical pathology-specific prior knowledge. Our findings indicate statistically different speckle patterns within the retina and between retinal layers and surgical tools, facilitating the segmentation of previously unseen data without the necessity for manual labeling. The research involves fitting various statistical distributions to iOCT data, enabling the differentiation of different ocular structures and surgical tools. The proposed segmentation model aims to refine the statistical findings based on prior tissue understanding to leverage statistical and biological knowledge. Incorporating statistical parameters, physical analysis of light-tissue interaction, and deep learning informed by biological structures enhances segmentation accuracy, offering potential benefits to real-time applications in ophthalmic surgical procedures. The study demonstrates the adaptability and precision of using Gamma distribution parameters and the derived binary maps as sole inputs for segmentation, notably enhancing the model's inference performance on unseen data.
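As a rough illustration of how Gamma distribution parameters could be extracted from speckle intensities, the sketch below uses a method-of-moments estimate over non-overlapping image patches. The abstract does not specify the fitting procedure, so the estimator, the patch size, and the function names `fit_gamma_moments` and `gamma_parameter_maps` are assumptions, and the input here is synthetic data rather than iOCT images.

```python
import numpy as np


def fit_gamma_moments(intensities):
    """Method-of-moments Gamma fit: returns (shape, scale).

    For a Gamma distribution, mean = shape * scale and
    variance = shape * scale**2, hence the estimates below.
    """
    x = np.asarray(intensities, dtype=float).ravel()
    mean = x.mean()
    var = x.var()
    if mean <= 0 or var <= 0:
        raise ValueError("need positive mean and variance for a Gamma fit")
    return mean**2 / var, var / mean


def gamma_parameter_maps(image, window=8):
    """Per-patch shape and scale maps over non-overlapping windows."""
    h, w = image.shape
    rows, cols = h // window, w // window
    shape_map = np.zeros((rows, cols))
    scale_map = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            patch = image[r * window:(r + 1) * window,
                          c * window:(c + 1) * window]
            shape_map[r, c], scale_map[r, c] = fit_gamma_moments(patch)
    return shape_map, scale_map


# Synthetic stand-in for a speckle image (not real iOCT data).
rng = np.random.default_rng(0)
synthetic = rng.gamma(2.0, 3.0, size=(32, 48))
shape_map, scale_map = gamma_parameter_maps(synthetic, window=8)
print(shape_map.shape, scale_map.shape)
```

Parameter maps of this kind could then be thresholded or passed to a segmentation network as input channels; how the paper derives its binary maps is not stated in the abstract.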

Physics-Encoded Graph Neural Networks for Deformation Prediction under Contact

Feb 05, 2024

Abstract: In robotics, it's crucial to understand object deformation during tactile interactions. A precise understanding of deformation can elevate robotic simulations and have broad implications across different industries. We introduce a method using Physics-Encoded Graph Neural Networks (GNNs) for such predictions. Similar to robotic grasping and manipulation scenarios, we focus on modeling the dynamics between a rigid mesh contacting a deformable mesh under external forces. Our approach represents both the soft body and the rigid body within graph structures, where nodes hold the physical states of the meshes. We also incorporate cross-attention mechanisms to capture the interplay between the objects. By jointly learning geometry and physics, our model reconstructs consistent and detailed deformations. We've made our code and dataset public to advance research in robotic simulation and grasping.

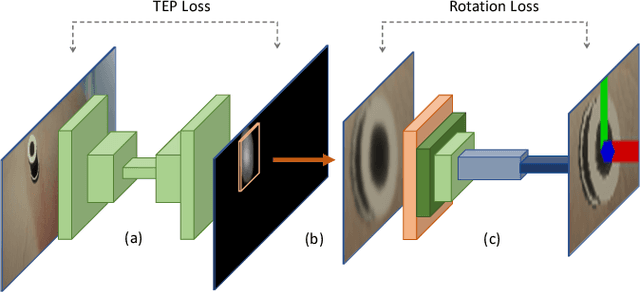

Robotic Navigation Autonomy for Subretinal Injection via Intelligent Real-Time Virtual iOCT Volume Slicing

Jan 17, 2023

Abstract: In the last decade, various robotic platforms have been introduced that could support delicate retinal surgeries. Concurrently, to provide semantic understanding of the surgical area, recent advances have enabled microscope-integrated intraoperative Optical Coherence Tomography (iOCT) with high-resolution 3D imaging at near video rate. The combination of robotics and semantic understanding enables task autonomy in robotic retinal surgery, such as for subretinal injection. This procedure requires precise needle insertion for best treatment outcomes. However, merging robotic systems with iOCT introduces new challenges. These include, but are not limited to, high demands on data processing rates and dynamic registration of these systems during the procedure. In this work, we propose a framework for autonomous robotic navigation for subretinal injection, based on intelligent real-time processing of iOCT volumes. Our method consists of an instrument pose estimation method, an online registration between the robotic and the iOCT system, and trajectory planning tailored for navigation to an injection target. We also introduce intelligent virtual B-scans, a volume slicing approach for rapid instrument pose estimation, which is enabled by Convolutional Neural Networks (CNNs). Our experiments on ex-vivo porcine eyes demonstrate the precision and repeatability of the method. Finally, we discuss identified challenges in this work and suggest potential solutions to further the development of such systems.

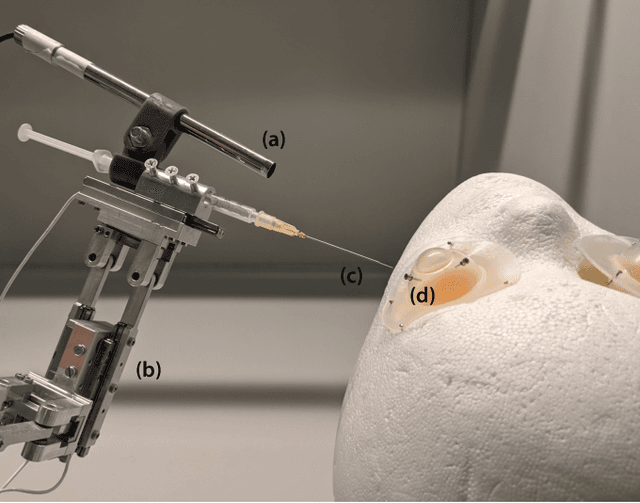

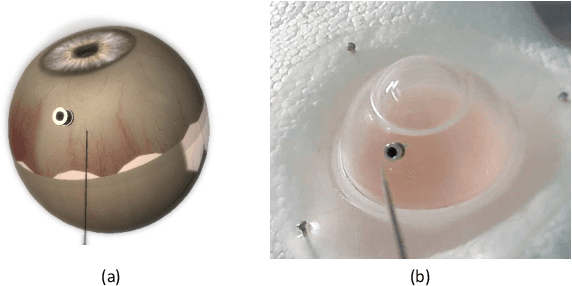

ColibriDoc: An Eye-in-Hand Autonomous Trocar Docking System

Nov 30, 2021

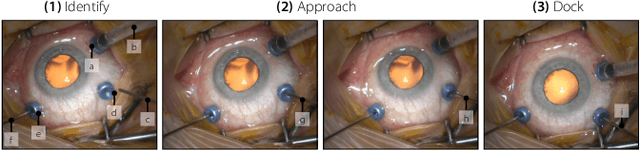

Abstract: Retinal surgery is a complex medical procedure that requires exceptional expertise and dexterity. For this purpose, several robotic platforms are currently being developed to enable or improve the outcome of microsurgical tasks. Since the control of such robots is often designed for navigation inside the eye in proximity to the retina, successfully docking to the trocar and inserting the instrument into the eye represents an additional cognitive effort and is, therefore, one of the open challenges in robotic retinal surgery. For this purpose, we present a platform for autonomous trocar docking that combines computer vision and a robotic setup. Inspired by the Cuban Colibri (hummingbird) aligning its beak to a flower using only vision, we mount a camera onto the end effector of a robotic system. By estimating the position and pose of the trocar, the robot is able to autonomously align and navigate the instrument towards the Trocar's Entry Point (TEP) and finally perform the insertion. Our experiments show that the proposed method is able to accurately estimate the position and pose of the trocar and achieve repeatable autonomous docking. The aim of this work is to reduce the complexity of robotic setup preparation prior to the surgical task and, therefore, to increase the intuitiveness of the system integration into the clinical workflow.
