Kristina Mach

SpecstatOR: Speckle statistics-based iOCT Segmentation Network for Ophthalmic Surgery

Apr 30, 2024

Abstract:This paper presents an innovative approach to intraoperative Optical Coherence Tomography (iOCT) image segmentation in ophthalmic surgery, leveraging statistical analysis of speckle patterns to incorporate pathology-specific statistical prior knowledge. Our findings indicate statistically different speckle patterns within the retina and between retinal layers and surgical tools, facilitating the segmentation of previously unseen data without the need for manual labeling. The research involves fitting various statistical distributions to iOCT data, enabling the differentiation of ocular structures and surgical tools. The proposed segmentation model refines the statistical findings using prior tissue understanding, thereby combining statistical and biological knowledge. Incorporating statistical parameters, physical analysis of light-tissue interaction, and deep learning informed by biological structures enhances segmentation accuracy, offering potential benefits to real-time applications in ophthalmic surgical procedures. The study demonstrates the adaptability and precision of using Gamma distribution parameters and the derived binary maps as sole inputs for segmentation, notably enhancing the model's inference performance on unseen data.
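The idea of using Gamma distribution parameters and derived binary maps as segmentation inputs can be illustrated with a minimal sketch. It is not the authors' pipeline: the abstract does not state the fitting procedure, so this example assumes a simple method-of-moments estimate over non-overlapping patches, and the function name `gamma_moments_map`, the window size, the synthetic test image, and the threshold are all hypothetical choices made for illustration.

```python
import numpy as np


def gamma_moments_map(image, window=8):
    """Estimate Gamma shape and scale per non-overlapping patch.

    Uses the method of moments (an assumption, not the paper's stated fit):
    shape = mean^2 / variance, scale = variance / mean.
    """
    h, w = image.shape
    rows, cols = h // window, w // window
    # Crop to a multiple of the window, then group pixels by patch.
    cropped = image[: rows * window, : cols * window]
    patches = cropped.reshape(rows, window, cols, window)
    patches = patches.transpose(0, 2, 1, 3).reshape(rows, cols, -1)

    mean = patches.mean(axis=-1)
    var = patches.var(axis=-1)
    eps = 1e-12  # guards against division by zero in flat patches
    shape = mean**2 / (var + eps)
    scale = (var + eps) / (mean + eps)
    return shape, scale


# Synthetic stand-in for an intensity image: two regions whose speckle
# follows Gamma distributions with different parameters.
rng = np.random.default_rng(0)
top = rng.gamma(shape=2.0, scale=1.0, size=(64, 128))
bottom = rng.gamma(shape=8.0, scale=0.5, size=(64, 128))
image = np.vstack([top, bottom])

shape_map, scale_map = gamma_moments_map(image, window=16)

# Hypothetical threshold on the shape parameter to derive a binary map.
binary_map = shape_map > 4.0
```

In this sketch the parameter maps and the binary map play the role of the statistical inputs described in the abstract; a segmentation network would consume them in place of raw intensities.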

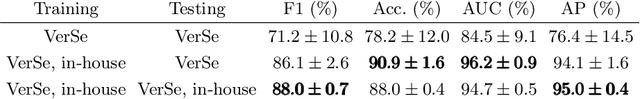

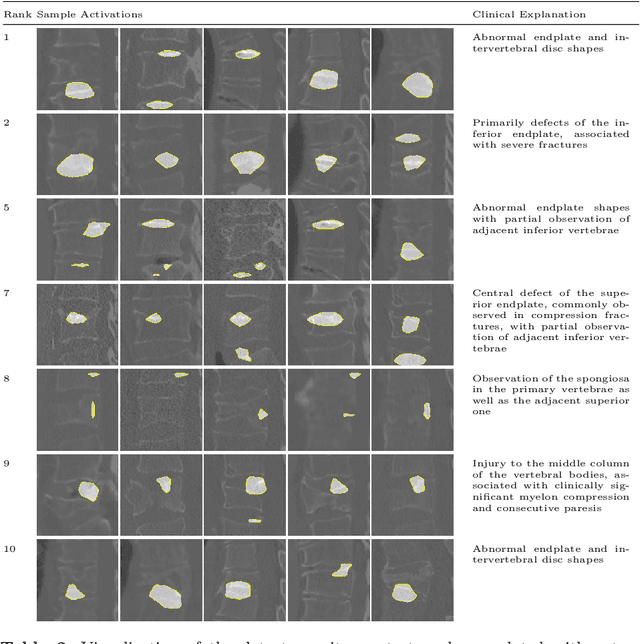

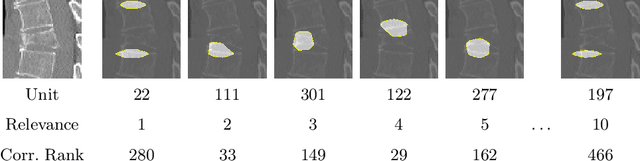

Interpretable Vertebral Fracture Diagnosis

Mar 30, 2022

Abstract:Do black-box neural network models learn clinically relevant features for fracture diagnosis? The answer not only establishes reliability and quenches scientific curiosity but also leads to explainable and verbose findings that can assist radiologists in the final diagnosis and increase trust. This work identifies the concepts networks use for vertebral fracture diagnosis in CT images. This is achieved by associating concepts with neurons that are highly correlated with a specific diagnosis in the dataset. The concepts are either associated with neurons by radiologists pre-hoc or are visualized during a specific prediction and left for the user's interpretation. We evaluate which concepts lead to correct diagnoses and which lead to false positives. The proposed frameworks and analysis pave the way for reliable and explainable vertebral fracture diagnosis.

Few-shot Structured Radiology Report Generation Using Natural Language Prompts

Mar 29, 2022

Abstract:Chest radiograph reporting is time-consuming, and numerous solutions to automate this process have been proposed. Due to the complexity of medical information, the variety of writing styles, and free text being prone to typos and inconsistencies, quantifying the clinical accuracy of free-text reports using natural language processing measures is challenging. Structured reports, on the other hand, ensure consistency and can more easily be used as a quality assurance tool. To this end, we present a strategy for predicting clinical observations and their anatomical location that is easily extensible to other structured findings. First, we train a contrastive language-image model using related chest radiographs and free-text radiological reports. Then, we create textual prompts for each structured finding and optimize a classifier for predicting clinical findings and their associations within the medical image. The results indicate that even when only a few image-level annotations are used for training, the method can localize pathologies in chest radiographs and generate structured reports.
