Ph. D.

Large Language Model-Powered Conversational Agent Delivering Problem-Solving Therapy (PST) for Family Caregivers: Enhancing Empathy and Therapeutic Alliance Using In-Context Learning

Jun 13, 2025

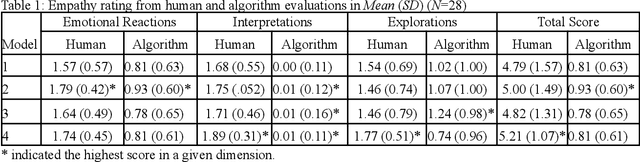

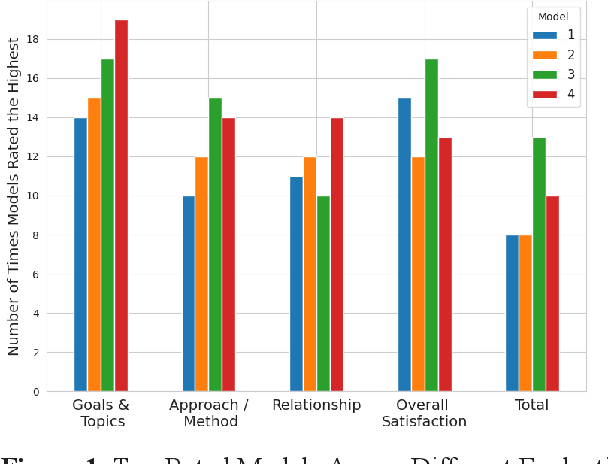

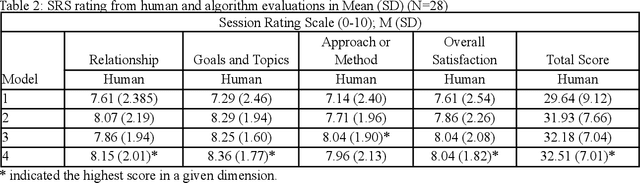

Abstract: Family caregivers often face substantial mental health challenges due to their multifaceted roles and limited resources. This study explored the potential of a large language model (LLM)-powered conversational agent to deliver evidence-based mental health support for caregivers, specifically Problem-Solving Therapy (PST) integrated with Motivational Interviewing (MI) and Behavioral Chain Analysis (BCA). A within-subject experiment was conducted with 28 caregivers interacting with four LLM configurations to evaluate empathy and therapeutic alliance. The best-performing models incorporated Few-Shot and Retrieval-Augmented Generation (RAG) prompting techniques, alongside clinician-curated examples. The models showed improved contextual understanding and personalized support, as reflected by qualitative responses and quantitative ratings of perceived empathy and therapeutic alliance. Participants valued the model's ability to validate emotions, explore unexpressed feelings, and provide actionable strategies. However, balancing thorough assessment with efficient advice delivery remains a challenge. This work highlights the potential of LLMs in delivering empathetic and tailored support for family caregivers.

A General Measure of Collision Hazard in Traffic

May 17, 2022

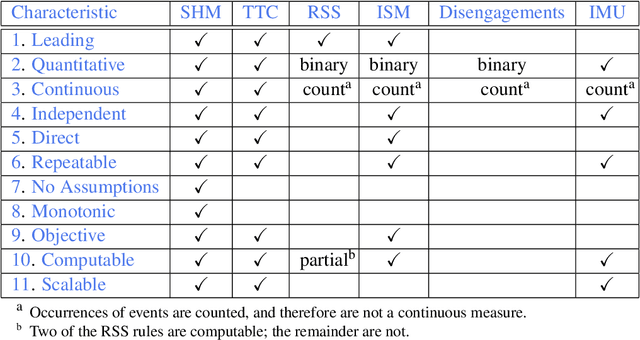

Abstract: A collision hazard measure that has the essential characteristics to provide a measurement of safety that will be useful to AV developers, traffic infrastructure developers and managers, regulators and the public is introduced here. The Streetscope Collision Hazard Measure (SHM) overcomes the limitations of existing measures, and provides an independent leading indication of safety.

* Trailing indicators, such as collision statistics, incur pain and loss on society, and are not an ethically acceptable approach.
* Near-misses have been shown to be effective predictors of incidents.
* Time-to-Collision (TTC) provides ambiguous indication of collision hazards, and requires assumptions about vehicle behavior.
* Responsibility-Sensitive Safety (RSS), because of its reliance on rules for individual circumstances, will not scale up to handle the complexities of traffic.
* Instantaneous Safety Metric (ISM) relies on probabilistic predictions of behaviors to categorize events (possible, imminent, critical), and does not provide a quantitative measure of the severity of the hazard.
* Inertial Measurement Unit (IMU) acceleration data is not correlated with hazard or risk.
* A new measure, based on the concept of near-misses, that incorporates both proximity (separation distance) and motion (relative speed) is introduced.
* Near-miss data has been shown to be predictive of the likelihood and severity of incidents.

The new measure presented here gathers movement data about vehicles continuously and a quantitative score reflecting the hazard encountered or created (from which the riskiness or safeness of the behavior of vehicles can be estimated) is computed nearly continuously.

Deep learning model trained on mobile phone-acquired frozen section images effectively detects basal cell carcinoma

Nov 22, 2020

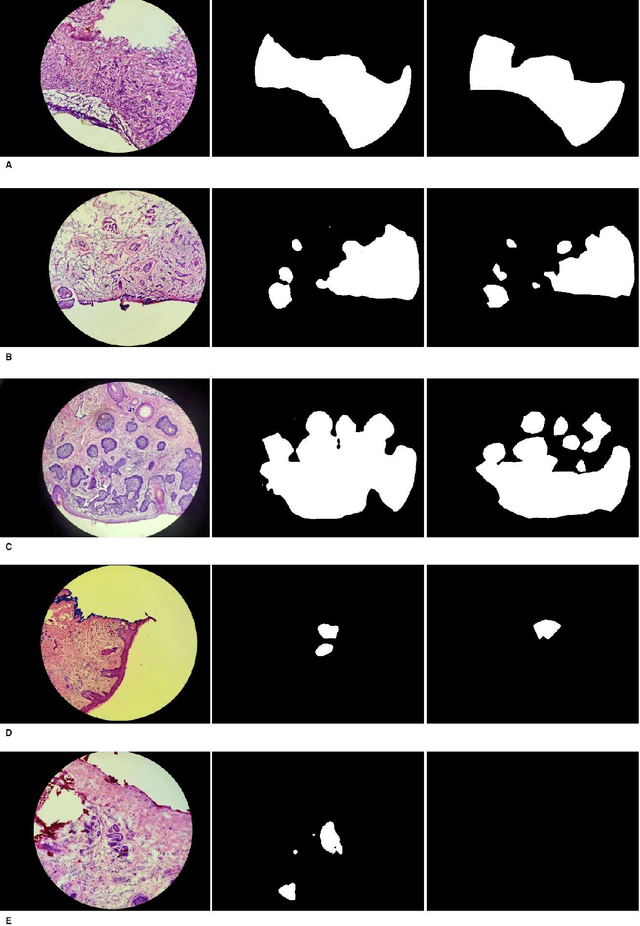

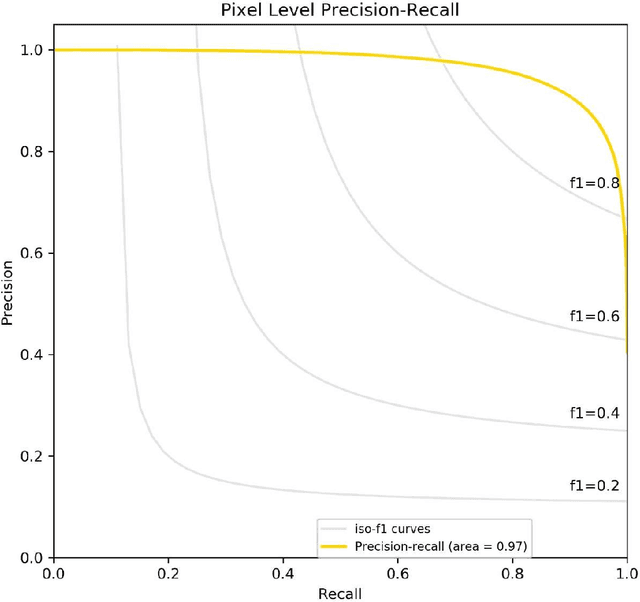

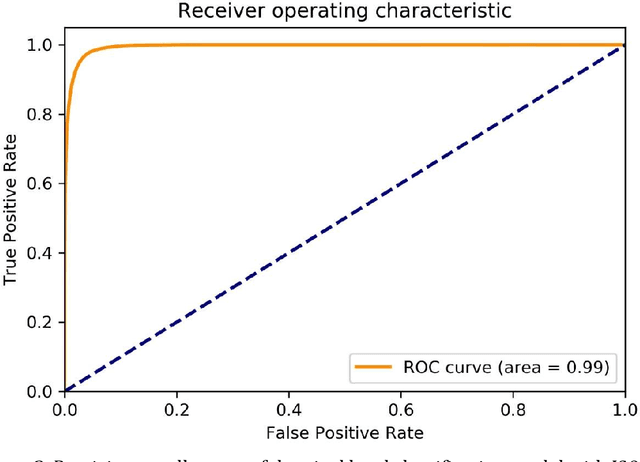

Abstract: Background: Margin assessment of basal cell carcinoma using frozen sections is a common task in intraoperative pathology consultation. Although frequently straightforward, determining the presence or absence of basal cell carcinoma on the tissue sections can sometimes be challenging. We explore whether a deep learning model trained on mobile phone-acquired frozen section images can achieve adequate performance for future deployment. Materials and Methods: One thousand two hundred and forty-one (1241) images of frozen sections performed for basal cell carcinoma margin status were acquired using mobile phones. The photos were taken at 100x magnification (10x objective). The images were downscaled from a 4032 x 3024 pixel resolution to 576 x 432 pixel resolution. The semantic segmentation algorithm DeepLab V3 with an Xception backbone was used for model training. Results: The model takes an image as input and produces a 2-dimensional black-and-white prediction output of the same dimensions; the areas determined to be basal cell carcinoma are displayed in white on a black background. Any output in which the number of white pixels exceeds 0.5% of the total number of pixels is deemed positive for basal cell carcinoma. On the test set, the model achieves an area under the curve of 0.99 for the receiver operating characteristic curve and 0.97 for the precision-recall curve at the pixel level. The accuracy of classification at the slide level is 96%. Conclusions: The deep learning model trained with mobile phone images shows satisfactory performance characteristics, and thus demonstrates the potential for deployment as a mobile phone app to assist in frozen section interpretation in real time.
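
The slide-level decision rule described in the abstract (an output is positive when white pixels exceed 0.5% of all pixels) can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `is_positive`, the list-of-lists mask representation, and the parameter name `threshold` are assumptions made for this example.

```python
def is_positive(mask, threshold=0.005):
    """Return True if the fraction of white pixels in a binary mask exceeds threshold.

    mask: 2D list of 0/1 values, where 1 marks a pixel predicted as
    basal cell carcinoma (hypothetical representation for this sketch).
    threshold: fraction of the total pixel count; 0.005 corresponds to
    the 0.5% rule stated in the abstract.
    """
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask is empty")
    white = sum(1 for row in mask for pixel in row if pixel)
    return white / total > threshold


# A blank 576 x 432 prediction (the downscaled resolution in the abstract).
blank = [[0] * 576 for _ in range(432)]

# The same prediction with two fully white rows (1152 of 248832 pixels, about 0.46%).
below = [row[:] for row in blank]
below[0] = [1] * 576
below[1] = [1] * 576

# Three fully white rows (1728 pixels, about 0.69%) cross the 0.5% threshold.
above = [row[:] for row in below]
above[2] = [1] * 576
```

With these masks, `is_positive(blank)` and `is_positive(below)` are false, while `is_positive(above)` is true.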

A Computer-Aided Diagnosis System Using Artificial Intelligence for Hip Fractures Significantly Improves the Diagnostic Rate of Residents. -Multi-Institutional Joint Development Research-

Apr 07, 2020

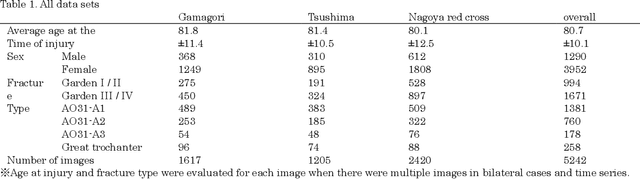

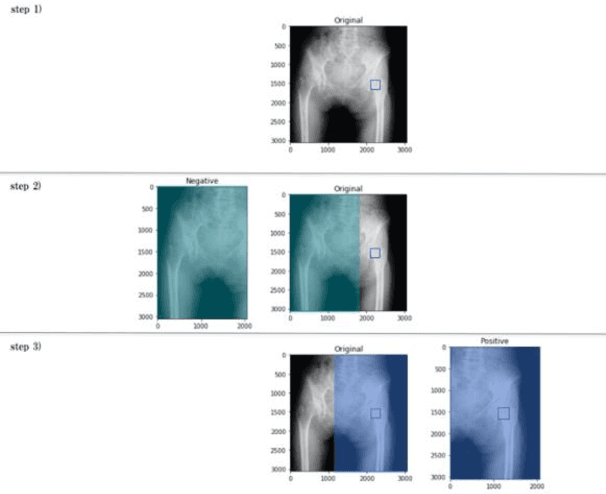

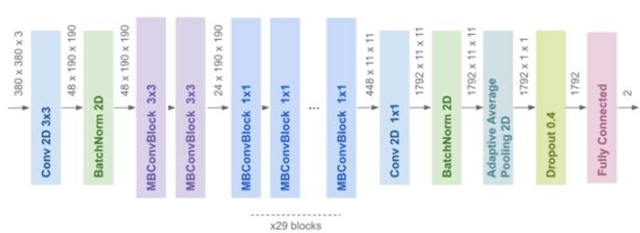

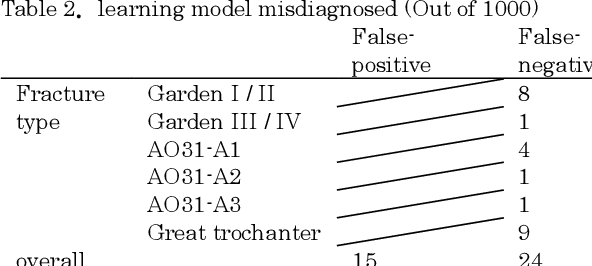

Abstract: [Objective] To develop a computer-aided diagnosis (CAD) system for hip fractures on plain frontal hip X-rays using a CNN trained on a large dataset collected at multiple institutions, and to assess whether residents' diagnostic rate for proximal femoral fractures improves when this CAD system is used as a diagnostic aid. [Materials and methods] In total, 4851 hip fracture patients who visited the participating institutions between 2009 and 2019 were included. 5242 plain pelvic X-rays were extracted from a DICOM server, and a total of 10484 images (5242 of the fracture side and 5242 of the non-fracture side) were used for machine learning. A CNN approach was used, with the EfficientNet-B4 architecture, PyTorch 1.3, and fast.ai. In the final evaluation, accuracy, sensitivity, specificity, F-value, and AUC were evaluated. Grad-CAM was used to visualize the basis of the diagnosis by the CAD system. An image diagnosis test was carried out with 31 residents and 4 orthopedic surgeons on 600 photographs of hip fracture randomly extracted from the test image data set, and the diagnostic rates with and without the diagnostic support of the CAD system were evaluated. [Results] The diagnostic accuracy of the learning model was 96.1%, sensitivity 95.2%, specificity 96.9%, F-value 0.961, and AUC 0.99. Grad-CAM was used to show the most accurate diagnosis. In the image diagnosis test, the residents achieved diagnostic ability equivalent to that of the orthopedic surgeons when using the CAD system as a diagnostic aid. [Conclusions] The AI-based CAD system for hip fractures that we developed could present the basis for its diagnosis and served as an image diagnosis tool with high diagnostic accuracy. It may also contribute to improving the diagnostic rate in actual clinical settings such as the emergency room.
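
The evaluation metrics named in the abstract (accuracy, sensitivity, specificity, F-value) can all be derived from the four counts of a binary confusion matrix. The sketch below shows the standard formulas, assuming that "F-value" means the F1 score (the harmonic mean of precision and sensitivity); the abstract does not say so explicitly. The function name and the example counts are hypothetical and are not the study's data.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion counts.

    tp/fn: fracture images classified correctly/incorrectly.
    tn/fp: non-fracture images classified correctly/incorrectly.
    Assumes each denominator is non-zero.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)   # recall on fracture images
    specificity = tn / (tn + fp)   # recall on non-fracture images
    precision = tp / (tp + fp)
    # F1 score: harmonic mean of precision and sensitivity (assumed meaning of "F-value").
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=95, fp=3, tn=97, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```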

Assessment of Amazon Comprehend Medical: Medication Information Extraction

Feb 02, 2020

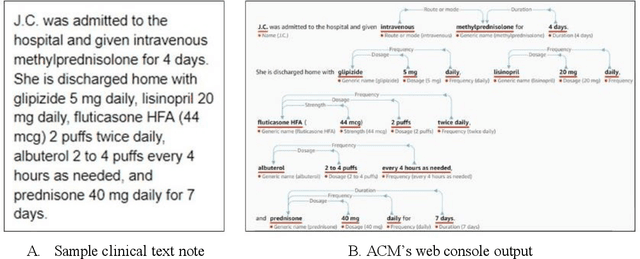

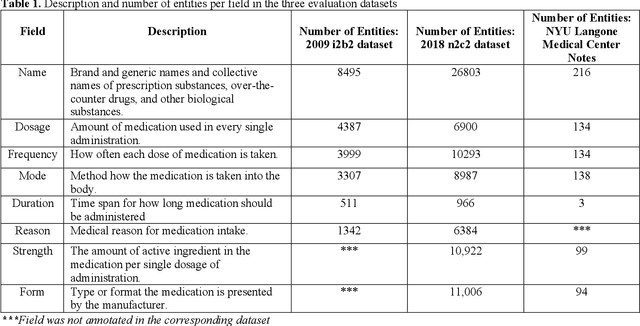

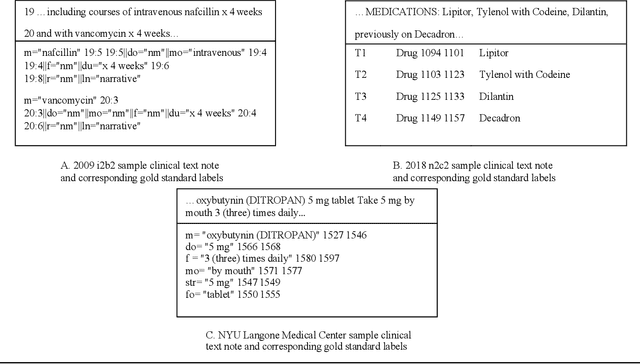

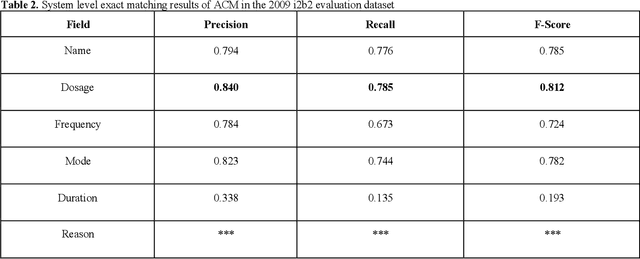

Abstract: On November 27, 2018, Amazon Web Services (AWS) released Amazon Comprehend Medical (ACM), a deep learning based system that automatically extracts clinical concepts (which include anatomy, medical conditions, protected health information (PHI), test names, treatment names, medical procedures, and medications) from clinical text notes. Uptake of and trust in any new data product rely on independent validation across benchmark datasets and tools to establish and confirm the expected quality of results. This work focuses on the medication extraction task. In particular, ACM was evaluated using the official test sets from the 2009 i2b2 Medication Extraction Challenge and 2018 n2c2 Track 2: Adverse Drug Events and Medication Extraction in EHRs. Overall, ACM achieved F-scores of 0.768 and 0.828. These scores ranked the lowest when compared to the three best systems in the respective challenges. To further establish the generalizability of its medication extraction performance, a set of random internal clinical text notes from NYU Langone Medical Center was also included in this work. On this corpus, ACM achieved an F-score of 0.753.
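
For readers unfamiliar with how an F-score is obtained for an extraction task, the sketch below scores a set of predicted medication mentions against a gold-standard set. It assumes exact matching on (text, start, end) tuples, which is a simplification chosen for this example; the i2b2 and n2c2 challenges define their own matching criteria and evaluation scripts. The function name and the sample mentions are hypothetical.

```python
def extraction_f_score(gold, predicted):
    """Return (precision, recall, f_score) for two sets of extracted mentions.

    gold, predicted: sets of (text, start_offset, end_offset) tuples.
    A prediction counts as correct only if it matches a gold tuple exactly
    (an assumption of this sketch, not the challenges' official criterion).
    """
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Hypothetical annotations for a single note.
gold = {("aspirin", 0, 7), ("metformin", 20, 29), ("lisinopril", 40, 50)}
predicted = {("aspirin", 0, 7), ("metformin", 20, 29), ("ibuprofen", 60, 69)}

precision, recall, f_score = extraction_f_score(gold, predicted)
print(f"precision={precision:.3f} recall={recall:.3f} F={f_score:.3f}")
```

Here two of the three predictions match the gold set, so precision, recall, and the F-score all equal 2/3.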

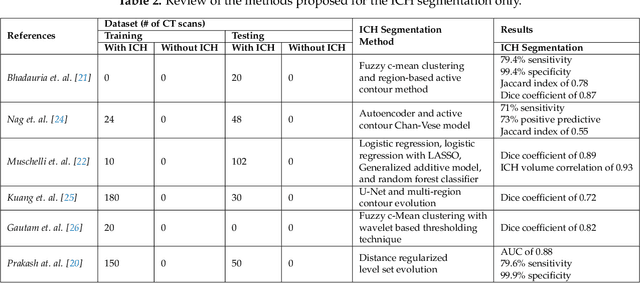

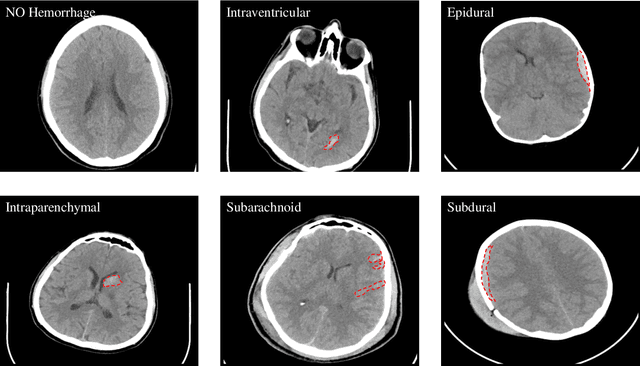

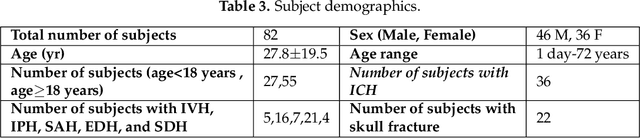

Intracranial Hemorrhage Segmentation Using Deep Convolutional Model

Nov 15, 2019

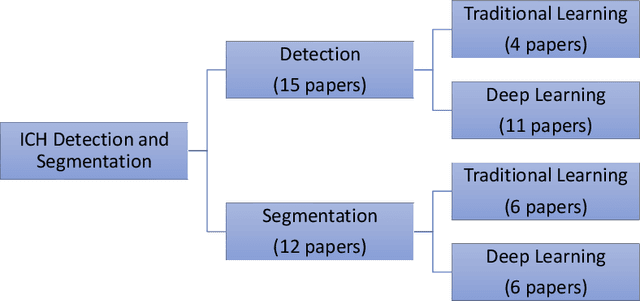

Abstract: Traumatic brain injuries can cause intracranial hemorrhage (ICH). ICH can lead to disability or death if it is not accurately diagnosed and treated in a timely manner. The current clinical protocol for diagnosing ICH is for radiologists to examine Computerized Tomography (CT) scans to detect ICH and localize its regions. However, this process relies heavily on the availability of an experienced radiologist. In this paper, we designed a study protocol to collect a dataset of 82 CT scans of subjects with traumatic brain injury. The ICH regions were then manually delineated in each slice by a consensus decision of two radiologists. Recently, fully convolutional networks (FCN) have been shown to be successful in medical image segmentation. We developed a deep FCN, called U-Net, to segment the ICH regions from the CT scans in a fully automated manner. The method achieved a Dice coefficient of 0.31 for the ICH segmentation based on 5-fold cross-validation. The dataset is publicly available online in the PhysioNet repository for future analysis and comparison.
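
The Dice coefficient reported above measures the overlap between a predicted segmentation mask and the manually delineated one: twice the size of their intersection divided by the sum of their sizes. The following is a minimal sketch of that formula on binary masks stored as nested lists; the function name and the tiny example masks are hypothetical, and returning 1.0 when both masks are empty is a convention chosen for this example.

```python
def dice_coefficient(predicted, truth):
    """Dice = 2 * |P intersect T| / (|P| + |T|) for two binary masks of equal shape.

    predicted, truth: 2D lists of 0/1 values (1 = hemorrhage pixel).
    """
    flat_pred = [p for row in predicted for p in row]
    flat_true = [t for row in truth for t in row]
    if len(flat_pred) != len(flat_true):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(flat_pred, flat_true) if p and t)
    size_sum = sum(1 for p in flat_pred if p) + sum(1 for t in flat_true if t)
    if size_sum == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return 2 * intersection / size_sum


# Hypothetical 2 x 3 masks: each marks two pixels, one of which overlaps.
predicted_mask = [[1, 1, 0],
                  [0, 0, 0]]
truth_mask = [[1, 0, 0],
              [0, 1, 0]]

print(dice_coefficient(predicted_mask, truth_mask))  # 0.5
```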

A comparison of cluster algorithms as applied to unsupervised surveys

Dec 13, 2018

Abstract: When answering important questions with data, unsupervised data offers extensive opportunities for insight as well as unique challenges. This study considers student survey data with the specific goal of clustering students into like groups, with the underlying aim of identifying different poverty levels. Fuzzy logic is used during the data cleaning and organizing phase to help create a logical dependent variable for comparing analyses. The survey was reduced and cleaned using multiple data reduction techniques. Finally, multiple clustering techniques (k-means, k-modes, and hierarchical clustering) are applied and compared. Though each method has strengths, the goal was to identify which was most viable when applied to survey data, specifically when trying to identify the most impoverished students.

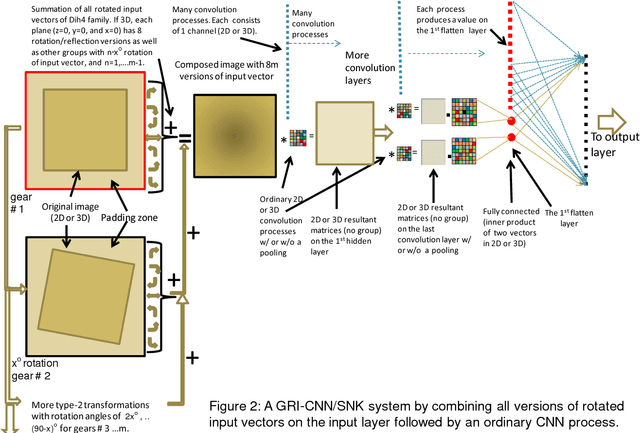

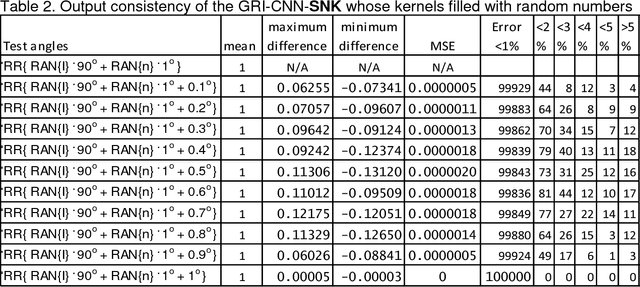

Geared Rotationally Identical and Invariant Convolutional Neural Network Systems

Aug 10, 2018

Abstract: Theorems and techniques to form different types of transformationally invariant processing and to produce the same output quantitatively based on either transformationally invariant operators or symmetric operations have recently been introduced by the authors. In this study, we further propose to compose a geared rotationally identical CNN system (GRI-CNN) with a small step angle by connecting networks of participating processes at the first flatten layer. Using an ordinary CNN structure as a base, requirements for constructing a GRI-CNN include the use of either a symmetric input vector or kernels with an angle increment that can form a complete cycle as a "gearwheel". Four basic GRI-CNN structures were studied. Each of them can produce quantitatively identical output results when the rotation angle of the input vector is evenly divisible by the step angle of the gear. Our study showed that when an input vector is rotated by an angle that does not match a step angle, the GRI-CNN can also produce a highly consistent result. With a design using an ultra-fine gear-tooth step angle (e.g., 1 degree or 0.1 degree), all four GRI-CNN systems can be constructed virtually isotropically.
