Ryosuke Goto

On permutation-invariant neural networks

Mar 28, 2024

Abstract: Conventional machine learning algorithms have traditionally been designed under the assumption that input data takes a vector-based format. However, as demand for tasks involving set-based inputs has grown, the research community has shifted toward addressing these challenges. In recent years, neural network architectures such as Deep Sets and Transformers have significantly advanced the treatment of set-based data. These architectures are designed to accept sets as input, enabling more effective representation and processing of set structures. Consequently, there has been a surge of research exploring these architectures for tasks involving the approximation of set functions. This survey provides an overview of the diverse problem settings and ongoing research efforts pertaining to neural networks that approximate set functions. By examining these approaches and the associated challenges, the survey aims to give readers a comprehensive understanding of the field, including the potential applications, inherent limitations, and future directions of set-based neural networks. From this survey we gain two insights: i) Deep Sets and its variants can be generalized by differences in the aggregation function, and ii) the behavior of Deep Sets is sensitive to the choice of the aggregation function. From these observations, we show that Deep Sets, one of the well-known permutation-invariant neural networks, can be generalized in the sense of a quasi-arithmetic mean.
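The role of the aggregation function can be illustrated with the standard definition of a quasi-arithmetic mean, M_f(x_1, ..., x_n) = f^{-1}((1/n) Σ f(x_i)), which has the same "transform, sum, transform back" shape as the Deep Sets form ρ(Σ φ(x_i)). The sketch below is a minimal illustration on scalar values and is not the survey's own formulation; the function names and the three example choices of f are assumptions made for this example.

```python
import math
from typing import Callable, Sequence


def quasi_arithmetic_mean(
    values: Sequence[float],
    f: Callable[[float], float],
    f_inv: Callable[[float], float],
) -> float:
    """Return f_inv(mean of f(x)) for a non-empty sequence.

    f is assumed to be continuous and strictly monotonic on the
    range of the inputs, with f_inv as its inverse.
    """
    if not values:
        raise ValueError("values must be non-empty")
    return f_inv(sum(f(x) for x in values) / len(values))


def arithmetic_mean(values: Sequence[float]) -> float:
    # f is the identity: ordinary mean pooling.
    return quasi_arithmetic_mean(values, lambda x: x, lambda y: y)


def geometric_mean(values: Sequence[float]) -> float:
    # f is the natural log: requires strictly positive inputs.
    return quasi_arithmetic_mean(values, math.log, math.exp)


def log_mean_exp(values: Sequence[float]) -> float:
    # f is exp: a smooth aggregation that leans toward the maximum.
    return quasi_arithmetic_mean(values, math.exp, math.log)


if __name__ == "__main__":
    xs = [1.0, 4.0, 2.0]
    for name, agg in [
        ("arithmetic", arithmetic_mean),
        ("geometric", geometric_mean),
        ("log-mean-exp", log_mean_exp),
    ]:
        # The result does not depend on the order of the elements,
        # up to floating-point rounding.
        print(name, agg(xs), agg(list(reversed(xs))))
```

Swapping f changes the pooled value while keeping permutation invariance, which is one concrete way to see why a set model's behavior can depend on the choice of aggregation.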

Outfit Completion via Conditional Set Transformation

Nov 28, 2023

Abstract: In this paper, we formulate the outfit completion problem as a set retrieval task and propose a novel framework for solving this problem. The proposal includes a conditional set transformation architecture with deep neural networks and a compatibility-based regularization method. The proposed method utilizes a map that is permutation-invariant with respect to the input set and permutation-equivariant with respect to the condition set. This allows retrieving a set that is compatible with the input set while reflecting the properties of the condition set. In addition, since this structure outputs all elements of the output set in a single inference, its inference speed scales well with the cardinality of the output set. Experimental results on real data reveal that the proposed method outperforms existing approaches in terms of accuracy on the outfit completion task, condition satisfaction, and compatibility of the completion results.
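The two symmetry properties named in the abstract can be made concrete with a toy map. The sketch below is not the paper's architecture: it assumes a hypothetical function `conditional_set_map` that mean-pools the input set (giving invariance to input order) and adds the pooled vector to each condition element independently (giving equivariance to condition order). The additive combination stands in for a learned network.

```python
from typing import List, Sequence

Vector = Sequence[float]


def mean_pool(items: Sequence[Vector]) -> List[float]:
    """Element-wise mean over a non-empty collection of equal-length vectors."""
    if not items:
        raise ValueError("items must be non-empty")
    n = len(items)
    return [sum(column) / n for column in zip(*items)]


def conditional_set_map(
    input_set: Sequence[Vector],
    condition_set: Sequence[Vector],
) -> List[List[float]]:
    """Toy map: one output vector per condition element.

    Pooling makes the result independent of the order of input_set;
    processing each condition element separately means that permuting
    condition_set permutes the outputs in the same way.
    """
    context = mean_pool(input_set)
    return [[c + m for c, m in zip(cond, context)] for cond in condition_set]


if __name__ == "__main__":
    inputs = [[1.0, 2.0], [3.0, 4.0]]
    conditions = [[10.0, 0.0], [0.0, 10.0]]
    out = conditional_set_map(inputs, conditions)
    # Reordering the input set leaves the output unchanged.
    print(out == conditional_set_map(inputs[::-1], conditions))
    # Reordering the condition set reorders the output identically.
    print(out[::-1] == conditional_set_map(inputs, conditions[::-1]))
```

Because every output element is produced in the same call, the number of forward passes does not grow with the size of the output set, which is the property the abstract ties to inference speed.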

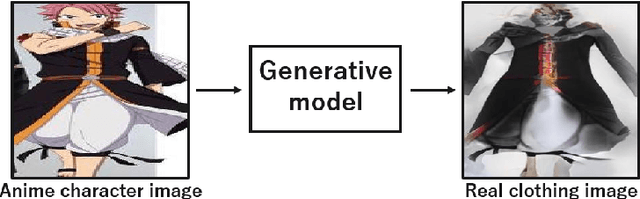

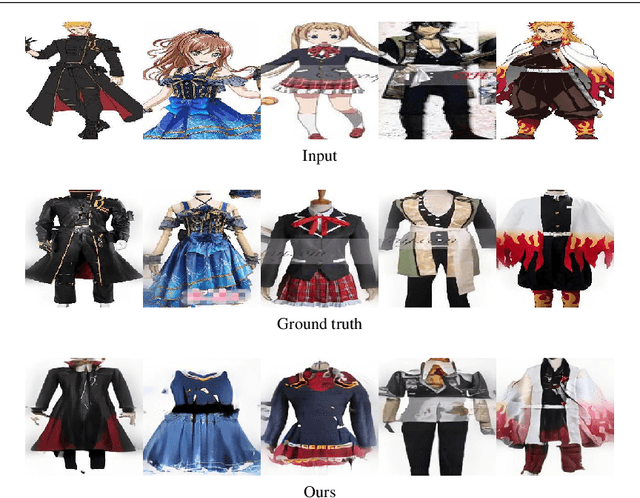

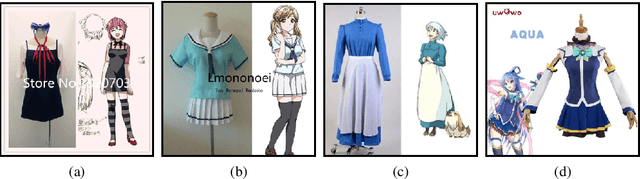

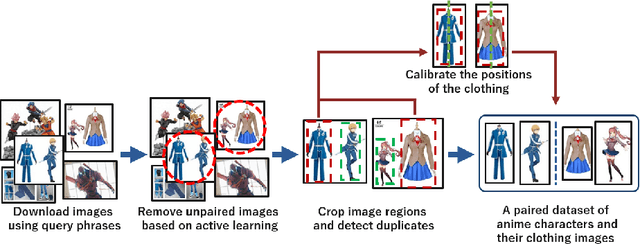

Anime-to-Real Clothing: Cosplay Costume Generation via Image-to-Image Translation

Aug 26, 2020

Abstract: Cosplay has grown from its origins at fan conventions into a billion-dollar global dress phenomenon. To facilitate imagination and reinterpretation from animated images to real garments, this paper presents an automatic costume image generation method based on image-to-image translation. Cosplay items can be significantly diverse in their styles and shapes, and conventional methods cannot be directly applied to the wide variation in clothing images that are the focus of this study. To solve this problem, our method starts by collecting and preprocessing web images to prepare a cleaned, paired dataset of the anime and real domains. Then, we present a novel architecture for generative adversarial networks (GANs) to facilitate high-quality cosplay image generation. Our GAN incorporates several effective techniques to bridge the gap between the two domains and improve both the global and local consistency of generated images. Experiments with two types of evaluation metrics demonstrated that the proposed GAN achieves better performance than existing methods. We also showed that the images generated by the proposed method are more realistic than those generated by conventional methods. Our code and pretrained model are available on the web.

A Computer-Aided Diagnosis System Using Artificial Intelligence for Hip Fractures Significantly Improves the Diagnostic Rate of Residents. -Multi-Institutional Joint Development Research-

Apr 07, 2020

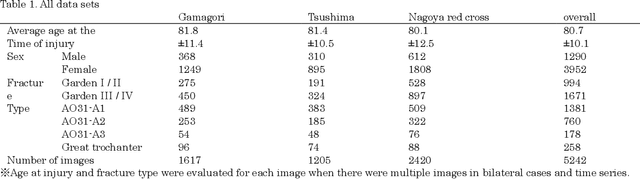

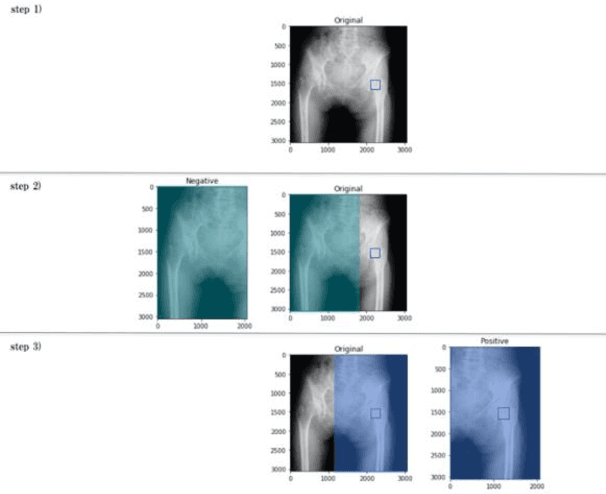

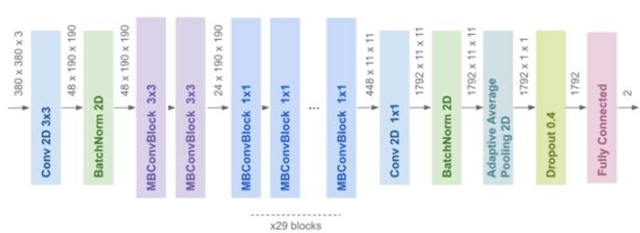

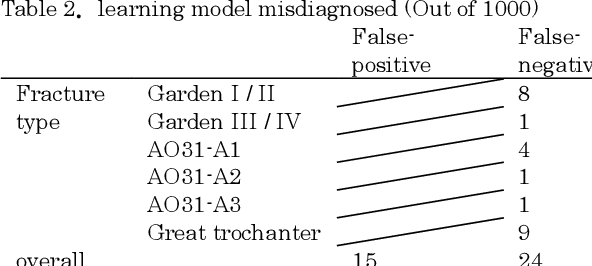

Abstract: [Objective] To develop a computer-aided diagnosis (CAD) system for hip fractures on plain frontal hip X-rays, using a CNN trained on a large dataset collected at multiple institutions, and to examine whether residents can improve their diagnostic rate for proximal femoral fractures by using this CAD system as a diagnostic aid. [Materials and methods] In total, 4851 cases of hip fracture patients who visited each institution between 2009 and 2019 were included. 5242 plain pelvic X-rays were extracted from a DICOM server, and a total of 10484 images (5242 of the fractured side and 5242 of the non-fractured side) were used for machine learning. A CNN approach was used, with the EfficientNet-B4 architecture implemented in PyTorch 1.3 and fast.ai. In the final evaluation, accuracy, sensitivity, specificity, F-value, and AUC were evaluated. Grad-CAM was used to visualize the basis of the diagnosis made by the CAD system. An image diagnosis test was carried out with 31 residents and 4 orthopedic surgeons on 600 hip fracture images randomly extracted from the test image dataset, and the diagnostic rate with and without the support of the CAD system was evaluated. [Results] The diagnostic accuracy of the learning model was 96.1%, with sensitivity 95.2%, specificity 96.9%, F-value 0.961, and AUC 0.99. Grad-CAM was used to show the most accurate diagnosis. In the image diagnosis test, residents using the diagnostic aid of the CAD system achieved diagnostic ability equivalent to that of the orthopedic surgeons. [Conclusions] The AI-based CAD system for hip fractures that we developed can present the basis of its diagnosis, and it serves as an image diagnosis tool with high diagnostic accuracy. It may also contribute to improving the diagnostic rate in actual clinical environments such as the emergency room.
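For readers less familiar with the reported metrics, the sketch below shows how accuracy, sensitivity, specificity, and an F-value are commonly derived from a binary confusion matrix. It assumes the F-value is the F1 score, which the abstract does not state. The counts in the usage example are made-up illustrative numbers, not data from the study, and AUC is omitted because it requires per-image scores.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Compute common diagnostic metrics from confusion-matrix counts.

    tp / fn: fractured images classified as fractured / not fractured.
    tn / fp: non-fractured images classified as not fractured / fractured.
    """
    total = tp + fp + tn + fn
    if total == 0 or (tp + fn) == 0 or (tn + fp) == 0 or (2 * tp + fp + fn) == 0:
        raise ValueError("counts must include positive and negative cases")
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),   # recall on fractured images
        "specificity": tn / (tn + fp),   # recall on non-fractured images
        # F1 written in count form; assumed to match the "F-value".
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


if __name__ == "__main__":
    # Hypothetical counts chosen only to exercise the function.
    for name, value in binary_metrics(tp=95, fp=3, tn=97, fn=5).items():
        print(f"{name}: {value:.3f}")
```

Reporting sensitivity and specificity separately matters here because a balanced set of fractured and non-fractured images can hide an asymmetry between missed fractures and false alarms behind a single accuracy figure.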

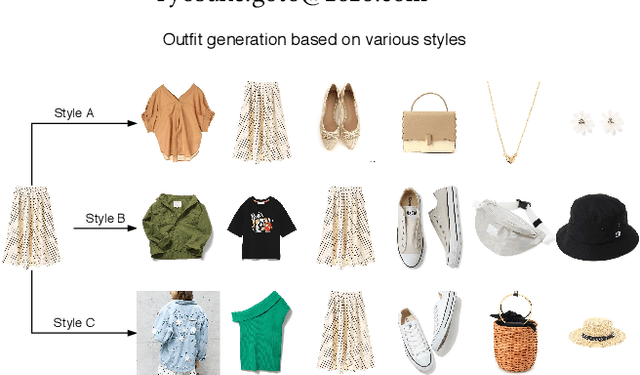

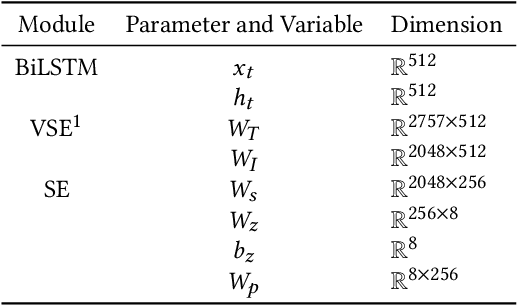

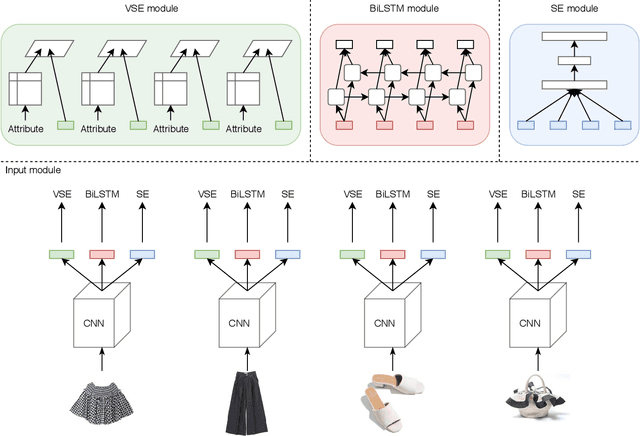

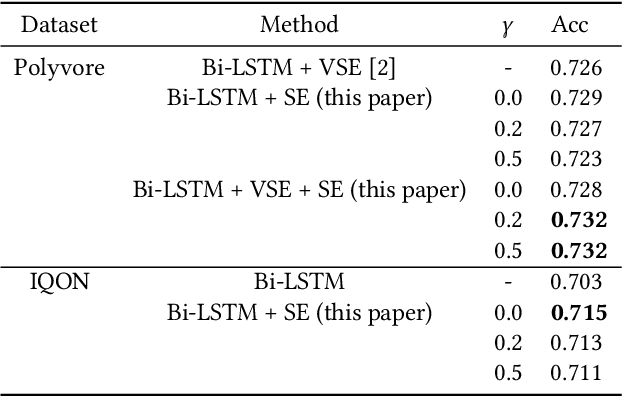

Outfit Generation and Style Extraction via Bidirectional LSTM and Autoencoder

Oct 23, 2018

Abstract: When creating an outfit, style is a criterion in selecting each fashion item. This means that style can be regarded as a feature of the overall outfit. However, among previous studies on outfit generation, few methods have focused on global information obtained from an outfit. To address this deficiency, we have incorporated an unsupervised style extraction module into a model that learns outfits. Using the style information of an outfit as a whole, the proposed model succeeded in generating outfits more flexibly without requiring additional information. Moreover, the style information extracted by the proposed model is easy to interpret. The proposed model was evaluated on two human-generated outfit datasets. In a fashion item prediction task (missing prediction task), the proposed model outperformed a baseline method. In a style extraction task, the proposed model extracted some easily distinguishable styles. In an outfit generation task, the proposed model generated an outfit while controlling its styles. This capability allows us to generate fashionable outfits according to various preferences.
