Marie Katsurai

MambaPainter: Neural Stroke-Based Rendering in a Single Step

Oct 16, 2024

Abstract: Stroke-based rendering aims to reconstruct an input image in an oil-painting style by predicting brush stroke sequences. Conventional methods perform this prediction stroke by stroke or require multiple inference steps because the number of strokes they can predict at once is limited. This makes translation slow and limits their practicality. In this study, we propose MambaPainter, which predicts a sequence of over 100 brush strokes in a single inference step, resulting in rapid translation. We achieve this sequence prediction by incorporating the selective state-space model. Additionally, we introduce a simple extension to patch-based rendering, which we use to translate high-resolution images, improving visual quality with a minimal increase in computational cost. Experimental results demonstrate that MambaPainter translates inputs to oil-painting-style images more efficiently than state-of-the-art methods. The code is available at https://github.com/STomoya/MambaPainter.

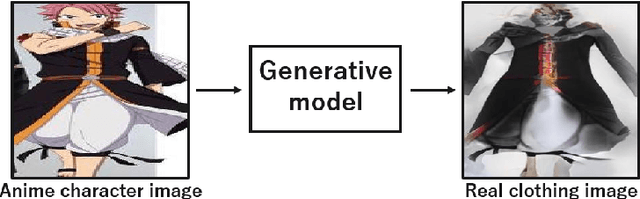

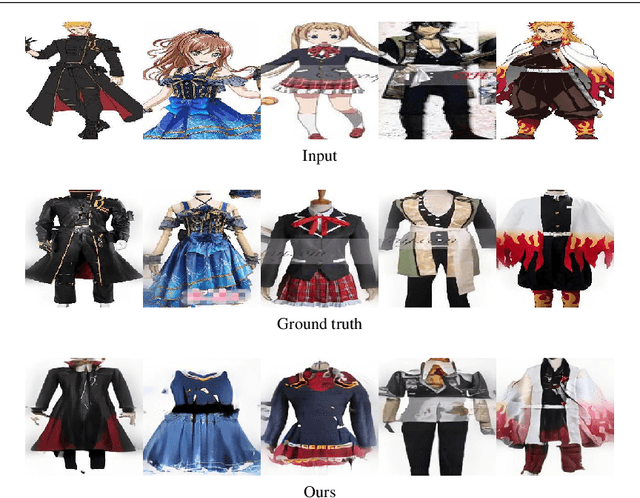

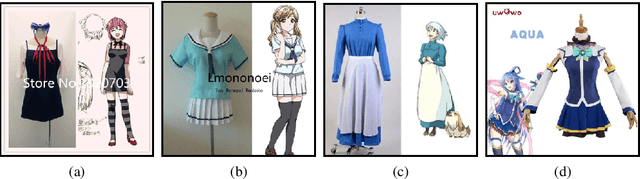

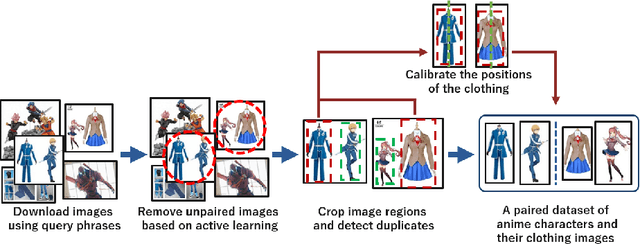

Anime-to-Real Clothing: Cosplay Costume Generation via Image-to-Image Translation

Aug 26, 2020

Abstract: Cosplay has grown from its origins at fan conventions into a billion-dollar global dress phenomenon. To facilitate imagination and reinterpretation from animated images to real garments, this paper presents an automatic costume image generation method based on image-to-image translation. Cosplay items vary widely in style and shape, and conventional methods cannot be directly applied to the wide variation in clothing images that is the focus of this study. To solve this problem, our method starts by collecting and preprocessing web images to prepare a cleaned, paired dataset of the anime and real domains. Then, we present a novel architecture for generative adversarial networks (GANs) to facilitate high-quality cosplay image generation. Our GAN incorporates several techniques to bridge the gap between the two domains and improve both the global and local consistency of generated images. Experiments with two types of evaluation metrics demonstrated that the proposed GAN outperforms existing methods. We also showed that the images generated by the proposed method are more realistic than those generated by conventional methods. Our code and pretrained model are available on the web.
