Yuki Saito

Geneses: Unified Generative Speech Enhancement and Separation

Jan 26, 2026

Abstract:Real-world audio recordings often contain multiple speakers and various degradations, which limit both the quantity and quality of speech data available for building state-of-the-art speech processing models. Although end-to-end approaches that concatenate speech enhancement (SE) and speech separation (SS) to obtain a clean speech signal for each speaker are promising, conventional SE-SS methods suffer from complex degradations beyond additive noise. To this end, we propose Geneses, a generative framework to achieve unified, high-quality SE-SS. Geneses leverages latent flow matching to estimate each speaker's clean speech features using a multi-modal diffusion Transformer conditioned on self-supervised learning representations of the noisy mixture. We conduct an experimental evaluation using two-speaker mixtures from LibriTTS-R under two conditions: additive noise only and complex degradations. The results demonstrate that Geneses significantly outperforms a conventional mask-based SE-SS method across various objective metrics, with high robustness against complex degradations. Audio samples are available on our demo page.

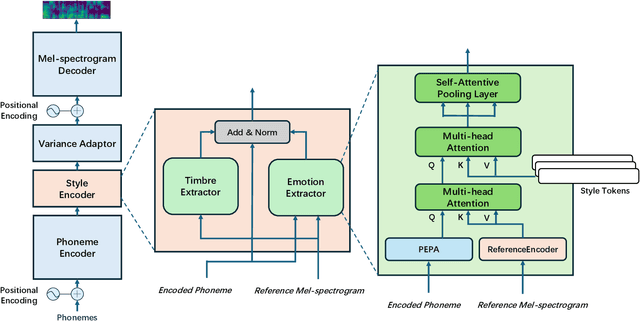

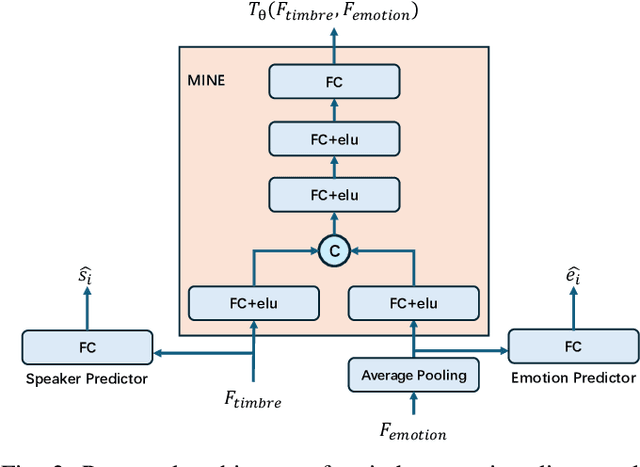

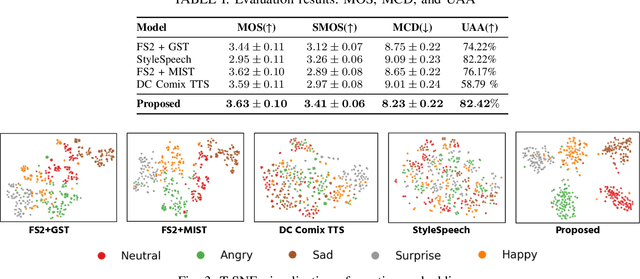

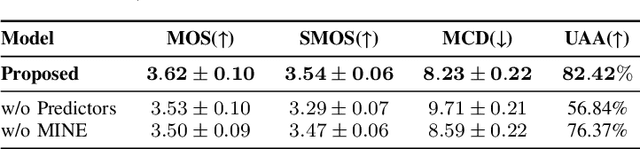

Emotional Text-To-Speech Based on Mutual-Information-Guided Emotion-Timbre Disentanglement

Oct 02, 2025

Abstract:Current emotional Text-To-Speech (TTS) and style transfer methods rely on reference encoders to control global style or emotion vectors, but do not capture nuanced acoustic details of the reference speech. To this end, we propose a novel emotional TTS method that enables fine-grained phoneme-level emotion embedding prediction while disentangling intrinsic attributes of the reference speech. The proposed method employs a style disentanglement method to guide two feature extractors, reducing mutual information between timbre and emotion features, and effectively separating distinct style components from the reference speech. Experimental results demonstrate that our method outperforms baseline TTS systems in generating natural and emotionally rich speech. This work highlights the potential of disentangled and fine-grained representations in advancing the quality and flexibility of emotional TTS systems.

Static Word Embeddings for Sentence Semantic Representation

Jun 05, 2025

Abstract:We propose new static word embeddings optimised for sentence semantic representation. We first extract word embeddings from a pre-trained Sentence Transformer, and improve them with sentence-level principal component analysis, followed by either knowledge distillation or contrastive learning. During inference, we represent sentences by simply averaging word embeddings, which requires little computational cost. We evaluate models on both monolingual and cross-lingual tasks and show that our model substantially outperforms existing static models on sentence semantic tasks, and even rivals a basic Sentence Transformer model (SimCSE) on some data sets. Lastly, we perform a variety of analyses and show that our method successfully removes word embedding components that are irrelevant to sentence semantics, and adjusts the vector norms based on the influence of words on sentence semantics.
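
The inference step described in this abstract (representing a sentence by averaging its word embeddings) can be sketched as follows. This is a minimal illustration only: the embedding table `EMBEDDINGS`, its 3-dimensional values, and the helper names `sentence_vector` and `cosine` are hypothetical and are not taken from the paper, which derives its embeddings from a pre-trained Sentence Transformer.

```python
import math

# Toy 3-dimensional static embeddings (hypothetical values, for illustration only).
EMBEDDINGS = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.1],
    "sleep": [0.1, 0.9, 0.0],
    "stocks": [0.0, 0.1, 0.9],
    "fall": [0.1, 0.2, 0.8],
}


def sentence_vector(sentence, table=EMBEDDINGS):
    """Average the embeddings of the known words; unknown words are skipped."""
    vectors = [table[w] for w in sentence.lower().split() if w in table]
    if not vectors:
        raise ValueError("no known words in sentence")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Sentences that share meaning should score higher than unrelated ones.
sim_close = cosine(sentence_vector("cats sleep"), sentence_vector("dogs sleep"))
sim_far = cosine(sentence_vector("cats sleep"), sentence_vector("stocks fall"))
print(sim_close > sim_far)
```

Because inference is a table lookup plus a mean, its cost grows only with sentence length, which is the low computational cost the abstract refers to.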

Shallow Flow Matching for Coarse-to-Fine Text-to-Speech Synthesis

May 18, 2025

Abstract:We propose a shallow flow matching (SFM) mechanism to enhance flow matching (FM)-based text-to-speech (TTS) models within a coarse-to-fine generation paradigm. SFM constructs intermediate states along the FM paths using coarse output representations. During training, we introduce an orthogonal projection method to adaptively determine the temporal position of these states, and apply a principled construction strategy based on a single-segment piecewise flow. SFM inference starts from the intermediate state rather than pure noise and focuses computation on the latter stages of the FM paths. We integrate SFM into multiple TTS models with a lightweight SFM head. Experiments show that SFM consistently improves the naturalness of synthesized speech in both objective and subjective evaluations, while significantly reducing inference cost when using adaptive-step ODE solvers. Demo and code are available at https://ydqmkkx.github.io/SFMDemo/.
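
To illustrate why starting from an intermediate state saves computation, here is a minimal sketch of generic flow matching sampling with a fixed-step Euler solver. It is not the paper's SFM head or its orthogonal projection method: the velocity field `toy_velocity`, the `TARGET` vector, the start time 0.5, and the fixed step size are all assumptions chosen for illustration (the abstract itself refers to adaptive-step solvers).

```python
def euler_sample(x_start, t_start, velocity, step=0.1):
    """Integrate dx/dt = velocity(x, t) from t_start to t = 1 with fixed Euler steps.

    Returns the final state and the number of velocity evaluations used.
    """
    n_steps = max(1, round((1.0 - t_start) / step))
    h = (1.0 - t_start) / n_steps
    x, evals = list(x_start), 0
    for k in range(n_steps):
        t = t_start + k * h
        v = velocity(x, t)
        evals += 1
        x = [xi + h * vi for xi, vi in zip(x, v)]
    return x, evals


# Hypothetical "clean" feature vector that the toy flow transports samples to.
TARGET = [1.0, -2.0]


def toy_velocity(x, t):
    """Oracle velocity of a straight-line path ending at TARGET (stand-in for a trained model)."""
    return [(ti - xi) / (1.0 - t) for ti, xi in zip(TARGET, x)]


noise = [0.3, 0.7]     # stand-in for a pure-noise sample at t = 0
coarse = [0.8, -1.5]   # stand-in for an intermediate state built from a coarse output

x_full, evals_full = euler_sample(noise, 0.0, toy_velocity)
x_shallow, evals_shallow = euler_sample(coarse, 0.5, toy_velocity)
print(evals_full, evals_shallow)
```

With the same step size, the run that starts at the intermediate time only covers the remaining part of the path, so it needs fewer velocity evaluations to reach the same endpoint in this toy setting.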

Causal Speech Enhancement with Predicting Semantics based on Quantized Self-supervised Learning Features

Dec 26, 2024

Abstract:Real-time speech enhancement (SE) is essential to online speech communication. Causal SE models use only the previous context, whereas predicting future information, such as phoneme continuation, may help in performing causal SE. Phonetic information is often represented by quantizing the latent features of self-supervised learning (SSL) models. This work is the first to incorporate SSL features with causality into an SE model. The causal SSL features are encoded and combined with spectrogram features using feature-wise linear modulation to estimate a mask for enhancing the noisy input speech. Simultaneously, we quantize the causal SSL features using vector quantization to represent phonetic characteristics as semantic tokens. The model not only encodes SSL features but also predicts future semantic tokens through multi-task learning (MTL). Experimental results on the VoiceBank + DEMAND dataset show that our proposed method achieves a PESQ of 2.88, especially with semantic prediction MTL, confirming that semantic prediction plays an important role in causal SE.
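
Feature-wise linear modulation (FiLM), which the abstract uses to combine SSL and spectrogram features, scales and shifts each feature with parameters computed from a conditioning vector. The sketch below shows only that operation for a single frame. The weights, dimensions, and names (`film`, `linear`, `w_gamma`, `w_beta`) are hypothetical and do not reflect the paper's actual architecture.

```python
def linear(weights, bias, vec):
    """Affine map: one output per weight row."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def film(features, condition, w_gamma, b_gamma, w_beta, b_beta):
    """Apply FiLM: out[i] = gamma[i] * features[i] + beta[i],
    where gamma and beta are linear projections of the conditioning vector."""
    gamma = linear(w_gamma, b_gamma, condition)
    beta = linear(w_beta, b_beta, condition)
    return [g * f + b for g, f, b in zip(gamma, features, beta)]


# One frame of spectrogram features (3 dims) and encoded SSL features (2 dims).
spec_frame = [1.0, 2.0, 3.0]
ssl_frame = [0.5, -1.0]

# Hypothetical projection weights; in a real model these would be learned.
w_gamma = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_gamma = [1.0, 1.0, 1.0]
w_beta = [[0.0, 0.0], [2.0, 0.0], [0.0, -1.0]]
b_beta = [0.0, 0.0, 0.5]

modulated = film(spec_frame, ssl_frame, w_gamma, b_gamma, w_beta, b_beta)
print(modulated)
```

In a causal setting, the conditioning vector for a given frame would be computed from past context only, so the modulation itself adds no look-ahead.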

An Environment-Adaptive Position/Force Control Based on Physical Property Estimation

Dec 19, 2024

Abstract:The technology for generating robot actions has significantly contributed to the automation and efficiency of tasks. However, the ability to adapt to objects of different shapes and hardness remains a challenge for general industrial robots. Motion reproduction systems (MRS) replicate previously acquired actions using position and force control, but generating actions for significantly different environments is difficult. Furthermore, methods based on machine learning require the acquisition of a large amount of motion data. This paper proposes a new method that matches the impedance of two pre-recorded action data with the current environmental impedance to generate highly adaptable actions. This method recalculates the command values for position and force based on the current impedance to improve reproducibility in different environments. Experiments conducted under conditions of extreme action impedance, such as position control and force control, confirmed the superiority of the proposed method over MRS. The advantages of this method include using only two sets of motion data, significantly reducing the burden of data acquisition compared to machine learning-based methods, and eliminating concerns about stability by using existing stable control systems. This study contributes to improving robots' environmental adaptability while simplifying the action generation method.

An Empirical Analysis of GPT-4V's Performance on Fashion Aesthetic Evaluation

Oct 31, 2024

Abstract:Fashion aesthetic evaluation is the task of estimating how well the outfits worn by individuals in images suit them. In this work, we examine the zero-shot performance of GPT-4V on this task for the first time. We show that its predictions align fairly well with human judgments on our datasets, and also find that it struggles with ranking outfits in similar colors. The code is available at https://github.com/st-tech/gpt4v-fashion-aesthetic-evaluation.

Construction and Analysis of Impression Caption Dataset for Environmental Sounds

Oct 20, 2024

Abstract:Several datasets that describe the content and order of occurrence of sounds have been released for conversion between environmental sound and text. However, very few texts include information on the impressions humans feel, such as "sharp" and "gorgeous," when they hear environmental sounds. In this study, we constructed a dataset of impression captions for environmental sounds that describe the impressions humans have when hearing these sounds. We used ChatGPT to generate impression captions, and human annotators selected the most appropriate caption for each sound. Our dataset consists of 3,600 impression captions for environmental sounds. To evaluate the appropriateness of the impression captions, we conducted subjective and objective evaluations. Our evaluation results show that appropriate impression captions for environmental sounds can be generated.

Disentangling Likes and Dislikes in Personalized Generative Explainable Recommendation

Oct 17, 2024

Abstract:Recent research on explainable recommendation generally frames the task as a standard text generation problem, and evaluates models simply based on the textual similarity between the predicted and ground-truth explanations. However, this approach fails to consider one crucial aspect of the systems: whether their outputs accurately reflect the users' (post-purchase) sentiments, i.e., whether and why they would like and/or dislike the recommended items. To shed light on this issue, we introduce new datasets and evaluation methods that focus on the users' sentiments. Specifically, we construct the datasets by explicitly extracting users' positive and negative opinions from their post-purchase reviews using an LLM, and propose to evaluate systems based on whether the generated explanations 1) align well with the users' sentiments, and 2) accurately identify both positive and negative opinions of users on the target items. We benchmark several recent models on our datasets and demonstrate that achieving strong performance on existing metrics does not ensure that the generated explanations align well with the users' sentiments. Lastly, we find that existing models can provide more sentiment-aware explanations when the users' (predicted) ratings for the target items are directly fed into the models as input. We will release our code and datasets upon acceptance.

The T05 System for The VoiceMOS Challenge 2024: Transfer Learning from Deep Image Classifier to Naturalness MOS Prediction of High-Quality Synthetic Speech

Sep 14, 2024

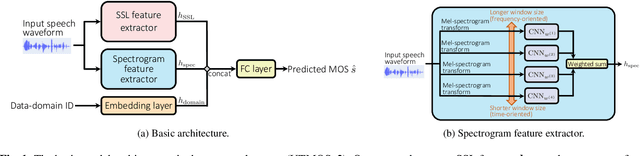

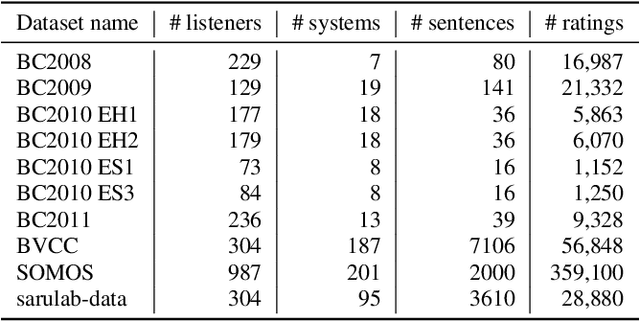

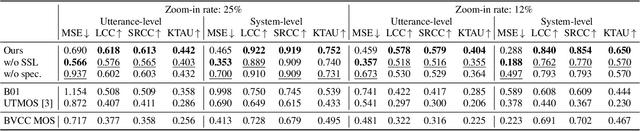

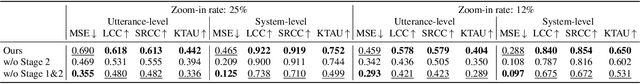

Abstract:We present our system (denoted as T05) for the VoiceMOS Challenge (VMC) 2024. Our system was designed for the VMC 2024 Track 1, which focused on the accurate prediction of naturalness mean opinion score (MOS) for high-quality synthetic speech. In addition to a pretrained self-supervised learning (SSL)-based speech feature extractor, our system incorporates a pretrained image feature extractor to capture differences in synthetic speech observed in speech spectrograms. We first separately train two MOS predictors, one using an SSL-based feature and the other a spectrogram-based feature. Then, we fine-tune the two predictors for better MOS prediction using the fusion of the two extracted features. In the VMC 2024 Track 1, our T05 system achieved first place in 7 out of 16 evaluation metrics and second place in the remaining 9 metrics, with a significant difference compared to those ranked third and below. We also report the results of our ablation study to investigate essential factors of our system.
