Junlin Li

Eliminating VAE for Fast and High-Resolution Generative Detail Restoration

Feb 11, 2026

Abstract:Diffusion models have attained remarkable breakthroughs in the real-world super-resolution (SR) task, albeit with slow inference and high hardware demands. To accelerate inference, recent works like GenDR adopt step distillation to reduce the number of steps to one. However, the memory boundary still restricts the maximum processing size, necessitating tile-by-tile restoration of high-resolution images. Through profiling the pipeline, we pinpoint that the variational auto-encoder (VAE) is the bottleneck of latency and memory. To completely solve the problem, we leverage pixel-(un)shuffle operations to eliminate the VAE, converting the latent-based GenDR into the pixel-space GenDR-Pix. However, upscaling with x8 pixel-shuffle may induce artifacts of repeated patterns. To alleviate the distortion, we propose a multi-stage adversarial distillation to progressively remove the encoder and decoder. Specifically, we utilize generative features from the previous stage models to guide adversarial discrimination. Moreover, we propose random padding to augment generative features and avoid discriminator collapse. We also introduce a masked Fourier space loss to penalize the outliers of amplitude. In addition, we empirically integrate a padding-based self-ensemble with classifier-free guidance to improve inference scaling. Experimental results show that GenDR-Pix achieves 2.8x acceleration and 60% memory saving compared to GenDR with negligible visual degradation, surpassing other one-step diffusion SR methods. Against all odds, GenDR-Pix can restore a 4K image in only 1 second and 6GB.
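
For readers unfamiliar with the pixel-(un)shuffle operations mentioned in the abstract, the following is a minimal, dependency-free sketch of the generic space-to-depth and depth-to-space rearrangement. It is not the GenDR-Pix implementation: the function names, the nested-list image layout, and the channel ordering (chosen here to match the common `channel * r * r + a * r + b` convention) are assumptions made for illustration.

```python
def pixel_unshuffle(img, r):
    """Space-to-depth: C channels of H x W -> C*r*r channels of (H/r) x (W/r).

    `img` is a list of channels, each a list of rows (illustrative layout).
    """
    h, w = len(img[0]), len(img[0][0])
    if h % r or w % r:
        raise ValueError("height and width must be divisible by r")
    out = []
    for ch in img:
        for a in range(r):          # row offset inside each r x r block
            for b in range(r):      # column offset inside each r x r block
                out.append([[ch[i * r + a][j * r + b] for j in range(w // r)]
                            for i in range(h // r)])
    return out


def pixel_shuffle(feat, r):
    """Depth-to-space: C*r*r channels of H x W -> C channels of (H*r) x (W*r)."""
    if len(feat) % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = len(feat) // (r * r)
    h, w = len(feat[0]), len(feat[0][0])
    out = []
    for k in range(c):
        ch = [[0] * (w * r) for _ in range(h * r)]
        for a in range(r):
            for b in range(r):
                src = feat[k * r * r + a * r + b]
                for i in range(h):
                    for j in range(w):
                        ch[i * r + a][j * r + b] = src[i][j]
        out.append(ch)
    return out


# Usage: a 3-channel 16x16 image becomes 192 channels of 2x2 at r = 8,
# and shuffling back recovers the original pixels exactly.
image = [[[c * 256 + y * 16 + x for x in range(16)] for y in range(16)]
         for c in range(3)]
packed = pixel_unshuffle(image, 8)
restored = pixel_shuffle(packed, 8)
```

Both functions only move values around, so the round trip is lossless. With an x8 factor, each output block of 8x8 pixels is produced from a single spatial position of the feature map, which is one plausible reading of why repeated-pattern artifacts can appear.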

A Tri-Dynamic Preprocessing Framework for UGC Video Compression

Dec 18, 2025

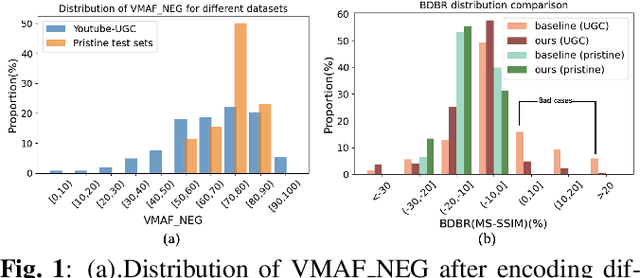

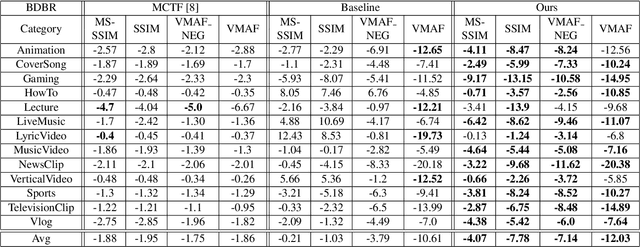

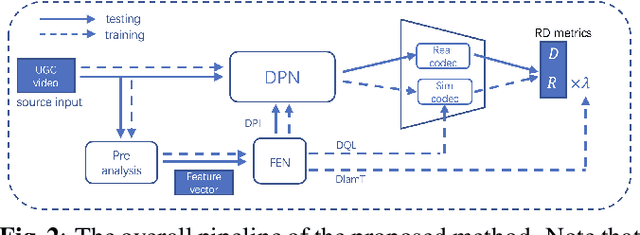

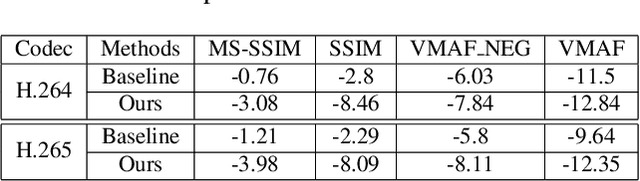

Abstract:In recent years, user generated content (UGC) has become the dominant force in internet traffic. However, UGC videos exhibit a higher degree of variability and diverse characteristics compared to traditional encoding test videos. This variance challenges the effectiveness of data-driven machine learning algorithms for optimizing encoding in the broader context of UGC scenarios. To address this issue, we propose a Tri-Dynamic Preprocessing framework for UGC. Firstly, we employ an adaptive factor to regulate preprocessing intensity. Secondly, an adaptive quantization level is employed to fine-tune the codec simulator. Thirdly, we utilize an adaptive lambda tradeoff to adjust the rate-distortion loss function. Experimental results on large-scale test sets demonstrate that our method attains exceptional performance.

Audio-Visual Cross-Modal Compression for Generative Face Video Coding

Dec 17, 2025

Abstract:Generative face video coding (GFVC) is vital for modern applications like video conferencing, yet existing methods primarily focus on video motion while neglecting the significant bitrate contribution of audio. Despite the well-established correlation between audio and lip movements, this cross-modal coherence has not been systematically exploited for compression. To address this, we propose an Audio-Visual Cross-Modal Compression (AVCC) framework that jointly compresses audio and video streams. Our framework extracts motion information from video and tokenizes audio features, then aligns them through a unified audio-video diffusion process. This allows synchronized reconstruction of both modalities from a shared representation. In extremely low-rate scenarios, AVCC can even reconstruct one modality from the other. Experiments show that AVCC significantly outperforms the Versatile Video Coding (VVC) standard and state-of-the-art GFVC schemes in rate-distortion performance, paving the way for more efficient multimodal communication systems.

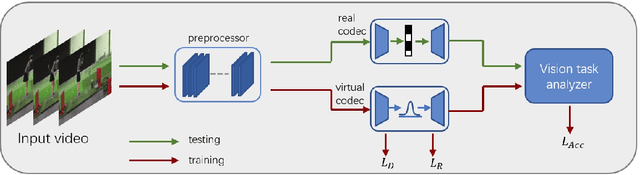

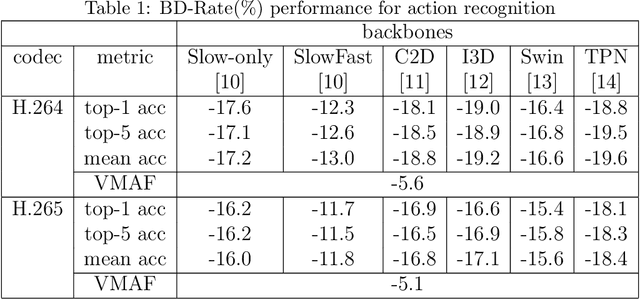

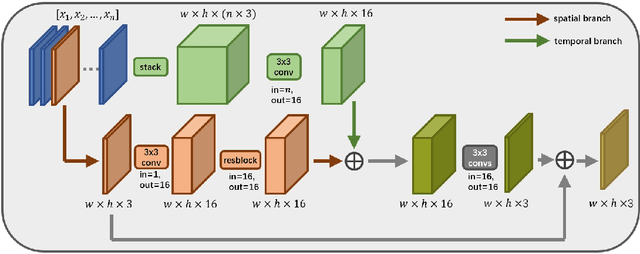

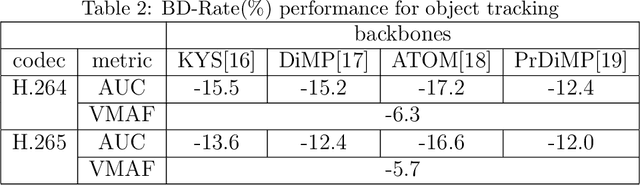

A Preprocessing Framework for Video Machine Vision under Compression

Dec 17, 2025

Abstract:There has been a growing trend in compressing and transmitting videos from terminals for machine vision tasks. Nevertheless, most video coding optimization methods focus on minimizing distortion according to human perceptual metrics, overlooking the heightened demands posed by machine vision systems. In this paper, we propose a video preprocessing framework tailored for machine vision tasks to address this challenge. The proposed method incorporates a neural preprocessor that retains crucial information for subsequent tasks, thereby boosting rate-accuracy performance. We further introduce a differentiable virtual codec to provide constraints on rate and distortion during the training stage. At test time, we directly apply widely used standard codecs, so our solution can be easily applied to real-world scenarios. We conducted extensive experiments evaluating our compression method on two typical downstream tasks with various backbone networks. The experimental results indicate that our approach can save over 15% of bitrate compared to using only the standard codec anchor version.

Generative Preprocessing for Image Compression with Pre-trained Diffusion Models

Dec 17, 2025

Abstract:Preprocessing is a well-established technique for optimizing compression, yet existing methods are predominantly Rate-Distortion (R-D) optimized and constrained by pixel-level fidelity. This work pioneers a shift towards Rate-Perception (R-P) optimization by, for the first time, adapting a large-scale pre-trained diffusion model for compression preprocessing. We propose a two-stage framework: first, we distill the multi-step Stable Diffusion 2.1 into a compact, one-step image-to-image model using Consistent Score Identity Distillation (CiD). Second, we perform a parameter-efficient fine-tuning of the distilled model's attention modules, guided by a Rate-Perception loss and a differentiable codec surrogate. Our method seamlessly integrates with standard codecs without any modification and leverages the model's powerful generative priors to enhance texture and mitigate artifacts. Experiments show substantial R-P gains, achieving up to a 30.13% BD-rate reduction in DISTS on the Kodak dataset and delivering superior subjective visual quality.

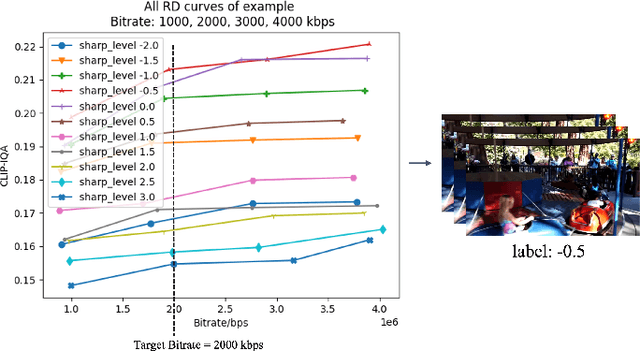

Region-Adaptive Video Sharpening via Rate-Perception Optimization

Aug 12, 2025

Abstract:Sharpening is a widely adopted video enhancement technique. However, uniform sharpening intensity ignores texture variations, degrading video quality. Sharpening also increases bitrate, and there's a lack of techniques to optimally allocate these additional bits across diverse regions. Thus, this paper proposes RPO-AdaSharp, an end-to-end region-adaptive video sharpening model for both perceptual enhancement and bitrate savings. We use the coding tree unit (CTU) partition mask as prior information to guide and constrain the allocation of increased bits. Experiments on benchmarks demonstrate the effectiveness of the proposed model qualitatively and quantitatively.

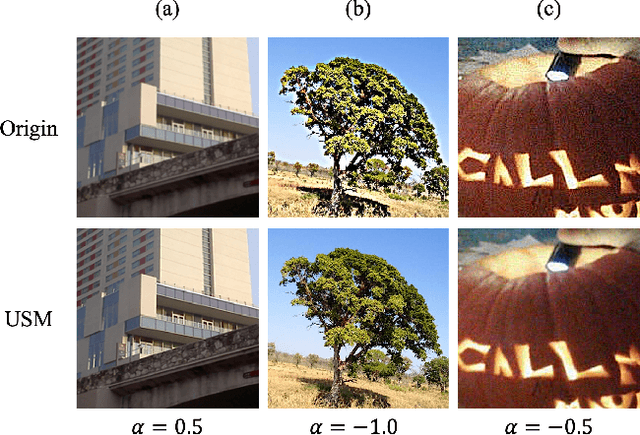

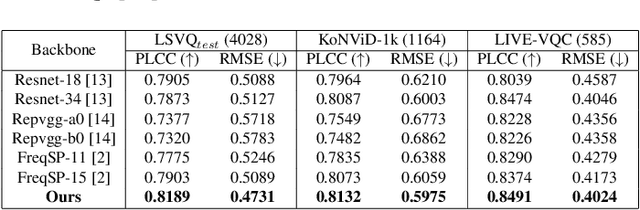

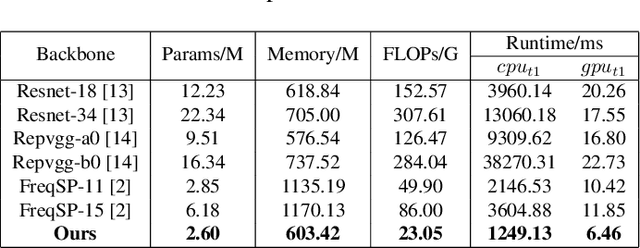

Frequency-Assisted Adaptive Sharpening Scheme Considering Bitrate and Quality Tradeoff

Aug 12, 2025

Abstract:Sharpening is a widely adopted technique to improve video quality, which can effectively emphasize textures and alleviate blurring. However, increasing the sharpening level comes with a higher video bitrate, resulting in degraded Quality of Service (QoS). Furthermore, the video quality does not necessarily improve with increasing sharpening levels, leading to issues such as over-sharpening. Clearly, it is essential to figure out how to boost video quality with a proper sharpening level while also controlling bandwidth costs effectively. This paper thus proposes a novel Frequency-assisted Sharpening level Prediction model (FreqSP). We first label each video with the sharpening level correlating to the optimal bitrate and quality tradeoff as ground truth. Then taking uncompressed source videos as inputs, the proposed FreqSP leverages intricate CNN features and high-frequency components to estimate the optimal sharpening level. Extensive experiments demonstrate the effectiveness of our method.

Adaptive High-Frequency Preprocessing for Video Coding

Aug 12, 2025

Abstract:High-frequency components are crucial for maintaining video clarity and realism, but they also significantly impact coding bitrate, resulting in increased bandwidth and storage costs. This paper presents an end-to-end learning-based framework for adaptive high-frequency preprocessing to enhance subjective quality and save bitrate in video coding. The framework employs the Frequency-attentive Feature pyramid Prediction Network (FFPN) to predict the optimal high-frequency preprocessing strategy, guiding subsequent filtering operators to achieve the optimal tradeoff between bitrate and quality after compression. For training FFPN, we pseudo-label each training video with the optimal strategy, determined by comparing the rate-distortion (RD) performance across different preprocessing types and strengths. Distortion is measured using the latest quality assessment metric. Comprehensive evaluations on multiple datasets demonstrate the visually appealing enhancement capabilities and bitrate savings achieved by our framework.

POQD: Performance-Oriented Query Decomposer for Multi-vector retrieval

May 25, 2025

Abstract:Although Multi-Vector Retrieval (MVR) has achieved the state of the art on many information retrieval (IR) tasks, its performance highly depends on how queries are decomposed into smaller pieces, say phrases or tokens. However, optimizing query decomposition for MVR performance is not end-to-end differentiable. Even worse, jointly solving this problem and training the downstream retrieval-based systems, say RAG systems, could be highly inefficient. To overcome these challenges, we propose Performance-Oriented Query Decomposer (POQD), a novel query decomposition framework for MVR. POQD leverages one LLM for query decomposition and searches for the optimal prompt with an LLM-based optimizer. We further propose an end-to-end training algorithm to alternately optimize the prompt for query decomposition and the downstream models. This algorithm can achieve superior MVR performance at a reasonable training cost, as our theoretical analysis suggests. POQD can be integrated seamlessly into arbitrary retrieval-based systems such as Retrieval-Augmented Generation (RAG) systems. Extensive empirical studies on representative RAG-based QA tasks show that POQD outperforms existing query decomposition strategies in both retrieval performance and end-to-end QA accuracy. POQD is available at https://github.com/PKU-SDS-lab/POQD-ICML25.

Neural Parameter Search for Slimmer Fine-Tuned Models and Better Transfer

May 24, 2025

Abstract:Foundation models and their checkpoints have significantly advanced deep learning, boosting performance across various applications. However, fine-tuned models often struggle outside their specific domains and exhibit considerable redundancy. Recent studies suggest that combining a pruned fine-tuned model with the original pre-trained model can mitigate forgetting, reduce interference when merging model parameters across tasks, and improve compression efficiency. In this context, developing an effective pruning strategy for fine-tuned models is crucial. Leveraging the advantages of the task vector mechanism, we preprocess fine-tuned models by calculating the differences between them and the original model. Recognizing that different task vector subspaces contribute variably to model performance, we introduce a novel method called Neural Parameter Search (NPS-Pruning) for slimming down fine-tuned models. This method enhances pruning efficiency by searching through neural parameters of task vectors within low-rank subspaces. Our method has three key applications: enhancing knowledge transfer through pairwise model interpolation, facilitating effective knowledge fusion via model merging, and enabling the deployment of compressed models that retain near-original performance while significantly reducing storage costs. Extensive experiments across vision, NLP, and multi-modal benchmarks demonstrate the effectiveness and robustness of our approach, resulting in substantial performance gains. The code is publicly available at: https://github.com/duguodong7/NPS-Pruning.
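
The task vector idea in the abstract (the difference between fine-tuned and pre-trained weights) can be illustrated with a small sketch. This is not NPS-Pruning: the pruning step below is plain global magnitude pruning, swapped in as a simple stand-in for the paper's search over low-rank subspaces, and the function names, the dict-of-lists weight layout, and the `keep_ratio` and `scale` parameters are all hypothetical.

```python
def task_vector(pretrained, finetuned):
    """Per-parameter difference: fine-tuned weights minus pre-trained weights."""
    return {name: [f - p for f, p in zip(finetuned[name], pretrained[name])]
            for name in pretrained}


def magnitude_prune(vector, keep_ratio):
    """Keep the largest-magnitude entries of the task vector, zero the rest.

    Stand-in for illustration only; NOT the NPS-Pruning search procedure.
    Ties at the threshold are kept, so slightly more than keep_ratio may survive.
    """
    magnitudes = sorted((abs(v) for vals in vector.values() for v in vals),
                        reverse=True)
    k = max(1, int(len(magnitudes) * keep_ratio))
    threshold = magnitudes[k - 1]
    return {name: [v if abs(v) >= threshold else 0.0 for v in vals]
            for name, vals in vector.items()}


def apply_task_vector(pretrained, vector, scale=1.0):
    """Rebuild a model as pre-trained weights plus a (scaled) task vector."""
    return {name: [p + scale * v for p, v in zip(pretrained[name], vector[name])]
            for name in pretrained}


# Usage with toy weights (hypothetical values chosen to be exact in floating point).
pretrained = {"w": [1.0, 2.0, 3.0, 4.0]}
finetuned = {"w": [1.5, 2.0, 2.0, 4.25]}

tv = task_vector(pretrained, finetuned)          # {"w": [0.5, 0.0, -1.0, 0.25]}
sparse_tv = magnitude_prune(tv, keep_ratio=0.5)  # keeps the two largest entries
slim_model = apply_task_vector(pretrained, sparse_tv)
```

Only the sparse task vector needs to be stored alongside the shared pre-trained model, which is where the storage saving described in the abstract would come from under this simplified view.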
