Clarence W. de Silva

What Has Been Overlooked in Contrastive Source-Free Domain Adaptation: Leveraging Source-Informed Latent Augmentation within Neighborhood Context

Dec 18, 2024

Abstract:Source-free domain adaptation (SFDA) involves adapting a model originally trained using a labeled dataset (*source domain*) to perform effectively on an unlabeled dataset (*target domain*) without relying on any source data during adaptation. This adaptation is especially crucial when significant disparities in data distributions exist between the two domains and when there are privacy concerns regarding the source model's training data. The absence of access to source data during adaptation makes it challenging to analytically estimate the domain gap. To tackle this issue, various techniques have been proposed, such as unsupervised clustering, contrastive learning, and continual learning. In this paper, we first conduct an extensive theoretical analysis of SFDA based on contrastive learning, primarily because it has demonstrated superior performance compared to other techniques. Motivated by the obtained insights, we then introduce a straightforward yet highly effective latent augmentation method tailored for contrastive SFDA. This augmentation method leverages the dispersion of latent features within the neighborhood of the query sample, guided by the source pre-trained model, to enhance the informativeness of positive keys. Our approach, based on a single InfoNCE-based contrastive loss, outperforms state-of-the-art SFDA methods on widely recognized benchmark datasets.
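
For readers unfamiliar with the InfoNCE loss mentioned in the abstract, the following is a minimal sketch of its generic form for one query, one positive key, and a set of negative keys. It is not the paper's latent augmentation method; the function name `info_nce`, the cosine-similarity scoring, and the default temperature are assumptions made for illustration.

```python
import numpy as np

def info_nce(query, positive, negatives, temperature=0.07):
    """Generic InfoNCE loss for one query (illustrative, not the paper's code).

    query:     (d,) latent feature of the query sample
    positive:  (d,) latent feature of the positive key
    negatives: (K, d) latent features of the negative keys
    """
    def normalize(x):
        # L2-normalize so that dot products are cosine similarities
        return x / np.linalg.norm(x, axis=-1, keepdims=True)

    q = normalize(query)
    k_pos = normalize(positive)
    k_neg = normalize(negatives)

    # Index 0 holds the positive logit, the rest are negative logits
    logits = np.concatenate(([q @ k_pos], k_neg @ q)) / temperature
    logits = logits - logits.max()  # shift for numerical stability

    # Negative log-probability of picking the positive key
    log_prob_pos = logits[0] - np.log(np.exp(logits).sum())
    return float(-log_prob_pos)
```

The loss is small when the query is much closer to its positive key than to any negative key, which is why the choice of positive keys matters for contrastive adaptation.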

Ensemble diverse hypotheses and knowledge distillation for unsupervised cross-subject adaptation

Apr 15, 2022

Abstract:Recognizing human locomotion intent and activities is important for controlling wearable robots while walking in complex environments. However, human-robot interface signals are usually user-dependent, so a classifier trained on source subjects performs poorly on new subjects. To address this issue, this paper designs the ensemble diverse hypotheses and knowledge distillation (EDHKD) method to realize unsupervised cross-subject adaptation. EDH mitigates the divergence between labeled data of source subjects and unlabeled data of target subjects to accurately classify the locomotion modes of target subjects without labeling their data. Compared to previous domain adaptation methods based on a single learner, which may learn only a subset of features from the input signals, EDH learns diverse features by incorporating multiple diverse feature generators, which increases the accuracy and decreases the variance of classifying target data but sacrifices efficiency. To solve this problem, EDHKD (student) distills the knowledge from EDH (teacher) into a single network that remains efficient and accurate. The performance of EDHKD is theoretically proved and experimentally validated on a 2D moon dataset and two public human locomotion datasets. Experimental results show that EDHKD outperforms all other methods. EDHKD classifies target data with 96.9%, 94.4%, and 97.4% average accuracy on these three datasets with a short computing time (1 ms). Compared to a benchmark (BM) method, EDHKD increases the average accuracy of classifying the locomotion modes of target subjects by 1.3% and 7.1%. EDHKD also stabilizes the learning curves. Therefore, EDHKD is significant for increasing the generalization ability and efficiency of human intent prediction and human activity recognition systems, which will improve human-robot interactions.
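
The teacher-student idea in this abstract can be illustrated with a generic knowledge distillation sketch: the predictions of several teacher networks are averaged into soft labels, and a single student is trained to match them. This is a standard formulation, not the EDHKD implementation; the function names, the temperature value, and the use of a plain cross-entropy objective are assumptions.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def ensemble_soft_labels(teacher_logits, temperature=2.0):
    """Average the teachers' softened predictions.

    teacher_logits: (n_teachers, n_samples, n_classes)
    returns:        (n_samples, n_classes) soft labels
    """
    return softmax(teacher_logits, temperature).mean(axis=0)

def distillation_loss(student_logits, soft_labels, temperature=2.0):
    """Cross-entropy between the soft labels and the student's prediction."""
    log_p = np.log(softmax(student_logits, temperature) + 1e-12)
    return float(-(soft_labels * log_p).sum(axis=-1).mean())
```

At inference time only the student is evaluated, which is how a distilled model can retain most of the ensemble's accuracy at the cost of a single forward pass.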

Preserving Domain Private Representation via Mutual Information Maximization

Jan 09, 2022

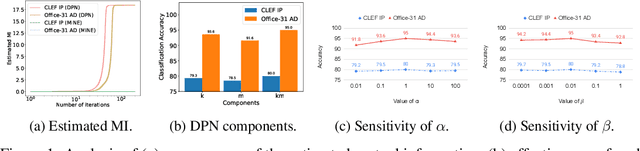

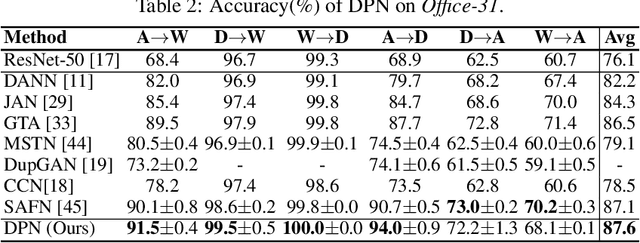

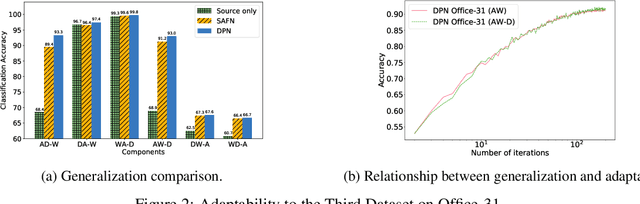

Abstract:Recent advances in unsupervised domain adaptation have shown that mitigating the domain divergence by extracting a domain-invariant representation can significantly improve the generalization of a model to an unlabeled data domain. Nevertheless, existing methods fail to effectively preserve the representation that is private to the label-missing domain, which can adversely affect generalization. In this paper, we propose an approach to preserve such a representation so that the latent distribution of the unlabeled domain can represent both the domain-invariant features and the individual characteristics that are private to the unlabeled domain. In particular, we demonstrate that maximizing the mutual information between the unlabeled domain and its latent space while mitigating the domain divergence can achieve such preservation. We also validate, theoretically and empirically, that preserving the representation that is private to the unlabeled domain is necessary for cross-domain generalization. Our approach outperforms state-of-the-art methods on several public datasets.

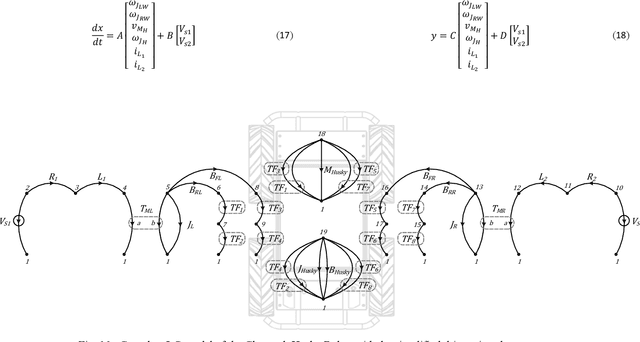

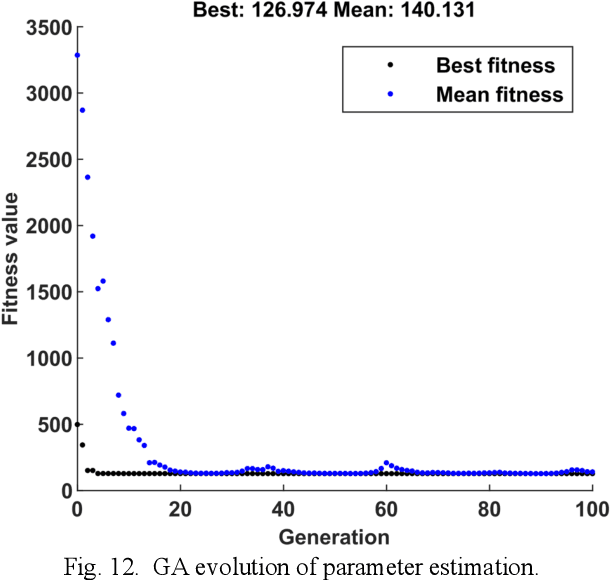

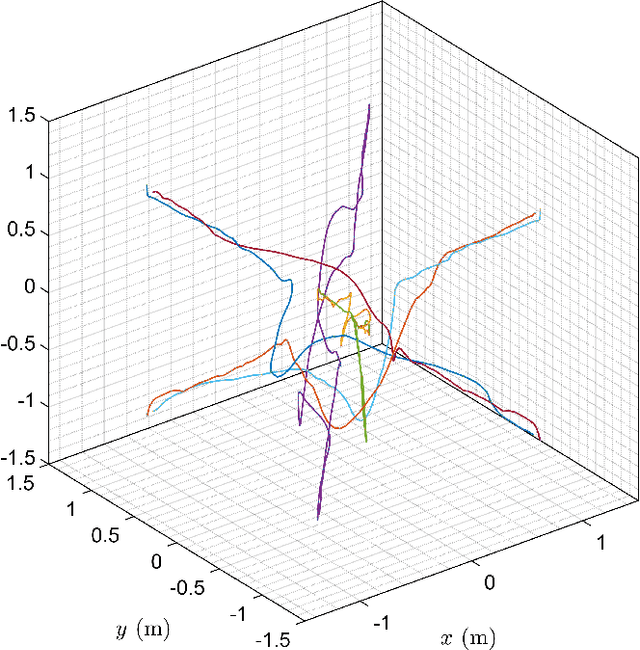

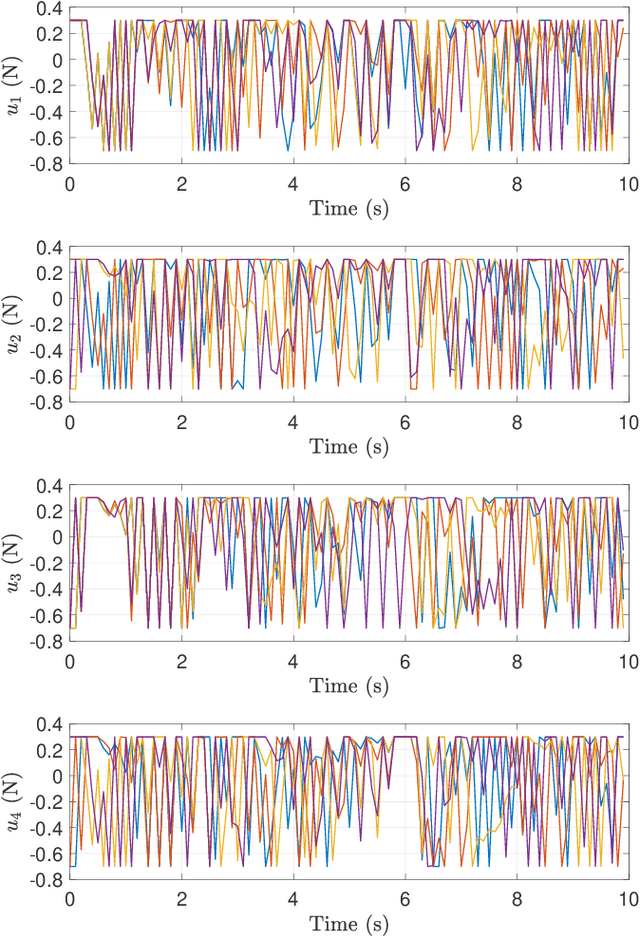

Dynamic Modeling and Simulation of a Four-wheel Skid-Steer Mobile Robot using Linear Graphs

Oct 01, 2021

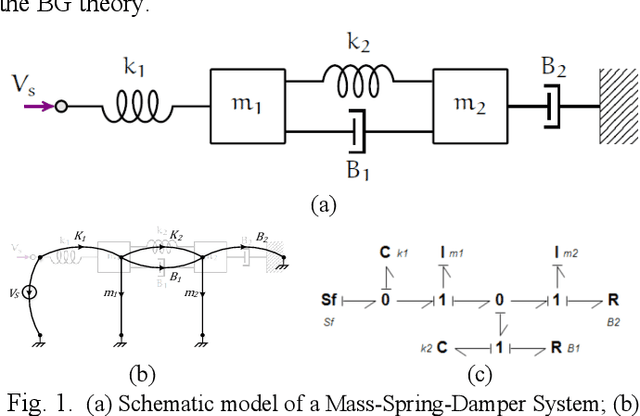

Abstract:This paper presents the application of the concepts and approaches of linear graph (LG) theory in the modeling and simulation of a 4-wheel skid-steer mobile robotic system. An LG representation of the system is proposed and the accompanying state-space model of the dynamics of a mobile robot system is evaluated using the associated LGtheory MATLAB toolbox, which was developed in our lab. A genetic algorithm (GA)-based parameter estimation method is employed to determine the system parameters, which leads to a very accurate simulation of the model. The developed model is then evaluated and validated by comparing the simulated LG model trajectory with the trajectory of a ROS Gazebo simulated robot and experimental data obtained from the physical robotic system. The obtained results demonstrate that the proposed LG model, combined with the GA parameter estimation process, produces a highly accurate method of modeling and simulating a mobile robotic system.

Data-Driven Predictive Control for Multi-Agent Decision Making With Chance Constraints

Nov 06, 2020

Abstract:Recent literature has devoted substantial effort to multi-agent decision-making problems. In particular, the model predictive control (MPC) methodology has demonstrated its effectiveness in various applications, such as mobile robots, unmanned vehicles, and drones. Nevertheless, in many scenarios involving MPC, accurate and effective system identification is a commonly encountered challenge. As a consequence, the overall system performance can be significantly weakened when the traditional MPC algorithm is adopted under such circumstances. To address this shortcoming, this paper investigates an alternative data-driven approach to solve the multi-agent decision-making problem. Using a modified methodology with closed-loop input/output measurements that comply with the appropriate persistency of excitation condition, a non-parametric predictive model is constructed. This non-parametric predictive model alleviates the heavy computational burden of the optimization procedures typical of alternative methodologies that require open-loop input/output data collection and parametric system identification. Then, with a conservative approximation of the probabilistic chance constraints for the MPC problem, a deterministic optimization problem is formulated and solved efficiently. This data-driven approach is also shown to preserve good robustness properties. Finally, a multi-drone system is used to demonstrate the practical appeal and effectiveness of the approach in achieving very good system performance.

Accelerated Hierarchical ADMM for Nonconvex Optimization in Multi-Agent Decision Making

Nov 01, 2020

Abstract:Distributed optimization is widely used to solve large-scale problems effectively in a localized and coordinated manner. Accordingly, distributed model predictive control (DMPC) has become a promising approach, particularly for decision-making tasks in multi-agent systems. However, the typical deployment of such DMPC frameworks involves nonlinear processes with a large number of nonconvex constraints. To handle these constraints, this work presents a hierarchical three-block alternating direction method of multipliers (ADMM) approach to solve the nonconvex optimization problem that arises in such multi-agent decision-making problems. First, an additional slack variable is introduced to relax the original large-scale nonconvex optimization problem, such that the intractable nonconvex coupling constraints are suitably related to the distributed agents. Then, a hierarchical ADMM, with outer-loop iterations by the augmented Lagrangian method (ALM) and inner-loop iterations by a three-block semi-proximal ADMM, is used to solve the resulting relaxed nonconvex optimization problem. Additionally, it is shown that an appropriate stationary point exists for the hierarchical stages of the algorithm, as required for convergence. Next, approximate optimization with a barrier method is applied to improve the computational efficiency. Finally, a multi-agent system involving decision-making for multiple unmanned aerial vehicles (UAVs) is used to demonstrate the effectiveness of the proposed method in terms of attained performance and computational efficiency.

How does the structure embedded in learning policy affect learning quadruped locomotion?

Aug 29, 2020

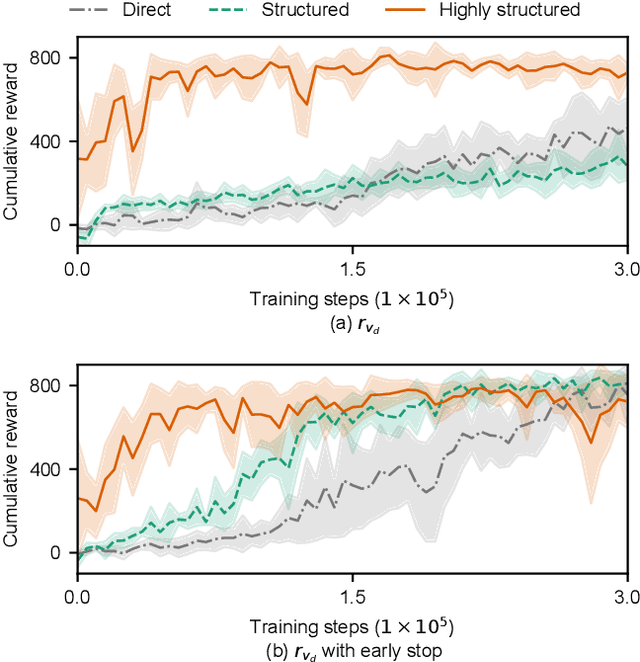

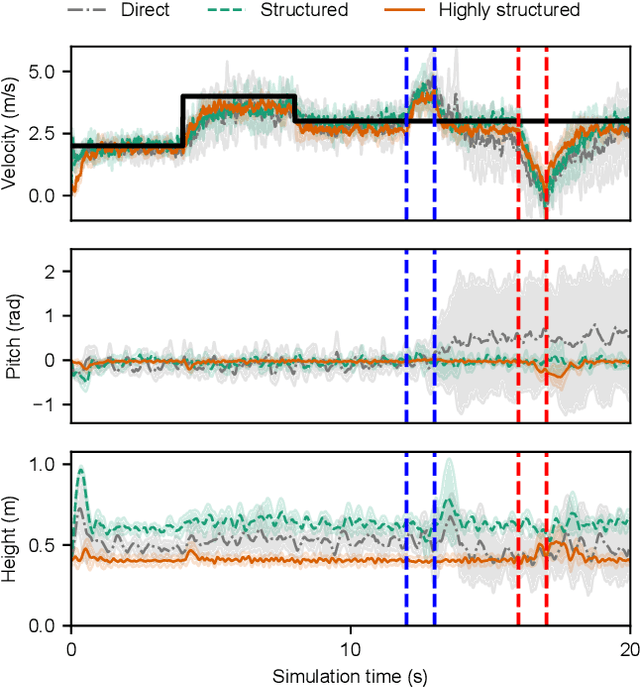

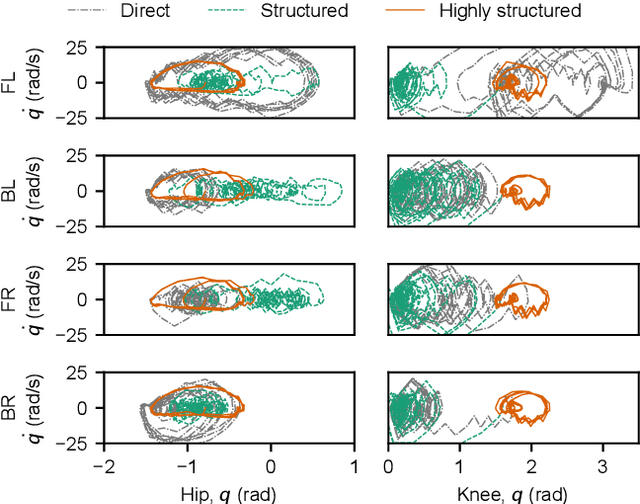

Abstract:Reinforcement learning (RL) is a popular data-driven method that has demonstrated great success in robotics. Previous works usually focus on learning an end-to-end (direct) policy to directly output joint torques. While the direct policy seems convenient, the resultant performance may not meet our expectations. To improve its performance, more sophisticated reward functions or more structured policies can be utilized. This paper focuses on the latter because the structured policy is more intuitive and can inherit insights from previous model-based controllers. It is unsurprising that the structure, such as a better choice of the action space and constraints of motion trajectory, may benefit the training process and the final performance of the policy at the cost of generality, but the quantitative effect is still unclear. To analyze the effect of the structure quantitatively, this paper investigates three policies with different levels of structure in learning quadruped locomotion: a direct policy, a structured policy, and a highly structured policy. The structured policy is trained to learn a task-space impedance controller and the highly structured policy learns a controller tailored for trot running, which we adopt from previous work. To evaluate trained policies, we design a simulation experiment to track different desired velocities under force disturbances. Simulation results show that structured policy and highly structured policy require 1/3 and 3/4 fewer training steps than the direct policy to achieve a similar level of cumulative reward, and seem more robust and efficient than the direct policy. We highlight that the structure embedded in the policies significantly affects the overall performance of learning a complicated task when complex dynamics are involved, such as legged locomotion.
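
As background for the "task-space impedance controller" named in this abstract, the sketch below shows the textbook form of such a controller: a virtual spring-damper force at the foot is mapped to joint torques through the transpose of the leg Jacobian. The abstract does not give the controller's details, so the function name, argument names, and gain structure here are assumptions for illustration only.

```python
import numpy as np

def impedance_torques(jacobian, x_des, x, v_des, v, kp, kd):
    """Textbook task-space impedance law (illustrative sketch).

    jacobian: (m, n) maps joint velocities to task-space velocities
    x_des, x: (m,) desired and measured task-space position
    v_des, v: (m,) desired and measured task-space velocity
    kp, kd:   (m, m) stiffness and damping gain matrices
    returns:  (n,) joint torques
    """
    # Virtual spring-damper force in task space
    force = kp @ (x_des - x) + kd @ (v_des - v)
    # Map the task-space force to joint torques
    return jacobian.T @ force
```

In a structured policy of this kind, the learned policy would typically output quantities such as the desired position or the gains rather than raw joint torques, which is one way prior knowledge from model-based control can be embedded.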

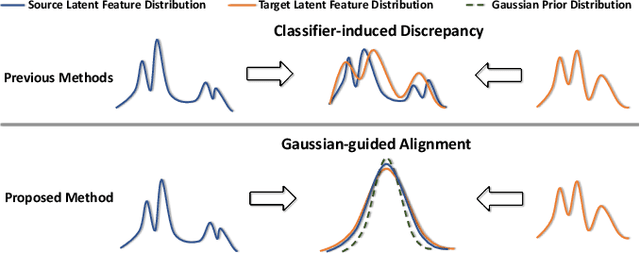

Discriminative Feature Alignment: Improving Transferability of Unsupervised Domain Adaptation by Gaussian-guided Latent Alignment

Jul 16, 2020

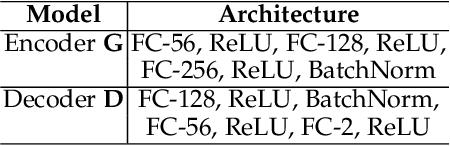

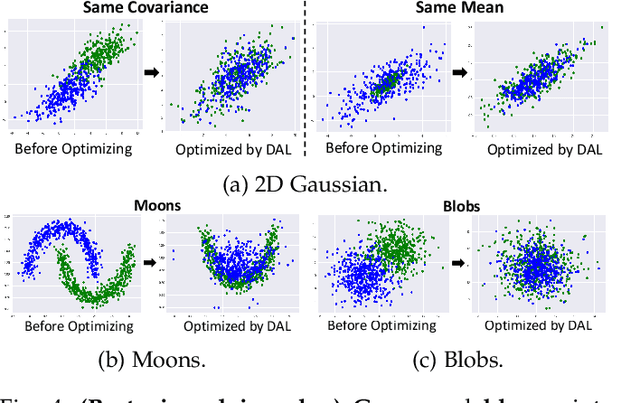

Abstract:In this study, we focus on the unsupervised domain adaptation problem where an approximate inference model is to be learned from a labeled data domain and expected to generalize well to an unlabeled data domain. The success of unsupervised domain adaptation largely relies on cross-domain feature alignment. Previous work has attempted to directly align latent features using classifier-induced discrepancies. Nevertheless, a common feature space cannot always be learned via this direct feature alignment, especially when a large domain gap exists. To solve this problem, we introduce a Gaussian-guided latent alignment approach to align the latent feature distributions of the two domains under the guidance of the prior distribution. In this indirect way, the distributions over the samples from the two domains are constructed on a common feature space, i.e., the space of the prior, which promotes better feature alignment. To effectively align the target latent distribution with this prior distribution, we also propose a novel unpaired L1-distance that takes advantage of the encoder-decoder formulation. Extensive evaluations on nine benchmark datasets validate the superior knowledge transferability of the proposed method, which outperforms state-of-the-art methods, and its versatility, as it significantly improves existing work.

Teach Biped Robots to Walk via Gait Principles and Reinforcement Learning with Adversarial Critics

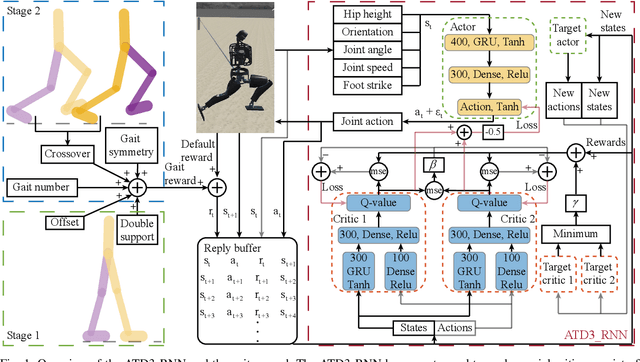

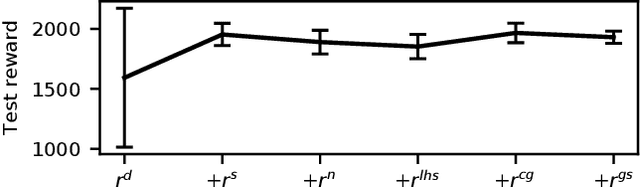

Oct 22, 2019

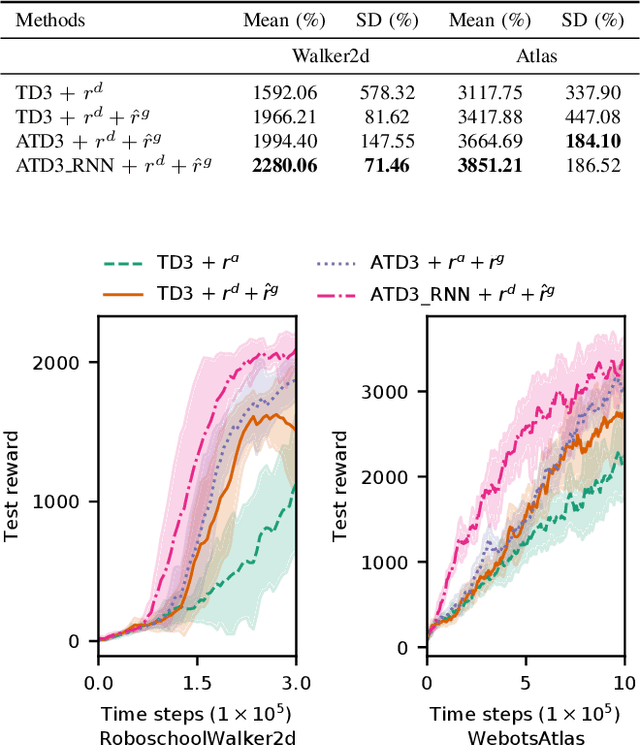

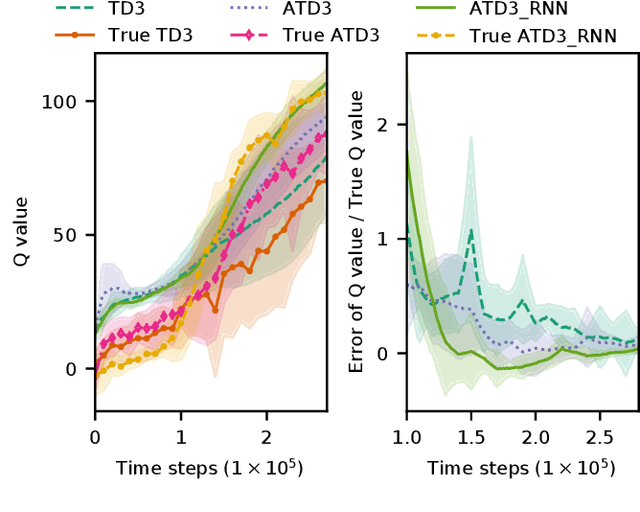

Abstract:Controlling a biped robot to walk stably is a challenging task considering its nonlinearity and hybrid dynamics. Reinforcement learning can address these issues by directly mapping the observed states to optimal actions that maximize the cumulative reward. However, the local minima caused by unsuitable rewards and the overestimation of the cumulative reward impede the maximization of the cumulative reward. To increase the cumulative reward, this paper designs a gait reward based on walking principles, which compensates for the local minima of unnatural motions. In addition, an Adversarial Twin Delayed Deep Deterministic (ATD3) policy gradient algorithm with a recurrent neural network (RNN) is proposed to further boost the cumulative reward by mitigating the overestimation of the cumulative reward. Experimental results in the Roboschool Walker2d and Webots Atlas simulators indicate that the test rewards increase by 23.50% and 9.63% after adding the gait reward. The test rewards further increase by 15.96% and 12.68% after using the ATD3_RNN, possibly because the ATD3_RNN decreases the error of estimating the cumulative reward from 19.86% to 3.35%. Moreover, the cosine kinetic similarity between the human and the biped robot trained with the gait reward and ATD3_RNN increases by over 69.23%. Consequently, the designed gait reward and ATD3_RNN boost the cumulative reward and teach biped robots to walk better.

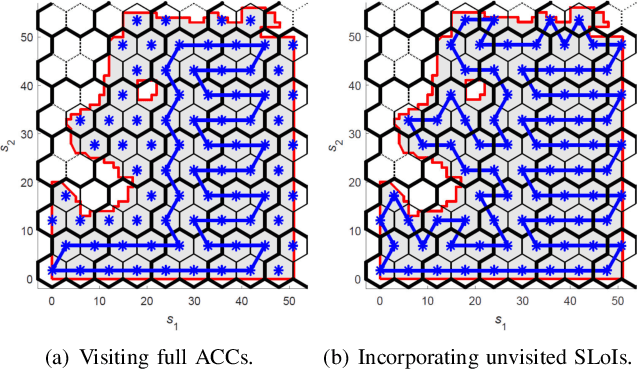

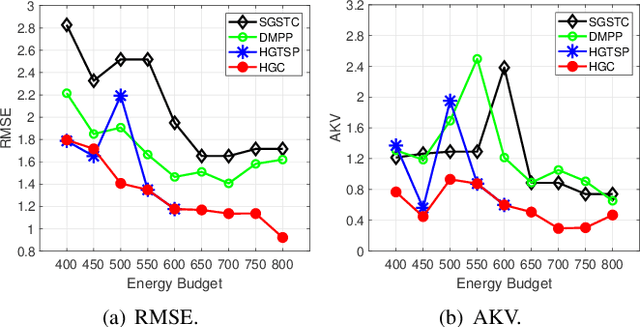

Coverage Sampling Planner for UAV-enabled Environmental Exploration and Field Mapping

Jul 12, 2019

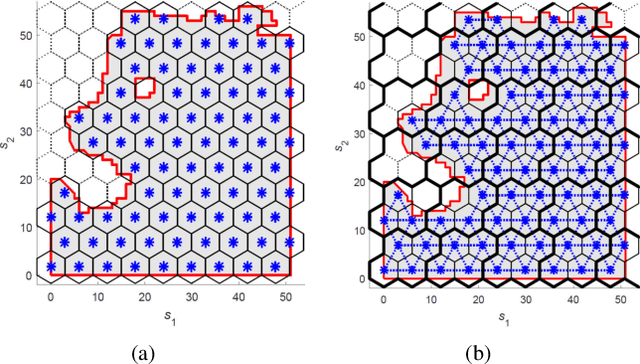

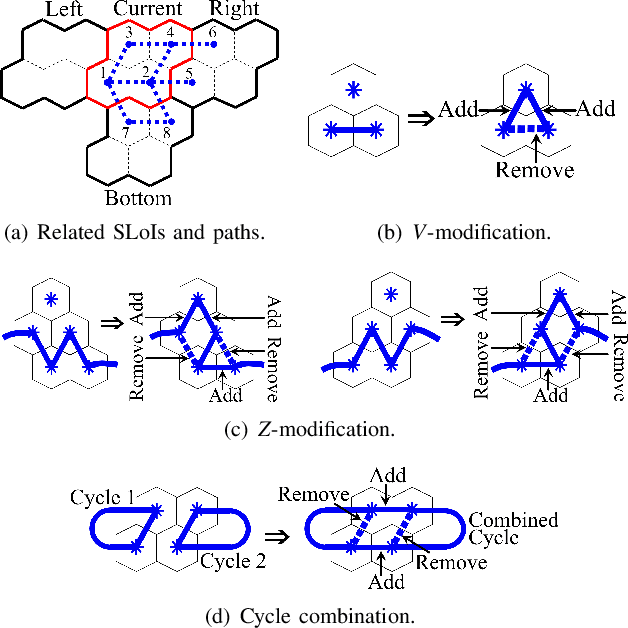

Abstract:Unmanned Aerial Vehicles (UAVs) have been used for environmental monitoring owing to their capabilities of mobile sensing, autonomous navigation, and remote operation. However, in real-world applications, the limited on-board resources (e.g., power supply) of UAVs constrain the coverage of the monitored area and the number of acquired samples, which hinders the performance of field estimation and mapping. Therefore, the issue of constrained resources calls for an efficient sampling planner to schedule UAV-based sensing tasks in environmental monitoring. This paper presents a mission planner of coverage sampling and path planning for a UAV-enabled mobile sensor to effectively explore and map an unknown environment that is modeled as a random field. The proposed planner can generate a coverage path with an optimal coverage density for exploratory sampling, with the associated energy cost subject to a power supply constraint. The performance of the developed framework is evaluated and compared with existing state-of-the-art algorithms, using a real-world dataset collected from an environmental monitoring program as well as physical field experiments. The experimental results illustrate the reliability and accuracy of the presented coverage sampling planner in a prior survey for environmental exploration and field mapping.
