Teng Li

State Key Laboratory of Millimeter Waves, Southeast University, Nanjing, China, Purple Mountain Laboratories, Nanjing, China

The Llama 4 Herd: Architecture, Training, Evaluation, and Deployment Notes

Jan 15, 2026

Abstract:This document consolidates publicly reported technical details about Meta's Llama 4 model family. It summarizes (i) released variants (Scout and Maverick) and the broader herd context including the previewed Behemoth teacher model, (ii) architectural characteristics beyond a high-level MoE description covering routed/shared-expert structure, early-fusion multimodality, and long-context design elements reported for Scout (iRoPE and length generalization strategies), (iii) training disclosures spanning pre-training, mid-training for long-context extension, and post-training methodology (lightweight SFT, online RL, and lightweight DPO) as described in release materials, (iv) developer-reported benchmark results for both base and instruction-tuned checkpoints, and (v) practical deployment constraints observed across major serving environments, including provider-specific context limits and quantization packaging. The manuscript also summarizes licensing obligations relevant to redistribution and derivative naming, and reviews publicly described safeguards and evaluation practices. The goal is to provide a compact technical reference for researchers and practitioners who need precise, source-backed facts about Llama 4.

RealCam-Vid: High-resolution Video Dataset with Dynamic Scenes and Metric-scale Camera Movements

Apr 11, 2025

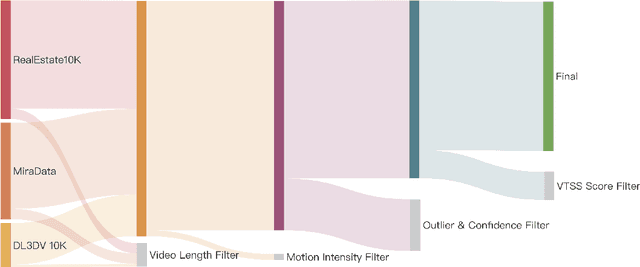

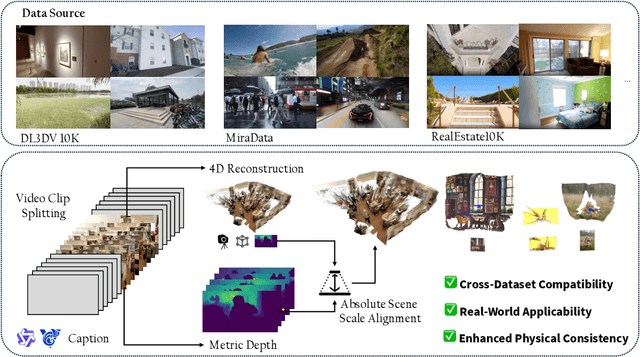

Abstract:Recent advances in camera-controllable video generation have been constrained by the reliance on static-scene datasets with relative-scale camera annotations, such as RealEstate10K. While these datasets enable basic viewpoint control, they fail to capture dynamic scene interactions and lack metric-scale geometric consistency, which is critical for synthesizing realistic object motions and precise camera trajectories in complex environments. To bridge this gap, we introduce the first fully open-source, high-resolution dynamic-scene dataset with metric-scale camera annotations, available at https://github.com/ZGCTroy/RealCam-Vid.

Uni-Gaussians: Unifying Camera and Lidar Simulation with Gaussians for Dynamic Driving Scenarios

Mar 11, 2025

Abstract:Ensuring the safety of autonomous vehicles necessitates comprehensive simulation of multi-sensor data, encompassing inputs from both cameras and LiDAR sensors, across various dynamic driving scenarios. Neural rendering techniques, which utilize collected raw sensor data to simulate these dynamic environments, have emerged as a leading methodology. While NeRF-based approaches can uniformly represent scenes for rendering data from both camera and LiDAR, they are hindered by slow rendering speeds due to dense sampling. Conversely, Gaussian Splatting-based methods employ Gaussian primitives for scene representation and achieve rapid rendering through rasterization. However, these rasterization-based techniques struggle to accurately model non-linear optical sensors. This limitation restricts their applicability to sensors beyond pinhole cameras. To address these challenges and enable unified representation of dynamic driving scenarios using Gaussian primitives, this study proposes a novel hybrid approach. Our method utilizes rasterization for rendering image data while employing Gaussian ray-tracing for LiDAR data rendering. Experimental results on public datasets demonstrate that our approach outperforms current state-of-the-art methods. This work presents a unified and efficient solution for realistic simulation of camera and LiDAR data in autonomous driving scenarios using Gaussian primitives, offering significant advancements in both rendering quality and computational efficiency.

Energy-Guided Optimization for Personalized Image Editing with Pretrained Text-to-Image Diffusion Models

Mar 06, 2025

Abstract:The rapid advancement of pretrained text-driven diffusion models has significantly enriched applications in image generation and editing. However, as the demand for personalized content editing increases, new challenges emerge, especially when dealing with arbitrary objects and complex scenes. Existing methods usually mistake the mask for an object shape prior and therefore struggle to achieve seamless integration. The commonly used inversion noise initialization also hinders identity consistency with the target object. To address these challenges, we propose a novel training-free framework that formulates personalized content editing as the optimization of edited images in the latent space, using diffusion models as the energy function guidance conditioned by reference text-image pairs. A coarse-to-fine strategy is proposed that employs text energy guidance at the early stage to achieve a natural transition toward the target class and uses point-to-point feature-level image energy guidance to perform fine-grained appearance alignment with the target object. Additionally, we introduce the latent space content composition to enhance overall identity consistency with the target. Extensive experiments demonstrate that our method excels in object replacement even with a large domain gap, highlighting its potential for high-quality, personalized image editing.

AIM: Additional Image Guided Generation of Transferable Adversarial Attacks

Jan 02, 2025

Abstract:Transferable adversarial examples highlight the vulnerability of deep neural networks (DNNs) to imperceptible perturbations across various real-world applications. While there have been notable advancements in untargeted transferable attacks, targeted transferable attacks remain a significant challenge. In this work, we focus on generative approaches for targeted transferable attacks. Current generative attacks focus on reducing overfitting to surrogate models and the source data domain, but they often overlook the importance of enhancing transferability through additional semantics. To address this issue, we introduce a novel plug-and-play module into the general generator architecture to enhance adversarial transferability. Specifically, we propose a Semantic Injection Module (SIM) that utilizes the semantics contained in an additional guiding image to improve transferability. The guiding image provides a simple yet effective method to incorporate target semantics from the target class to create targeted and highly transferable attacks. Additionally, we propose new loss formulations that can integrate the semantic injection module more effectively for both targeted and untargeted attacks. We conduct comprehensive experiments under both targeted and untargeted attack settings to demonstrate the efficacy of our proposed approach.

Priority-Aware Model-Distributed Inference at Edge Networks

Dec 16, 2024

Abstract:Distributed inference techniques can be broadly classified into data-distributed and model-distributed schemes. In data-distributed inference (DDI), each worker carries the entire Machine Learning (ML) model but processes only a subset of the data. However, feeding the data to workers results in high communication costs, especially when the data is large. An emerging paradigm is model-distributed inference (MDI), where each worker carries only a subset of ML layers. In MDI, a source device that has data processes a few layers of the ML model and sends the output to a neighboring device, i.e., offloads the rest of the layers. This process ends when all layers are processed in a distributed manner. In this paper, we investigate the design and development of MDI when multiple data sources co-exist. We consider that each data source has a different importance and, hence, a priority. We formulate and solve a priority-aware model allocation optimization problem. Based on the structure of the optimal solution, we design a practical Priority-Aware Model-Distributed Inference (PA-MDI) algorithm that determines model allocation and distribution over devices by taking into account the priorities of different sources. Experiments were conducted on a real-life testbed of NVIDIA Jetson Xavier and Nano edge devices as well as in the Colosseum testbed with ResNet-50, ResNet-56, and GPT-2 models. The experimental results show that PA-MDI performs priority-aware model allocation successfully while reducing the inference time as compared to baselines.
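
The basic MDI idea described in this abstract, where each worker holds only a contiguous subset of layers and forwards its output to the next device, can be illustrated with a minimal Python sketch. The toy scalar layers, the `partition_layers` and `run_pipeline` helpers, and the even split across workers are assumptions made purely for illustration; this is not the PA-MDI allocation algorithm, whose details the abstract does not give.

```python
from typing import Callable, List

Layer = Callable[[float], float]


def partition_layers(layers: List[Layer], num_workers: int) -> List[List[Layer]]:
    """Split layers into contiguous chunks, one per worker.

    An even split is a hypothetical placeholder policy, not PA-MDI.
    """
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    base, extra = divmod(len(layers), num_workers)
    chunks: List[List[Layer]] = []
    start = 0
    for w in range(num_workers):
        size = base + (1 if w < extra else 0)
        chunks.append(layers[start:start + size])
        start += size
    return chunks


def run_pipeline(chunks: List[List[Layer]], x: float) -> float:
    """Each worker applies its own layers, then hands the activation onward."""
    for worker_layers in chunks:
        for layer in worker_layers:
            x = layer(x)
    return x


# Toy "model": five scalar functions standing in for neural-network layers.
layers: List[Layer] = [
    lambda v: v + 1.0,
    lambda v: v * 2.0,
    lambda v: v - 3.0,
    lambda v: v * v,
    lambda v: v + 0.5,
]

chunks = partition_layers(layers, num_workers=3)
distributed_out = run_pipeline(chunks, 2.0)

# Reference result: all layers executed on a single device.
single_out = 2.0
for layer in layers:
    single_out = layer(single_out)
```

In this sketch the distributed and single-device results coincide because partitioning changes only where each layer runs, not what is computed; a priority-aware scheme would additionally decide which source's layers each device serves first.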

A Teleoperation System with Impedance Control and Disturbance Observer for Robot-Assisted Rehabilitation

Dec 04, 2024

Abstract:Physical movement therapy is a crucial method of rehabilitation aimed at reinstating mobility among patients facing motor dysfunction due to neurological conditions or accidents. Such therapy is typically patient-specific, repetitive, and labor-intensive. The conventional method, where therapists collaborate with patients to conduct repetitive physical training, proves strenuous due to these characteristics. The concept of robot-assisted rehabilitation, assisting therapists with robotic systems, has gained substantial popularity. However, building such systems presents challenges, such as diverse task demands, uncertainties in dynamic models, and safety issues. To address these concerns, in this paper, we propose a bilateral teleoperation system for rehabilitation. The control scheme of the system is designed as an integrated framework of impedance control and disturbance observer, where the former can ensure compliant human-robot interaction without the need for force sensors while the latter can compensate for dynamic uncertainties when only a roughly identified dynamic model is available. Furthermore, the scheme allows free switching between tracking tasks and physical human-robot interaction (pHRI). The presented system can execute a wide array of pre-defined trajectories with varying patterns, adaptable to diverse needs. Moreover, the system can capture therapists' demonstrations, replaying them as many times as necessary. The effectiveness of the teleoperation system is experimentally evaluated and demonstrated.

HeteroSample: Meta-path Guided Sampling for Heterogeneous Graph Representation Learning

Nov 11, 2024

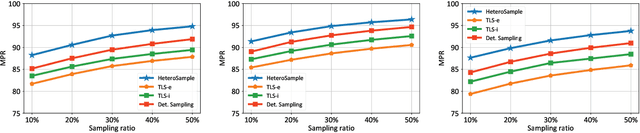

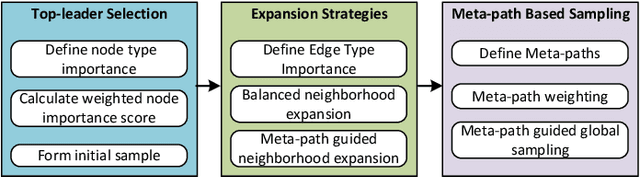

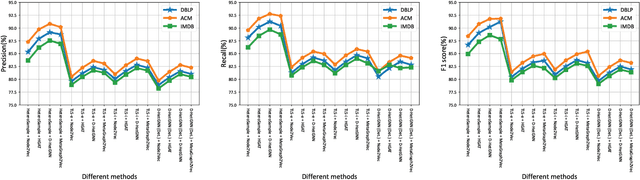

Abstract:The rapid expansion of the Internet of Things (IoT) has resulted in vast, heterogeneous graphs that capture complex interactions among devices, sensors, and systems. Efficient analysis of these graphs is critical for deriving insights in IoT scenarios such as smart cities, industrial IoT, and intelligent transportation systems. However, the scale and diversity of IoT-generated data present significant challenges, and existing methods often struggle with preserving the structural integrity and semantic richness of these complex graphs. Many current approaches fail to maintain the balance between computational efficiency and the quality of the insights generated, leading to potential loss of critical information necessary for accurate decision-making in IoT applications. We introduce HeteroSample, a novel sampling method designed to address these challenges by preserving the structural integrity, node and edge type distributions, and semantic patterns of IoT-related graphs. HeteroSample works by incorporating the novel top-leader selection, balanced neighborhood expansion, and meta-path guided sampling strategies. The key idea is to leverage the inherent heterogeneous structure and semantic relationships encoded by meta-paths to guide the sampling process. This approach ensures that the resulting subgraphs are representative of the original data while significantly reducing computational overhead. Extensive experiments demonstrate that HeteroSample outperforms state-of-the-art methods, achieving up to 15% higher F1 scores in tasks such as link prediction and node classification, while reducing runtime by 20%. These advantages make HeteroSample a transformative tool for scalable and accurate IoT applications, enabling more effective and efficient analysis of complex IoT systems, ultimately driving advancements in smart cities, industrial IoT, and beyond.

CamI2V: Camera-Controlled Image-to-Video Diffusion Model

Oct 21, 2024

Abstract:Recently, camera pose, as a user-friendly and physics-related condition, has been introduced into text-to-video diffusion models for camera control. However, existing methods simply inject camera conditions through a side input. These approaches neglect the inherent physical knowledge of camera pose, resulting in imprecise camera control, inconsistencies, and poor interpretability. In this paper, we emphasize the necessity of integrating explicit physical constraints into model design. Epipolar attention is proposed for modeling all cross-frame relationships from a novel perspective of noised condition. This ensures that features are aggregated from corresponding epipolar lines in all noised frames, overcoming the limitations of current attention mechanisms in tracking displaced features across frames, especially when features move significantly with the camera and become obscured by noise. Additionally, we introduce register tokens to handle cases without intersections between frames, commonly caused by rapid camera movements, dynamic objects, or occlusions. To support image-to-video, we propose multiple guidance scales to allow precise control over image, text, and camera conditions, respectively. Furthermore, we establish a more robust and reproducible evaluation pipeline to address the inaccuracy and instability of existing camera control measurements. We achieve a 25.5% improvement in camera controllability on RealEstate10K while maintaining strong generalization to out-of-domain images. Only 24GB and 12GB are required for training and inference, respectively. We plan to release checkpoints, along with training and evaluation code. Dynamic videos are best viewed at https://zgctroy.github.io/CamI2V.

Task-Oriented Communication for Vehicle-to-Infrastructure Cooperative Perception

Jul 30, 2024

Abstract:Vehicle-to-infrastructure (V2I) cooperative perception plays a crucial role in autonomous driving scenarios. Despite its potential to improve perception accuracy and robustness, the large amount of raw sensor data inevitably results in high communication overhead. To mitigate this issue, we propose TOCOM-V2I, a task-oriented communication framework for V2I cooperative perception, which reduces bandwidth consumption by transmitting only task-relevant information, instead of the raw data stream, for perceiving the surrounding environment. Our contributions are threefold. First, we propose a spatial-aware feature selection module to filter out irrelevant information based on spatial relationships and perceptual prior. Second, we introduce a hierarchical entropy model to exploit redundancy within the features for efficient compression and transmission. Finally, we utilize a scaled dot-product attention architecture to fuse vehicle-side and infrastructure-side features to improve perception performance. Experimental results demonstrate the effectiveness of TOCOM-V2I.
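
The fusion step mentioned in this abstract relies on scaled dot-product attention, which can be illustrated with a small, dependency-free Python sketch. The assignment of vehicle-side features as queries and infrastructure-side features as keys and values, the toy 2-D feature vectors, and the function names are all assumptions for illustration; the abstract does not describe how TOCOM-V2I wires its attention module.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs: List[Vector] = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        fused = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(fused)
    return outputs


# Hypothetical toy features: 2 vehicle-side tokens, 3 infrastructure-side tokens.
vehicle_features = [[1.0, 0.0], [0.0, 1.0]]
infrastructure_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Assumed wiring: vehicle tokens query the infrastructure tokens.
fused = scaled_dot_product_attention(
    vehicle_features, infrastructure_features, infrastructure_features
)
```

Each fused vector is a weighted average of the infrastructure-side tokens, with larger weights on tokens whose keys align with the querying vehicle-side token.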
