Zikang Yuan

SR-LIO++: Efficient LiDAR-Inertial Odometry and Quantized Mapping with Sweep Reconstruction

Mar 29, 2025

Abstract:Addressing the inherent low acquisition frequency limitation of 3D LiDAR to achieve high-frequency output has become a critical research focus in the LiDAR-Inertial Odometry (LIO) domain. To ensure real-time performance, frequency-enhanced LIO systems must process each sweep within a significantly reduced timeframe, which presents substantial challenges for deployment on low-computational-power platforms. To address these limitations, we introduce SR-LIO++, an innovative LIO system capable of achieving doubled output frequency relative to input frequency on resource-constrained hardware platforms, including the Raspberry Pi 4B. Our system employs a sweep reconstruction methodology to enhance LiDAR sweep frequency, generating high-frequency reconstructed sweeps. Building upon this foundation, we propose a caching mechanism for intermediate results (i.e., surface parameters) of the most recent segments, effectively minimizing redundant processing of common segments in adjacent reconstructed sweeps. This method decouples processing time from the traditionally linear dependence on reconstructed sweep frequency. Furthermore, we present a quantized map point management scheme based on index table mapping, significantly reducing memory usage by converting global 3D point storage from 64-bit double precision to 8-bit char representation. This method also converts the computationally intensive Euclidean distance calculations in nearest neighbor searches from 64-bit double precision to 16-bit short and 32-bit integer formats, significantly reducing both memory and computational cost. Extensive experimental evaluations across three distinct computing platforms and four public datasets demonstrate that SR-LIO++ maintains state-of-the-art accuracy while substantially enhancing efficiency. Notably, our system successfully achieves 20Hz state output on Raspberry Pi 4B hardware.
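The quantization idea in this abstract can be illustrated with a minimal sketch. It is not the paper's index table mapping: the voxel size, the 256 levels per axis, and the function names `quantize`, `dequantize`, and `int_sq_dist` are all assumptions made for illustration. Each coordinate is split into an integer voxel index plus an 8-bit offset inside the voxel, so distances can be compared using integer arithmetic only.

```python
import math

VOXEL_SIZE = 1.0  # metres per voxel edge (assumed value, not from the paper)
LEVELS = 256      # 8-bit resolution per axis inside one voxel


def quantize(point):
    """Split a 3D point into an integer voxel index and three 8-bit offsets."""
    voxel, offsets = [], []
    for c in point:
        scaled = c / VOXEL_SIZE
        v = math.floor(scaled)
        frac = scaled - v  # position inside the voxel, in [0, 1)
        offsets.append(min(int(frac * LEVELS), LEVELS - 1))
        voxel.append(v)
    return tuple(voxel), bytes(offsets)


def dequantize(voxel, offsets):
    """Recover the centre of the quantization cell as floating-point metres."""
    return tuple((v + (q + 0.5) / LEVELS) * VOXEL_SIZE
                 for v, q in zip(voxel, offsets))


def int_sq_dist(voxel_a, off_a, voxel_b, off_b):
    """Squared distance in quantization units, using integers only."""
    total = 0
    for va, qa, vb, qb in zip(voxel_a, off_a, voxel_b, off_b):
        d = (va - vb) * LEVELS + (qa - qb)
        total += d * d
    return total
```

Python integers are arbitrary precision, so this sketch only shows the arithmetic; a fixed-width implementation would have to bound the voxel difference so that the per-axis difference fits in 16 bits and the sum of squares fits in 32 bits.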

Uni-Gaussians: Unifying Camera and Lidar Simulation with Gaussians for Dynamic Driving Scenarios

Mar 11, 2025

Abstract:Ensuring the safety of autonomous vehicles necessitates comprehensive simulation of multi-sensor data, encompassing inputs from both cameras and LiDAR sensors, across various dynamic driving scenarios. Neural rendering techniques, which utilize collected raw sensor data to simulate these dynamic environments, have emerged as a leading methodology. While NeRF-based approaches can uniformly represent scenes for rendering data from both camera and LiDAR, they are hindered by slow rendering speeds due to dense sampling. Conversely, Gaussian Splatting-based methods employ Gaussian primitives for scene representation and achieve rapid rendering through rasterization. However, these rasterization-based techniques struggle to accurately model non-linear optical sensors. This limitation restricts their applicability to sensors beyond pinhole cameras. To address these challenges and enable unified representation of dynamic driving scenarios using Gaussian primitives, this study proposes a novel hybrid approach. Our method utilizes rasterization for rendering image data while employing Gaussian ray-tracing for LiDAR data rendering. Experimental results on public datasets demonstrate that our approach outperforms current state-of-the-art methods. This work presents a unified and efficient solution for realistic simulation of camera and LiDAR data in autonomous driving scenarios using Gaussian primitives, offering significant advancements in both rendering quality and computational efficiency.

Direct Sparse Odometry with Continuous 3D Gaussian Maps for Indoor Environments

Mar 05, 2025

Abstract:Accurate localization is essential for robotics and augmented reality applications such as autonomous navigation. Vision-based methods combining prior maps aim to integrate LiDAR-level accuracy with camera cost efficiency for robust pose estimation. Existing approaches, however, often depend on unreliable interpolation procedures when associating discrete point cloud maps with dense image pixels, which inevitably introduces depth errors and degrades pose estimation accuracy. We propose a monocular visual odometry framework utilizing a continuous 3D Gaussian map, which directly assigns geometrically consistent depth values to all extracted high-gradient points without interpolation. Evaluations on two public datasets demonstrate superior tracking accuracy compared to existing methods. We have released the source code of this work for the development of the community.

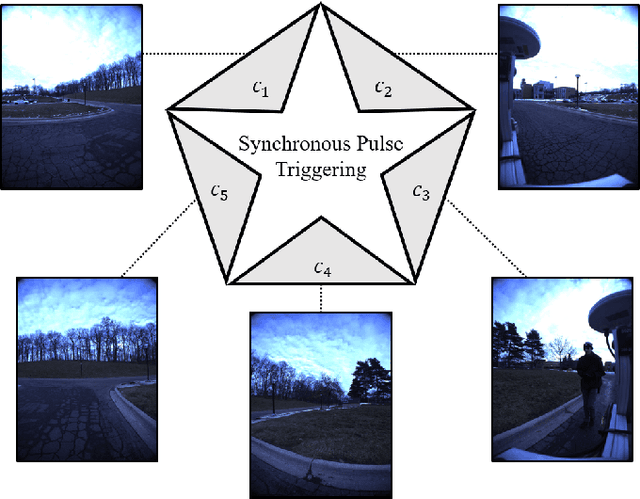

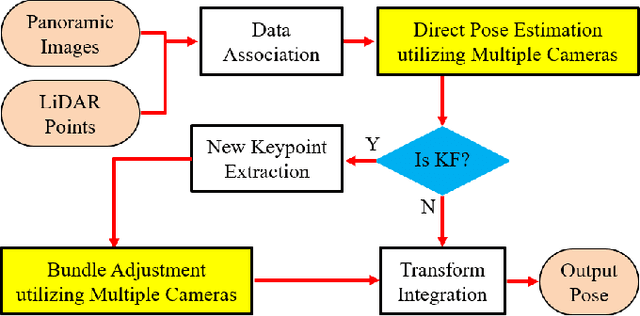

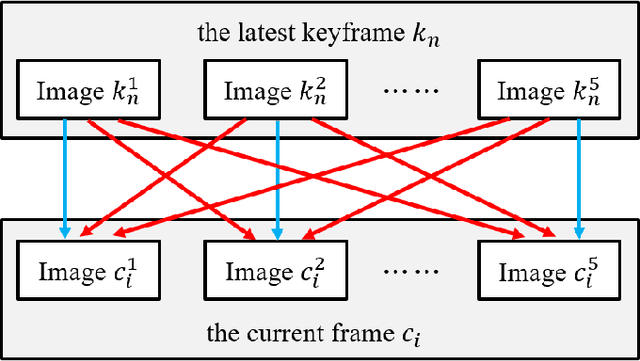

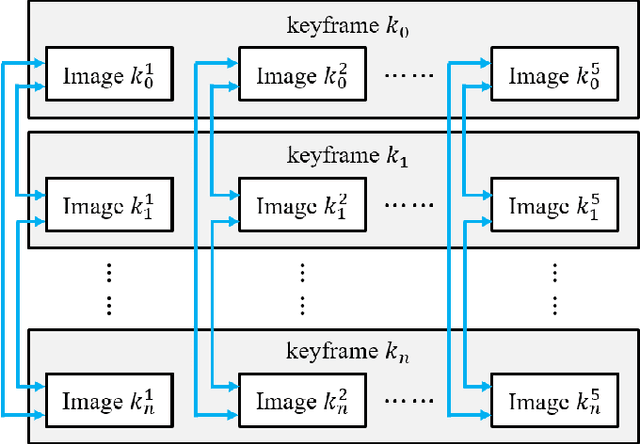

Panoramic Direct LiDAR-assisted Visual Odometry

Sep 14, 2024

Abstract:Enhancing visual odometry by exploiting sparse depth measurements from LiDAR is a promising solution for improving the tracking accuracy of an odometry system. Most existing works utilize a monocular pinhole camera, yet could suffer from poor robustness due to less available information from a limited field-of-view (FOV). This paper proposes a panoramic direct LiDAR-assisted visual odometry, which fully associates the 360-degree FOV LiDAR points with the 360-degree FOV panoramic image data. 360-degree FOV panoramic images can provide more available information, which can compensate for inaccurate pose estimation caused by insufficient texture or motion blur from a single view. In addition to constraints between a specific view at different times, constraints can also be built between different views at the same moment. Experimental results on public datasets demonstrate the benefit of the large FOV of our panoramic direct LiDAR-assisted visual odometry over state-of-the-art approaches.

* 6 pages, 6 figures

A Fast Dynamic Point Detection Method for LiDAR-Inertial Odometry in Driving Scenarios

Jul 04, 2024

Abstract:Existing 3D point-based dynamic point detection and removal methods have a significant time overhead, making them difficult to adapt to LiDAR-inertial odometry systems. This paper proposes a label consistency based dynamic point detection and removal method for handling moving vehicles and pedestrians in autonomous driving scenarios, and embeds the proposed dynamic point detection and removal method into a self-designed LiDAR-inertial odometry system. Experimental results on three public datasets demonstrate that our method can accomplish dynamic point detection and removal with extremely low computational overhead (i.e., 1 to 9 ms) in LIO systems, while achieving preservation and rejection rates comparable to state-of-the-art methods and significantly enhancing the accuracy of pose estimation. We have released the source code of this work for the development of the community.

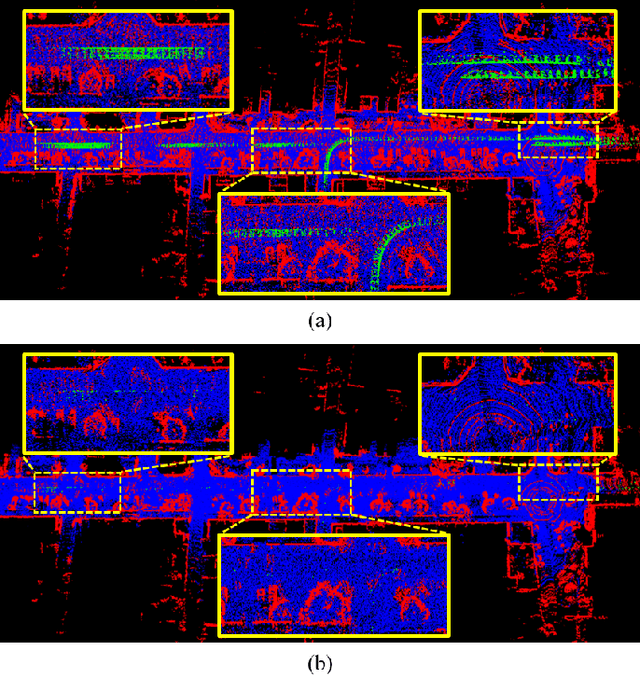

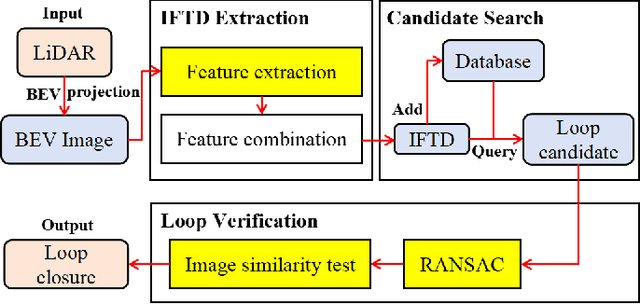

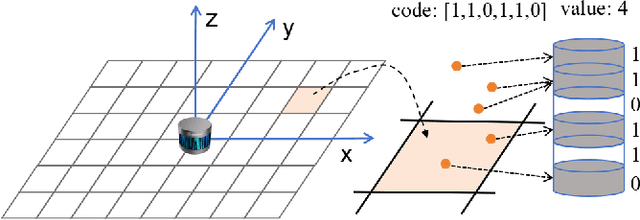

IFTD: Image Feature Triangle Descriptor for Loop Detection in Driving Scenes

Jun 12, 2024

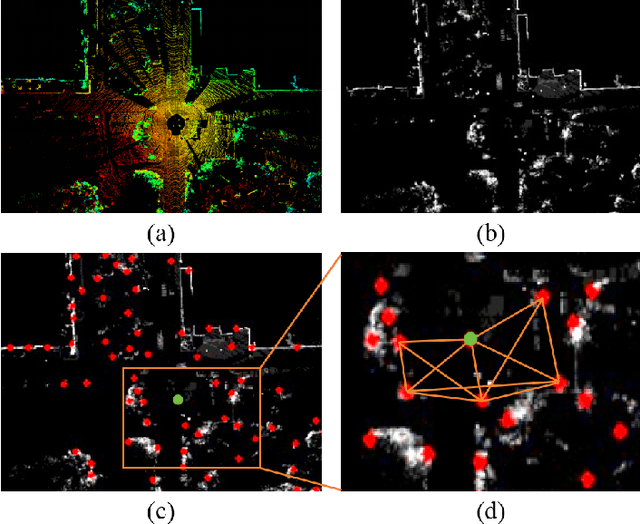

Abstract:In this work, we propose a fast and robust Image Feature Triangle Descriptor (IFTD) based on the STD method, aimed at improving the efficiency and accuracy of place recognition in driving scenarios. We extract keypoints from the BEV projection image of a point cloud and construct these keypoints into triangle descriptors. By matching these feature triangles, we achieve precise place recognition and calculate the 4-DOF pose estimation between two keyframes. Furthermore, we employ image similarity inspection to perform the final place recognition. Experimental results on three public datasets demonstrate that our IFTD can achieve greater robustness and accuracy than state-of-the-art methods with low computational overhead.
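To illustrate why triangles built from keypoints are useful for matching, here is a minimal sketch that describes each triangle by its sorted side lengths, which do not change under in-plane rotation or translation. This is an assumed, simplified descriptor for illustration only, not the IFTD or STD descriptor itself; the function names `triangle_descriptor` and `descriptors` are hypothetical.

```python
import math
from itertools import combinations


def triangle_descriptor(p, q, r):
    """Sorted side lengths of the triangle formed by three 2D keypoints."""
    sides = (math.dist(p, q), math.dist(q, r), math.dist(r, p))
    return tuple(sorted(sides))


def descriptors(keypoints):
    """Descriptors for every triple of keypoints (brute force, small inputs only)."""
    return [triangle_descriptor(*tri) for tri in combinations(keypoints, 3)]


# The same triangle seen from two poses yields the same descriptor.
original = triangle_descriptor((0, 0), (3, 0), (0, 4))
moved = triangle_descriptor((10, 10), (10, 13), (6, 10))  # rotated 90 degrees, shifted
```

Enumerating every triple grows cubically with the number of keypoints, so a practical system would restrict triangles to nearby keypoints and index the descriptors for fast lookup.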

SR-LIVO: LiDAR-Inertial-Visual Odometry and Mapping with Sweep Reconstruction

Dec 28, 2023

Abstract:Existing LiDAR-inertial-visual odometry and mapping (LIV-SLAM) systems mainly utilize the LiDAR-inertial odometry (LIO) module for structure reconstruction and the visual-inertial odometry (VIO) module for color rendering. However, the accuracy of VIO is often compromised by photometric changes, weak textures and motion blur, unlike the more robust LIO. This paper introduces SR-LIVO, an advanced and novel LIV-SLAM system employing sweep reconstruction to align reconstructed sweeps with image timestamps. This allows the LIO module to accurately determine states at all imaging moments, enhancing pose accuracy and processing efficiency. Experimental results on two public datasets demonstrate that: 1) our SR-LIVO outperforms existing state-of-the-art LIV-SLAM systems in both pose accuracy and time efficiency; 2) our LIO-based pose estimation proves more accurate than VIO-based ones in several mainstream LIV-SLAM systems (including ours). We have released our source code to contribute to the community development in this field.

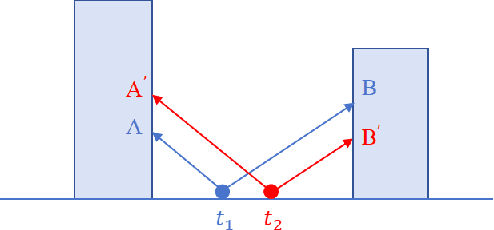

Semi-Elastic LiDAR-Inertial Odometry

Jul 15, 2023

Abstract:Existing LiDAR-inertial state estimation methods treat the state at the beginning of the current sweep as equal to the state at the end of the previous sweep. However, if the previous state is inaccurate, the current state cannot satisfy the constraints from LiDAR and IMU consistently, which in turn yields local inconsistency in the estimated states (e.g., zigzag trajectory or high-frequency oscillating velocity). To address this issue, this paper proposes a semi-elastic LiDAR-inertial state estimation method. Our method provides the state sufficient flexibility to be optimized to the correct value, thus ensuring improved accuracy, consistency, and robustness of state estimation. We integrate the proposed method into an optimization-based LiDAR-inertial odometry (LIO) framework. Experimental results on four public datasets demonstrate that our method outperforms existing state-of-the-art LiDAR-inertial odometry systems in terms of accuracy. In addition, our semi-elastic LiDAR-inertial state estimation method can better enhance the accuracy, consistency, and robustness. We have released the source code of this work to contribute to advancements in LiDAR-inertial state estimation and benefit the broader research community.

LIW-OAM: Lidar-Inertial-Wheel Odometry and Mapping

Feb 28, 2023

Abstract:LiDAR-inertial odometry and mapping (LI-OAM), which fuses complementary information of a LiDAR and an Inertial Measurement Unit (IMU), is an attractive solution for pose estimation and mapping. In LI-OAM, both pose and velocity are regarded as state variables that need to be solved. However, the widely-used Iterative Closest Point (ICP) algorithm can only provide constraints for pose, while the velocity can only be constrained by IMU pre-integration. As a result, the velocity estimates tend to be updated in accordance with the pose results. In this paper, we propose LIW-OAM, an accurate and robust LiDAR-inertial-wheel odometry and mapping system, which fuses the measurements from LiDAR, IMU and wheel encoder in a bundle adjustment (BA) based optimization framework. The involvement of a wheel encoder could provide velocity measurement as an important observation, which assists LI-OAM to provide a more accurate state prediction. In addition, constraining the velocity variable by the observation from the wheel encoder in optimization can further improve the accuracy of state estimation. Experimental results on two public datasets demonstrate that our system outperforms all state-of-the-art LI-OAM systems in terms of smaller absolute trajectory error (ATE), and that embedding a wheel encoder can greatly improve the performance of LI-OAM based on the BA framework.

SR-LIO: LiDAR-Inertial Odometry with Sweep Reconstruction

Oct 19, 2022

Abstract:This paper proposes a novel LiDAR-inertial odometry (LIO), named SR-LIO, based on an improved bundle adjustment (BA) framework. The core of our SR-LIO is a novel sweep reconstruction method, which segments and reconstructs raw input sweeps from spinning LiDAR to obtain reconstructed sweeps with higher frequency. Such a method can effectively reduce the time interval for each IMU pre-integration, reducing the IMU pre-integration error and enabling the usage of BA based LIO optimization. To ensure that all the states during the period of a reconstructed sweep can be equally optimized, we further propose multi-segment joint LIO optimization, which allows the state of each sweep segment to be constrained from both LiDAR and IMU. Experimental results on three public datasets demonstrate that our SR-LIO outperforms all existing state-of-the-art methods on accuracy, and that reducing the IMU pre-integration error via the proposed sweep reconstruction is very important for the success of a BA based LIO framework. The source code of SR-LIO is publicly available for the development of the community.
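The segment-and-recombine idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the SR-LIO implementation: points are assumed to be `(timestamp, x, y, z)` tuples, each raw sweep is split into equal time segments, and every run of `n_segments` consecutive segments forms one reconstructed sweep. The names `split_sweep` and `reconstruct` are hypothetical.

```python
def split_sweep(points, t_start, t_end, n_segments=2):
    """Bucket the points of one raw sweep into equal-duration time segments."""
    duration = t_end - t_start
    segments = [[] for _ in range(n_segments)]
    for p in points:
        k = int((p[0] - t_start) / duration * n_segments)
        k = min(max(k, 0), n_segments - 1)  # clamp points on the sweep boundary
        segments[k].append(p)
    return segments


def reconstruct(sweeps, n_segments=2):
    """Slide a window of n_segments consecutive segments over the raw sweeps.

    sweeps: list of (t_start, t_end, points) in time order.
    Returns one merged point list per window position.
    """
    all_segments = []
    for t_start, t_end, points in sweeps:
        all_segments.extend(split_sweep(points, t_start, t_end, n_segments))
    reconstructed = []
    for i in range(len(all_segments) - n_segments + 1):
        merged = []
        for seg in all_segments[i:i + n_segments]:
            merged.extend(seg)
        reconstructed.append(merged)
    return reconstructed
```

With `n_segments=2`, each reconstructed sweep covers the same duration as a raw sweep, but a new one becomes available every half sweep period, so a 10 Hz input yields roughly 20 Hz output. Adjacent reconstructed sweeps share one segment, which is the overlap that a cache of per-segment results could exploit.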
